CuNi

Member

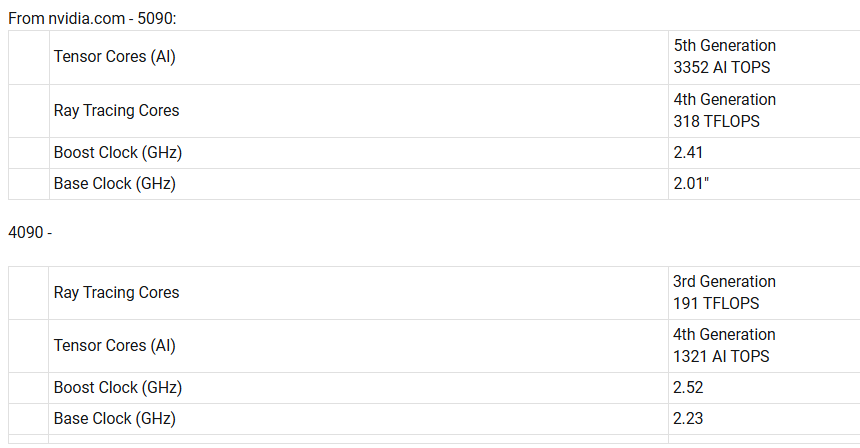

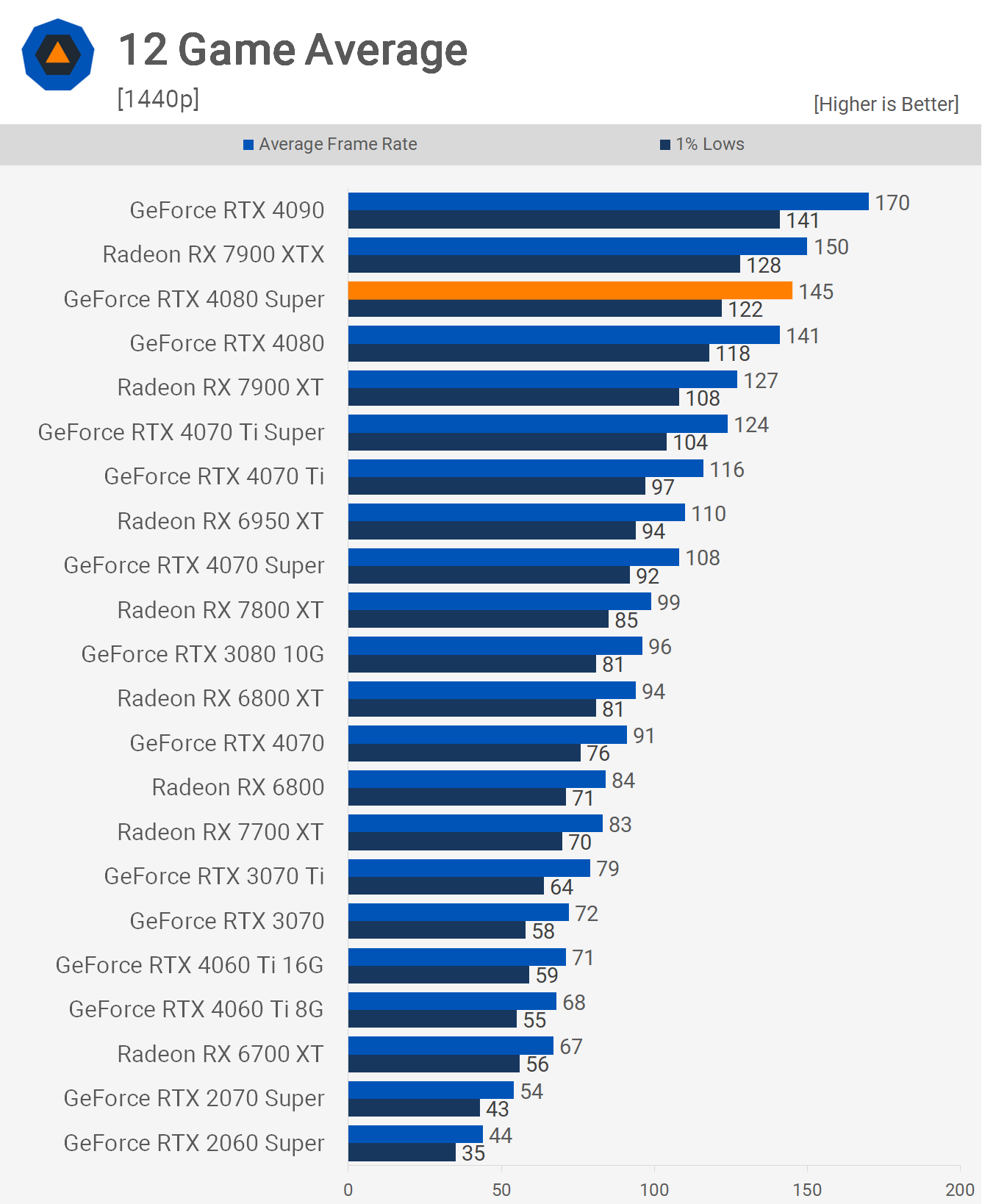

Ngl, this is terrible. It's looking like roughly 30% real gains across the board. This frame-gen tomfoolery is there to obfuscate the numbers. Considering the notes suggest the games are rendered at 4K max settings, it's pretty bad. Performance gains look to be worse than Ada's, maybe barring the low end. Wild.

This may be hyperbolic on my part, but any time a company's stock appreciates this much and employees can cash out their RSUs, the company starts a slow decline in innovation. I suspected that it might happen with Nvidia, and this lacklustre release only confirms it for me. These guys are about to enter a coasting phase.

I feel like raster gains are going to decline gen over gen, but ray tracing and path tracing, followed by AI features, are where future uplifts will come from.