Not like this.

If the 70Ti is AD104-based and on the same bus as the 4070, expect it to sell horribly.

It needs to be on AD103, or else that extra 100 dollars won't do much to sway people towards it, considering you would be within spitting distance of an FE 4080.

I think we are gonna have to.

My only choices going into this generation were:

320-bit 20GB card - 4080Ti

Skip the generation

RTX 4090

In that order.

So if that 4080Ti has any chance, any chance at all, of coming out before August 2023, I'm holding out.

It's the perfect upgrade IMO for 3080-class cards.

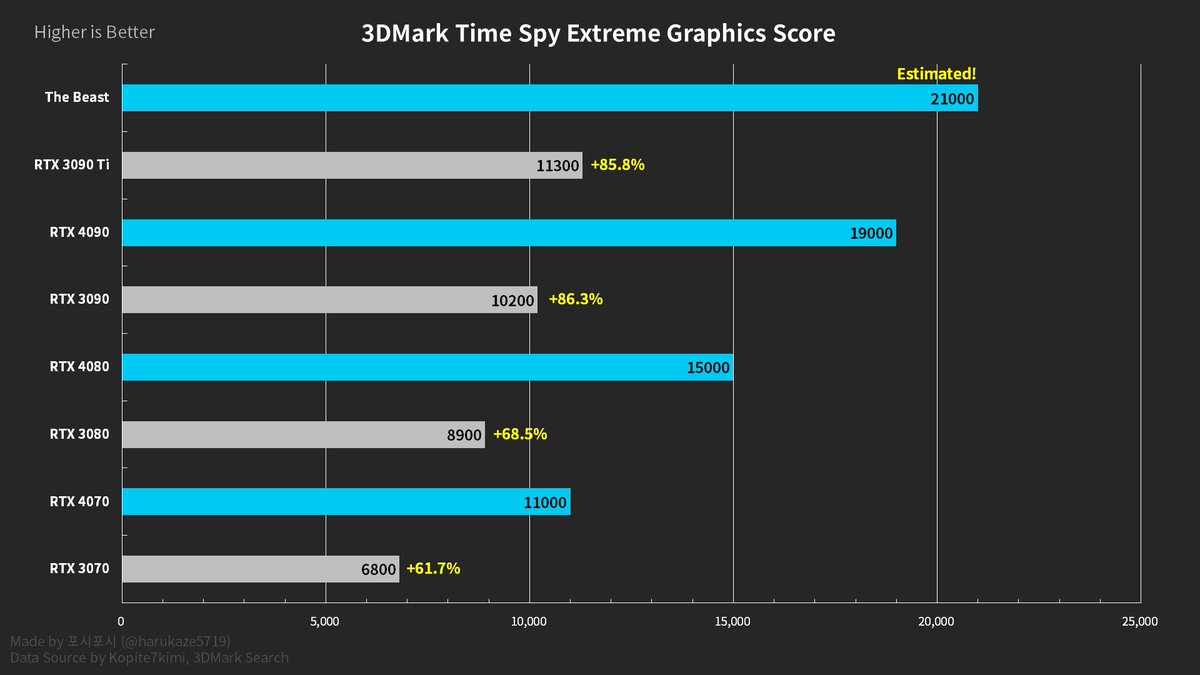

The 4090 is likely gonna cost an arm and a leg, but performance and FOMO had a chance of forcing my hand. Seeing that Nvidia is at least thinking about an 80Ti takes the 4090 off the table.