Don't you think it's kind of weird that, with Microsoft being so transparent, they didn't go into much depth on their I/O system?

Same reason why Cerny avoided talking about the GPU a lot while he didn't hesitate to give us details on the PS5 I/O.

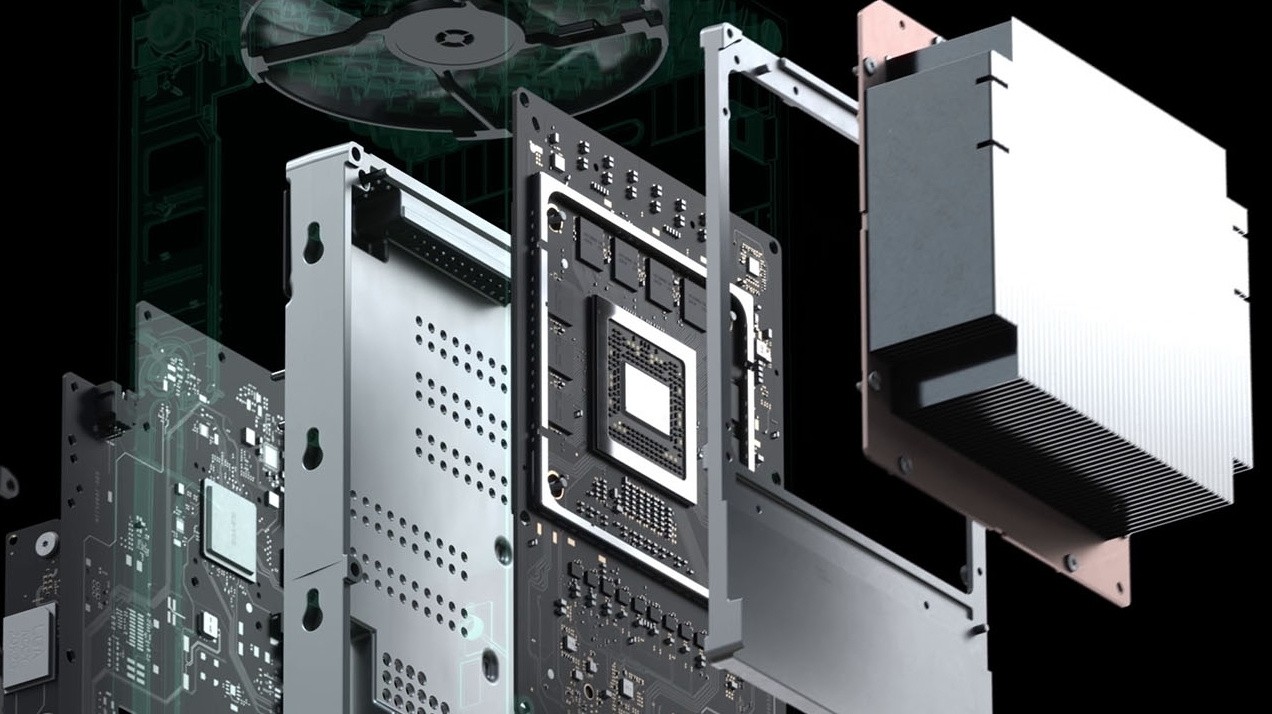

Then there's this.

Because MS are doing a system architecture presentation in August where they'll probably go in-depth on Velocity Architecture, if they haven't done so already between now and the July event. Also FWIW they've actually talked a lot about the I/O system, arguably as much as Sony has. However, much of MS's approach is software-driven and the software implementation is being tuned probably even right now, so it would be premature to go in-depth on those aspects until they are finalized.

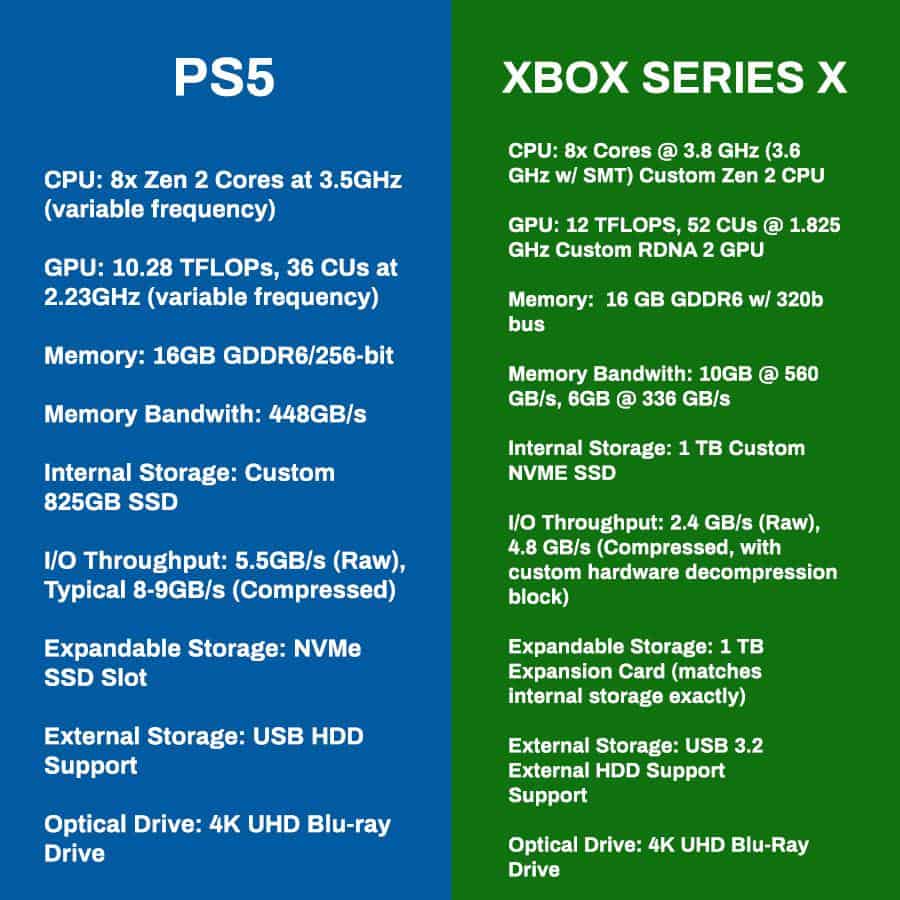

That graph also has a few things wrong; I'm nitpicking here, but the TF numbers should be 10.275 TF and 12.147 TF respectively. Also, if they're using "bit" for describing the buses, why not just consistently use bit in both columns? Just really small errors that don't mean too much at the end of the day, but I like numbers and nomenclature to be clean and consistent with this kind of stuff, personally.
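For anyone who wants to check those TF figures, here's a rough sketch of the usual paper-spec calculation. The CU counts and clocks are the publicly announced ones (not something stated in this thread), and the 64 shaders per CU and 2 FLOPs per clock are the standard assumptions for this kind of math, so treat it as a back-of-the-envelope check rather than anything official.

```python
# Peak FP32 throughput from paper specs (back-of-the-envelope only).
SHADERS_PER_CU = 64   # assumption: usual figure for AMD RDNA-family CUs
FLOPS_PER_CLOCK = 2   # assumption: one fused multiply-add counts as 2 FLOPs


def peak_tflops(compute_units: int, clock_ghz: float) -> float:
    """Return theoretical peak TFLOPS for a given CU count and clock."""
    gflops = compute_units * SHADERS_PER_CU * FLOPS_PER_CLOCK * clock_ghz
    return gflops / 1000.0


# Publicly announced figures, not taken from this thread.
ps5_tf = peak_tflops(36, 2.23)    # PS5: 36 CUs at up to 2.23 GHz
xsx_tf = peak_tflops(52, 1.825)   # XSX: 52 CUs at 1.825 GHz

print(f"PS5: {ps5_tf:.5f} TF")
print(f"XSX: {xsx_tf:.5f} TF")
```

That works out to roughly 10.2758 TF and 12.1472 TF, so 10.275 is the PS5 figure truncated to three decimals rather than rounded.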

In summary, there's a theory that because of BCPACK, SFS and other features in the Xbox I/O system, it will be superior to Sony's I/O system.

I'm still not seeing how that's possible since it goes against the spec sheets.

But I guess we will have to wait until June to find out if the rumors are true.

Wait, who's been suggesting this? It reads like a bad interpretation. The prevailing idea I've seen is that those things will help narrow the delta between the two I/O systems, which is perfectly plausible considering there are multiple ways of addressing the bottlenecks Cerny mentioned. Sony has taken their approach, and MS has taken theirs.

Now, if MS's hardware on the I/O stack were a little bit beefier, then I suspect the software-optimized implementations would probably make the delta imperceptible or perhaps even eliminate it. To my knowledge, that isn't going to happen, but I can see a scenario where the delta presented by the paper specs ends up being a smaller real-world delta in terms of actual performance when all things are considered.

People are just trying to guess where that delta actually shrinks to. I'd think that, for the benefit of multi-platform games, the smaller the delta the better. Something around 50% - 75% still favoring PS5's approach seems likely. But we'll have a better picture of where it actually falls once they give a deeper system analysis, most likely in August, though some parts could be discussed before then.
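To put the paper-spec delta itself into numbers, here's a small sketch using the publicly quoted SSD throughput figures (these come from the two companies' announcements, not from this thread, and the helper name is just made up for illustration). It only shows what the spec sheets imply; where the real-world delta lands is still guesswork.

```python
# Paper-spec SSD throughput in GB/s, as publicly announced (not from this thread).
PS5_RAW = 5.5
PS5_COMPRESSED_TYPICAL = (8.0, 9.0)   # quoted as a typical range
XSX_RAW = 2.4
XSX_COMPRESSED = 4.8


def advantage_pct(faster: float, slower: float) -> float:
    """Hypothetical helper: how much faster `faster` is than `slower`, in percent."""
    return (faster / slower - 1.0) * 100.0


raw_delta = advantage_pct(PS5_RAW, XSX_RAW)
compressed_low = advantage_pct(PS5_COMPRESSED_TYPICAL[0], XSX_COMPRESSED)
compressed_high = advantage_pct(PS5_COMPRESSED_TYPICAL[1], XSX_COMPRESSED)

print(f"Raw: PS5 ahead by about {raw_delta:.0f}%")
print(f"Compressed: PS5 ahead by about {compressed_low:.0f}% to {compressed_high:.0f}%")
```

On paper that is a bit over 2x raw and somewhat less once each side's quoted compression is counted, so a real-world gap in the 50% - 75% range would already mean the delta has narrowed.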

My understanding is, just like with the GPUs, it's perfectly fine to assume that the paper specs for the SSD I/Os are not truly indicative of actual performance. As in, there are some areas with the GPUs (as far as we know) where Sony's made some decisions to help them punch a bit above their weight, such as the clocks, which help with things like pixel fillrate and cache speed (NOT cache bandwidth, that's something different).

All the same, we could have scenarios where XSX's SSD I/O performs better than Sony's in select aspects, and punches above its weight, but Sony's still holds the overall advantage since the hardware is beefier. At the very least, MS seem confident their approach is competitive enough, so I would think there's more to their implementation than what their SSD I/O paper specs suggest.

But as you said, we'll have to wait until more official information arrives.