Sutton Dagger

Member

I'm planning on doing 4K at 30fps with a GTX 970, hopefully high settings are possible.

Is this "I must be able to ultra everything or it's bad optimization" a new trend? I remember when ultra was supposed to be extreme in order to allow the game to look better with future hardware.

It's not inefficient when you consider the fact it also improves texture quality.

Here's a comparison shot I made for FC4. Textures are drastically improved in 2880p vs. 1440p.

http://screenshotcomparison.com/comparison/105760

Why are some people so obsessed with 4K?

Wouldn't you rather run the game at Ultra @1080P?

IMO settings trump resolution any day.

What Durante is saying is that downsampling is really inefficient compared to proper supersampling.

What about people who have a 4K display? Doesn't playing at less than native resolution of your display usually create ugly upscaling artifacts?

I don't know when I should start counting; my PC is not pre-built. It's a machine I have continually upgraded ever since 2010. I couldn't tell you how much I spent because I would need to remember every part I bought and sold.

What I do know however, is that it's well worth it and I'll keep doing it. You see, cost-effectiveness is not arbitrarily defined. No doubt I must have spent more than a PS4 or Xbox One but when I'm looking at the result before my very eyes I really can't complain.

Selling my PS4/XBO was the best decision I could have ever made.

SGSSAA also improves texture quality, since it also fully samples everything. What's inefficient about downsampling is the sample placement.
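The sample-placement point can be illustrated with a small sketch. It assumes 4 samples per pixel and compares an ordered 2x2 grid (roughly what 2x2 downsampling gives each output pixel) with a rotated-grid pattern of the kind sparse-grid supersampling relies on. The offsets and the helper name `distinct_axis_positions` are illustrative assumptions, not values read from any driver.

```python
# Sample offsets inside one pixel, in [0, 1) coordinates (illustrative values).
ORDERED_GRID = [(0.25, 0.25), (0.75, 0.25), (0.25, 0.75), (0.75, 0.75)]
ROTATED_GRID = [(0.375, 0.125), (0.875, 0.375), (0.125, 0.625), (0.625, 0.875)]


def distinct_axis_positions(samples):
    """Count how many different x and y positions the samples cover."""
    xs = {x for x, _ in samples}
    ys = {y for _, y in samples}
    return len(xs), len(ys)


for name, pattern in (("ordered", ORDERED_GRID), ("rotated", ROTATED_GRID)):
    nx, ny = distinct_axis_positions(pattern)
    print(f"{name}: {nx} distinct x positions, {ny} distinct y positions")
```

Both patterns cost four samples, but the rotated one covers four distinct positions per axis instead of two, so near-horizontal and near-vertical edges get more intermediate shades for the same work.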

only on non-integer multiples.

I'm happy for you. I just don't see how spending hundreds or even thousands of dollars since 2010 has any impact on how I or anyone enjoys a game. I actually asked the question legitimately to see if I wanted to go in on a gaming PC as a console player, but I never get a straight answer. Yeah, there are separate settings, lower this or that, but that's not the point. From what I read on GAF and other sites, PC gaming is the end-all, be-all of game experience, and that's why I ask you: how much do you have in your PC right now to enjoy the games like you do at this moment?

Today I learned!

Is that also why DSR settings like 1.50x, 1.78x, or 2.25x look bad?
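As a rough check on the DSR question, the sketch below assumes the DSR factor multiplies the total pixel count, so the per-axis scale is its square root. The function names and the 0.01 tolerance (to absorb rounded factors such as 1.78x) are assumptions for illustration.

```python
import math


def per_axis_scale(dsr_factor):
    """Per-axis scale, assuming the DSR factor multiplies total pixel count."""
    return math.sqrt(dsr_factor)


def is_integer_multiple(dsr_factor, tolerance=0.01):
    """True when the per-axis scale is (nearly) a whole number."""
    scale = per_axis_scale(dsr_factor)
    return abs(scale - round(scale)) < tolerance


for factor in (1.50, 1.78, 2.25, 4.00):
    scale = per_axis_scale(factor)
    print(f"{factor:.2f}x -> {scale:.3f} per axis, integer: {is_integer_multiple(factor)}")
```

Under that assumption only 4.00x maps each display pixel onto a whole 2x2 block of rendered pixels. The other three factors land between pixel boundaries, so the result depends on the scaling filter.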

You are never going to get a straight answer because PCs are so scalable to the hardware, graphics, and framerates you want. Everybody has a price point they are willing to pay to enjoy the games they want at the technical specs they have. It all comes down to personal preference on PC.

The problem is, PC gaming talk has devolved into playing games at the very highest settings regardless of cost or the performance-to-graphics ratio.

This isn't a useful question to ask. How much you enjoy whatever experience on PC for x price is completely variable depending on the person. Bad questions get bad answers.

Dude, you're gettin' a Dell!

What's the difference? I thought they were different names for the same thing.

I want to enjoy playing games the same way he does. I have tons of disposable income and I'd like to pretty much copy exactly what he has because I've seen him post about how he likes his settings, etc. Nothing hard about that. I'm legitimately curious. I don't know why anyone skirts around the issue. Post your hardware and prices. Is that so hard?

This man speaks the truth.

Because 4K looks unbelievable if you have the horses to pull it off.

Display quality matters a lot too. I was caught up in the 4K hype until I saw LG's 1080P OLED - looks better than any 4K LCD I've seen despite the lower resolution (as long as you're not sitting super close). I'll wait until I can afford a 4K OLED.

That's a... tall order. The 970 can run games at 4K, sure, but The Witcher 3 might be too much even one notch below max. And 3.5 GB of VRAM will probably not suffice.

720p looks like a blurry mess on my 1440p display.

1080p looks better than it. (I think this is what you are talking about, right?)
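For reference, the per-axis ratios in that 1440p example can be worked out exactly. This is a minimal sketch; `upscale_ratio` is a hypothetical helper, and it says nothing about which filter a given monitor or GPU scaler actually applies.

```python
from fractions import Fraction


def upscale_ratio(display_height, render_height):
    """Exact per-axis ratio between display and render resolution."""
    return Fraction(display_height, render_height)


for render_height in (720, 1080):
    ratio = upscale_ratio(1440, render_height)
    kind = "integer" if ratio.denominator == 1 else "non-integer"
    print(f"{render_height}p on 1440p: {ratio} ({float(ratio):.3f}x, {kind})")
```

720p is an exact 2x fit and 1080p is 4/3x, so an integer ratio alone does not guarantee a sharp image. If the scaler still interpolates, the lower source resolution can end up looking softer.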

If the game looks incredible it's understandable that the GPU can't do that, but this game looks average, so if a 980 can't run it at 60 FPS it's badly optimized. It's obvious, really.

Guys, let's say you had to choose between something like 4K 30 fps with ~medium overall settings, or 1080p 60fps with ~ultra overall settings. Which would you choose? I know it's hard to say when we don't know what the difference in settings looks like yet, but just in general, what is your preference?

Yeah, the issue is I have a 4K monitor, but I always see people saying that you should play at your monitor's native resolution. Mine is 4K, but in a game like Witcher 3 I'd probably end up getting around 30 fps on medium settings. I don't know if this would look better than something like 1080p 60fps ultra.

Whatever your native screen resolution is and viewing distance.

I'm playing from the sofa, 42" 720p plasma, 1080p Ultra settings, 8 foot viewing distance.

If you had the choice, I think the 1080p ultra is going to look better overall than 4K at medium settings, as a lot of detail is just gone. You just see glaring issues quicker with 4K.

And for me 30fps is not enjoyable.
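To put rough numbers on the 4K 30fps versus 1080p 60fps choice, the sketch below compares raw pixels rendered per second. It assumes 3840x2160 and 1920x1080 and ignores everything else that affects performance (settings, VRAM, CPU). `pixels_per_second` is a hypothetical helper.

```python
def pixels_per_second(width, height, fps):
    """Raw pixel throughput for a resolution and framerate."""
    return width * height * fps


uhd_30 = pixels_per_second(3840, 2160, 30)
fhd_60 = pixels_per_second(1920, 1080, 60)

print(f"4K @ 30fps:    {uhd_30:,} pixels/s")
print(f"1080p @ 60fps: {fhd_60:,} pixels/s")
print(f"ratio: {uhd_30 / fhd_60:.1f}x")
```

By this crude measure 4K at 30fps still pushes about twice as many pixels per second as 1080p at 60fps, which is part of why it tends to force lower settings.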

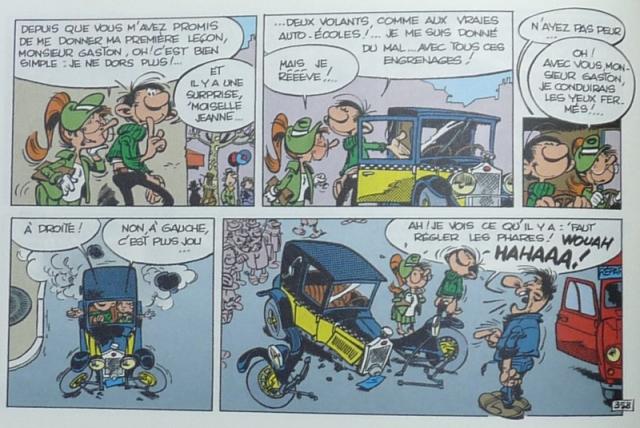

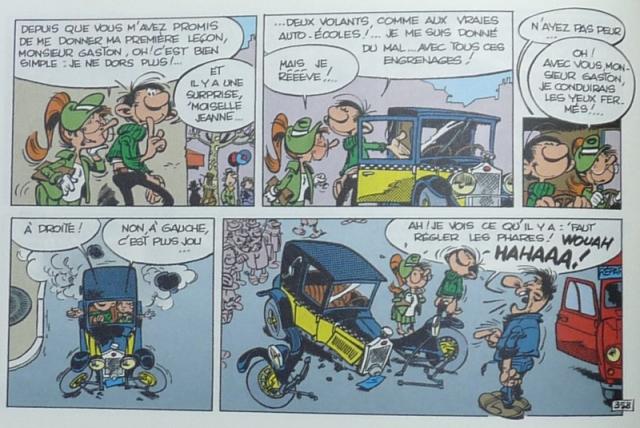

Could anyone translate? I'm genuinely interested in what it's about haha.

this

I think it depends most on how much VRAM TW3 uses. Shading-wise, 30 fps @ 4K seems perhaps reasonable @ high. But it is obviously hard to say.

I somehow missed this thread entirely.

I have a 3770K, 970 FTW Edition, and 16 gigs of RAM.

Is it possible that I will be able to do a mix of Ultra and High at 4K @ 30fps?

That would be fantastic.

How much slower is a 970 FTW than a 980?

Edit: Looking at this 970 FTW review,

http://www.overclockers.com/evga-gtx970-ftw-graphics-card-review/

it looks like the 970 FTW is never more than 10 frames behind the 980, in any game, most of the time less.

I wonder then if I could actually manage all Ultra at half the framerate but 4K... Somehow doubt it, but my hope is now higher for a mix of Ultra/High 4K.

Who cares about another Witcher?

More news on Cyberpunk, please.

Does anybody regret buying a 970 when you know you need a 980 to play on ultra?

Titan X should knock it out of the park then. Can't wait.

You need a 980 to play Ultra at 60fps solid.

A 970 will easily run it on Ultra at 30-40+ FPS.

My 970 FTW edition is within 10 frames of a stock 980 on pretty much every single benchmark.

So no.

Although I am considering buying a second 970 FTW+ just so my 4K options are more robust.