cinnamonandgravy

Member

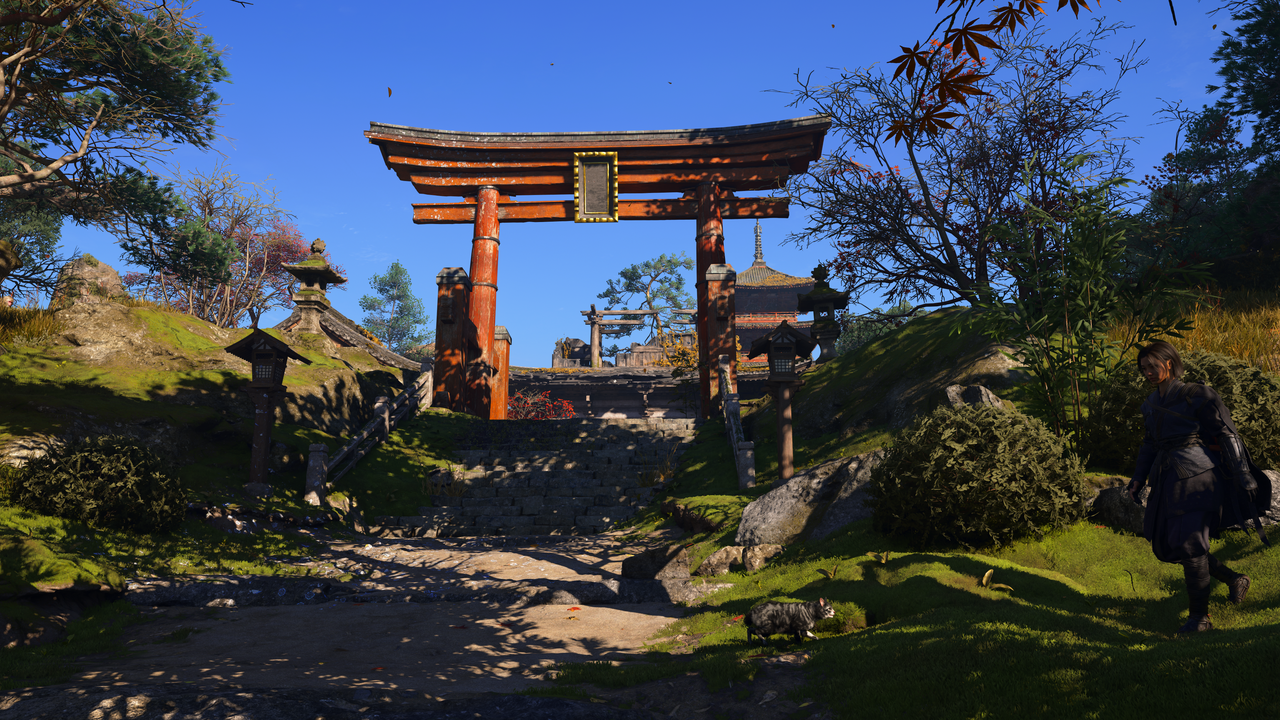

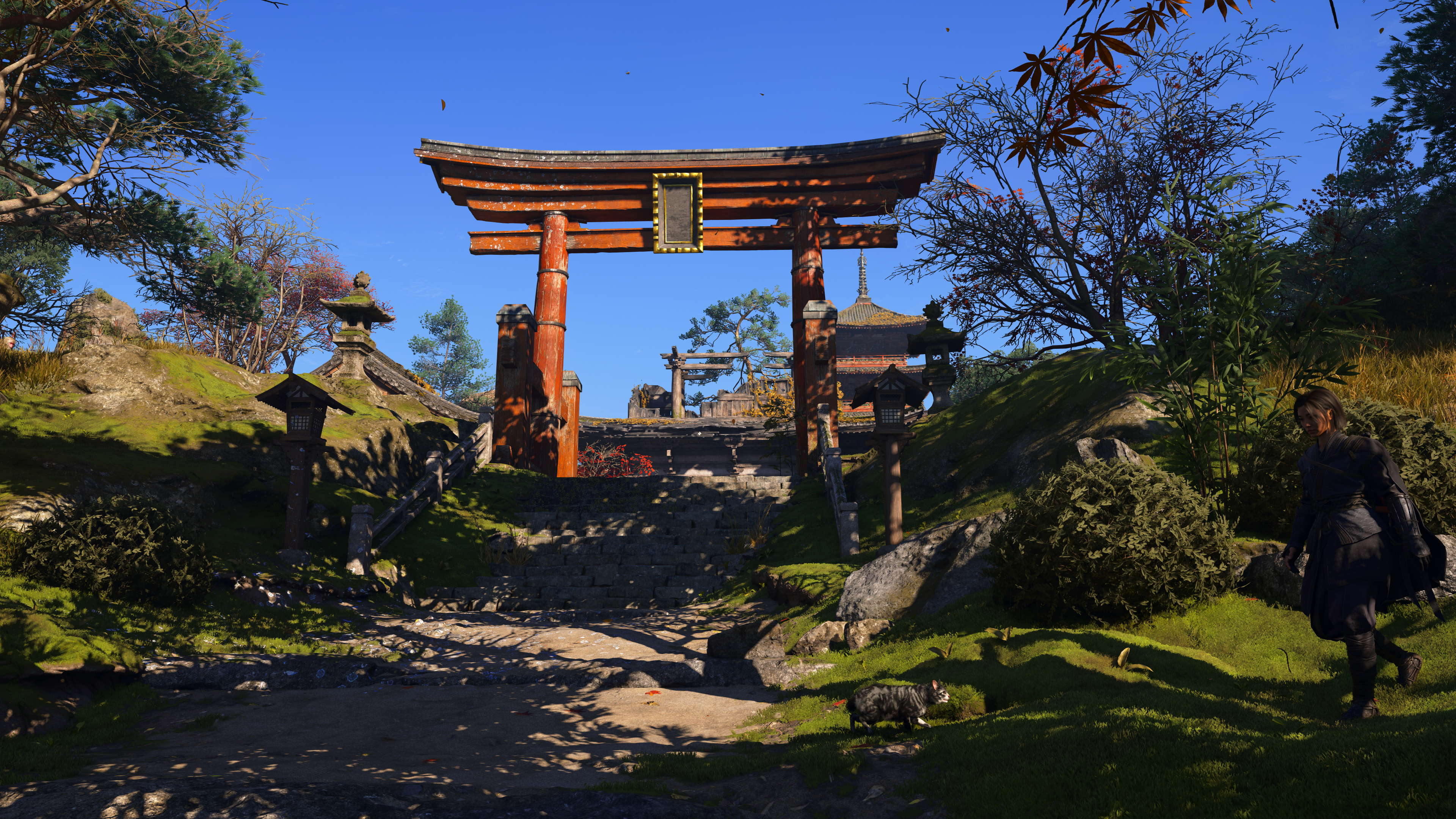

first AC game this gen that isn't cross-gen

Agreed, this game feels really next gen

cross-gen should be illegal.

Nice shots, and in performance mode too, but... I bet you can take similar shots in any current-gen game that has a photo mode. You know well what I meant: screenshots don't do the game justice. How are you gonna show the weather effects in a screenshot?

Tbh, screenshots can do the game justice as well. These are my own pictures, but they were taken on console and not on PC.

RDR2 is old and dated and didn't even look that great compared to other PC games when it came to PC. It's a great looking PS4 game but that's it.

Is it better than Red Dead 2? Seems like it's the benchmark touted by most people.

Is playing an Ubisoft game the only thing worth doing on a computing device?

This is actually exactly why I don't understand the point in owning a $3000 PC. Could you imagine spending $3000 to play a fucking Ubisoft game? Lmao

RDR2 maxed out on PC looks fantastic.

RDR2 is old and dated and didn't even look that great compared to other PC games when it came to PC. It's a great looking PS4 game but that's it.

The game is drop-dead gorgeous. Seriously, I'm blown away by the visuals. Ubi knocked it out of the park technically.

Never knew opinions could be factually wrong, but here we are.

RDR2 is old and dated and didn't even look that great compared to other PC games when it came to PC. It's a great looking PS4 game but that's it.

Let me put it to you this way. Super Mario World 2 looks fantastic and that's running on a SNES, TLoZ: Wind Waker looks fantastic and that's running on a GameCube. Now if Super Mario World 2 and Wind Waker launched on PC today they'd still look great, but they would be dated in many ways.

RDR2 maxed out on PC looks fantastic.

You exposed your ignorance when you said it didn't look great compared to other PC games when it came out in 2019. Pray tell, what PC games looked better than RDR2 in 2019?

Let me put it to you this way. Super Mario World 2 looks fantastic and that's running on a SNES, TLoZ: Wind Waker looks fantastic and that's running on a GameCube. Now if Super Mario World 2 and Wind Waker launched on PC today they'd still look great, but they would be dated in many ways.

RDR2 is a PS4 game in every way. It launched on PC in 2019, but it used older tech and didn't try to take advantage of what PC could do at the time, the way Cyberpunk did in 2020, Forza Horizon 5 did in 2021, Callisto Protocol did in 2022, Alan Wake 2 did in 2023, Wukong and Indiana Jones did in 2024, and Doom: The Dark Ages is doing in 2025. RDR2 essentially grabbed what they did on PS4 and ported it to PC without much fuss. To give some credit, they did add DLSS, but the game already had its own TAA, so adding DLSS is easy at that point.

In February 2019 we had Metro Exodus (the non-Enhanced Edition) with RT GI providing Assassin's Creed Shadows level lighting (the Assassin's Creed Shadows RT GI is actually heavily inspired by what Metro Exodus achieved). Then we got Control, which brought a crazy level of RT effects before Cyberpunk took things even further. Control with all RT effects on looks much better than RDR2.

RDR2 wasn't leveraging any cutting-edge tech; it was all stuff made to run on AMD GCN1 hardware from 2012. It had great art direction and was a big budget game, but it wasn't some technical feat on PC (the game literally ran well on an HD 7950 from 2012). Think of it this way: on console RDR2 was a big fish in a small pond, in 2019 on PC it was a small fish in a big pond.

I don't want to be combative. I'll just say a game can be demanding for many reasons. Cyberpunk is a much more impressive game than RDR2 (on PC, remember this is about PC) because it looks better and achieves so much more in a far more complex environment. RDR2 wasn't known for being ultra optimized. See here to refresh your memory:

You exposed your ignorance when you said it didn't look great compared to other PC games when it came out in 2019. Pray tell, what PC games looked better than RDR2 in 2019?

Also funny you talk about hardware requirements, when maxed out, it's still more demanding than most games without RT.

Cyberpunk came out 1 year later and was widely believed to be the best looking game ever and is still a benchmark to this day.

I don't want to be combative. I'll just say a game can be demanding for many reasons. Cyberpunk is a much more impressive game than RDR2 (on PC, remember this is about PC) because it looks better and achieves so much more in a far more complex environment. RDR2 wasn't known for being ultra optimized. See here to refresh your memory:

Yea I'll answer when you address the rest of my post (both posts) instead of ignoring what's inconvenient to your narrative. How about that? No? That says all anyone needs to hear. Glad we cleared things up.

Cyberpunk came out 1 year later and was widely believed to be the best looking game ever and is still a benchmark to this day.

I ask again, what game looked better than RDR2 in 2019? You said it didn't look great compared to other PC games when it was released. Surely, you must have a laundry list of games from 2019 and before that looked better.

Why the fuck would I answer you when you didn't answer me? I asked the question first and you blatantly ignored it. Now you want me to address your post? Lol.

Yea I'll answer when you address the rest of my post (both posts) instead of ignoring what's inconvenient to your narrative. How about that? No? That says all anyone needs to hear. Glad we cleared things up.

In 2019, RDR2's assets still looked good, because people were used to raster lighting and PS4 / Xbox One level geometry and texture quality. But yes, Rockstar could theoretically have improved asset quality on PC, or pushed RTX technology to improve the game's graphics even more. A game built with the PC platform in mind would do that (like Cyberpunk, for example). But at least the RDR2 port on PC offered much higher detail settings (especially shadow quality, draw distance, grass density) compared to consoles, so it wasn't a low-effort port.

Let me put it to you this way. Super Mario World 2 looks fantastic and that's running on a SNES, TLoZ: Wind Waker looks fantastic and that's running on a GameCube. Now if Super Mario World 2 and Wind Waker launched on PC today they'd still look great, but they would be dated in many ways.

That's not possible considering there were several settings that were lower than the lowest available on PC.

My only complaint at the time was the requirements on PC. I had a 1080ti (OC'ed to 14TF) and the game ran comparable to the Xbox One X (6TF) even with Xbox-like settings, while in all other games I was able to double the Xbox One X's frame rate even when I maxed out settings. GPUs that run Xbox One / PS4 ports well (GTX780 / GTX970 / Radeon 7970) struggled to even run RDR2. RDR2 on a PC is still demanding even today if you max out all the settings at 4K.

The Xbox One X had a 6TF GPU. My Asus Strix 1080ti had 14TF, that's more than twice the GPU power, and keep in mind the Pascal architecture was also more efficient.

That's not possible considering there were several settings that were lower than the lowest available on PC.

Furthermore, you running at double the performance of an Xbox One X on your 1080 isn't because the 1080 is twice as powerful as the X1X's GPU, it's because of the console's anemic CPU. Its GPU is between a 1060 and a 1070. The closest AMD equivalent is the RX 580. The 1080 is nowhere near twice as powerful.

DF states: Note: Some of Xbox One X's effects appear to be hybrid versions of low and medium, or medium and high. We'd recommend starting with the higher quality version, but should emphasise that the lower quality rendition of the effect still looks quite comparable across the run of play.

So you couldn't quite match the settings.

Also: Volumetric resolution - one of the game's defining features, remember - delivers a 'lower than low' quality level on Xbox One X, as does far shadow quality (and yet both look absolutely fine!)

Those two settings are incredibly demanding and you couldn't even match them on your 1080. I also bet your 1080 ran it at more than 30fps but obviously not 60fps, maybe ~40? Your results really aren't out of the ordinary if we contextualize and consider everything.

No, that's twice the compute throughput, not GPU power, which cannot be measured by any single metric.

The Xbox One X had a 6TF GPU. My Asus Strix 1080ti had 14TF, that's more than twice the GPU power, and keep in mind the Pascal architecture was also more efficient.
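For context, the TF figures traded here are theoretical peak FP32 throughput, conventionally computed as 2 operations (one fused multiply-add) per shader core per clock. A minimal sketch of that arithmetic follows. The core counts and clocks are assumptions taken from public spec sheets rather than from this thread, and the 1.95 GHz overclock is a hypothetical value chosen only because it lands near the 14TF quoted above.

```python
def peak_tflops(shader_cores: int, clock_ghz: float) -> float:
    """Theoretical peak FP32 TFLOPS: 2 ops (one FMA) per core per clock."""
    return 2 * shader_cores * clock_ghz / 1000.0


# Assumed spec-sheet values (not stated in the thread).
XBOX_ONE_X = (2560, 1.172)        # 40 CUs x 64 shaders, 1172 MHz
GTX_1080_TI_STOCK = (3584, 1.582) # reference boost clock
GTX_1080_TI_OC = (3584, 1.95)     # hypothetical overclock, roughly 14 TF

x1x = peak_tflops(*XBOX_ONE_X)
stock = peak_tflops(*GTX_1080_TI_STOCK)
oc = peak_tflops(*GTX_1080_TI_OC)

print(f"Xbox One X:        {x1x:.2f} TF")
print(f"1080 Ti (stock):   {stock:.2f} TF")
print(f"1080 Ti (OC):      {oc:.2f} TF")
print(f"OC / X1X ratio:    {oc / x1x:.2f}x")
```

The ratio this prints is a ratio of peak compute only. It says nothing about memory bandwidth, CPU limits, or architectural differences, which is the objection being raised in the reply above.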

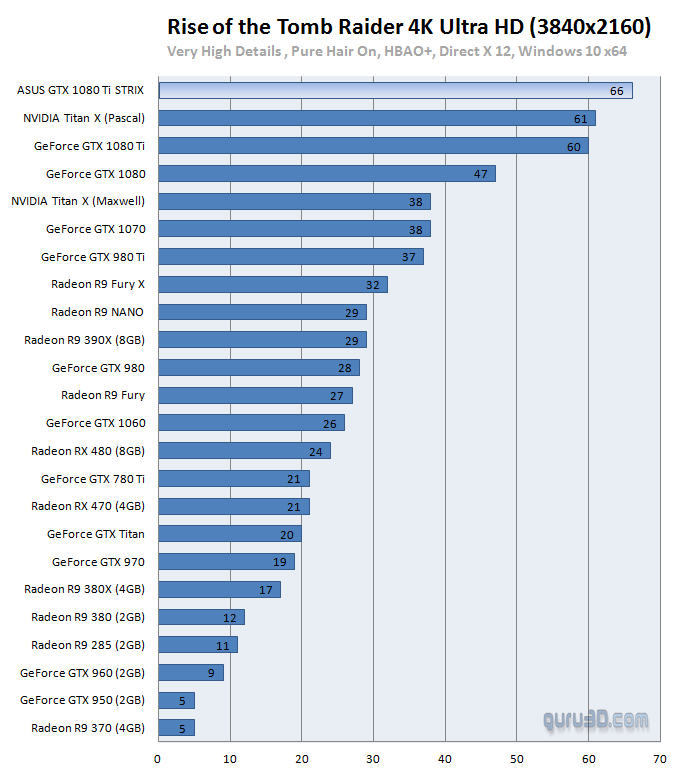

I read 1080, not 1080 Ti.

This is with stock settings. With OC I probably had over 70fps in this benchmark because my Asus Strix was an OC monster. There are no RX580 (Xbox One X equivalent GPU) results in this chart, but that card was somewhere between the RX480 and the GTX980, yet my 1080ti still doubled the performance of the GTX980. You still think that my GTX1080ti wasn't twice as fast as the Xbox One X?

Some settings on the Xbox One X were lower than low on PC, but I don't believe they would affect framerate noticeably and they sure didn't make any visual difference to me. The game looked exactly the same on my Xbox One X and GTX1080ti.

1080ti with hardware unboxed optimized settings. 30-40fps at 4K native.

No, that's twice the compute throughput, not GPU power which cannot be measured by any single metric.

Furthermore, you're comparing different architectures. How many times are we going to do this exercise?

I read 1080, not 1080 Ti.

HU's optimized settings are much higher than DF's Xbox One X settings. Here is the game with Xbox One X settings (actually higher) running on a 1080 Ti.

45-50fps in towns. DF said the Xbox One X has "minor" dips in those places. The 1080 Ti runs this game 50-60% better than the Xbox One X. Factor in the higher settings that cannot be dropped to lower than low, or custom values that are set higher rather than lower, and you're looking at a few extra percent for the 1080 Ti. Even if those settings increased the performance by a small 10%, this would make it around 80% faster than the Xbox One X. Then there's the small overhead from Shadowplay which might cost 1-5fps.

So no, your game most certainly wasn't running with comparable performance to the Xbox One X on your 1080 Ti with the same settings. It was running 50% better at least with higher settings to boot.
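The percentages in this post can be checked with a few lines of arithmetic. This is only a sketch under the post's own assumptions: the console is taken to hold its 30fps cap, the 45-50fps range is the one read off the video, and the 10% gain from matching the lower-than-low settings is the hypothetical figure floated above, not a measurement.

```python
def speedup_pct(fps: float, baseline_fps: float) -> float:
    """How much faster fps is than baseline_fps, in percent."""
    return (fps / baseline_fps - 1.0) * 100.0


CONSOLE_FPS = 30.0           # Xbox One X cap, assumed to be held
PC_FPS_RANGE = (45.0, 50.0)  # 1080 Ti in towns, as quoted in the post
SETTINGS_GAIN = 0.10         # hypothetical gain from console-matched settings

# Speedup as measured, with the PC running higher settings.
raw = [speedup_pct(f, CONSOLE_FPS) for f in PC_FPS_RANGE]

# Speedup if the PC could also drop to the console's settings.
adjusted = [speedup_pct(f * (1 + SETTINGS_GAIN), CONSOLE_FPS) for f in PC_FPS_RANGE]

print(f"as measured:        {raw[0]:.0f}% to {raw[1]:.0f}% faster")
print(f"with settings gain: {adjusted[0]:.0f}% to {adjusted[1]:.0f}% faster")
```

Under those assumptions the top of the adjusted range is where the "around 80%" figure comes from. The comparison also treats 30fps as the console's true throughput, which the cap makes impossible to verify.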

RDR2 looks extremely dated in comparison.

In 2019, RDR2's assets still looked good, because people were used to raster lighting and PS4 / Xbox One level geometry and texture quality. But yes, Rockstar could theoretically have improved asset quality on PC, or pushed RTX technology to improve the game's graphics even more. A game built with the PC platform in mind would do that (like Cyberpunk, for example). But at least the RDR2 port on PC offered much higher detail settings (especially shadow quality, draw distance, grass density) compared to consoles, so it wasn't a low-effort port.

My only complaint at the time was the requirements on PC. I had a 1080ti (OC'ed to 14TF) and the game ran comparable to the Xbox One X (6TF) even with Xbox-like settings, while in all other games I was able to double the Xbox One X's frame rate even when I maxed out settings. GPUs that run Xbox One / PS4 ports well (GTX780 / GTX970 / Radeon 7970) struggled to even run RDR2. RDR2 on a PC is still demanding even today if you max out all the settings at 4K.

When the PS5 / XSX launched, however, I started noticing the age of RDR2's graphics. RDR2 still impresses people with its graphics because the game has beautiful scenery and art direction, but current-gen games offer much higher quality assets and way more advanced lighting.

RDR2

AC:S on the PS5 Pro

rofif Screenshots

For example, the forest in AC:S is far denser than in RDR2, and each tree is way more detailed and has much higher texture quality. Even if you look at the surface of the ground, there's a noticeable difference. But the biggest difference is in the lighting. RDR2 uses raster lighting, AC:S uses RT. If you know what RT does and where to look, it's a generational difference.

The console dips to below 30 in this area. It's the heaviest town and with the fog, it's even worse. It wouldn't run with an overhead of 5-10fps here at all. It'd be lucky to even maintain 30 without hitching.

The GTX1080ti in this video has around 45 fps in the gameplay segment. We don't know how many fps the Xbox One X GPU could render without the 30fps cap, but the game had a solid 30fps on the Xbox One X. This means that the Xbox GPU could render 5-10fps more on average in order to maintain 30fps most of the time.

Because you can't actually mix and match your settings to the level of an X1X. There's nothing outrageous with the performance. The 1080 Ti is at a bare minimum 50% faster than the X1X and if we're being honest, it's much closer to 80% or more.

My old 4K Sony Bravia X9005B only supported a fixed refresh rate of 60Hz, so if I wanted to play without constant judder I had to choose either 30fps or 60fps, there was nothing in between. If I wanted to run RDR2 at 4K native, I had to run the game with a 30fps lock, exactly like the Xbox One X. The GTX1080ti was however able to run this game at 60fps, but at 1440p instead of 4K, while the Xbox version didn't have a performance mode (60fps).

And this very article highlights 6 settings that run on lower than low.

Digital Foundry also benchmarked many different GPUs with details comparable to Xbox One X settings (I'm not saying exact Xbox One X settings, but I doubt these small differences could improve GPU performance noticeably). The first GPU capable of running RDR2 in 4K at an average of 60fps was the 2080ti (GPU power comparable to PS5 Pro), and these settings were close to Xbox One X settings, not even maxed out settings. It takes an RTX3080 to run this game at 4K 60fps with maxed out settings, and that's insane considering RDR2 was just a PS4 port.

What does it take to run Red Dead Redemption 2 PC at 60fps?

Do you really need ultra settings?

www.eurogamer.net

I was disappointed with the optimization of this game, because other Xbox One X ports could run on my PC with much higher settings and twice the framerate on top of that. For example, I remember playing Rise Of The Tomb Raider, Gears Of War 4, Forza Horizon 3 and AC Origins at 4K 60fps. 40fps with details comparable to the Xbox One X was very disappointing to me, and therefore I always considered the RDR2 port to be very demanding. I think around the PS5 launch developers stopped optimizing console ports on PC, especially PlayStation ports. For example, Uncharted 4 ran at 1080p on the PS4's 1.8TF GPU (7850 / 7870 Radeon class), but on PC you had to use a 5.6TF Radeon 290X just to run the game at 720p and minimum settings. Soon TLOU2 will be ported to PC and this PS4 port will also require a much more powerful GPU than the 1.8TF Radeon 7850/7870.

I'm starting this tonight. The ACG review sold me. Why the F are people not talking more about the physics objects and being able to slice things realistically with the sword? That is awesome. So many games are totally lacking when it comes to interactable objects.

I don't know how much of a difference these little tweaks would make, but they certainly wouldn't boost my framerate from 45fps to the desired 60-70fps. That's how my 1080ti ran other Xbox One X ports even with maxed out settings.

The console dips to below 30 in this area. It's the heaviest town and with the fog, it's even worse. It wouldn't run with an overhead of 5-10fps here at all. It'd be lucky to even maintain 30 without hitching.

Because you can't actually mix and match your settings to the level of an X1X. There's nothing outrageous with the performance. The 1080 Ti is at a bare minimum 50% faster than the X1X and if we're being honest, it's much closer to 80% or more.

And this very article highlights 8 settings that run on lower than low.

Far Shadow Quality, Reflection Quality, Near Volumetric Resolution, Far Volumetric Resolution, Water Physics Quality (1/6), Tree Quality, Grass Level of Detail (2/10)

In the case of hybrid settings, DF went with the higher preset. The reason you're seeing such performance on PC is because you can't go that low. The Xbox One X could easily drop to 20fps with those settings and you'd have the 1080 TI running at 2.25-2.5x its performance.

It's not so much about how your frame rate would increase as it is about how much the Xbox One X's frame rate would decrease. The run showed 45-50fps. If dropping those settings added 5-10fps, we're suddenly at 50-55fps or 55-60fps. Is it that outlandish? Plus, the DF article also highlights that AMD cards perform better relative to their NVIDIA counterparts.

I don't know how much of a difference these little tweaks would make, but they certainly wouldn't boost my framerate from 45fps to the desired 60-70fps. That's how my 1080ti ran other Xbox One X ports even with maxed out settings.

Frequent 20fps dips? Xbox One X gameplay, flat 30fps even in the same town.

There is no way of knowing how many fps the 1080ti would get with exactly the same settings, but you could use the lowest settings across the board and RDR2 still wouldn't run at 60fps on the 1080ti. 42fps with the lowest settings possible. These settings make the game look like crap. Xbox One X settings were definitely more demanding, yet the 1080ti still can't double the Xbox framerate.

It's not so much about how your frame rate would increase as it is about how much the Xbox One X's frame rate would decrease. The run showed 45-50fps. If dropping those settings added 5-10fps, we're suddenly at 50-55fps or 55-60fps. Is it that outlandish? Plus, the DF article also highlights that AMD cards perform better relative to their NVIDIA counterparts.

Again, it's nothing we haven't seen before. Some games run better on AMD, others on NVIDIA.

You said: "The Xbox One X could easily drop to 20fps". Different words, but the same meaning.

I didn't say frequent. John mentioned there were occasional dips in the heaviest area.

A 32fps average is not good enough to run the game at a locked 30fps for 99.9% of the time. And remember, even if the game did dip below 30fps on occasion, the CPU was most likely to blame, especially in cities. The Xbox One X had a very slow CPU.

This means there's almost no overhead above 30, so it's very unlikely it would run at 35-40fps, more like 29-32fps.

Yeah.....the cutscenes are definitely weird, but.....they are cutscenes. I don't know what he is talking about with the rest of the game. It runs great.

I have no idea. Only AMD knows that.

No, I have not. Any idea how long they stay in beta?

I have no idea. Only AMD knows that.

AMD is not playing around lately. They optimized Monster Hunter Wilds by 26% and now AC:Shadows by 20%. I can't remember the last time new Nvidia drivers increased frame rates this much. I guess Nvidia engineers are too busy counting money now.