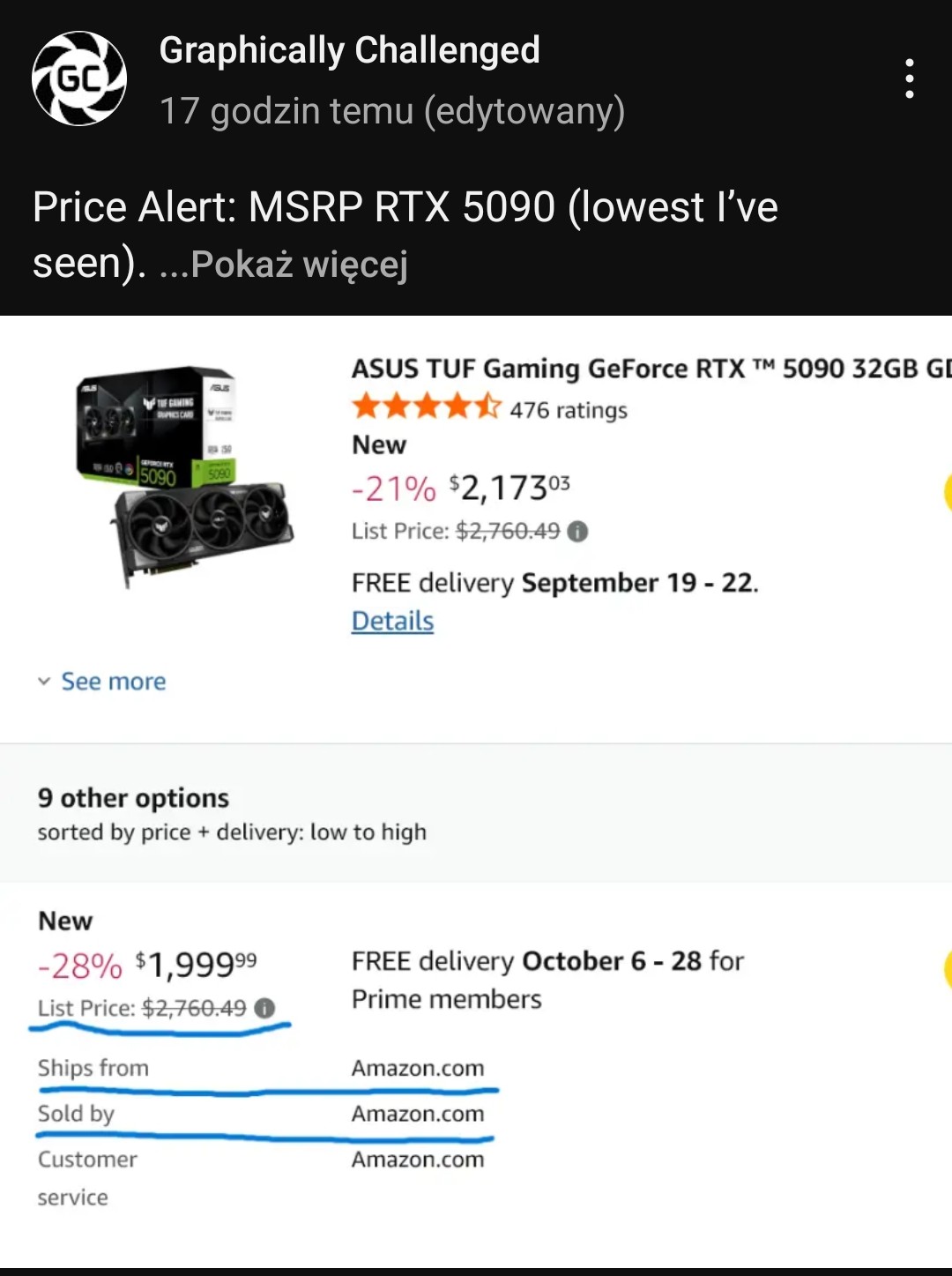

The RTX 5090 has a $2,000 MSRP, and it's actually available at that price right now. I know that's still expensive, but it's nowhere near the $5,000 you mentioned.

Moreover, the RTX 5090 certainly isn't struggling to run Borderlands 4 at 1080p and 60 fps, as my 4080S can already hold a locked 60 fps with Badass (maxed out) settings at 1080p. I'm guessing you watched YouTubers who showed Borderlands 4 performance while the game was still compiling its shaders in the background. When I first started playing, performance was much worse for around 5–10 minutes, but soon I was getting 95 fps instead of 45 fps in exactly the same place. Even if you just change the graphics settings, you need to wait a few minutes for performance to improve.

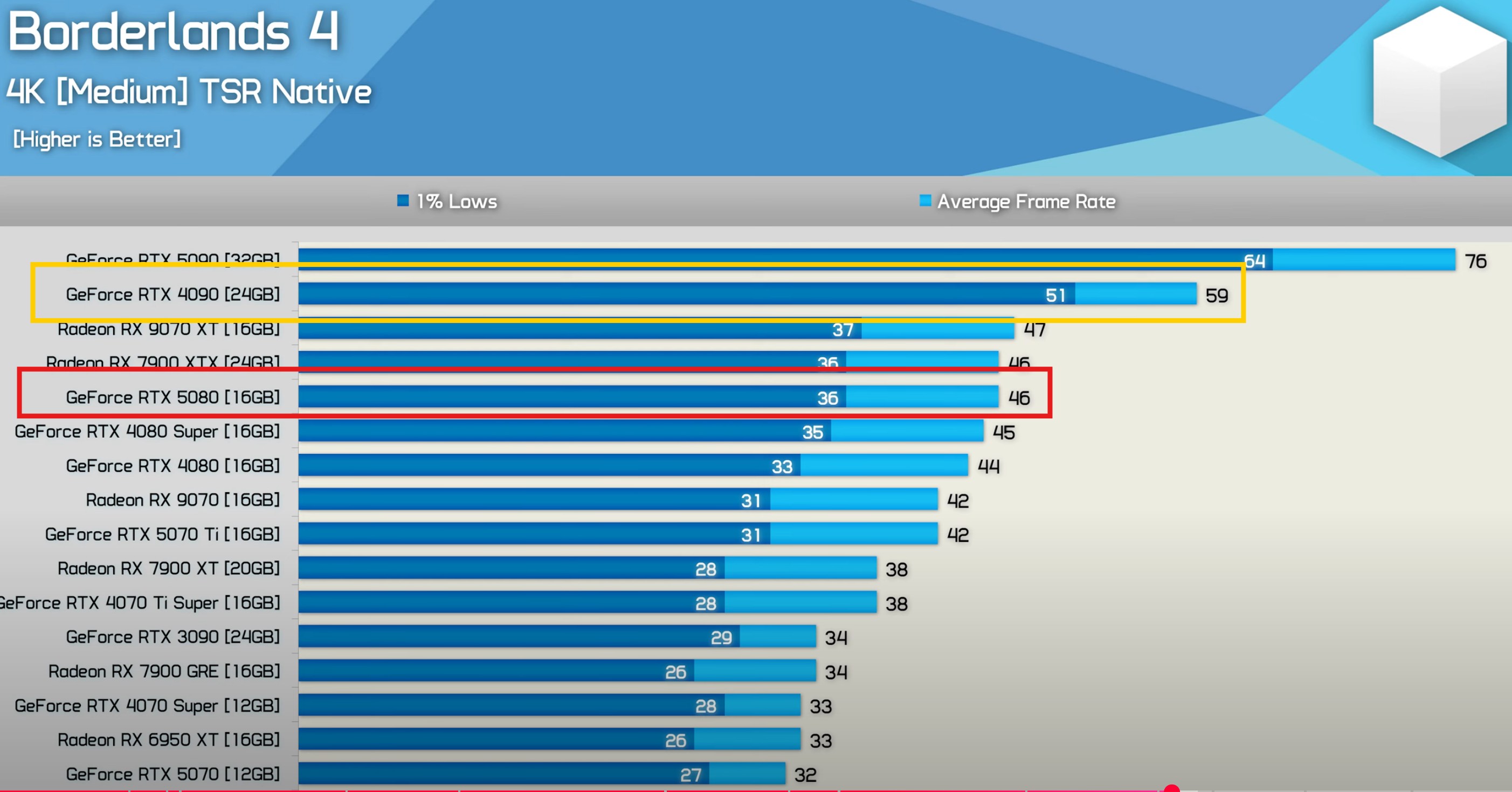

Here's 1080p DLAA (native) with Badass (maxed out) settings on my RTX 4080S. Keep in mind the RTX 5090 is twice as fast (and twice as power hungry, and three times as expensive).

At 1440p with DLAA (native) and High settings, the game runs below 60 fps on my PC. However, I think the 5090 could handle these settings and hold a consistent 60 fps, because even my 4080S isn't that far from that target.

With DLSS Quality, Borderlands 4 already runs at well over 60 fps at 1440p with Badass settings.

With FGx2 on top of that, the game feels smooth as butter at 138 fps, and I measured latency between 32 and 36 ms. Without FGx2, latency was 28-32 ms, so not a big difference, and you get a much smoother and sharper image in motion. It's also easier to aim than at the base framerate, so IMO DLSS FGx2 is a must in this game.
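Why the latency penalty stays small: with 2x frame generation, only every other displayed frame is actually rendered by the game, so responsiveness is tied to the base framerate, not the number on the counter. A minimal Python sketch of that arithmetic, assuming FGx2 exactly doubles the output (in practice the FG overhead costs a few base frames, so the real base framerate is slightly lower):

```python
def frame_generation_breakdown(displayed_fps: float, fg_factor: int = 2) -> dict:
    """Split a frame-generated fps reading into its rendered (base) part.

    Assumption: every fg_factor-th displayed frame is rendered by the game and
    the rest are generated, i.e. base fps = displayed fps / fg_factor.
    """
    base_fps = displayed_fps / fg_factor
    return {
        "base_fps": base_fps,
        # Time between frames you see on screen (smoothness)
        "displayed_frame_time_ms": 1000 / displayed_fps,
        # Time between frames the game actually simulates and renders
        "base_frame_time_ms": 1000 / base_fps,
    }


if __name__ == "__main__":
    # 138 fps with FGx2, as measured above
    for name, value in frame_generation_breakdown(138).items():
        print(f"{name}: {value:.1f}")
```

For 138 fps this gives a base of 69 fps, i.e. about 14.5 ms per rendered frame versus about 7.2 ms per displayed frame, which is why motion looks much smoother while input latency only moves by a few milliseconds.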

Here's a comparison between Badass, High, and Medium settings at 1440p DLSS Quality + FGx2:

On my old 2560x1440 LCD I would probably play with Badass settings, but on a 4K OLED monitor I want a much sharper image, so I need to lower some settings.

I need to use Medium settings to get a similar framerate at 4K DLSS Quality (75-90 fps).

With FGx2 I get around 130-150 fps.

I can also use the High settings preset and achieve a similar framerate by choosing DLSS Performance instead of DLSS Quality.

Here's 4K DLSS Ultra Performance with High settings and FGx2 (170-180 fps).

4K DLSS Ultra Performance with Medium settings and FGx2: 190 fps. I wouldn't be surprised if the PS5's image quality looked worse than that. Perhaps a kind PS5 owner will share a screenshot taken in exactly the same spot for a proper comparison. I didn't buy that awesome console, so I can't post my own comparison.
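The big framerate jumps between DLSS presets make sense once you look at how many pixels the GPU actually renders before upscaling. A small Python sketch, assuming the commonly cited default per-axis scale factors for DLSS Super Resolution (Quality 2/3, Performance 1/2, Ultra Performance 1/3); individual games or driver overrides may use different ratios:

```python
from fractions import Fraction

# Assumed default per-axis render scales for DLSS Super Resolution presets.
DLSS_SCALE = {
    "DLAA": Fraction(1, 1),
    "Quality": Fraction(2, 3),
    "Performance": Fraction(1, 2),
    "Ultra Performance": Fraction(1, 3),
}


def internal_resolution(width: int, height: int, preset: str) -> tuple[int, int]:
    """Return the (width, height) rendered before DLSS upscales to the output."""
    scale = DLSS_SCALE[preset]
    return int(width * scale), int(height * scale)


def pixel_share(width: int, height: int, preset: str) -> float:
    """Fraction of output pixels that are rendered natively."""
    w, h = internal_resolution(width, height, preset)
    return (w * h) / (width * height)


if __name__ == "__main__":
    for preset in DLSS_SCALE:
        w, h = internal_resolution(3840, 2160, preset)
        share = pixel_share(3840, 2160, preset)
        print(f"4K {preset}: {w}x{h} ({share:.0%} of output pixels)")
```

Under these assumptions, 4K DLSS Quality renders 2560x1440 (the same pixel count as native 1440p), 4K Performance renders 1920x1080, and 4K Ultra Performance only 1280x720, which is why Ultra Performance can push such high framerates.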

I don't want to defend Borderlands 4, because this game is much more demanding than a typical UE5 game, and in my opinion the graphics don't justify the requirements. Some of the assets, especially the textures and trees, remind me of original Xbox games, not even Xbox 360 ones.

The lighting can look stunning at times, but I think it would be possible to achieve a similar look at a much lower cost. Borderlands 4 is almost as demanding as path-traced (PT) games, and that shouldn't be the case, because Lumen is supposed to be faster than standard ray tracing; that was the whole point of it. However, thanks to AI, even this demanding game is perfectly playable on PC. It genuinely feels like I'm playing at 4K with 130–140 fps, and that's what matters to me. Sure, 4K DLAA (native) at a real 240 fps would be even better, but it wouldn't change my experience that much. I paid for Tensor Cores, and I don't mind using the AI technology my graphics card provides.