You're technically right, but once again: the new DLSS 4 model, even when upscaling from 720p to 4K, looks crisper than native 1080p. Hell, it produces so few artifacts you could mistake it for native 1440p at times.

Not saying Switch 2 games are gonna look as crisp as native 1440p, obviously, but "upscaling from below 1080p" can mean 20 different input resolutions multiplied by many different AI upscaling methods, and the res you're upscaling to makes a difference too.

720p to 1080p using FSR 1 will produce pretty terrible results, but 720p upscaled to 4K by DLSS 4 will produce very few artifacts, and it will be crisper/less blurry on top.
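
To put rough numbers on those two cases, here is a minimal sketch of the pixel math only. The `RESOLUTIONS` table and the `upscale_factors` helper are made up for illustration, and none of this says anything about how good the result actually looks, since that depends on the upscaler.

```python
# Rough pixel math only; this says nothing about actual image quality.
# Standard 16:9 resolutions (names are just labels for this sketch).
RESOLUTIONS = {
    "720p": (1280, 720),
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "4k": (3840, 2160),
}


def upscale_factors(src, dst):
    """Return (per-axis scale, total pixel ratio) going from src to dst."""
    src_w, src_h = RESOLUTIONS[src]
    dst_w, dst_h = RESOLUTIONS[dst]
    axis_scale = dst_w / src_w
    pixel_ratio = (dst_w * dst_h) / (src_w * src_h)
    return axis_scale, pixel_ratio


for src, dst in [("720p", "1080p"), ("720p", "4k")]:
    axis, pixels = upscale_factors(src, dst)
    print(f"{src} -> {dst}: {axis:.2f}x per axis, {pixels:.2f}x the pixels")
```

So the 720p to 4K case asks the upscaler to fill in far more pixels than 720p to 1080p does, which is why the method matters so much.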

What I'm trying to say is that there are many determining factors: not only the native resolution you're upscaling from, but also the method of upscaling and the final resolution. Hell, the same native-to-final resolution with the same method can still look "different" if we look at it in 2 different games.

My plea here is: let's judge it by the actual final result, not just the native resolution the picture is upscaled from. And very important: if we don't use video footage but screens, let's make sure those screens aren't "stills" but are taken in motion. AI upscaling usually falls apart in motion, where it shows all kinds of artifacts, so if we're ever comparing it to other upscaling or, even better, to native res, the screen captures gotta be of motion, not of stills, if you guys know what I mean.

Here is exactly what I'm talking about, in a 4K YouTube vid: DLSS 4 Ultra Performance, so upscaling from 720p to a final resolution of 4K, plus every other setting on ultra (including ray tracing, which is far from max, since RT can be set way above that with Psycho and even path tracing, but that's for top-of-the-top Nvidia cards only).
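
For anyone wondering where the 720p comes from: a quick sketch, assuming the per-axis render scales commonly cited for the DLSS presets (the `DLSS_SCALE` table and the `internal_resolution` helper are illustrative names, not anything from Nvidia's SDK, and games can override these values).

```python
from fractions import Fraction

# Per-axis render scales commonly cited for the DLSS presets.
# Assumed values for this sketch; individual games may use different ones.
DLSS_SCALE = {
    "quality": Fraction(2, 3),
    "balanced": Fraction(58, 100),
    "performance": Fraction(1, 2),
    "ultra_performance": Fraction(1, 3),
}


def internal_resolution(out_w, out_h, mode):
    """Return the approximate internal render resolution for an output size."""
    scale = DLSS_SCALE[mode]
    return round(out_w * scale), round(out_h * scale)


for mode in DLSS_SCALE:
    w, h = internal_resolution(3840, 2160, mode)
    print(f"4K output, {mode}: renders at about {w}x{h}")
```

Under those assumptions, Ultra Performance at a 4K output works out to 1280x720, which matches the 720p figure above.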

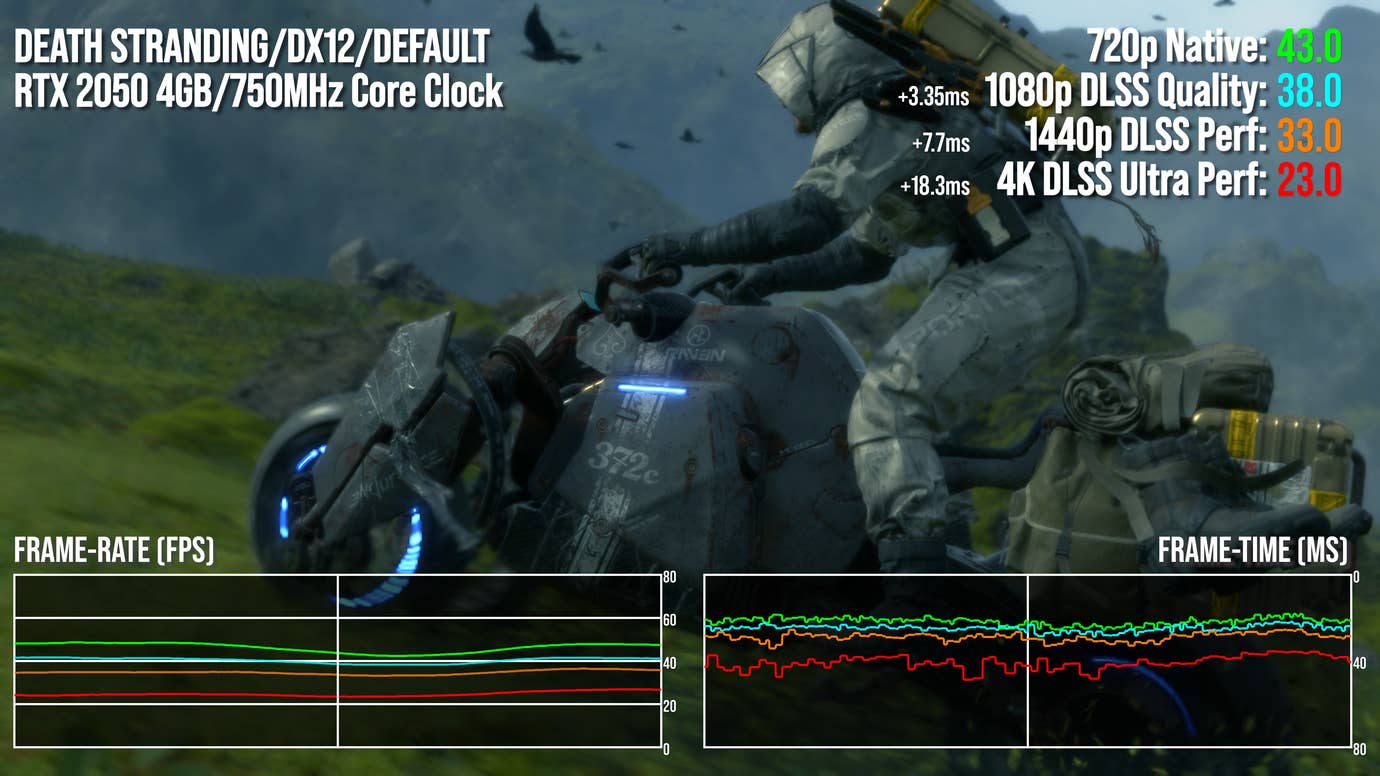

Now let's compare it to native 720p:

Ultra settings too (it's a GTX 1070 Ti, so obviously it can't do any ray tracing and RT is disabled). The game looks good except for image quality, because that's a blurry mess with pixels the size of a grown man's fist.

It makes sense though: the first vid is on a 3090, the 2nd is on a 1070 Ti, and the 3090 run even has all RT on ultra (again, not max, "just" ultra, which is still above current-gen console RT settings. CP2077 didn't get a PS5 Pro patch yet, so we're talking base PS5 and Xbox Series S/X only).

NVIDIA GP104, 1683 MHz, 2432 Cores, 152 TMUs, 64 ROPs, 8192 MB GDDR5, 2002 MHz, 256 bit (www.techpowerup.com)

That's the difference between those GPUs' performance on average: the 3090 is at 269% of the 1070 Ti (so +169%).
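
The percentage math, spelled out as a tiny sketch. The 269% figure is the one quoted above; the `relative_perf` helper is just an illustrative name.

```python
def relative_perf(percent_of_baseline):
    """Turn 'X% of baseline' into (uplift in %, baseline as % of the faster card)."""
    uplift = percent_of_baseline - 100  # how much faster than the baseline
    inverse = 100 * 100 / percent_of_baseline  # baseline relative to the faster card
    return uplift, inverse


uplift, inverse = relative_perf(269)
print(f"3090 vs 1070 Ti: +{uplift}%")
print(f"1070 Ti vs 3090: about {inverse:.1f}% of its performance")
```

Read the other way around, the 1070 Ti lands at a bit over a third of the 3090's average performance.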

Here we've got the current-gen CP2077 patch for PS5/Xbox Series consoles, and that 720p upscaled to 4K with DLSS 4 still looks at least as crisp as native 1440p, if not even crisper somehow. It literally feels like magic. At least in this single example, Nvidia/CDPR have, if not gotten rid of, then vastly minimised the artifacts you normally get when upscaling from such a low res as native 720p.