
- Status

- Not open for further replies.

Roronoa Zoro

Gold Member

remember this moment

Man, that one guy trying to make it all about him: "I knew it, I knew it, I knew it!" "mAh heart!" I'd be like, "Shhhh, I'm trying to watch the show!"

TLZ

Banned

From this?

I think it will concentrate on games, but surely the console itself has to be revealed? If they wait until August it will leak. The console will be helpful to head up all the mainstream press articles.

The console has to be a given at this point. There's nothing more to hide and it's pointless anyway. Leaks will happen since they're in production.

Plus, consoles are usually revealed during E3, which is now.

TLZ

Banned

How long is the event?

According to the event tab on the PS4, 2 hours.

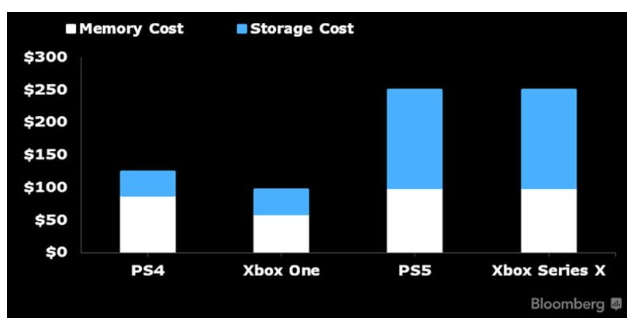

Exactly. Everything will be 'downgraded' to some extent. The SOC is absolutely not the majority of the cost.

Estimate a BOM delta for PS5 and XBSX

XBSX is 52 CUs and 360 mm². RAM is 16 GB of GDDR6 on a 320-bit bus. XBSX uses a standard NVMe SSD. PS5 is 36 CUs (70% of XBSX). RAM is 16 GB on a 256-bit bus. The SSD is 'proprietary', but looks to be based on upcoming higher-spec NVMe drives which will be usable for upgrades. Assuming the SOC... (forum.beyond3d.com)
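To put the excerpt's bus widths in perspective, here is a rough back-of-the-envelope sketch. It assumes both consoles use 14 Gbps GDDR6 (the excerpt gives no memory speed) and computes peak bandwidth only; it says nothing about BOM cost.

```python
# Rough comparison of the PS5 and XBSX memory figures quoted above.
# ASSUMPTION: both use 14 Gbps GDDR6; the per-pin rate is not in the excerpt.

GDDR6_GBPS_PER_PIN = 14.0  # assumed per-pin data rate, Gbit/s


def peak_bandwidth_gb_s(bus_width_bits: int, gbps_per_pin: float = GDDR6_GBPS_PER_PIN) -> float:
    """Peak bandwidth in GB/s: bus width (bits) x per-pin rate (Gbit/s) / 8 bits per byte."""
    return bus_width_bits * gbps_per_pin / 8


xbsx_cus, ps5_cus = 52, 36
cu_ratio = ps5_cus / xbsx_cus  # ~0.69, the "70%" in the excerpt

xbsx_bw = peak_bandwidth_gb_s(320)  # 320-bit bus
ps5_bw = peak_bandwidth_gb_s(256)   # 256-bit bus

print(f"PS5/XBSX CU ratio: {cu_ratio:.2f}")
print(f"XBSX: {xbsx_bw:.0f} GB/s, PS5: {ps5_bw:.0f} GB/s")
```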

Here is how I would design a Series S.

CPU: 8-core/16-thread Zen 2 at 3.6 GHz (3.8 GHz with SMT off). Game logic is the same regardless of graphical settings, so keeping the CPU the same means the underlying game logic will not need to be compromised to make it work on the cheaper offering.

GPU: 4 TFLOP RDNA 2. The machine will target 1080p rather than 4K, so a third of the performance will be enough. This could easily be an 18 CU design (20 in total, 2 disabled) clocked at 1.8 GHz.

RAM: 12 GB of GDDR6 on a 192-bit bus.

Storage: Needs the same I/O as the Series X so as not to hold back next-gen games. It could be 512 GB instead of 1 TB to reduce the cost, and MS could have devs build separate installs for each version, so high-res textures and other assets that won't be used on the S need not be installed, saving disk space.

This would have a cheaper BOM than the Series X, and the sacrifices would only lower the output resolution, since the CPU and I/O remain the same on both consoles.
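A quick sanity check of the GPU and RAM numbers in that spec, as a sketch. It assumes 64 shader ALUs per RDNA 2 CU, each doing one fused multiply-add (2 FLOPs) per clock, a 32-bit interface per GDDR6 chip, and the Series X's published 52 CUs at 1.825 GHz; none of those figures come from the post.

```python
# Sanity check of the hypothetical Series S spec.
# ASSUMPTIONS: 64 ALUs per RDNA 2 CU, 1 FMA (2 FLOPs) per ALU per clock;
# 32-bit interface per GDDR6 chip; Series X at 52 CUs @ 1.825 GHz.


def tflops(cus: int, clock_ghz: float, alus_per_cu: int = 64) -> float:
    """Peak FP32 TFLOPS = CUs x ALUs per CU x 2 FLOPs (FMA) x clock in GHz / 1000."""
    return cus * alus_per_cu * 2 * clock_ghz / 1000


def gddr6_layout(bus_width_bits: int, total_gb: int) -> tuple[int, float]:
    """Return (chip count, GB per chip) for a bus built from 32-bit GDDR6 chips."""
    chips = bus_width_bits // 32
    return chips, total_gb / chips


series_s = tflops(18, 1.8)    # hypothetical 18 CU part at 1.8 GHz
series_x = tflops(52, 1.825)  # Series X, published figures
pixel_ratio = (3840 * 2160) / (1920 * 1080)  # 4K vs 1080p pixel count

chips, gb_per_chip = gddr6_layout(192, 12)

print(f"Series S: {series_s:.2f} TF, Series X: {series_x:.2f} TF, ratio {series_s / series_x:.2f}")
print(f"4K/1080p pixel ratio: {pixel_ratio:.0f}")
print(f"192-bit bus, 12 GB: {chips} chips x {gb_per_chip:.0f} GB")
```

On these assumptions the hypothetical S lands at roughly a third of the X's compute while 1080p has a quarter of 4K's pixels; that gap is presumably the margin behind "a third of the performance will be enough".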

Thirty7ven

Banned

It's today.

V has come to.


Roronoa Zoro

Gold Member

I hope that flute guy from E3 just plays for 2 hours

Filip JDM

Neo Member

I tried not to hype myself too much for GT, but I'm not holding back anymore; my hype levels have gone through the roof. Show us those ray-traced GT graphics, PD!

Little Chicken

Banned

I hope Sony are also committed to 60fps first-party games.

CrustyBritches

Gold Member

I'm curious whether the retail design will end up having any similarities with the dev kits. Like a smaller, more sleek version with the same "V" cooling setup. Hopefully they'll show it.

Neo Blaster

Member

Sony: We're not going to E3.

Sony: ...ends up being the only one at would-be-E3 week.

Not just that, June 11th was the date of the epic Sony presentation at E3 2013.


THE:MILKMAN

Member

I tried not to hype myself too much for GT, but I'm not holding back anymore; my hype levels have gone through the roof. Show us those ray-traced GT graphics, PD!

Just to add:

Two sets of 'eyes' now...

Dibils2k

Member

Know who else stopped going to E3?

When you get so successful you are the event, you don't need someone to tell you when your stage time is; you just choose your own time.

or maybe because they make 1 game every 6 years

Rusco Da Vino

Member

7 years ago

HawarMiran

Banned

After the event, Aaron Greenberg be like: "But did you guys know that the X will do this and that? Hello, anybody?"

ArcaneNLSC

Member

JonTheGod

Neo Member

Man, so many great franchises, you really can't think of them all. Yet if there is PS2 BC, I will revisit GT4; that and GT3 are right on my desk, as I plugged in my PS2 some time ago to play them...

Where is the general consensus on full BC with PS1-3 these days? I'm hoping beyond hope that it's real and happening. That gap on the slide is just too tantalising.

Something I made (it's quite professional so I had to caveat in case you thought it official):

splattered

Member

After the event, Aaron Greenberg be like: "But did you guys know that the X will do this and that? Hello, anybody?"

Sadly you are probably correct...

Or the whole Xbox team might let them have the spotlight and congratulate them on a great reveal (it does happen sometimes).

I guess this is effectively Microsoft letting Sony go first this time around, eh?

I wonder how much info will be revealed, like pricing etc.

Ar¢tos

Member

Here is how I would design a Series S.

CPU: 8-core/16-thread Zen 2 at 3.6 GHz (3.8 GHz with SMT off). Game logic is the same regardless of graphical settings, so keeping the CPU the same means the underlying game logic will not need to be compromised to make it work on the cheaper offering.

GPU: 4 TFLOP RDNA 2. The machine will target 1080p rather than 4K, so a third of the performance will be enough. This could easily be an 18 CU design (20 in total, 2 disabled) clocked at 1.8 GHz.

RAM: 12 GB of GDDR6 on a 192-bit bus.

Storage: Needs the same I/O as the Series X so as not to hold back next-gen games. It could be 512 GB instead of 1 TB to reduce the cost, and MS could have devs build separate installs for each version, so high-res textures and other assets that won't be used on the S need not be installed, saving disk space.

This would have a cheaper BOM than the Series X, and the sacrifices would only lower the output resolution, since the CPU and I/O remain the same on both consoles.

Scaling is not 100% linear. Cutting the GPU to 1/3 will severely impact RT and GPGPU abilities.

The memory bus has to remain the same as on the XSX, or developers will have a ton of work recoding all their memory management.

HawarMiran

Banned

Sadly you are probably correct...

Or the whole Xbox team might let them have the spotlight and congratulate them on a great reveal (it does happen sometimes).

I guess this is effectively Microsoft letting Sony go first this time around, eh?

I wonder how much info will be revealed, like pricing etc.

I think they will only show the console (just the exterior). No price, no release date.

TwistedSyn

Member

PlayStation 5 Former Principal Software Engineer Suggests Next-Gen Games Frame Rate Could Be Constrained By HDR and TVs

PlayStation 5 former principal software engineer recently commented on next-gen games running at 30 FPS and the reasons behind it (wccftech.com)

More "concerns" about next-gen games running at 30fps: it's an aesthetic choice to be "filmic", like pixel art, but also constrained by what TVs people have. A popular line of cheap TVs can only do 4:2:0 HDR @ 4K 60Hz. If a game pushes HDR, 4:2:2 @ 4K 30Hz helps more people see it.
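The trade-off in that tweet can be illustrated with a rough data-rate calculation. This sketch assumes 10-bit HDR and counts only active pixels (no blanking intervals or HDMI encoding overhead), so the numbers are only for comparing modes, not for checking any particular TV's limit.

```python
# Rough active-pixel data rates for the two 4K HDR modes in the tweet.
# ASSUMPTIONS: 10-bit HDR; blanking and link-encoding overhead ignored.

# Samples per pixel: 1 luma sample plus the averaged chroma samples.
SAMPLES_PER_PIXEL = {"4:4:4": 3.0, "4:2:2": 2.0, "4:2:0": 1.5}


def data_rate_gbps(width: int, height: int, fps: int, subsampling: str, bit_depth: int = 10) -> float:
    """Uncompressed active-video data rate in Gbit/s."""
    bits_per_pixel = SAMPLES_PER_PIXEL[subsampling] * bit_depth
    return width * height * fps * bits_per_pixel / 1e9


rate_420_60 = data_rate_gbps(3840, 2160, 60, "4:2:0")
rate_422_30 = data_rate_gbps(3840, 2160, 30, "4:2:2")

print(f"4K60 4:2:0: {rate_420_60:.2f} Gbit/s")
print(f"4K30 4:2:2: {rate_422_30:.2f} Gbit/s")
```

On that simplification, 4:2:2 at 30 Hz needs two thirds of the data of 4:2:0 at 60 Hz, which is the kind of headroom the tweet is pointing at.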

I wouldn't listen to a word this website has to say.

Moses85

Member

Where is the general consensus on full BC with PS1-3 these days? I'm hoping beyond hope that it's real and happening. That gap on the slide is just too tantalising.

Something I made (it's quite professional so I had to caveat in case you thought it official):

Sadly you are probably correct...

Or the whole Xbox team might let them have the spotlight and congratulate them on a great reveal (it does happen sometimes).

I guess this is effectively Microsoft letting Sony go first this time around, eh?

I wonder how much info will be revealed, like pricing etc.

No pricing today, it seems. Neither wants to say anything on price first and be the "bad guy". Whoever goes first gets the crap for the new 499.99 pricing.

Little Chicken

Banned

Somebody is going to have to pull the pricing trigger soon; it's almost July.

I suspect it'll be via controlled leaks to gauge reaction.


Setsuna Mudou

Member

I wouldn't listen to a word this website has to say.

WCCFTech is absolute hot garbage of a website. Does anyone take them seriously at all in this day and age??

Neo Blaster

Member

remember this moment

I expect nothing less today, at least for Sony's FP games. Cut the cinematic trailer crap, just gimme gameplay reveals like GOW and HZD had.

ToadMan

Member

Which is a shame, because it gave us this

I'd say "that didn't age well" - but I'm guessing it washed off before it became embarrassing

Roronoa Zoro

Gold Member

Of course now there's a PSN hack story ughhhhh

ToadMan

Member

or maybe because they make 1 game every 6 years

1 great game every 6 years? I think they're happy with that.

Many studios don't manage to make a single one...

Scaling is not 100% linear. Cutting the GPU to 1/3 will severely impact RT and GPGPU abilities.

Memory bus has to remain the same as XSX or developers will have a ton of work recoding all the memory management.

Go read the TechPowerUp review of the 5500 XT. It has 4 GB of VRAM on a 128-bit bus, and across all the games it averages 69 fps at 1080p with max in-game settings. The 5700 XT, with 8 GB of VRAM on a 256-bit bus, runs the same games with the same settings at 4K at an average of 49 fps. The gap between the 5500 XT and the 5700 XT is smaller than the gap between the rumoured Series S and Series X.

As long as the CPU and SSD have the same performance, games written to the DirectX 12 Ultimate API should scale pretty well on resolution alone.
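The argument can be made concrete by converting the quoted TechPowerUp averages into pixels per second. This is a simplification: it treats the fps averages as if frame cost scaled only with pixel count, which is exactly the assumption being debated in this thread.

```python
# Pixel throughput implied by the averages quoted in the post (69 fps @ 1080p, 49 fps @ 4K).


def pixels_per_second(width: int, height: int, fps: float) -> float:
    """Rendered pixels per second at a given resolution and average frame rate."""
    return width * height * fps


rx5500xt = pixels_per_second(1920, 1080, 69)  # 5500 XT, 1080p average
rx5700xt = pixels_per_second(3840, 2160, 49)  # 5700 XT, 4K average

print(f"5500 XT: {rx5500xt / 1e6:.0f} Mpx/s")
print(f"5700 XT: {rx5700xt / 1e6:.0f} Mpx/s")
print(f"ratio: {rx5700xt / rx5500xt:.2f}")
```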

EDIT: The memory bandwidth available to the CPU on a 192-bit bus already exceeds what PC CPUs get, so CPU workloads should be unaffected.
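A rough sketch of that comparison. The 14 Gbps GDDR6 speed and the dual-channel DDR4-3200 desktop baseline are assumptions, not figures from the post, and on a console the CPU shares this memory pool with the GPU, so it never gets the full figure.

```python
# Peak bandwidth of a 192-bit GDDR6 pool vs. a typical desktop DDR4 setup.
# ASSUMPTIONS: 14 Gbps GDDR6; dual-channel DDR4-3200 (two 64-bit channels) as the PC baseline.


def peak_bandwidth_gb_s(bus_width_bits: int, transfer_rate_gt_s: float) -> float:
    """Peak bandwidth in GB/s = bus width in bytes x billions of transfers per second."""
    return bus_width_bits / 8 * transfer_rate_gt_s


console_pool = peak_bandwidth_gb_s(192, 14.0)    # shared by CPU and GPU on a console
desktop_ddr4 = peak_bandwidth_gb_s(2 * 64, 3.2)  # dedicated to the CPU on a PC

print(f"192-bit GDDR6: {console_pool:.1f} GB/s, dual-channel DDR4-3200: {desktop_ddr4:.1f} GB/s")
```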


Ar¢tos

Member

Go read the TechPowerUp review of the 5500 XT. It has 4 GB of VRAM on a 128-bit bus, and across all the games it averages 69 fps at 1080p with max in-game settings. The 5700 XT, with 8 GB of VRAM on a 256-bit bus, runs the same games with the same settings at 4K at an average of 49 fps. The gap between the 5500 XT and the 5700 XT is smaller than the gap between the rumoured Series S and Series X.

As long as the CPU and SSD have the same performance, games written to the DirectX 12 Ultimate API should scale pretty well on resolution alone.

EDIT: The memory bandwidth available to the CPU on a 192-bit bus already exceeds what PC CPUs get, so CPU workloads should be unaffected.

What dafuq does that have to do with anything?

I'm talking about how that layout increases the amount of work for devs doing Lockhart ports; I never even mentioned performance.


GlassAwful

Member

(It is just my opinion)

Reality will always be below expectations. I don't think they'll show all their cards today. I'm not saying it because I know it; I'm saying it because at the business level it would be the most logical. Although they may also want to make up for so much delay "in some way".

I would love for them to start the presentation with this, playable in real time (and improved with MegaScans).

It's sad to think we went an entire generation and nothing even approached that.

It's sadder to think that the best we can hope for next gen is another iterative sequel to GT or Forza and probably a modest bump in graphics and physics to hit 120 fps at 4K.

Where's my AAA wrecking rally racer with soft body physics and deformation in VR?! Bring back San Francisco Rush or Burnout!

Omega Supreme Holopsicon

Banned

Can they? I mean, unless Sony pulled some black magic with their ray tracing solution, I'm not seeing how Polyphony can be aiming at 4K 120fps. At least not while using ray tracing.

I tried not to hype myself too much for GT, but I'm not holding back anymore; my hype levels have gone through the roof. Show us those ray-traced GT graphics, PD!

I'm still hoping there's PowerVR ray tracing secret sauce and that it gives them the edge.

Go read the TechPowerUp review of the 5500 XT. It has 4 GB of VRAM on a 128-bit bus, and across all the games it averages 69 fps at 1080p with max in-game settings. The 5700 XT, with 8 GB of VRAM on a 256-bit bus, runs the same games with the same settings at 4K at an average of 49 fps. The gap between the 5500 XT and the 5700 XT is smaller than the gap between the rumoured Series S and Series X.

As long as the CPU and SSD have the same performance, games written to the DirectX 12 Ultimate API should scale pretty well on resolution alone.

EDIT: The memory bandwidth available to the CPU on a 192-bit bus already exceeds what PC CPUs get, so CPU workloads should be unaffected.

What I'm worried about is, say, a game using heavy amounts of ray tracing features that runs at 1440p or 1080p. If, say, reflections are made critical to gameplay, the game would likely have to run without ray tracing on the Series S.

Or what about GPGPU physics? A game may be made using 4 TFLOPS of GPGPU, but that would leave no room for other workloads on the Series S.

Many Sony first-party games were said to use heavy GPGPU, so we will see how well their games scale across GPUs in the PC space.
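The GPGPU concern can be sketched with a toy frame-time model: part of the GPU work is resolution-independent (for example GPGPU physics), and part scales with pixel count. Every workload number below is hypothetical, the 12 TFLOPS Series X figure is rounded, and the model assumes perfect GPU utilisation.

```python
# Toy frame-time model: fixed (resolution-independent) GPU work + per-pixel work.
# ALL workload numbers are hypothetical; assumes perfect GPU utilisation.


def frame_time_ms(fixed_gflop: float, per_mpixel_gflop: float,
                  width: int, height: int, tflops: float) -> float:
    """Frame time in ms. 1 TFLOPS = 1 GFLOP per ms, so GFLOP / TFLOPS gives ms."""
    mpixels = width * height / 1e6
    return (fixed_gflop + per_mpixel_gflop * mpixels) / tflops


for fixed in (0.0, 60.0):  # without / with a hypothetical 60 GFLOP per frame of GPGPU physics
    x = frame_time_ms(fixed, 10.0, 3840, 2160, 12.0)  # Series X at 4K
    s = frame_time_ms(fixed, 10.0, 1920, 1080, 4.0)   # rumoured Series S at 1080p
    print(f"fixed={fixed:>4.0f} GFLOP: Series X 4K {x:.1f} ms, Series S 1080p {s:.1f} ms")
```

With no fixed cost, the 4 TFLOPS machine at 1080p comes out ahead; add a fixed per-frame GPGPU load and it falls well behind, because lowering the resolution only shrinks the per-pixel part.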

Neo Blaster

Member

or maybe because they make 1 game every 6 years

If my game kept showing up in best-seller lists years and years later, I wouldn't bother making more either.

What dafuq does that have to do with anything?

I'm talking about how that layout increases the amount of work for devs doing Lockhart ports; I never even mentioned performance.

It matters because if all devs have to do is set the resolution to 1080p on the S and 4K on the X, then it's not a lot of work. Reviews of the same GPU architecture in different configurations on equally performing hardware (CPU and I/O) show that simply scaling by resolution is possible.

XenoRyujin

Member

Hawking Radiation

Wants to watch an Event Horizon porno

Watch it in the morning, then again at work during a very long bathroom break

Tomorrow I'm taking the longest dump in human history and I frankly don't give a fuck.

DrDamn

Member

Of course now there's a PSN hack story ughhhhh

Where?

Hawking Radiation

Wants to watch an Event Horizon porno

Going to bed now.

I expect my knickers to be blown clean off by the time I wake up later for work.

Soothe me with your sexy voice, Mark.

Take me.


Gamernyc78

Banned

I feel like with Amazon loading the dummy products and everything, I'm going all in and saying we will see the system, pricing, preorders, everything. Sony's going to blow their entire load.

I doubt we'll get a price, but I think they have so much kept from us that they'll still be able to do at least two more shows with substance, even if they did talk about it and touch on features. If today is mostly games, we can have another show to deep dive into features, the UI, hidden controller features, and some interesting talk on how their SSD solution is revolutionary.

It's maybe too early to talk about PSVR2, but that's a whole show or two as well. I suspect we won't hear anything on that until a year after the PS5 launches, though.

ArcaneNLSC

Member

I suspect regarding "seeing" the console we might get a repeat of this

Ar¢tos

Member

It matters because if all devs have to do is set the resolution to 1080p on the S and 4K on the X, then it's not a lot of work. Reviews of the same GPU architecture in different configurations on equally performing hardware (CPU and I/O) show that simply scaling by resolution is possible.

Sure, all the physics calculations running on double the CUs will magically halve themselves with no impact on the game. Same with all the RT calculations...

Devs will just press the "reduce to 1080p" button and it's done.

Andodalf

Banned

Of course now there's a PSN hack story ughhhhh

2011 Flashbacks ... plz no

Darius87

Member

quick question:

At Road to PS5, Cerny said they had to cap the GPU at 2230 MHz to keep the GPU logic running, but that it could go even higher with their power supply strategy. Does that mean no GPU could run such high clocks because the logic fails? Or does it depend on the CU count? GPU architecture? Power supply? Or other aspects?
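For reference, these are the standard first-order textbook relations behind this kind of question (not Sony's figures): dynamic switching power of CMOS logic scales with the activity factor α, the switched capacitance C, the supply voltage V and the clock f, while the maximum stable clock is bounded by the slowest (critical) register-to-register path delay t_crit.

$$P_{\text{dyn}} \approx \alpha \, C \, V^{2} f, \qquad f_{\max} \approx \frac{1}{t_{\text{crit}}}$$

Raising the voltage shortens t_crit somewhat, which is why higher clocks usually need higher voltage and power rises much faster than linearly with frequency; past some point the critical paths simply stop meeting timing, regardless of how much power is available.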


Setsuna Mudou

Member

I doubt we'll get a price, but I think they have so much kept from us that they'll still be able to do at least two more shows with substance, even if they did talk about it and touch on features. If today is mostly games, we can have another show to deep dive into features, the UI, hidden controller features, and some interesting talk on how their SSD solution is revolutionary.

It's maybe too early to talk about PSVR2, but that's a whole show or two as well. I suspect we won't hear anything on that until a year after the PS5 launches, though.

No way they will show the price right now; what possible incentive would they have to do that? If the games impress, people won't care about the price anymore, at least not the most hardcore gamers.

I'm pretty sure they are happy to let MS go first and then undercut them if need be.

FranXico

Member

Quick question:

At Road to PS5, Cerny said they had to cap the GPU at 2230 MHz to keep the GPU logic running, but that it could go even higher with their power supply strategy. Does that mean no GPU could run such high clocks because the logic fails? Or does it depend on the CU count? GPU architecture? Power supply? Or other aspects?

If I had to guess, CU synchronization becomes harder at higher frequencies, which is probably the logic failure he was referring to. More so the more CUs you have, in fact.
