Black_Stride

do not tempt fate do not constrain Wonder Woman's thighs do not do not

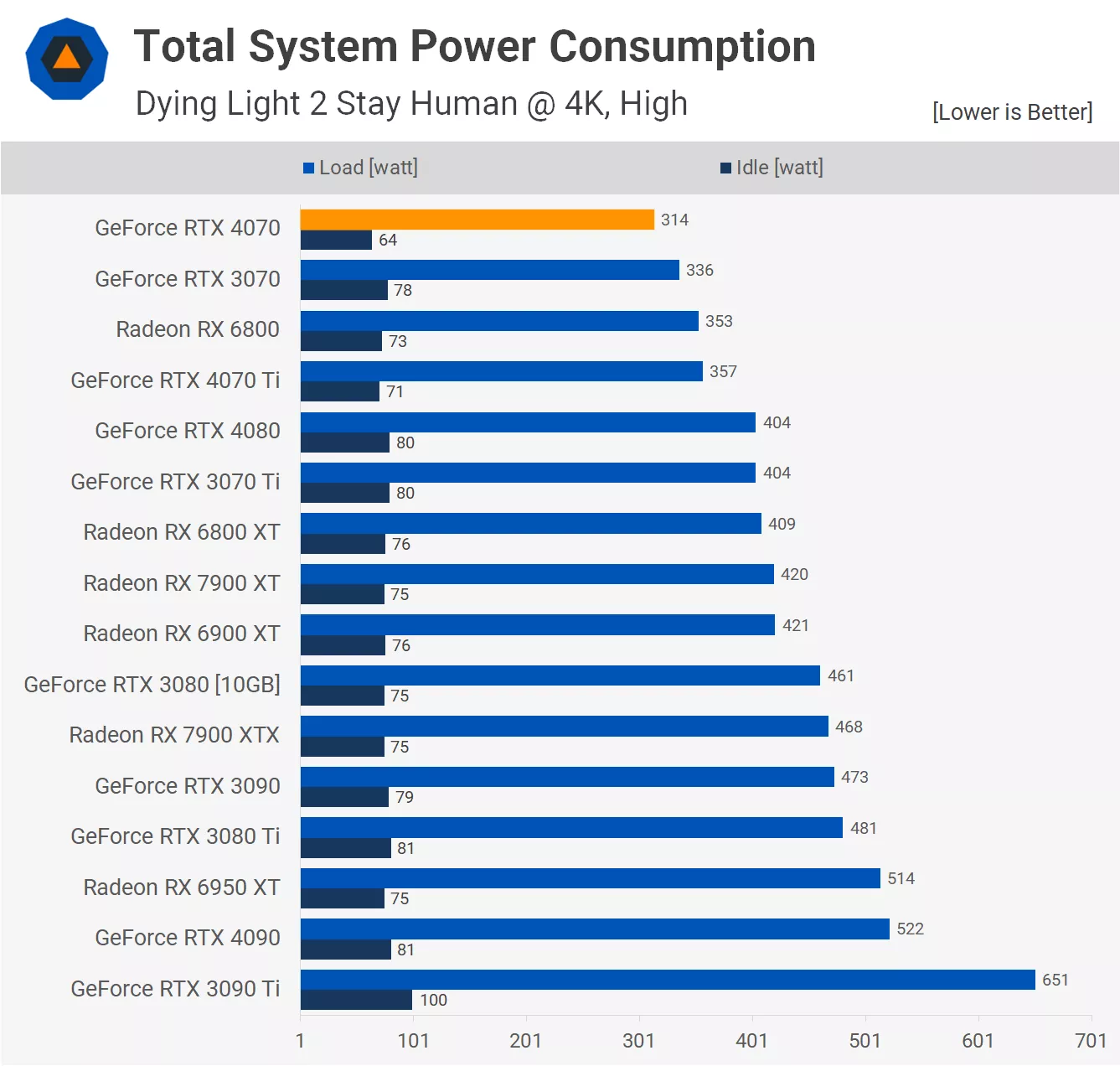

The 6950 XT matches the 4070 in RT... at least in Control, which I consider the baseline; that PT from Cyberpunk is next-gen shit. Roughly matching the 6950 XT in raster.

But the 7800 XT would lose to the 4070 in RT, 1440p upscaling, and efficiency, making the 4070 better than the 7800 XT to me in pretty much every way.

I need more RT performance more than I need 10% more raster.

If we assume the advancements made in RT trickle down to the 7800XT, then it should outshine the 4070 in RT too.

So I'm not really sure what advantages the 4070 would have over it beyond CUDA/OptiX.
