KungFucius

King Snowflake

Thank god. I can keep my 4090 and relax. This minor upgrade is what I was hoping for: we don't need to keep upgrading, since 4K is basically all we need.

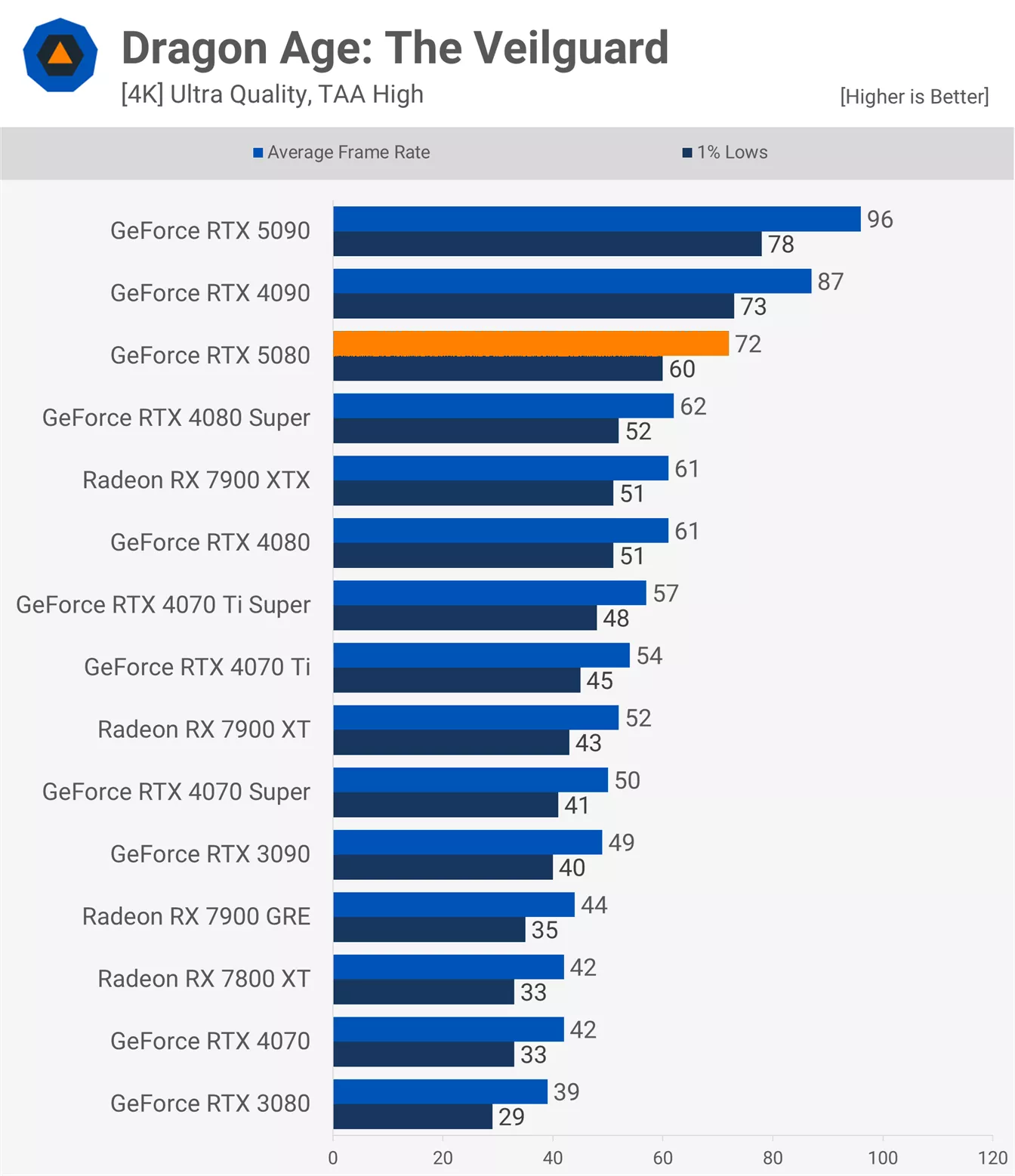

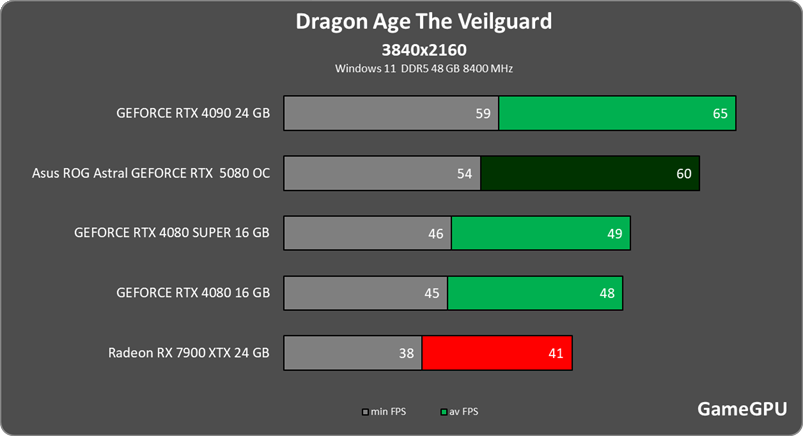

The 5090 is a bad upgrade from the 4090: it is 25% more expensive for a ~40% improvement, and it arrives 2+ years later. The prices are absurd too. Nvidia is basically fucking their AIB partners over and forcing them to charge 15-25% over MSRP for minor tweaks.
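For anyone who wants to check the math, here is a rough sketch of the performance-per-dollar change using only the two figures quoted above (25% higher price, ~40% more performance). The function name `perf_per_dollar_gain` is made up for illustration, and the inputs are the poster's estimates, not measured benchmarks.

```python
def perf_per_dollar_gain(price_increase: float, perf_increase: float) -> float:
    """Relative change in performance per dollar.

    Both arguments are fractions, e.g. 0.25 for a 25% increase.
    """
    return (1 + perf_increase) / (1 + price_increase) - 1


# Figures taken from the comment above (assumed, not benchmarked).
gain = perf_per_dollar_gain(price_increase=0.25, perf_increase=0.40)
print(f"Performance per dollar changes by about {gain:.0%}")
```

With those inputs the gain works out to roughly 12% over 2+ years, which is the gist of the "bad upgrade" argument.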
