And it's still one of the latest game releases. Maybe that also says something about ray tracing support and its importance at this point in time.

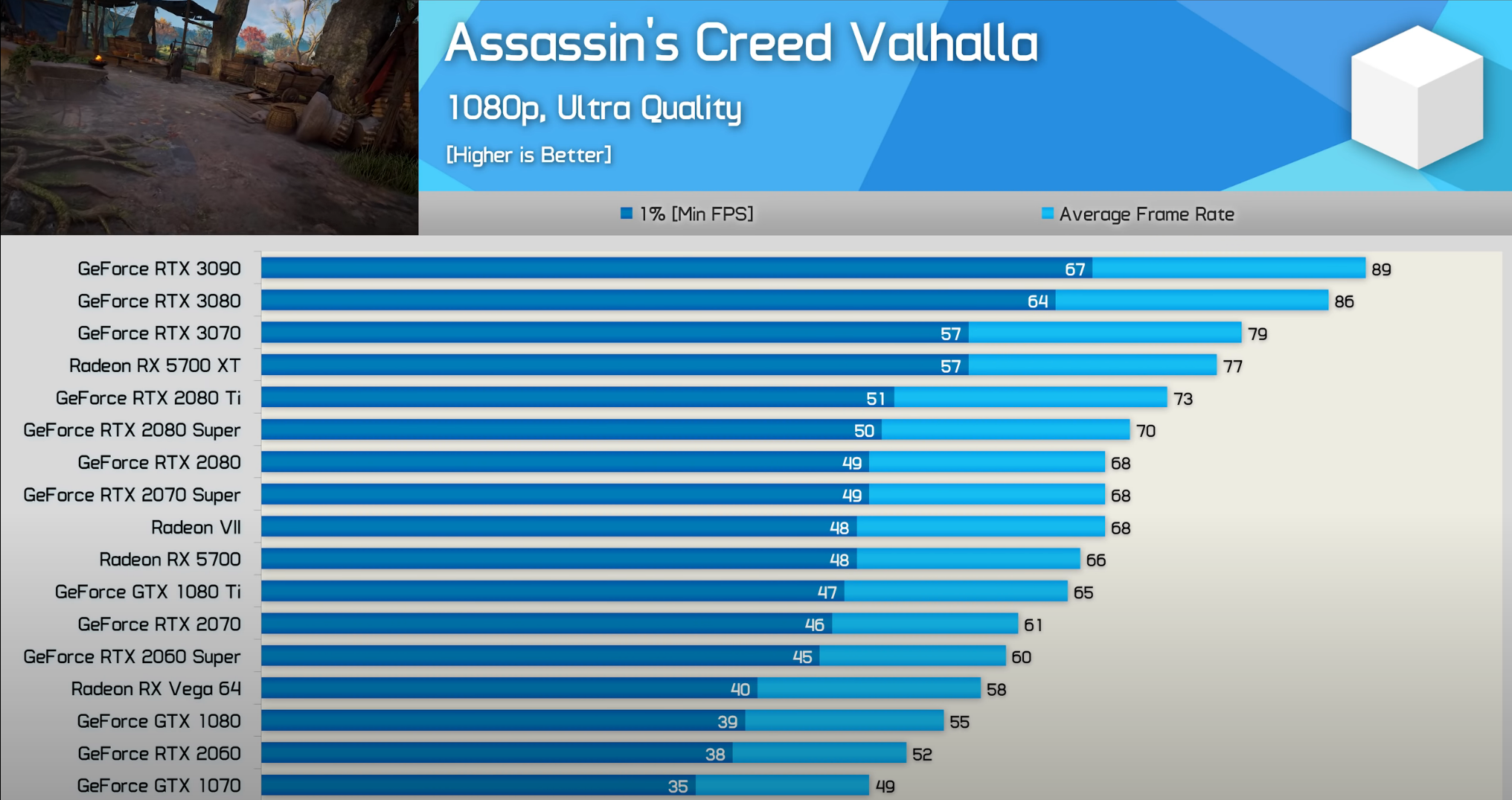

And it will continue to beat out the 2060S in performance, and beat out the 2070S in value. The 5700XT has been the best value card since it was released. Live with it.

It's always the same story. The goal post is always shifted to fit nVidia and to dismiss AMD.

AMD has 4GB of RAM? Meh, too little. We need 6GB at least, otherwise it won't age.

AMD has 16GB of RAM? Meh, 10GB is enough, it's about RT and DLSS!

AMD has async compute? Meh, not important since barely any game uses it. It's about power consumption!

AMD has better power consumption? Meh. Not important. It's about RT and DLSS, despite only a handful of games using them!

My friend, I am sure you have a 5700XT, judging by how much you are defending it, and I'm sorry I have to be the one who tells you this: this card won't keep up at all, especially from a developer perspective. I highly recommend selling the card, if you can.

With Sampler Feedback Streaming (SFS), the RTX 2060 Super you mentioned has around 2.5-3.5x the effective VRAM of cards without SFS (basically, the 5700XT). The same applies to the next-gen consoles, Ampere and RDNA2. You can read up on what this technology does here: https://microsoft.github.io/DirectX-Specs/d3d/SamplerFeedback.html

Simply speaking, it allows for much finer control over which texture MIP levels are kept in memory, meaning your VRAM can be used far more efficiently than before. That will result in much higher resolution textures on DX12 Ultimate compatible cards without the need to increase physical VRAM, and it eliminates stuttering and pop-in. Basically, a 2060 Super has around 20 GB or more of effective VRAM compared to your 5700XT. How do you plan to compensate for that?
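To put rough numbers on why MIP-level residency matters, here is a minimal back-of-the-envelope sketch. It is not the D3D12 Sampler Feedback API, just arithmetic on mip chain sizes. The helper names (`mip_sizes`, `resident_bytes`) and the 4 bytes per texel (uncompressed RGBA8) are assumptions for illustration, and it does not by itself confirm the 2.5-3.5x figure above.

```python
def mip_sizes(width, height, bytes_per_texel=4):
    """Byte size of every mip level, from mip 0 down to 1x1.

    bytes_per_texel=4 assumes uncompressed RGBA8 (an illustrative choice).
    """
    sizes = []
    w, h = width, height
    while True:
        sizes.append(w * h * bytes_per_texel)
        if w == 1 and h == 1:
            break
        # Each mip level halves both dimensions, clamped at 1 texel.
        w, h = max(1, w // 2), max(1, h // 2)
    return sizes


def resident_bytes(width, height, finest_resident_mip, bytes_per_texel=4):
    """Memory needed if only mips >= finest_resident_mip stay in VRAM."""
    return sum(mip_sizes(width, height, bytes_per_texel)[finest_resident_mip:])


if __name__ == "__main__":
    full = resident_bytes(4096, 4096, 0)
    without_mip0 = resident_bytes(4096, 4096, 1)
    print(f"full chain:   {full / 2**20:.1f} MiB")
    print(f"without mip0: {without_mip0 / 2**20:.1f} MiB")
    print(f"ratio:        {full / without_mip0:.2f}x")
```

The point of the sketch: mip 0 alone is about three quarters of a texture's footprint, so keeping the finest levels out of memory when nothing on screen samples them saves a lot. Real streaming works per tile rather than per whole mip level, so actual savings depend on the scene.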

Next, we have mesh shading. As I said, that is a key technology, as it replaces the current vertex shader pipeline and allows for much, much finer LOD and higher geometry detail, similar to what you saw in the PS5 Unreal Engine 5 demo. On PS5, it uses Sony's customized next-generation Geometry Engine, which is far beyond the standard GE from RDNA1. On the PC, however, Nanite will most likely use mesh shaders, which the 5700XT does not support, meaning it is incapable of handling that much geometry, or it will have huge performance issues when trying to emulate it in software.

VRS (Variable Rate Shading) can give you a 10-30% or even higher performance boost at almost no image quality cost.
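As a rough illustration of where the VRS savings come from, the sketch below counts pixel shader invocations when part of the frame is shaded at a coarser rate. The function name `shading_invocations` and the 50% coarse fraction are hypothetical, chosen only for the example. Fewer invocations do not translate one-to-one into frame time, since that depends on how shading-bound the frame is.

```python
def shading_invocations(width, height, coarse_fraction, rate_x=2, rate_y=2):
    """Estimate pixel shader invocations for one frame.

    coarse_fraction: share of the screen (0.0-1.0) shaded at the coarse
    rate, where one invocation covers a rate_x by rate_y block of pixels.
    The rest of the screen is shaded at the normal 1x1 rate.
    """
    if not 0.0 <= coarse_fraction <= 1.0:
        raise ValueError("coarse_fraction must be between 0 and 1")
    pixels = width * height
    fine = pixels * (1.0 - coarse_fraction)
    coarse = pixels * coarse_fraction / (rate_x * rate_y)
    return fine + coarse


if __name__ == "__main__":
    baseline = shading_invocations(1920, 1080, 0.0)
    with_vrs = shading_invocations(1920, 1080, 0.5)  # hypothetical: half the screen at 2x2
    saved = 1.0 - with_vrs / baseline
    print(f"invocations saved: {saved:.1%}")
```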

Next, there is Raytracing. Raytracing is not a gimmick. It saves so much time for devs, and it gets more efficient each day. It's going to be an integral part of next-gen games. Even the consoles support it in decent fashion, and it is the future of rendering; don't let anyone tell you otherwise. Nvidia recently released their RTXGI SDK, which allows for dynamic GI using light probes updated via Raytracing, and it doesn't destroy performance; it is extremely efficient. This means developers don't have to pre-bake lighting anymore, which saves so much time and cost when developing games. RTXGI will work on any DXR capable GPU, including consoles and RDNA2. However, the 5700XT is not even capable of emulating it in software (well, it could be if AMD enabled DXR support), meaning a game using RTXGI as its GI solution won't even boot up on a 5700XT. Given that the RT capable userbase is growing each day with Turing, Ampere, Pascal, RDNA2 and the consoles, this is likely a non-issue for devs. If AMD suddenly decides to implement DXR support for the 5700XT, you could still play the game, but with much worse performance and visual quality than on the DX12U capable GPUs due to the lack of hardware acceleration for Raytracing.

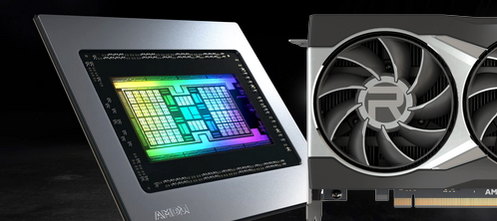

Remember, everything I talked about also applies to AMD's new RDNA2 architecture. It fully supports these features as well. Basically, RDNA2 is a much, much bigger jump than you might realize.

Your card might be fine for a while with cross generation games, but once next gen kicks off, the 5700XT is dead in the water.

Hope that clears it up.