Unknown Soldier

Member

Ah yes the AMD cycle

> He's not the only one who's heard RDNA4 sucks.
>
> Maybe UDNA will finally be the ticket...

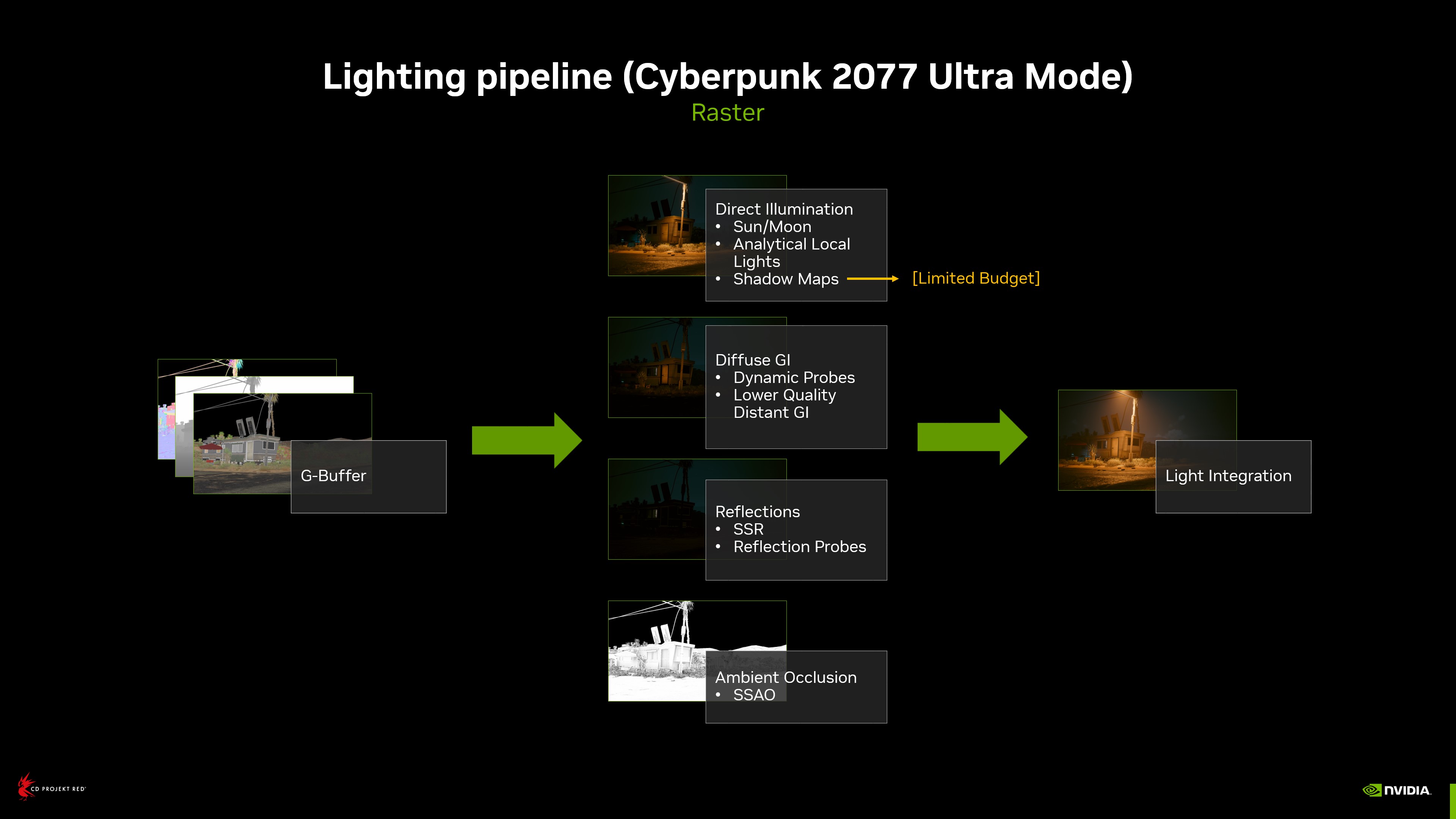

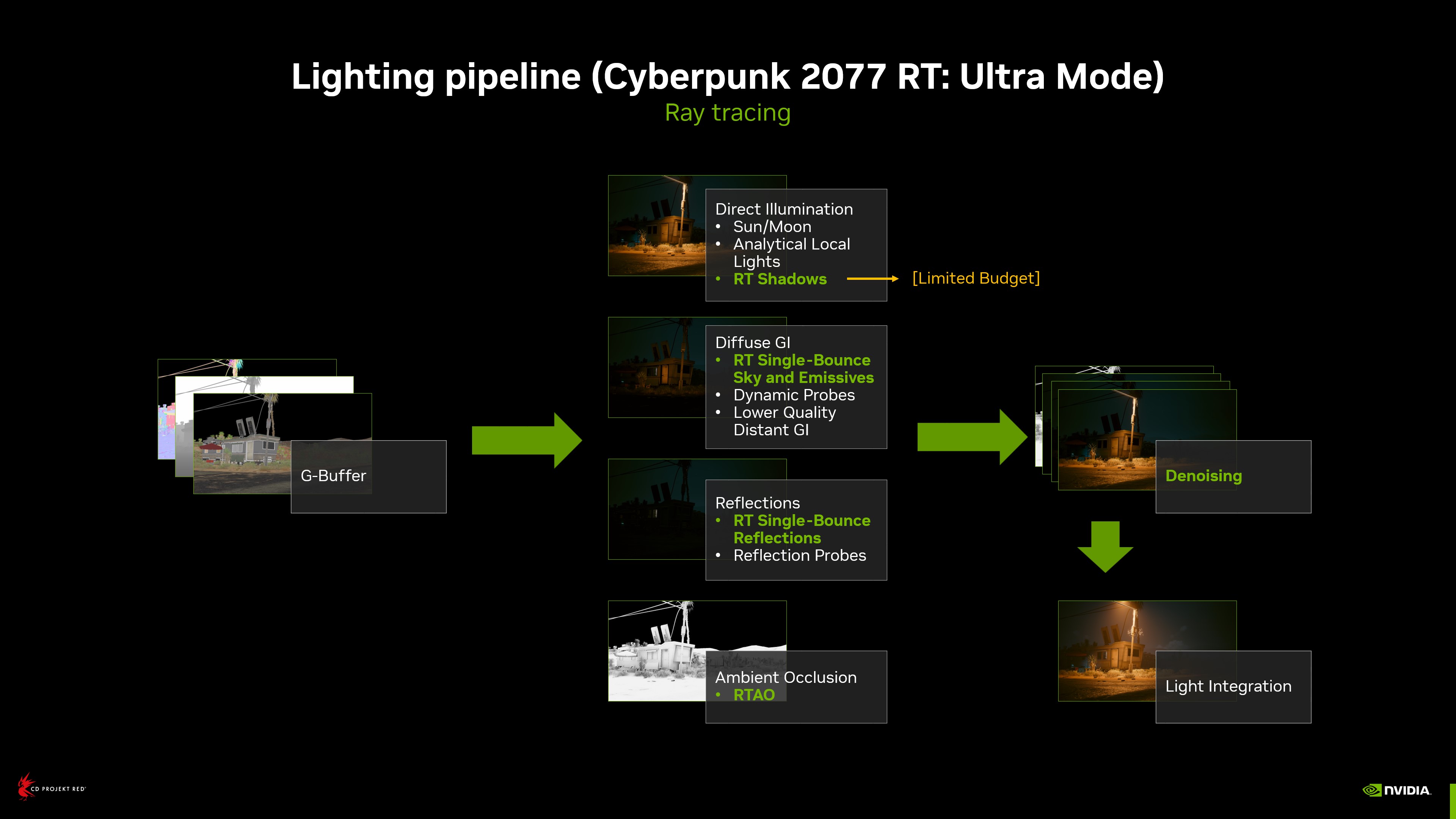

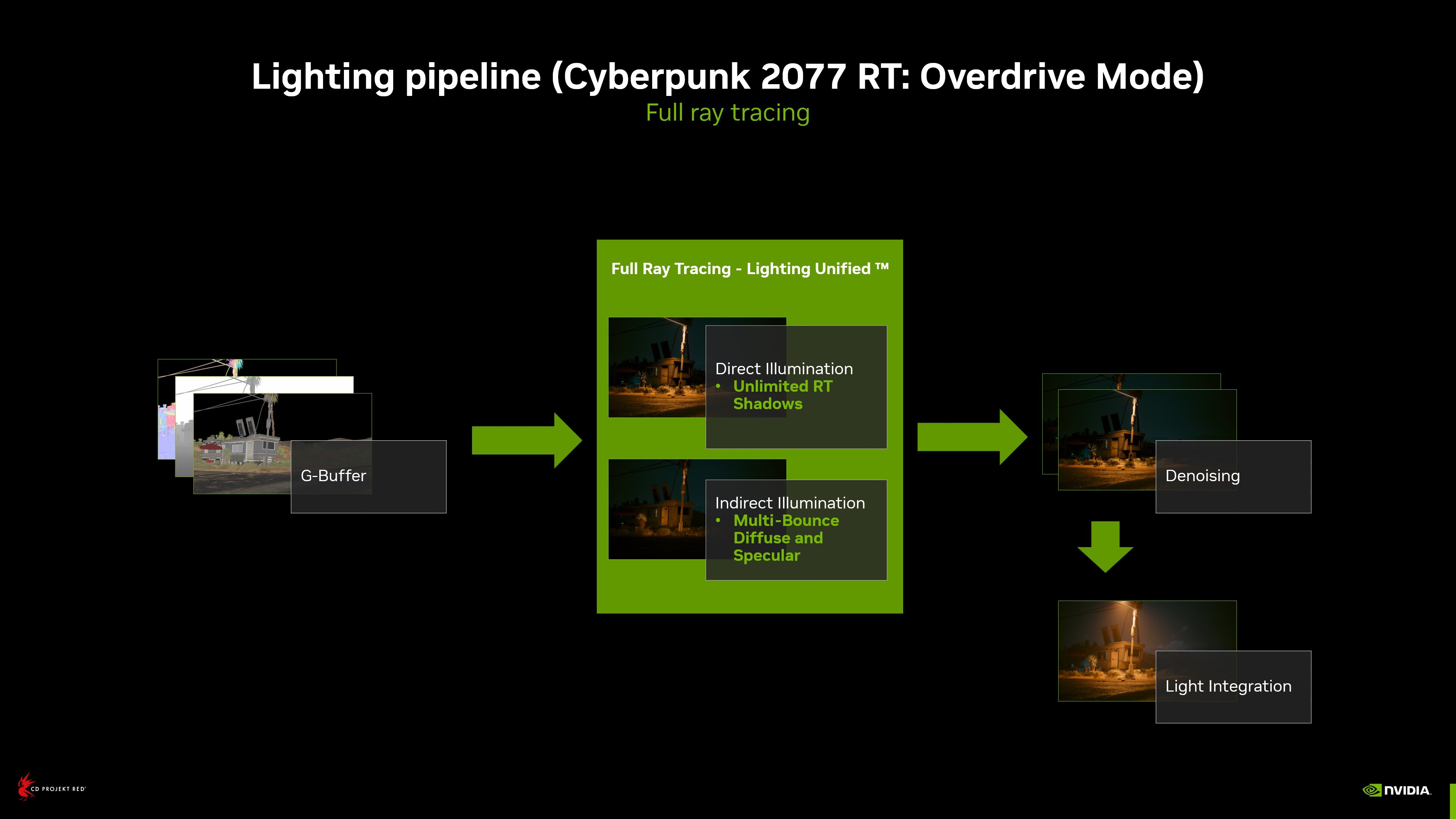

You wrote way too much to say simply that AMD's approach to RT kind of copes with the basic RT reflections/shadows seen in console-level RT, but completely falls over and dies when forced to do the really advanced path tracing that the highest-end PC games are doing (Cyberpunk 2077, Portal RTX, Star Wars Outlaws, Black Myth Wukong).

> Because averaging is insanely misleading and doesn't take the distribution into account. The 7900 XTX has a sizeable advantage in rasterization, on the order of 15%. For it to end up neck-and-neck with the 3090 Ti on average means its 15% lead gets completely wiped out by a random sample of like 5 games. However, the performance impact of ray tracing varies enormously on a per-game basis, as does the implementation, so just taking an average of a small sample of games is incredibly flawed. And you two are also incorrect in stating that people said the 3090 and 3090 Ti sucked following the release of the 7900 XTX. They still obliterate it when you go hard with the ray tracing.
>
> In Alan Wake 2, the 3090 Ti outperforms it by a whopping 19% with hardware ray tracing turned on. This is despite the 7900 XTX being 18% faster without it.
>
> In Black Myth Wukong, the 3090 Ti leads it by 46% at 1080p, max settings. Using Techspot because it doesn't seem like Techpowerup tested AMD cards.
>
> In Rift Apart, it loses by 21%.
>
> In Cyberpunk with path tracing, the 3090 Ti is 2.57x faster. However, I think AMD got an update that boosted the performance of their cards significantly, though nowhere near enough to cover that gap.
>
> In Guardians of the Galaxy at 4K Ultra, the 3090 Ti is 22% faster.
>
> Woah, would you look at this? The 3090 Ti is 73% faster in ray tracing according to my sample of 5 games. But of course, the eagle-eyed among you quickly picked up that this was my deliberate attempt at making the 7900 XTX look bad. In addition, removing Cyberpunk, a clear outlier, from the results would probably drop the average down to the 20s. The point I'm making is that the average of 8 games does not represent the real world. You want to use ray tracing where it makes a difference, and shit like Far Cry 6 or REVIII ain't that, which explains in part why they're so forgiving on performance (and why they came out tied). Expect to see the 3090 Ti outperform the 7900 XTX by 15% or more in games where ray tracing is worth turning on, despite losing by this exact amount when it's off. The 3090 Ti's RT performance doesn't suddenly suck because it's equal to the 7900 XTX (it isn't, it's faster), but AMD is at least one generation behind, and in games where ray tracing REALLY hammers the GPUs, AMD cards just crumble.
>
> tl;dr don't let averages fool you. Look at the distribution and how ray tracing looks in the games.
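The averages-versus-distribution point can be checked with a few lines of Python. This is only a sketch using the five leads quoted in the post; the one assumption is how to convert the Cyberpunk result: "2.57x faster" is entered here as a 157% lead (entering it as 257 instead is what reproduces the 73% figure).

```python
from statistics import mean, median

# 3090 Ti lead over the 7900 XTX with RT on, in percent, as quoted in the post.
# "2.57x faster" is entered as +157% (an assumption about the conversion).
rt_lead_pct = {
    "Alan Wake 2": 19,
    "Black Myth Wukong": 46,
    "Rift Apart": 21,
    "Cyberpunk 2077 (path tracing)": 157,
    "Guardians of the Galaxy": 22,
}

def summarize(leads):
    """Return (mean, median) of a collection of percentage leads."""
    values = list(leads)
    return mean(values), median(values)

all_mean, all_median = summarize(rt_lead_pct.values())

# Same sample with the Cyberpunk outlier dropped.
no_outlier = [v for game, v in rt_lead_pct.items() if not game.startswith("Cyberpunk")]
trimmed_mean, trimmed_median = summarize(no_outlier)

print(f"all five games:    mean {all_mean:.1f}%, median {all_median:.1f}%")
print(f"without Cyberpunk: mean {trimmed_mean:.1f}%, median {trimmed_median:.1f}%")
```

With these inputs the mean falls from 53% to 27% once the outlier is removed, while the median barely moves (22% to 21.5%), which is the sense in which a small-sample average can mislead.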

No, that's completely false, especially in this case, where results can vary massively from 1% to over 50%. Anyone with more than a passing understanding of statistics will tell you so. You do NOT want an average. You want to look at the distribution, because the average is completely skewed by outliers. Furthermore, the average from Techpowerup was a sample of 8 games. If you want a relevant average, you'd need a sample of 30+ games.

> Cherry-picking always gives irrelevant results; the average is best. Games will go to RT when the PS6 is coming; for now it's a beta test for the future.

No, the 4090 is perfectly fine. I play Cyberpunk with path tracing and DLSS Quality at 3440x1440 and it runs well. Throw in Frame Generation and Reflex + Boost, and you have an amazing experience.

> It still doesn't matter, because it's Cyberpunk/Alan Wake only. When more games come out with PT, the 3090/4090 will be super slow for this.

Maybe because they were sure it's at about 4090 level? It was always 7900XT at minimum and around the 4080 at most, but not 7900XTX.

> He spoke with someone at Computex who had seen the early testing on it and was bummed out:

Yeah, the last time I bought for a specific game it was Crysis 1, but now I'm looking only at average performance, and I won't look at RT performance until games require RT, but that's the far future. UE5 has nice graphics and that's fine.

> massive fraud lol.

They have. Nvidia has zero cards up to $500 now, but users always take the worse cards, so that won't change. Only Nvidia is going to help with this: the 5060/5070 will have only 8/12 GB, so that's why they force me to buy RDNA4.

> it seems like AMD needs to be ahead on both price and performance for a couple of generations

And again Cyberpunk; I'm talking about future games.

> No, the 4090 is perfectly fine. I play Cyberpunk

It was never false. You're mistaken here. Why would you need to buy a worse card that gets lower results in 99% of games?

> that's completely false, especially in this case where results can vary massively between 1% to over 50%.

Because of budget: they're a CPU + GPU company, while Nvidia is GPU only. They've only started to have a budget since the Ryzen release, so they need to split it between data center, gaming, servers, etc. Anyway, UDNA is the right way for them: save money and make a strong uArch.

> but there hasn't been a single card in over a decade where they've really topped Nvidia.

We don't yet have a comparison of PSSR vs DLSS, but all upscalers still need a lot of work; for now upscalers are still bad. As for huge gains, we don't know what NV/AMD are planning for the far future.

> Well I wish them huge gains
>
> And inspirations from PSSR

You have gone too far man… sometimes you have to push past your limits to receive the rewards

> I don't care if this guy was revealing Sydney Sweeney nudes. I would never, ever click a video with this thumbnail.

We were talking about ray tracing, weren't we? The 3090 Ti certainly doesn't get worse results 99% of the time. In fact, it's much more common that it wins out, and comfortably a lot of the time.

> It was never false. You're mistaken here. Why would you need to buy a worse card that gets lower results in 99% of games?

Yep, but that's a different story. Different games, different results, but RT is bad on all cards if it's not a 4090.

> We were talking about ray tracing, weren't we?

It's about pure performance, but overall both are bad for heavy RT.

> The 3090 Ti certainly doesn't get worse results 99% of the time.

Not true. The 4080 is also very good. Hell, even the 7900 XTX can be pretty good a lot of the time. It just loses more performance than I'd like.

> Yep, but that's a different story. Different games, different results, but RT is bad on all cards if it's not a 4090.

That's for servers iirc, I don't think it affects gaming at all

It's for future UDNA, and also gaming AI. Just an example.

> I don't think it affects gaming at all

I mean, "relative RT" is of course all over the place, it can go from a useless "there's RT in this?" game like Dirt 5, to Cyberpunk overdrive, depends how the reviewer stacks the games.

Hybrid RT is where you'd better damn hope that the generational difference in rasterization pushes the card ahead of one a gen behind. It does with RT off, but if the card ends up performing only as well as one a gen behind with RT on, it means RDNA 3 RT blocks perform worse on a per-CU, per-clock basis than even Turing. It manages to drag down the 27% lead they had in rasterization.

AMD has consistently priced their cards roughly in line with Nvidia

Same goes for the 4060 Ti 8 GB vs the 7700 XT.

> Yet, 3050 outsold 6600 four to one.

How does the "hardwahr RT" (that is somehow present third gen into "hardwahr RT" even in $2000 cards) bottleneck get "compensated" by rasterization being faster?

You can count full ray traced / path traced games with no hybrid system on one hand

In the above scenario, the faster I get with raster, the more % of performance I lose.

> lose the same % of performance

You can count full ray traced / path traced games with no hybrid system on one hand, and they are rarely present in benchmarks outside of Cyberpunk Overdrive, the most popular one. Almost everything else has a hybrid solution, so rasterization is part of that equation. If you want to see it another way, they don't lose the same % of performance going from raw rasterization to RT enabled. If an AMD card gets a 15% lead in rasterization and lands 15% slower with RT enabled as soon as you have more than shadows and reflections, the RT blocks just aren't as good as Nvidia's. I would wager that if you went CU for CU and clock for clock, even Turing's RT block would probably outperform it.

Thus AMD RT blocks are not that good. Even winjer will tell you that AMD absolutely needs to change it, and hopefully it's obvious now that they'll change it, from all the rumours and evidently also from what they did for the PS5 Pro.

Speaking of which, I just recommended this week a 7800XT to a friend building his first PC. There was a deal in Canada and Nvidia cards are not seen with any sort of price drop. A $250 CAD difference for its Nvidia equivalent competitor, a 4070. No brainer that at this price, the Nvidia tax is just too damn much. When AMD cards are under deals, they're no brainers. Problem is MSRP vs MSRP. I hope they learned that for RDNA 4.

When we have imaginary "hybrid" pipeline with:

a) "traditional" processing

b) "hardwahr RT" processing

If #b lets me produce only 50 frames, how does it help that #a might dish out, let's be generous, 700?
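One way to make the question above concrete is to compare two simplified models of a frame, using the hypothetical 50 and 700 frames-per-second figures from the post. Both models are assumptions for illustration, not a description of how any real GPU schedules work: in the first the two kinds of work overlap completely, in the second they run back to back.

```python
def fps_if_overlapped(raster_fps: float, rt_fps: float) -> float:
    """Stages run fully in parallel: the slower stage sets the frame rate."""
    return min(raster_fps, rt_fps)

def fps_if_sequential(raster_fps: float, rt_fps: float) -> float:
    """Stages run one after the other: their per-frame times add up."""
    frame_time_s = 1.0 / raster_fps + 1.0 / rt_fps
    return 1.0 / frame_time_s

# Hold the RT stage at 50 fps and vary only the rasterization rate.
for raster in (350.0, 700.0, 1400.0):
    overlapped = fps_if_overlapped(raster, 50.0)
    sequential = fps_if_sequential(raster, 50.0)
    print(f"raster {raster:6.0f} fps -> overlapped {overlapped:.1f}, sequential {sequential:.1f}")
```

Under either assumption, doubling the rasterization rate from 700 barely changes the result while the RT part stays at 50: the overlapped model stays at exactly 50, and the sequential one moves from about 46.7 to about 48.3.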

Like I told you before, the only advantage of AMDs solution is to save die space. Performance is not it.

For a console, like the PS5 and Series S/X, that need to have CPU, memory controllers, caches, and GPU in an SoC made in N7, it's important to save die space and save costs.

But now that RT in games has evolved a bit, RT has become more relevant than it was in 2020. So AMD has to catch up.

If the reports of 3-4X improvements on the PS5 are real, then it's a big jump for AMD. And I doubt they managed to do that with the same hybrid solution.

But that will put them around the performance of Ada Lovelace's mid range. We can expect Nvidia to have even better RT and bigger GPUs for their 5000 series.

of 3-4X improvements

it's a big jump for AMD

But that will put them around the performance of Ada Lovelace's mid range

It does not matter what "hybrid pipelines" mean. The point I made above stands for any bottleneck, regardless of the labels on the distinct pipelines it is using.

> Imaginary hybrid pipelines

Yea I know it was an engineering choice.

Although it did fall flat a bit on its face, considering how much Nvidia sacrifices for dedicated RT blocks and ML in die area, I would have expected AMD's sacrifice to at least blast Nvidia away like one punch man in rasterization. We ended with what, a battle that keeps going one way or the other depending on the selection of titles with maybe a 2% advantage on AMD? Won't even go in silicon area between Ada and RDNA 3, Nvidia schooled AMD. Not sure their hybrid pipeline was a winner in the end.

It does not matter what "hybrid pipelines" mean. The point I made above stands for any bottleneck, regardless of the labels on the distinct pipelines it is using.

If you ran out of arguments, but struggle admitting "% drop" is a silly metric, oh well. No problem, dude.

4080 is 16% ahead of 7900XTX in TPU RT tests.

4 times faster AMD card would be 2.5+ times faster at RT than 4090.

Good to hear that it is behind what even a midrange 5000 series would aim for.
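The "2.5+ times" figure above is simple ratio arithmetic, sketched here with the 7900 XTX normalised to 1.0. The 1.16 and 1.55 factors are the TPU RT averages cited in this thread; treating the rumoured 3-4X as an uplift over a 7900 XTX across that whole test suite is an assumption, not something the reports establish.

```python
# Relative RT performance with the 7900 XTX normalised to 1.0.
# 1.16 and 1.55 are the TPU averages cited in the thread; the 3x and 4x
# uplifts are the rumoured range, not measured results.
RT_RELATIVE = {"7900 XTX": 1.00, "RTX 4080": 1.16, "RTX 4090": 1.55}

def uplifted_vs(card: str, uplift: float) -> float:
    """RT speed of a hypothetical card `uplift` times a 7900 XTX, relative to `card`."""
    return uplift * RT_RELATIVE["7900 XTX"] / RT_RELATIVE[card]

for uplift in (3.0, 4.0):
    ratio = uplifted_vs("RTX 4090", uplift)
    print(f"{uplift:.0f}x a 7900 XTX = {ratio:.2f}x an RTX 4090")
```

On those numbers a 4x uplift works out to roughly 2.58x a 4090, and a 3x uplift to roughly 1.94x.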

They're going to do it if devs ask for it. So there's a high chance it's for the PS6.

> So AMD has to catch up.

Nothing is stopping them. UDNA is coming, and there are also going to be RT changes. There aren't many games with RT yet, so that's why they didn't push it with RDNA3.

> I'm not so sure.

The difficulty here is that you don't get what your opponent is saying, but are stuck in an echo of "true RT, not true RT", a conversation that you have possibly had with someone, but certainly not me and likely not even on this forum.

> What don't you understand that most games, even with RT features, still have rasterization? What is difficult here?

The oldest game included by TPU is Control.

> And the averages by Techpowerup include older RT games, that are not as demanding.
>
> The more demanding the RT is in a game, the wider the gap is between AMD and Nvidia.

7900XTX is on par with 4080, its direct competitor (~15% behind on average at RT). What sort of "chance" do you mean?

> AMD already lost one chance with RDNA3

Nothing is stopping them. UDNA is coming, and there are also going to be RT changes. There aren't many games with RT yet, so that's why they didn't push it with RDNA3.

anyway

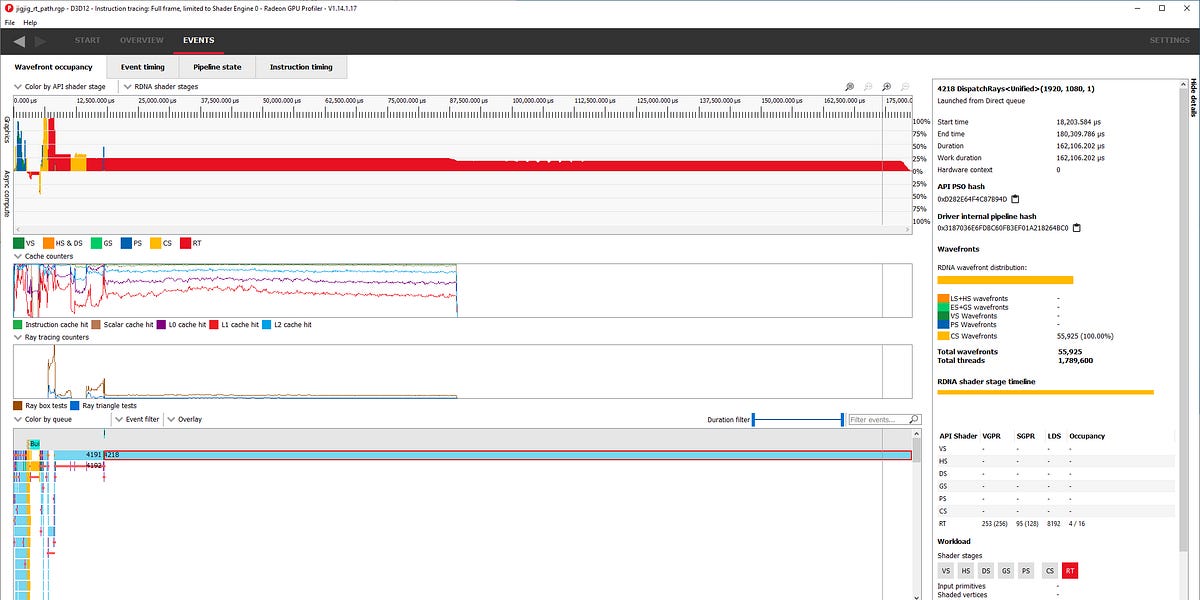

RT bench

That's from Kepler.

> UDNA being a combination of CDNA and RDNA, will probably focus on the compute side. Maybe also on ML.

The difficulty here is that you don't get what your opponent is saying, but are stuck in an echo of "true RT, not true RT", a conversation that you have possibly had with someone, but certainly not me and likely not even on this forum.

The question was "how does more rasterization help, when you are bottlenecked by RT". The obvious answer is "it doesn't". Instead of either admitting that, or showing it is, in fact, somehow helpful, you went to talk about something nobody is arguing about.

The oldest game included by TPU is Control.

In this game, the 4090 is nearly 100% ahead of the 7900XTX.

While on average it is 55% ahead.

7900XTX is on par with 4080, its direct competitor (~15% behind on average at RT). What sort of "chance" do you mean?

AMD Radeon RX 7900 XTX Review - Disrupting the GeForce RTX 4080

Navi 31 is here! The new $999 Radeon RX 7900 XTX in this review is AMD's new flagship card based on the wonderful chiplet technology that made the Ryzen Effect possible. In our testing we can confirm that the new RX 7900 XTX is indeed faster than the GeForce RTX 4080, but only with RT disabled.

www.techpowerup.com

That was before the formal announcement.

> AMD already did the formal announcement last month.

Yeah, for some reason it feels like they thought they had longer before RT was a big deal and couldn't pivot RDNA towards having separate RT cores, which was a grave mistake. It's a shame because they had a real shot after RDNA2, but they've been sitting on their ass too long w.r.t. DLSS and RT. I'm not too optimistic now that they've merged CDNA and RDNA into UDNA; it feels like gaming will get left behind.

> I'm not so sure.
>
> AMD already lost one chance with RDNA3. And Nvidia is not a sitting target, they are not Intel.

That's good, it's like going back to GCN. Look at the PS4 and how strong it was, despite its weak CPU.

> I'm not too optimistic now that they merged cdna and rdna into udna, feels like gaming will get left behind

You can remove Cyberpunk, it's old data. Now it has more than double the performance in PT.

> This testing doesn't include very important RT games:

What they need is SER, BVH walking acceleration, and anything they can do to reorganise the work at runtime to be more GPU friendly. RT maps horribly to how shaders are set up (massive SIMD arrays designed to perform large batches of coherent work).

> It makes me wonder about how long it takes to develop a proper RT unit.
>
> Maybe it does take half a decade to do it and AMD didn't have these new RT units ready in time for RDNA3. So they just widened RDNA2 RT units.

AMD 6600 was:

1) Cheaper than 3050 (actual, street price):

2) A tier (!!!) faster than 3050

3) Faster than 3050 even at RT

4) More power efficient than 3050

Yet, 3050 outsold 6600 four to one.

So, no, pricing is not the issue.

Perhaps FUD is. When people run around with "AMD is generations behind at RT", "FSR1 is sh*t" (no, it isn't, stop listening to green shills like DF), "TAA FSR sucks too" (yeah, that 576p upscale is a very realistic scenario that everyone needs), or even the old school FUD like "buh drivers", there is quite a bit of nonsense to clean up.

Well, say you are targeting 60 FPS, so you have a 16.6 ms per-frame budget. Imagine that on Nvidia hardware the ray tracing part takes 3 ms and the remainder of the frame takes 13.6 ms. Then you're hitting your target. Now imagine that on AMD hardware the ray tracing part takes 6 ms instead. Then despite being at a big disadvantage during the ray tracing portion, you can still equal Nvidia if you can complete the rest of the frame in 10.6 ms. So a ray tracing deficit is "compensated" by extra rasterization performance. Obviously, however, the greater the frame time "deficit" due to ray tracing, the less time you will have to complete the rest of the frame, and the more performance you will need.

> The difficulty here is that you don't get what your opponent is saying, but are stuck in echo of "true RT not true RT" a conversation that you have possibly had with someone, but certainly not me and likely not even on this forum.
>
> The question was "how does more rasterization help, when you are bottlenecked by RT". The obvious answer is "it doesn't". Instead of either admitting that, or showing it is, in fact, somehow helpful, you went to talk about something nobody is arguing about.
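The 60 FPS frame-budget argument above can be sketched in a few lines. The millisecond figures are the hypothetical ones from the post; the function name and the model itself (RT and the rest of the frame run back to back, and the non-RT workload is identical on both cards) are assumptions made for illustration.

```python
def required_raster_speedup(budget_ms: float, rt_ms_ref: float, rt_ms_slow: float) -> float:
    """How much faster the non-RT part of the frame must run on the card with
    slower RT so that it hits the same frame budget as the reference card."""
    rest_ref = budget_ms - rt_ms_ref    # time the reference card spends on the rest
    rest_slow = budget_ms - rt_ms_slow  # time left over on the slower-RT card
    if rest_slow <= 0:
        raise ValueError("RT alone exceeds the budget; no raster speedup can compensate")
    return rest_ref / rest_slow

# Numbers from the post: 16.6 ms budget, 3 ms of RT on one card, 6 ms on the other.
print(f"{required_raster_speedup(16.6, 3.0, 6.0):.2f}x")
# A heavier RT load on the slower card: 12 ms instead of 6 ms.
print(f"{required_raster_speedup(16.6, 3.0, 12.0):.2f}x")
```

With the numbers from the post, the rest of the frame has to run about 1.28x faster; if the RT portion took 12 ms instead, it would have to run almost 3x faster, and past 16.6 ms no amount of extra rasterization performance closes the gap.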

RT isn't a mystery, it's almost 50 year old technology at this point, we know where the bottlenecks are. The issue is balancing silicon budgets vs more general purpose HW.

> It makes me wonder about how long it takes to develop a proper RT unit.
>
> Maybe it does take half a decade to do it and AMD didn't have these new RT units ready in time for RDNA3. So they just widened RDNA2 RT units.

Because even NVIDIA realizes dedicating a large amount of silicon to RT is not a good idea yet.

> The bottleneck is still in the unit occupancy. And this is mostly due to prediction and scheduling.
>
> Even nvidia's hardware struggles with it.

You mean back when they were still ATi?

> I've never owned an AMD card (but I have had Radeons) and I am hoping their RDNA 4 series makes them competitive again.

AMD dedicate a lot less die area to RT than Nvidia in RDNA2 and 3, relative to Ampere and Lovelace.

> I'm not so sure.
>
> AMD already lost one chance with RDNA3. And Nvidia is not a sitting target, they are not Intel.

AMD dedicate a lot less die area to RT than Nvidia in RDNA2 and 3, relative to Ampere and Lovelace.

It's only natural it's less performant.

If they decided to dedicate just as much die area to it - I'm not saying they will match Nvidia, but the gap will close significantly just by virtue of having more hardware dedicated to it. The question is, will AMD dedicate that much die area or will they be happy to be just about ok performance, but have higher margins due to smaller die sizes?

Remains to be seen.