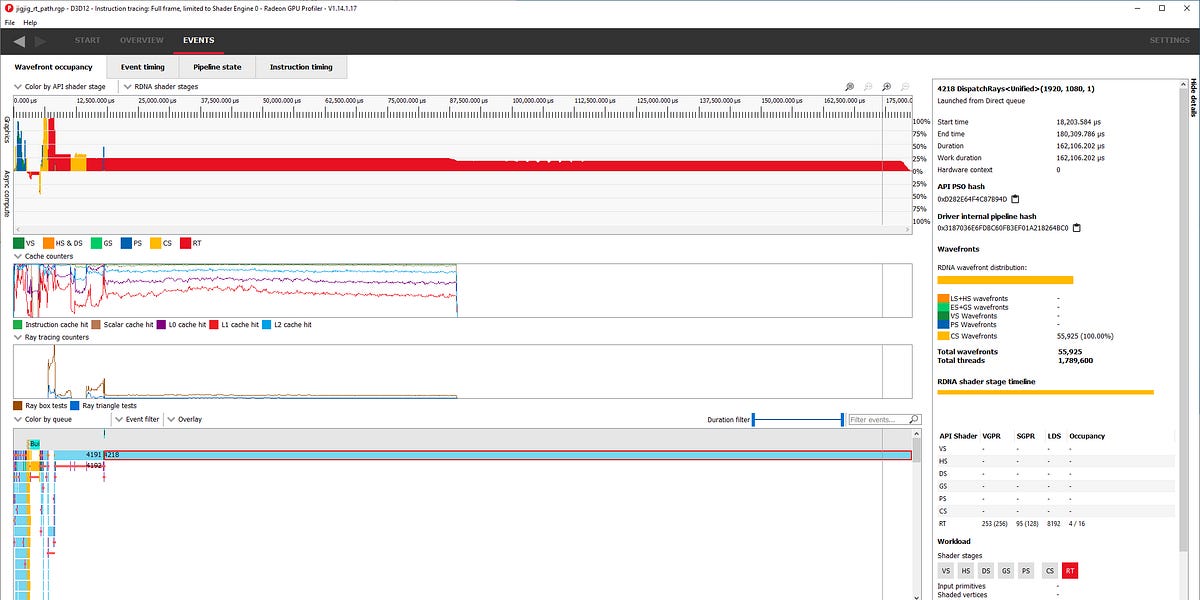

Denoising is one of the techniques that address the high sample counts required by Monte Carlo path tracing. It reconstructs high-quality pixels from a noisy image rendered with few samples per pixel. Auxiliary buffers such as albedo, normal, roughness, and depth, which are readily available in deferred rendering, are often used as guiding information. Because it reconstructs high-quality pixels in much less time than full path tracing would take, denoising is an essential component of real-time path tracing.
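To make the role of auxiliary buffers concrete, here is a minimal sketch of a cross-bilateral filter guided by normal and depth buffers. It is a generic hand-crafted filter for illustration, not the method of any particular denoiser discussed here; the function name, the weight formulas, and the `sigma_normal` and `sigma_depth` parameters are all assumptions.

```python
import math

def cross_bilateral_denoise(color, normal, depth, radius=1,
                            sigma_normal=0.2, sigma_depth=0.1):
    """Denoise a 2D grayscale image using normal and depth as guides.

    color:  rows of floats (noisy radiance)
    normal: rows of (x, y, z) unit vectors
    depth:  rows of floats
    All names and parameters are illustrative assumptions.
    """
    height, width = len(color), len(color[0])
    out = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            total, weight_sum = 0.0, 0.0
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    ny, nx = y + dy, x + dx
                    if not (0 <= ny < height and 0 <= nx < width):
                        continue  # skip neighbors outside the image
                    # Neighbors whose normal disagrees get a low weight.
                    n_dot = sum(a * b for a, b in
                                zip(normal[y][x], normal[ny][nx]))
                    w_normal = math.exp(-(1.0 - n_dot) / sigma_normal)
                    # Neighbors at a different depth get a low weight.
                    d_diff = depth[y][x] - depth[ny][nx]
                    w_depth = math.exp(-(d_diff * d_diff)
                                       / (2.0 * sigma_depth ** 2))
                    w = w_normal * w_depth
                    total += w * color[ny][nx]
                    weight_sum += w
            # The center pixel always contributes weight 1, so the sum is > 0.
            out[y][x] = total / weight_sum
    return out
```

Because the weights come from the noise-free guide buffers rather than from the noisy color, the filter averages noise within a surface while leaving geometric edges intact.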

Existing denoising techniques fall into two groups, offline and real-time denoisers, depending on their performance budget. Offline denoisers focus on film-production-quality reconstruction from noisy images rendered with higher samples per pixel (e.g., more than 8). Real-time denoisers target noisy images rendered with very few samples per pixel (e.g., 1-2 or fewer) within a limited time budget.

It is common to take noisy diffuse and specular signals as separate inputs, denoise each with its own filter, and composite the denoised signals into a final color image, which better preserves fine details. Many real-time rendering engines include separate denoising filters for each effect, such as diffuse lighting, reflections, and shadows, for quality and/or performance reasons. Since each effect may have different inputs and noise characteristics, dedicated filtering can be more effective.
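The separate-then-composite flow can be sketched on a single scanline as follows. This is only an illustration under stated assumptions: a box filter stands in for the real per-signal filters, the two radii are arbitrary, and the composite is assumed to be a plain sum of the two denoised signals.

```python
def box_filter(signal, radius):
    """Average each sample with its neighbors within `radius`.

    The window is truncated at the ends of the scanline.
    """
    n = len(signal)
    out = []
    for i in range(n):
        lo, hi = max(0, i - radius), min(n, i + radius + 1)
        window = signal[lo:hi]
        out.append(sum(window) / len(window))
    return out

def denoise_and_composite(noisy_diffuse, noisy_specular,
                          diffuse_radius=2, specular_radius=1):
    """Filter each signal with its own (hypothetical) radius, then add them."""
    # Assumption: diffuse lighting varies slowly, so it tolerates a wide filter.
    diffuse = box_filter(noisy_diffuse, diffuse_radius)
    # Assumption: specular detail is sharper, so it gets a narrower filter.
    specular = box_filter(noisy_specular, specular_radius)
    # Assumption: the final color is the sum of the two denoised signals.
    return [d + s for d, s in zip(diffuse, specular)]
```

Splitting the signals lets each filter be tuned independently, so a wide filter on the diffuse term does not blur sharp specular highlights.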

Neural denoisers [3,4,5,6,7,8] use a deep neural network, trained on a large dataset, to predict denoising filter weights. They have made remarkable progress in denoising quality compared with hand-crafted analytical denoising filters [2]. Depending on the complexity of the network and how well it combines with other optimization techniques, neural denoisers are attracting increasing attention for use in real-time Monte Carlo path tracing.
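The sketch below shows how predicted filter weights might be applied once a network has produced them. The network itself is not modeled: `kernel_logits` is a hypothetical stand-in for its output, and the softmax normalization and border clamping are assumptions rather than details taken from the cited works.

```python
import math

def softmax(logits):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def apply_predicted_kernels(noisy, kernel_logits, radius=1):
    """Filter each pixel with its own predicted kernel.

    noisy:         rows of floats
    kernel_logits: kernel_logits[y][x] holds (2*radius+1)**2 raw scores
                   in row-major order (hypothetical network output)
    """
    height, width = len(noisy), len(noisy[0])
    out = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            weights = softmax(kernel_logits[y][x])
            acc, k = 0.0, 0
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    # Clamp to the border so every weight has a sample.
                    ny = min(max(y + dy, 0), height - 1)
                    nx = min(max(x + dx, 0), width - 1)
                    acc += weights[k] * noisy[ny][nx]
                    k += 1
            out[y][x] = acc
    return out
```

With uniform scores this reduces to a box filter, and with one dominant score it passes the corresponding neighbor through almost unchanged, which is what allows a learned predictor to adapt the filter per pixel.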

Unified denoising and supersampling [7] takes noisy images rendered at low resolution with low samples per pixel and generates an image that is both denoised and upscaled to the target display resolution. Performing denoising and supersampling jointly in a single neural network has the advantage of sharing learned parameters in the feature space, so the network can efficiently predict both denoising and upscaling filter weights. Most of the performance gain comes from rendering at low resolution with low samples per pixel, which leaves more of the time budget for neural denoising to reconstruct high-quality pixels.
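A back-of-the-envelope calculation shows where that gain comes from. The resolutions and sample counts below are hypothetical, chosen only for illustration, and the model assumes path tracing cost scales with the total number of samples per frame.

```python
def samples_per_frame(width, height, spp):
    """Total path-traced samples for one frame."""
    return width * height * spp

def sample_reduction(render, target):
    """How many times fewer samples `render` traces than `target`.

    Each argument is a (width, height, spp) tuple.
    """
    return samples_per_frame(*target) / samples_per_frame(*render)

# Hypothetical configurations: 1080p at 1 spp versus 4K at 8 spp.
low_cost = (1920, 1080, 1)
reference = (3840, 2160, 8)
print(sample_reduction(low_cost, reference))  # 32.0
```

Halving the resolution in each dimension cuts the pixel count by 4x, and dropping from 8 samples per pixel to 1 cuts it by another 8x, so under this model the renderer traces 32 times fewer samples before the network reconstructs the final image.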
