Oh, just that

We've had this conversation. It's Groundhog Day!

Pick the 3060: 12.74 TFLOPS, divided by 2 according to you

= 6.37 TFLOPS

Pick the 1080: 8.873 TFLOPS, right? Motherfucking raw raster TFLOPS

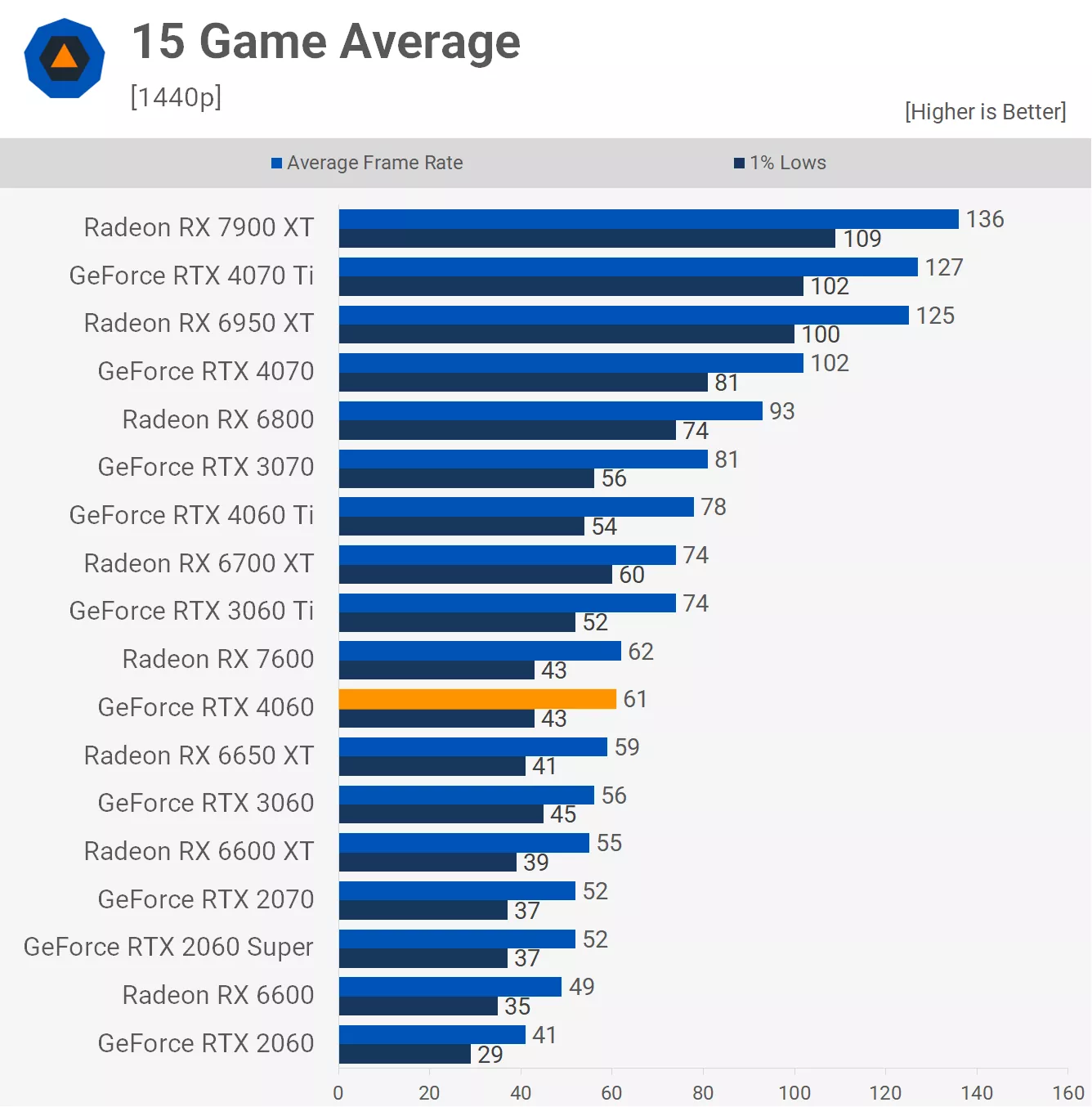

You're telling me that a 3060 running a CUDA core "hack" managed to outperform Pascal's 8.873 TFLOPS of raw CUDA core raster by 25% on a 14-game average?
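Quick sanity check of what that would imply, assuming the "divide by 2" rule and reading the chart's 25% lead as a 1.25x relative performance. `implied_efficiency` is just a hypothetical helper name for the arithmetic:

```python
def implied_efficiency(rel_perf: float, ref_tflops: float, card_tflops: float) -> float:
    """How many times more work per TFLOP a card must extract than the
    reference card to land at `rel_perf` times the reference's performance."""
    return rel_perf * ref_tflops / card_tflops

rtx3060_derated = 12.74 / 2  # 6.37 TFLOPS under the "divide by 2" rule
gtx1080 = 8.873              # Pascal FP32 TFLOPS
lead = 1.25                  # 3060 ~25% ahead on the 14-game average (per the chart)

ratio = implied_efficiency(lead, gtx1080, rtx3060_derated)
print(f"{ratio:.2f}x")  # 1.74x
```

So each "real" Ampere TFLOP would have to do about 1.74x the work of a Pascal TFLOP.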

Or that it's right on the heels of the 5700 XT's 9.754 TFLOPS?

And averages will of course vary from game to game, so what about more compute-heavy games?

Like this one: the 3060 right on the heels of the 6600 XT's 10.6 TFLOPS in Cyberpunk.
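Same arithmetic against the RDNA cards, assuming "on the heels" means roughly equal performance (a 1.0 ratio, my simplification, not a measured number). The TFLOPS ratio is then the per-TFLOP edge the derated 3060 would need:

```python
rtx3060_derated = 12.74 / 2  # 6.37 TFLOPS under the "divide by 2" rule
rdna = {"5700 XT": 9.754, "6600 XT": 10.6}  # FP32 TFLOPS quoted above

# Assumes performance parity, so the raw TFLOPS ratio equals the
# per-TFLOP advantage the 3060 would have to make up.
gap = {name: tflops / rtx3060_derated for name, tflops in rdna.items()}

for name, g in gap.items():
    print(f"{name}: {g:.2f}x the derated 3060's TFLOPS")
# 5700 XT: 1.53x the derated 3060's TFLOPS
# 6600 XT: 1.66x the derated 3060's TFLOPS
```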

Or lean even harder into compute-style shading with mesh shaders, why not?

A 3050 at 9.098 / 2 = 4.55 TFLOPS outperforming the 9.754 TFLOPS 5700 XT
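And the mesh shader case, using only the numbers above. Since the 3050 is ahead rather than merely equal, the parity ratio here is a lower bound on the per-TFLOP edge it would need:

```python
rtx3050_derated = 9.098 / 2  # 4.549 TFLOPS under the "divide by 2" rule
rx5700xt = 9.754             # RDNA 1 FP32 TFLOPS

# Matching the 5700 XT alone would take this much more work per TFLOP;
# actually outperforming it means the real figure is higher still.
min_advantage = rx5700xt / rtx3050_derated
print(f"{rtx3050_derated:.3f} TFLOPS, >= {min_advantage:.2f}x per TFLOP")
# 4.549 TFLOPS, >= 2.14x per TFLOP
```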

Amazing!

Maybe we should derate RDNA's TFLOPS, then