cireza

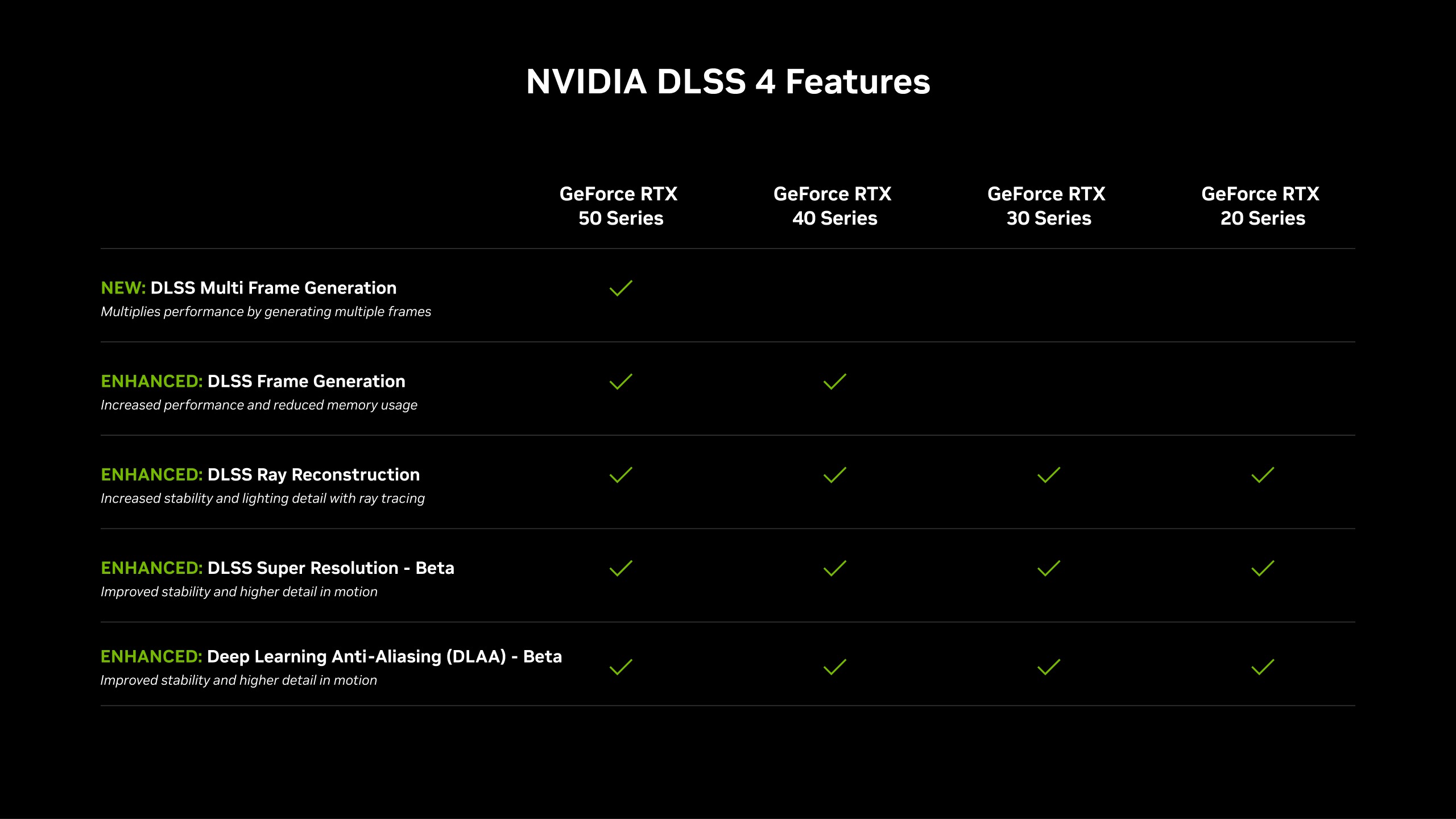

So this confirms it will be shit on Switch 2 then?

None of which is DLSS 4.

Depends on the implementation, version, and internal resolution. But generally, DLSS 3 is superior to not running it and is worth the slight frame time cost vs just boosting the resolution.

There are apparent issues in the footage from DF in the OP.

The TAA in RDR2 is terrible. It blurs even static images to the point where they look like they have been upscaled, and the blur is even stronger in motion, accompanied by noticeable artefacts. That's why I chose that game to test DLSS when I bought my current graphics card: I wanted to see if the blurriness could be fixed without downscaling. However, the DLSS implementation that came with the game was very poor, and my initial impression of DLSS was negative. The image was as soft as native TAA, but it was also overprocessed, especially in motion (very strong motion-adaptive sharpening that is supposed to counterbalance the motion blur). Luckily, DLSS3 (and especially DLSS4 now) finally fixed image quality in this game. The image is razor-sharp, both static and in motion. DLSS now renders more detail, which is particularly noticeable when looking at distant objects. There are also no noticeable motion artefacts around the grass and trees, so the image is clean and sharp, a very nice combination and a massive win on all fronts over the in-game TAA.

However, there are some minor imperfections to be aware of. For example, the quality of some effects in RDR2, such as volumetric clouds and SSR reflections on puddles of water, is tied to internal resolution. DLAA (native) fixes all of that, but if you lower the internal resolution and use DLSS, the image will show some pixelation if you look closely at these effects. That pixelation doesn't lead to motion artefacts, however, so it isn't noticeable unless you look for it. I would prefer playing this game at 4K DLSS Performance over native TAA, but on my current PC I can use DLSS Ultra Quality, or even DLAA, in this game.
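For reference on the modes mentioned above, here's a minimal sketch of how a DLSS output resolution maps to the internal render resolution, using the commonly cited default per-axis scale factors. Assumptions: games can override these factors, Ultra Quality is left out, and the dictionary and function names are made up for illustration.

```python
# Commonly cited default per-axis scale factors for DLSS quality modes.
# Assumption: individual games (and DLSS versions) may override these.
DLSS_SCALE = {
    "dlaa": 1.0,
    "quality": 2 / 3,
    "balanced": 0.58,
    "performance": 0.5,
    "ultra_performance": 1 / 3,
}


def internal_resolution(out_w: int, out_h: int, mode: str) -> tuple[int, int]:
    """Approximate internal render resolution for a given output size and mode."""
    scale = DLSS_SCALE[mode]
    return round(out_w * scale), round(out_h * scale)


def pixel_fraction(mode: str) -> float:
    """Fraction of output pixels actually shaded each frame (per-axis scale squared)."""
    return DLSS_SCALE[mode] ** 2


if __name__ == "__main__":
    for mode in DLSS_SCALE:
        w, h = internal_resolution(3840, 2160, mode)
        print(f"{mode:>17}: {w}x{h} ({pixel_fraction(mode):.0%} of output pixels)")
```

So "4K DLSS Performance" shades a 1920x1080 image, a quarter of the output pixels, which is why effects tied to internal resolution (clouds, SSR) get visibly coarser than under DLAA.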

Guess which one is which.

4k version

That's what my eyes tell me when I see the early results. The issue is that the console is going to be riddled with ports using the technology. I guess some things never change.

That is because of a few issues, caused by the Switch 2 being underpowered.

One is that the Transformer model is significantly heavier than the CNN model, and the Switch 2 can barely run the CNN model.

The other problem is that temporal upscalers depend heavily on base resolution and frame rate to achieve good image quality.
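One way to see why base resolution and frame rate matter: a temporal upscaler builds each output pixel out of samples gathered over several frames. The sketch below estimates how long a pixel needs stable history to collect a given number of samples. The 8-sample target is a hypothetical threshold picked for illustration, and the model assumes perfect reprojection with no history ever being rejected, which real upscalers can't rely on.

```python
def samples_per_output_pixel(internal_h: int, output_h: int) -> float:
    """New samples each output pixel receives per frame (same aspect ratio assumed)."""
    return (internal_h / output_h) ** 2


def seconds_to_accumulate(target_samples: float, internal_h: int, output_h: int, fps: float) -> float:
    """Idealised time of uninterrupted history needed to reach target_samples per pixel.

    Assumes perfect reprojection and no history rejection (no disocclusion,
    camera cuts or lighting changes), so real upscalers need longer.
    """
    per_frame = samples_per_output_pixel(internal_h, output_h)
    frames_needed = target_samples / per_frame
    return frames_needed / fps


if __name__ == "__main__":
    TARGET = 8  # hypothetical "enough history" threshold, for illustration only
    for internal_h, fps in [(1080, 60), (1080, 30), (540, 60), (540, 30)]:
        t = seconds_to_accumulate(TARGET, internal_h, 1080, fps)
        print(f"{internal_h}p -> 1080p @ {fps} fps: ~{t:.2f} s of stable history")
```

Halving the frame rate doubles that window, and halving the per-axis internal resolution quadruples it, so low resolution and low frame rate compound, and any disocclusion resets the count for the affected pixels.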

You're never going to get current-gen ports of AAA titles running at 1080p on the Switch 2 with acceptable settings. So it's really a question as to whether the ports should exist at all, and if people are happy to buy and play them, I don't see the issue. Cyberpunk on the Switch 2 is in many cases able to deliver better image quality than the Series S version due to the vast superiority of DLSS over FSR/TAA.

Just imagine if I had said the same about PSSR.

In many games it's hard to tell the difference between DLSS4 and native with TAA. And you get 50%+ more performance.

Using native resolutions when we have such good techniques like DLSS or FSR4 is not very smart.

Pisser is closer to DLSS3 than 4

It's not my point. I find it ironic: such overpraise for DLSS and such extreme criticism of PSSR's limits, and meanwhile incredulity toward someone who complains about DLSS below 1080p because they see similar artifacts in a differently branded upscaler.

I remember a certain someone telling people that the upscaler on Switch 2 is going to be identical to $2000 PCs somehow because "it's DLSS".

It's not my point. I find it ironic: such overpraise for DLSS and such extreme criticism of PSSR, and meanwhile incredulity toward someone who can't tolerate DLSS below 1080p.

They are all TAAU-based in the end. People shit on me because I didn't know that, but I doubt there can be many differences between them.
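Roughly what "TAAU-based" means: every frame is rendered with a small sub-pixel camera jitter, and the result is blended into a reprojected history buffer. The sketch below shows two classic hand-written pieces of that pipeline for a single pixel value: a Halton jitter sequence and a neighborhood-clamped exponential blend. It's a generic TAA illustration with hypothetical names, not the code of DLSS, PSSR or FSR; those broadly replace the hand-tuned clamp/blend rules with a trained network, and motion-vector reprojection is omitted here.

```python
def halton(index: int, base: int) -> float:
    """Radical-inverse (Halton) value in [0, 1), a common sub-pixel jitter sequence."""
    result, fraction = 0.0, 1.0
    while index > 0:
        fraction /= base
        result += fraction * (index % base)
        index //= base
    return result


def jitter_offset(frame: int, phase_count: int = 8) -> tuple[float, float]:
    """Sub-pixel camera offset in [-0.5, 0.5) for a frame, from Halton bases 2 and 3."""
    i = (frame % phase_count) + 1  # skip index 0, which would always give (0, 0)
    return halton(i, 2) - 0.5, halton(i, 3) - 0.5


def resolve_pixel(history: float, current: float, neighborhood: list[float], alpha: float = 0.1) -> float:
    """Blend one (already reprojected) history value with the current jittered sample.

    The history is clamped to the min/max of the current frame's local
    neighborhood, a classic hand-tuned anti-ghosting heuristic, and then
    blended in with an exponential moving average of weight alpha.
    """
    lo, hi = min(neighborhood), max(neighborhood)
    clamped = min(max(history, lo), hi)
    return (1.0 - alpha) * clamped + alpha * current
```

The clamp is where a lot of the classic TAA trade-off lives: clamp too tightly and detail flickers, too loosely and you get ghosting. Learned upscalers differ mostly in how they make that decision.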

Still astounds me why people insist that Switch 2 is "underpowered" despite it being hugely efficient and nearly as powerful as a mass-market hybrid could be.

According to many, it should have the horsepower of a 300W machine while being a 15W machine.

PSSR has severe issues with denoising and image stability when the internal resolution is too low, and this has been called out repeatedly. It's likely one of the reasons it will be upgraded with the work being done on INT8 FSR4. DLSS 4 is simply not comparable; it is by far the superior solution at this time. As for the Switch 2 "lite" DLSS model, it has been criticized by both DF and threads here. Just look at the Fast Fusion coverage: it got so many complaints that the developer patched the game to disable DLSS.

The Switch 2 isn't using DLSS 4's new transformer model, so it largely has similar or even worse artifacts.

I was agreeing with you. It was a throwback to this thread when people thought this small handheld would give the same upscaling results as $2000 PCs:

Denoising is not exactly a native part of an upscaler, though. And to be fair, when resolution is too low, IQ is more unstable on DLSS too, something which DLSS fans continue to deny irrationally.

One thing will change. Games will at least not be the blurry, aliased mess that SW1 games on SW1 hardware are.

But it's not efficient. It's using Samsung's 8nm node.

One that even in 2020 was well behind TSMC's N7.

The Switch 2 SoC should have been made with something more recent. Such as TSMC's N5 or maybe even N3.

That would have allowed for lower power usage, better temperatures, and a more powerful GPU.

Nintendo Switch 2: the Digital Foundry hardware review

An impressive generational upgrade marred by a sub-par display (www.eurogamer.net)

"Switch 2 looks like an efficiency king then, defying expectations. "

I'll listen to experts, thanks.

Yes, as resolution decreases, DLSS starts struggling as well, although it still offers a superior image to PSSR at any rendering resolution. Next year things will hopefully be different, and the gap should shrink significantly.

Sure, Mark Cerny mentions his admiration for them in tech presentations, but Freddie Fanboy on the internet is better.

LOL, DF are not experts in tech.

You have to be very ignorant about tech to think DF are experts in tech.

They do little more than capture images and compare frame rate and graphics settings.

They have little knowledge about chips, instructions, process nodes, etc.

Compare them to something like chipsandcheese, realworldtech, highyield, etc, and the difference in knowledge and information is staggering.

Besides, you didn't even consider that the node the Switch 2 is using was never a contender for efficiency, not even when it was released 5 years ago.

This is a basic fact that anyone with the most basic tech knowledge knows.

In fairness, "tech expert" is broad and vague at the same time. DF could be considered experts in their very specific niche, which is IQ analysis for games and the hundreds, if not thousands of hours of work they have put in peeping at pixels have made them pretty good at their job.

The other guys you cited operate in different fields that certainly require more scientific knowledge than DF and have broader applications.

Regardless, I think deferring to "experts" when it comes to something as subjective as image quality is foolish. Just saying you will listen to DF because they are "experts" is a poor argument. What they do lacks rigor and they most certainly aren't scientists, so their knowledge is not even close to being infallible.

Yeah, they could have gone with 5nm, with higher costs and possible difficulties manufacturing at the required volumes, and squeezed a bit more power out.

Would have been more expensive and still not a PS5.

Sure about that?

Depends on what they wanted to do. If they kept the same size chip, the price would increase, but so would the performance and capability of the system.

But if they made a chip with the same transistor count, it would have a similar cost but much lower power usage, which would mean a more efficient SoC.

And N5 is already a mature node, so there would be no problems with yields.

Within this context, yeah. DF aren't electrical or computer engineers who know about silicon or chip fabrication. Relying on their expertise in this field as an appeal to authority is weak.

But that is the thing: their expertise is only in analyzing frame rates and comparing settings. If your definition of tech is broad, then they may fit in.

But they have no technical expertise in analyzing chip design, process nodes, energy efficiency, programming, etc.

The question we were talking about is whether the Switch 2 is efficient. And in that regard, DF's opinion is inconsequential, because they have no expertise in that area.

The thing is, do people really think that the engineers at Nintendo and Nvidia are morons with less technical knowledge than people ranting on forums?

They clearly went for the sweet spot in cost, performance and stability.

In portable mode it's performing similarly to the Steam Deck with around half the power consumption. Ampere on 8nm was only slightly behind RDNA 2 on TSMC N7, and Ada on N5 is only around 50% better in performance per watt.

10~12W is half of 15W?

It's huge if you use it to increase battery life, since it should equate to roughly half the power consumption at the same clocks. But Nintendo can still shrink the T239 to N5 on a subsequent revision to get those benefits. On the other hand I don't think an extra 50% of performance would have massively changed the product positioning, and then you can't use the efficiency gains for better battery life down the line.

50% improvement in power efficiency is huge, especially nowadays, with Moore's Law slowing down.

15W is the power dedicated to the SoC alone, while the unit as a whole can consume 20W+.

25W for the Switch 2 unit. 40~45W for the Deck unit.

The density difference probably corresponds to the wafer cost difference, so we're talking about spending more money for a bigger GPU, which you can always do. What you're describing seems more like the (assumed) PS5 Portable strategy of selling a break-even product at the price of a home console.

The N5 node is 2.8 times denser than Samsung's N8.

Having 2.8 times as many units would have made a gigantic difference in performance.

And it would still use less power, though the cost of the chip would be significantly higher, if still lower than something like the PS5 Pro.
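The node argument boils down to simple ratios. The sketch below is a first-order model under loud assumptions: the 2.8x density figure is the one quoted in the thread, the wafer cost ratio is a free parameter rather than a real price, and yield, SRAM/IO scaling and design costs are ignored. The class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class DieEstimate:
    relative_area: float         # die area vs. the original 8nm chip
    relative_transistors: float  # transistor budget vs. the original chip
    relative_cost: float         # silicon cost vs. the original chip (area x wafer cost per area)


def port_to_denser_node(density_ratio: float, wafer_cost_ratio: float, keep: str) -> DieEstimate:
    """Very rough model of moving a chip to a denser node.

    keep="area":        same die size, density spent on more transistors (bigger GPU).
    keep="transistors": same design, smaller die.
    Assumption: cost scales with area times wafer cost per unit area; yield and
    non-logic scaling are ignored.
    """
    if keep == "area":
        return DieEstimate(1.0, density_ratio, wafer_cost_ratio)
    if keep == "transistors":
        area = 1.0 / density_ratio
        return DieEstimate(area, 1.0, area * wafer_cost_ratio)
    raise ValueError("keep must be 'area' or 'transistors'")


if __name__ == "__main__":
    # Thread's 2.8x density, with wafer cost assumed to scale by the same factor.
    for keep in ("transistors", "area"):
        print(keep, port_to_denser_node(2.8, 2.8, keep))
```

With wafer_cost_ratio equal to density_ratio (the "density difference corresponds to the wafer cost difference" assumption), the same-transistor-count shrink comes out at the same silicon cost as the 8nm chip, while keeping the die size and spending the density on a bigger GPU costs 2.8x as much silicon.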

It's under 10W for the Switch 2 in portable mode, given the 2 hour+ battery life on the ~20Wh battery. While the below article reports a maximum power draw of 27W for the original Steam Deck and 23W for the OLED revision.

The same size chip on N5 could be 50% faster at the same battery life, which gives you a portable ROG Ally effectively. It would be nice but you're still well below the Series S. Realistically you need a bigger chip, with a wider bus, which will consume more power. So you will also need a bigger unit to fit a larger battery. And at that point you're pretty much talking about a different product, akin to what Sony seems to be planning.

Yes, having the same sized chip in N5 would be more expensive.

But the Switch 2 is already struggling to run the likes of CP2077 at low settings, a game that was released 5 years ago.

Even DLSS3 CNN isn't enough to help out.

It's because a lot of people are uninformed and ignorant on these topics and just get their opinions and takes from other uninformed, ignorant buffoons. It's a mob mentality.

7.8~12W for the entire system in portable mode. It can be half of the Deck, yes, but the discussion is about the APU only.

It can't be 12W, because there is only a 20Wh battery (19.3 Wh, to be precise), and 2 hours is the absolute lowest battery life. So with 10W as the upper limit, I was assuming the rest of the system consumed at least 2W, giving 8W on average to the SoC, compared with 15W on the Steam Deck.
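The battery reasoning above is just capacity divided by runtime. A minimal sketch, using the 19.3 Wh capacity and 2-hour worst case from the post and treating the 2W rest-of-system figure as the poster's assumption; it ignores conversion losses and battery wear, so it's a rough ceiling rather than a measurement. Function names are hypothetical.

```python
def average_system_power_w(battery_wh: float, runtime_h: float) -> float:
    """Average whole-unit draw implied by draining the battery in runtime_h hours."""
    return battery_wh / runtime_h


def estimated_soc_power_w(battery_wh: float, runtime_h: float, rest_of_system_w: float) -> float:
    """Average SoC power after subtracting an assumed draw for screen, RAM, Wi-Fi, etc."""
    return average_system_power_w(battery_wh, runtime_h) - rest_of_system_w


if __name__ == "__main__":
    # 19.3 Wh battery, 2 h worst-case runtime, assumed 2 W for everything except the SoC.
    print(f"system: {average_system_power_w(19.3, 2.0):.2f} W")
    print(f"SoC:    {estimated_soc_power_w(19.3, 2.0, 2.0):.2f} W")
```

That works out to roughly 9.65W for the whole unit and about 7.65W for the SoC, in line with the ~8W estimate; a sustained 12W average would drain the battery in about 1.6 hours.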

PSSR is comparable to DLSS3, as it works on the same basis. It's not as refined, though, as it's relatively new and requires time for tweaking.

It's not comparable to DLSS3 or DLSS4 or FSR4. It's just as simple as that.

FSR4 is a transformer (CNN-transformer hybrid), so of course it's more advanced. It's at the level of DLSS4, and the Pro is waiting for the INT8 backport that is known to be on the way.

"The neural network (and training recipe) in FSR 4's upscaler are the first results of the Amethyst collaboration," Cerny told us. "And results are excellent, it's a more advanced approach that can exceed the crispness of PSSR. I'm very proud of the work of the joint team!"
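The "INT8 backport" talk refers to running the network with 8-bit integer math, which the Pro's ML hardware is reportedly built around. As a rough illustration of what converting a network to INT8 involves, here is generic symmetric per-tensor weight quantization. This is a textbook scheme chosen as an assumption with hypothetical function names; it is not AMD's or Sony's actual method, and real ports also have to handle activations and usually need calibration or retraining to keep quality.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor INT8 quantization: w ~= q * scale, with q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the symmetric int8 range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Map int8 values back to approximate floating-point weights."""
    return [v * scale for v in q]


if __name__ == "__main__":
    w = [0.6, -1.27, 0.254, 0.0]
    q, s = quantize_int8(w)
    print(q, s)
    print(dequantize(q, s))
```

Each weight ends up within half a quantization step of its original value; the precision lost this way is one reason an INT8 version of a network may not match the original's output exactly.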

PSSR as a model is DLSS2-3 (CNN).

PSSR is close to DLSS3 only in a few games; The Last of Us 2 looks excellent with it. Apparently KCD2 and Yotei also have good implementations. The rest of the games have issues to varying degrees.