Johnny Concrete

Member

Graphics peaked with The Order 1886

"graphics peaked with Leonardo Da Vinci"

Yeah, 1440 is where it's at!

Nah, I'm good @4K. 1440p caters to the midrange users.

That's nonsense, but I'm not one of those anyway.

Eh, nah. You'll get plenty of graphical advancements on consoles. No matter what folks tell themselves, the best looking games this gen could not have been done on last gen hardware without significant compromise.

And even with $800+ graphics cards, you aren't seeing the kind of advancement, relative to that amount, that you think you are. It can be done on consoles, you'll just pay a performance price for it. It's a resource allocation, not a hardware limitation.

No game is taking advantage of even my 3090 Ti, let alone a 4090 and better. It's not happening. I want people to understand this. Those cards are capable of far, far, far more than you've been getting.

What they do allow for, however, is better framerates and that's the biggest advantage. But, as long as consoles have the presence, share, and mass pricing accessibility that they do, your shiny PC graphics card will never, ever have its parts truly exploited and put to use.

Some examples of what could/would happen if PC devs only took advantage of the latest and greatest cards:

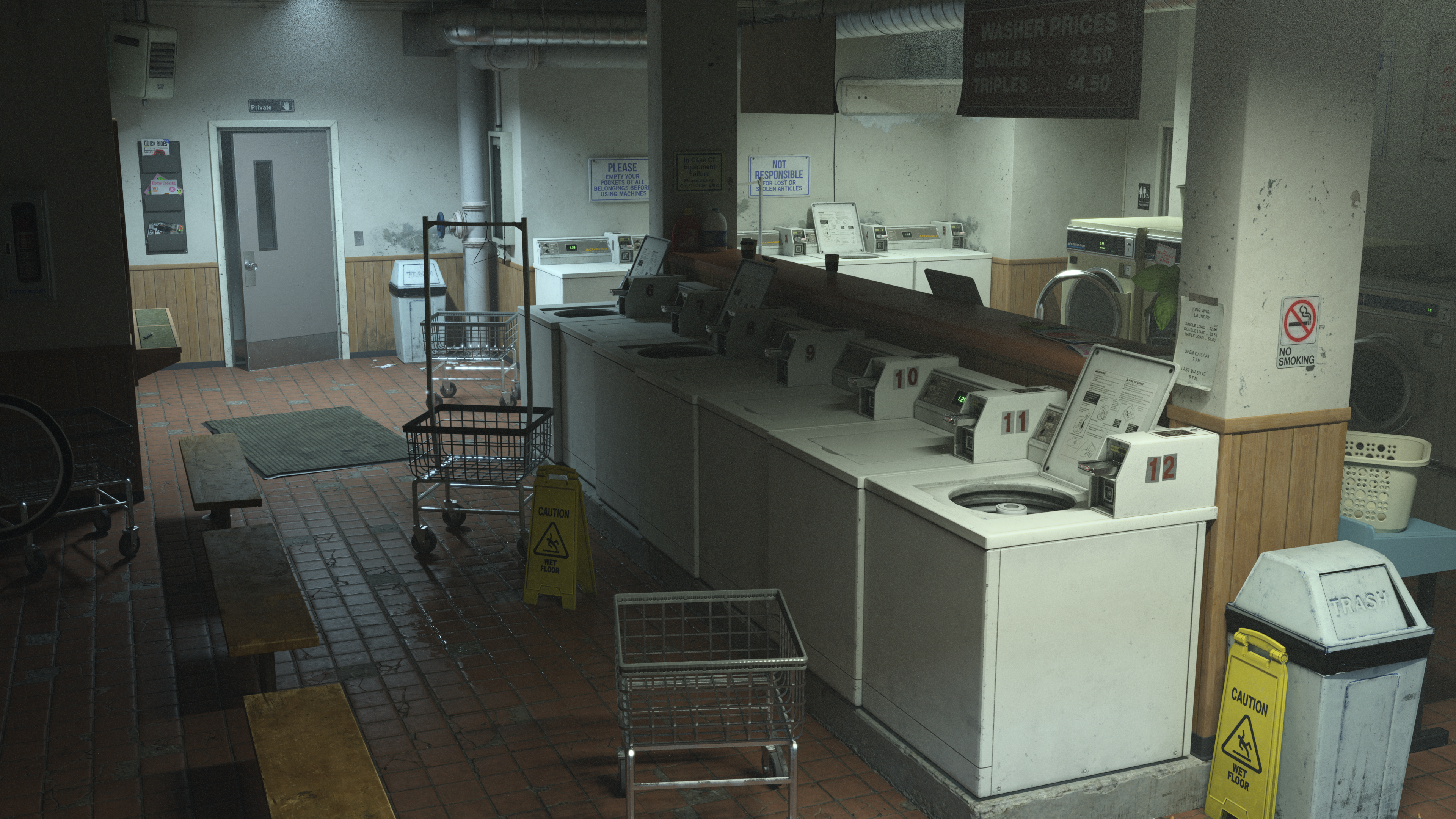

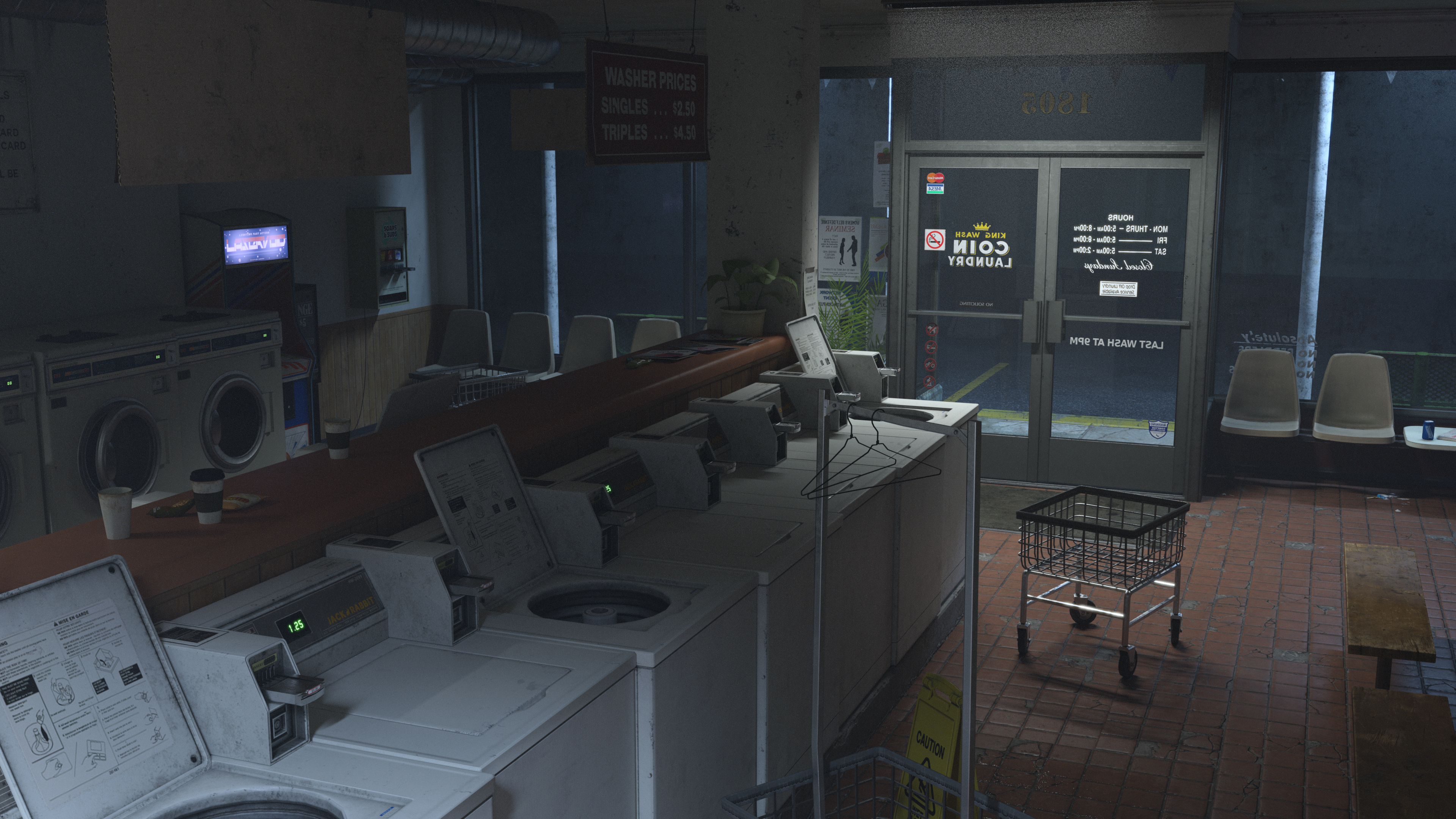

Rendered on a TITAN RTX in under four minutes in UE4 back in 2020:

Fully path-traced, 8k normal maps on nearly all surfaces, high quality fog, many dust particles (thanks to instancing). This would be the norm if PC's best cards were actually taken advantage of! And that's.....2020.

No, that wouldn't be the norm right now; not even a 4090 is that powerful. How is pre-rendered footage relevant when it comes to real-time graphics?

Powerful for what? To run that scene?

I'm not sure where those scenes are from, but if they are pre-rendered and it took 4 minutes to render on a TITAN, then obviously a 4090 isn't powerful enough to run them in real time at playable FPS. You are mixing up real-time graphics with pre-rendered scenes. I mean, sure, I agree graphics would be better if high-end was the baseline, but I wouldn't say there are no games taking advantage of a 4090: Cyberpunk barely hits 20 FPS with path tracing at 4K, and Alan Wake 2 barely stays at 30 FPS. Besides, what many people in this thread have said is true: we are hitting diminishing returns, and there will never again be such a generational jump in graphics as before.
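To put a rough number on that gap, here is a minimal sketch of the frame-budget arithmetic. It assumes the "under four minutes" figure is per frame and that quality settings stay the same; the function name `required_speedup` is made up for illustration, and the result ignores things real-time path tracers lean on, such as lower sample counts, denoising and upscaling.

```python
def required_speedup(offline_seconds_per_frame: float, target_fps: float) -> float:
    """How many times faster a frame must render to hit target_fps.

    Real-time rendering allows 1 / target_fps seconds per frame, so the
    ratio offline_time / budget simplifies to offline_time * target_fps.
    """
    if offline_seconds_per_frame <= 0 or target_fps <= 0:
        raise ValueError("render time and target FPS must be positive")
    return offline_seconds_per_frame * target_fps


if __name__ == "__main__":
    # Assumption: the four-minute render time applies to a single frame.
    offline_seconds = 4 * 60
    for fps in (30, 60):
        speedup = required_speedup(offline_seconds, fps)
        print(f"{fps} FPS needs roughly {speedup:,.0f}x the throughput")
```

Under those assumptions the same image at 30 FPS would need on the order of thousands of times the rendering throughput, which is the sense in which an offline render and a real-time frame are not directly comparable.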

It's an environment taken straight from UE4 with a few of those assets already used in games and was an example.

Point is it doesn't take rocket science to know how much better graphics would be if the high-end cards were actually taken advantage of, which is what the convo was about.

I do somewhat get where you're coming from. However, Diminishing Returns, verbatim, is about budget/return. We could have the leaps people are looking for, but we won't because it financially isn't feasible anymore. Things have stagnated on that basis, not because we are close to any graphical ceiling (which is what folks have gotten wrong). The law dictates a negative return, not capability (although graphics will eventually reach that point, but there is a very long way to go).

I mean, some of those scenes don't look that much different from the best looking games nowadays. What I'm saying is if those are pre-rendered scenes and it took 4 minutes to render them on a TITAN, then a 4090 obviously isn't powerful enough to run them in real time. Maybe some of them actually were real-time, I can't tell, as I don't know where you got them from. Diminishing returns are about graphics.

Next, unlike a closed system (console), developers aren't creating their projects with the metal in mind. Yes, there are advantages to owning a powerful rig, but they will never be used to the fullest. What is happening is like RAM — the more of it you have, the more your system will use. The better the hardware, the better the output. Absolutely. Just not to the degree it would be if that were strictly the target.

It's just UE4 assets (most of which made it to games). They are just lumped nicely together with the highest end features, such as path/raytracing (which on double checking was 40 second renders per image in 2020, not 4 minutes; my error).

Sure, there are many other ways you can improve graphics other than increasing polygon count, but almost none of them are as visually striking as the increase in polygon count once was. The fact we hit diminishing returns doesn't necessarily mean we are close to the ceiling, it just means it's getting harder and harder to get closer to the ceiling. And I don't know, I would say some games are getting pretty close to photorealistic-ish graphics.

Yeah, I agree with that. Another way you could describe diminishing returns is that the same amount of work gives you less noticeable improvement than before. So theoretically, if the game dev companies had much bigger budgets, the improvement could be bigger, but unfortunately with all the things happening in recent years, I would say most companies' budgets are getting tighter.

In any case, I think we are mostly on common ground regarding the returns. I'm mainly talking to the folks who are mostly focusing on visuals and saying it is not as simple as that. True photorealistic, in-game graphics are still years away (although Tim Sweeney predicted last year would be the year we'd see fully playable, photoreal games..... long before a pandemic poured cold water on things). We have some excellent results already but are not where we should be, and that is because of external factors (such as the continued fallout from COVID) and internal ones (such as budget). But, yes, we are much closer to the ceiling than we have ever been, even if it's going to be a while.

Only people with subpar hardware would say that. There is a HUGE difference between 4K and QHD. No DLSS setting will nullify this difference.

I jumped from a 2070 Super to a 4070 Super. Playing 4K native now and the difference is night and day.

Don't say it's unnecessary or overrated just because your setup can't keep up.

It's a lie.

No.

My setup certainly can keep up and 1440p gets the job done. 4K with my PS5, 1440p on PC with framerates that are beyond acceptable. Not a bad little trade-off.

PS5 upscales most games to 4K, not native.

I said nothing about the games, I said the PS5 is on a 4K monitor, which results in a fantastic experience. And games like GT7 are in native 4K.

If you want the unfiltered 4K experience, you need a PC that can do 4K, hooked up to a 4K monitor/TV.

4K120 VRR is where it's at.

1440p is squarely for midrange or high refresh/competitive stuff.

1440p is still competent for small screens, but 4K really is a lot better.

My eyes don't lie. On a 32 inch monitor 3 feet away, YES. The difference is very clear. Get some glasses.

I'll let you in on a secret, graphics never actually mattered at all, ever.

Disagree. It depends on the type of game, but if someone is trying to create an atmospheric, immersive and/or realistic game, graphics do matter very much.

Eyes may not lie, but people certainly do. I do not believe for one second that you can see the pixels of a 2560x1440 image at 3' on a 32" display.
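For anyone who wants to check the numbers rather than trade insults, here is a small sketch that estimates pixels per degree of visual angle for the setup being argued about (32" panel, 3 feet away). The function name is made up for illustration, and the yardstick of roughly 60 pixels per degree for 20/20 vision is a commonly quoted rule of thumb, not something established in this thread.

```python
import math


def pixels_per_degree(width_px: int, height_px: int,
                      diagonal_in: float, distance_in: float) -> float:
    """Approximate pixels covered by one degree of visual angle at screen centre."""
    # Pixel density along the diagonal (square pixels assumed).
    diagonal_px = math.hypot(width_px, height_px)
    ppi = diagonal_px / diagonal_in
    # Width of screen spanned by one degree at the given viewing distance.
    inches_per_degree = 2 * distance_in * math.tan(math.radians(0.5))
    return ppi * inches_per_degree


if __name__ == "__main__":
    viewing_distance_in = 36  # 3 feet
    for label, (w, h) in {"1440p": (2560, 1440), "4K": (3840, 2160)}.items():
        ppd = pixels_per_degree(w, h, 32, viewing_distance_in)
        print(f"{label} on a 32-inch panel at 3 ft: {ppd:.1f} pixels per degree")
```

On these assumptions 1440p comes out at about 58 pixels per degree and 4K at about 87, so 1440p sits right around the rule-of-thumb threshold, which may be why people with different eyesight report different things.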