VRAM consumption increases by 500 MB (more as I traversed).

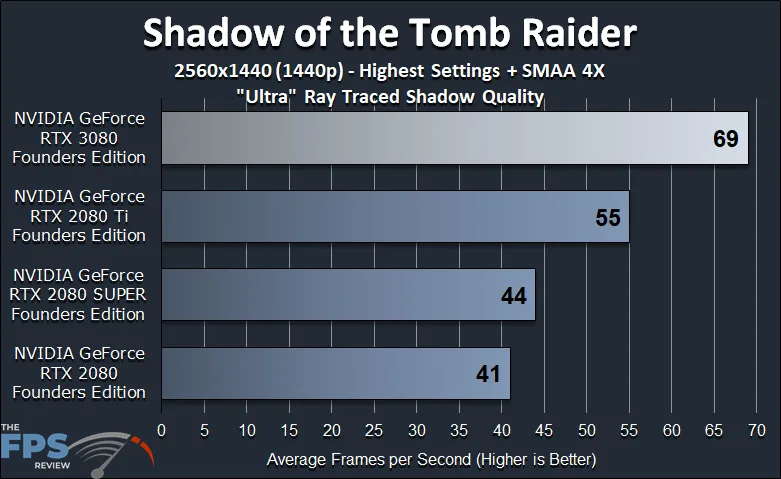

Performance takes an 18-22% drop.

Now you can search for your next goalpost. Good luck.

If RT shadows increase VRAM consumption on the menu screen, there's no logic in denying that they would also increase VRAM consumption whenever ray-traced shadows are actually being cast. You're simply nitpicking. This is not even a legitimate tech discussion, since you don't even know what kind of cost or memory footprint ray-traced shadows have. I've been playing countless games with ray-traced shadows and other mixed ray-traced effects for 2 years now; at least I have actual experience and knowledge of how they impact the resources I have, and I can speak from that experience. I cannot say the same for you, considering you nitpicked a part of the video where the GPU's VRAM buffer is already under heavy pressure, so any change in graphics settings will not properly represent the VRAM impact it might have.

How can this discussion not go sour when you have a clear agenda on this specific topic and repeat the same misinformed opinions over and over and over again? Sorry, but this is a discussion forum, and if you have a misinformed opinion, you're bound to be corrected. I'm not even discussing anything with you. I'm merely stating facts with factual proof and correcting your misinformed opinions. Nothing more, nothing less.

You're overcomplicating this and drawing the wrong conclusions. That is ALL. The game is not using any secret-sauce magic on PS5. It runs exactly as it should when you have enough VRAM budget (10 GB, and you need a 12 GB card to make sure the game can allocate an uninterrupted 10 GB; 10 GB GPUs themselves do not count, since part of their memory is already taken by the OS and other apps).
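A minimal sketch of the budget argument above, in Python. The 10 GB target is the figure from this post; the 1.5 GB of VRAM already held by the OS, browser and overlays is an assumed, illustrative number, and the function names are hypothetical.

```python
# Hypothetical sketch: can a card give the game an uninterrupted VRAM budget?
# ASSUMPTION: OS_AND_APPS_GB is an illustrative guess at VRAM already held by
# the desktop, browser, overlays, etc.; it varies from system to system.
GAME_BUDGET_GB = 10.0  # budget the game targets (figure from this post)
OS_AND_APPS_GB = 1.5   # assumed background usage, not a measured value


def free_for_game(card_vram_gb: float, background_gb: float = OS_AND_APPS_GB) -> float:
    """VRAM left for the game once background usage is subtracted."""
    return max(card_vram_gb - background_gb, 0.0)


def fits_budget(card_vram_gb: float,
                budget_gb: float = GAME_BUDGET_GB,
                background_gb: float = OS_AND_APPS_GB) -> bool:
    """True if the whole budget fits without spilling into system RAM."""
    return free_for_game(card_vram_gb, background_gb) >= budget_gb


for vram_gb in (8, 10, 12, 16):
    print(f"{vram_gb:>2} GB card: {free_for_game(vram_gb):.1f} GB free, fits: {fits_budget(vram_gb)}")
```

With that assumed overhead, 8 GB and 10 GB cards fall short of the 10 GB target while a 12 GB card clears it; adjust `OS_AND_APPS_GB` to whatever your own system actually reports.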

The game is literally designed around a 10 GB VRAM budget on PS5. If you have that, you won't have problems, although the CPU requirements could be higher. I believe you're one of those people who think the PS5's SSD somehow acts as a VRAM buffer. Dude, you're not comprehending this clearly. No SSD can ever be a substitute for VRAM. You can stream textures, but on PC that only leads to slower texture loading. That is quite literally what the benefit of the PS5's streaming system is: it loads textures in mere seconds, while on PC it could take 3-10 seconds depending on the region. THAT'S it. The only thing DirectStorage would help with is THAT, not the performance itself.

Performance itself depends on how short on VRAM you are.

Take a GOOD look at this chart. At 4K, the 3070 (8 GB) is only 28% faster than the 3060 DUE TO VRAM PRESSURE creeping in.

Or look at the RTX 3080: it is 40% slower than the 3090 Ti at NATIVE 4K. DLSS reduces the VRAM pressure, and all of a sudden it is only 8% slower.

The RTX 3080 lost roughly 30% of its performance due to VRAM pressure. The video you've provided is full of it; it is not representative of actual 3080 performance. Neither DirectStorage nor a sophisticated PS5-like streaming system on PC would solve this. The game still demands a clear, uninterrupted 10 GB budget for 4K/ultra textures. What part of this do you not understand? Once you provide that, performance is great, right where you'd expect from these GPUs.
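The arithmetic behind the 3080 numbers can be sketched as follows. It assumes "X% slower than the 3090 Ti" means the 3080 delivers (100 - X)% of the 3090 Ti's frame rate in the same test; the chart's exact methodology is not shown here.

```python
# Sketch of the relative-performance arithmetic for the RTX 3080 example.
# ASSUMPTION: "X% slower than the 3090 Ti" is read as the 3080 delivering
# (100 - X)% of the 3090 Ti's frame rate in the same scene.

def relative_perf(percent_slower: float) -> float:
    """Fraction of the reference GPU's frame rate, e.g. 40% slower -> 0.60."""
    return 1.0 - percent_slower / 100.0


native = relative_perf(40)  # 3080 vs 3090 Ti at native 4K
dlss = relative_perf(8)     # 3080 vs 3090 Ti with DLSS

# How much the gap to the 3090 Ti closes, in percentage points.
gap_points = (dlss - native) * 100
# How far the native result sits below the DLSS-relative result, as a ratio.
lost_vs_dlss = (1 - native / dlss) * 100

print(f"gap closes by {gap_points:.0f} percentage points")
print(f"native 4K result is {lost_vs_dlss:.0f}% below the DLSS-relative result")
```

Read as percentage points, the gap closes by 32 points; read as a ratio, the native 4K result sits about 35% below what the card manages once DLSS eases the VRAM pressure. Either way the loss is in the 30% range.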

The game already uses system RAM as a substitute for VRAM. This is why performance is lower. Somehow you believe that using the SSD as a substitute for VRAM (tens of times slower than RAM) would solve the issue or be a remedy for it. It would not. It would be even worse. You either have enough budget or you don't. You either take the performance hit or play around it.

The game is not reducing its memory footprint requirements on PS5 by utilizing the SSD. It merely uses the SSD so that textures can be loaded instantly and seamlessly. This is what PC ports have been lacking: it really takes some serious time for some textures to load, both in cutscenes and during in-game traversal. Most devs solve this the same way, and we call it texture streaming. The PS5 is simply super fast at it. The PS5 is not using its SSD to reduce VRAM requirements. Those requirements are still there.
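To put the "slower than RAM" point in rough numbers, here is a sketch of how much data each memory tier could move within a single 60 fps frame. The bandwidths are assumed ballpark theoretical peaks (roughly an RTX 3080's GDDR6X, dual-channel DDR4-3200, a PCIe 4.0 x16 link and a PCIe 3.0 NVMe SSD), not measurements from this game.

```python
# Sketch: how much data each memory tier can move within one frame at 60 fps.
# ASSUMPTION: ballpark theoretical peak bandwidths for illustration only;
# real throughput is lower and depends on the system.
BANDWIDTH_GB_S = {
    "VRAM (RTX 3080 GDDR6X)": 760.0,
    "System RAM (DDR4-3200, dual channel)": 51.2,
    "PCIe 4.0 x16 link (GPU <-> RAM)": 32.0,
    "NVMe SSD (PCIe 3.0)": 3.5,
}

FRAME_TIME_S = 1 / 60  # about 16.7 ms per frame at 60 fps


def gb_per_frame(bandwidth_gb_s: float, frame_time_s: float = FRAME_TIME_S) -> float:
    """Upper bound on the data (in GB) a tier can deliver in one frame."""
    return bandwidth_gb_s * frame_time_s


vram_bw = BANDWIDTH_GB_S["VRAM (RTX 3080 GDDR6X)"]
for tier, bw in BANDWIDTH_GB_S.items():
    mb = gb_per_frame(bw) * 1000  # decimal megabytes
    print(f"{tier:<38}{mb:9.0f} MB/frame   VRAM/tier = {vram_bw / bw:.1f}")
```

Under these assumed figures, the NVMe SSD moves roughly 15 times less data per frame than system RAM and over 200 times less than VRAM, which is why spilling the working set onto storage could not stand in for missing VRAM.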

The solution is to reduce VRAM pressure if you're VRAM-bound. DLSS helps with that. Even ray-traced shadows have their own VRAM cost (as evidenced above). You can reduce texture quality as a last resort; if you have to, you have to. There's no other way.
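As a rough illustration of why DLSS eases VRAM pressure, this sketch compares the memory taken by resolution-dependent render targets at native 4K against DLSS Quality's 2560x1440 internal resolution. `BYTES_PER_PIXEL` is a hypothetical total, not this game's actual buffer layout, and the numbers cover render targets only: textures are a separate budget, and the 4K output buffers still exist when DLSS is on.

```python
# Back-of-the-envelope sketch: VRAM used by resolution-dependent render targets.
# ASSUMPTION: BYTES_PER_PIXEL is a made-up total across all such buffers
# (G-buffer, depth, shadow/denoiser targets, ...); real engines differ.
BYTES_PER_PIXEL = 48  # hypothetical


def render_target_mib(width: int, height: int, bytes_per_pixel: int = BYTES_PER_PIXEL) -> float:
    """Memory taken by resolution-dependent buffers, in MiB."""
    return width * height * bytes_per_pixel / 2**20


native_4k = render_target_mib(3840, 2160)     # native 4K rendering
dlss_quality = render_target_mib(2560, 1440)  # DLSS Quality's internal resolution for 4K output

print(f"native 4K:    {native_4k:.0f} MiB")
print(f"DLSS Quality: {dlss_quality:.0f} MiB")
print(f"saved:        {native_4k - dlss_quality:.0f} MiB")
```

With that made-up 48 bytes per pixel, the lower internal resolution frees a couple of hundred MiB. A real engine with more or fewer targets scales the same way, since the saving is proportional to pixel count (the internal resolution has about 44% of native 4K's pixels).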

I won't reply any further. You can do whatever you want with this information.