Rikkori

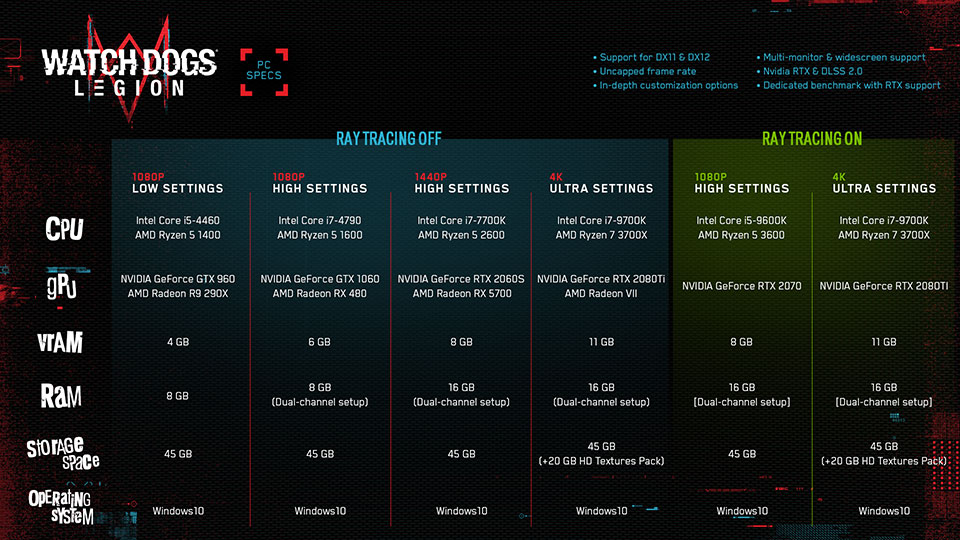

Oh, it's going to be fine for this game for sure (especially with DLSS on), but I wanted to point out the trend, because some people are still in denial about VRAM requirements going up.

Ampere cards use GDDR6X, so that might have some impact. I'm not an expert on the matter, but I've read that it offers a power advantage over standard GDDR6. How much, and to what practical effect, I'm not certain, but it's something to keep in mind.