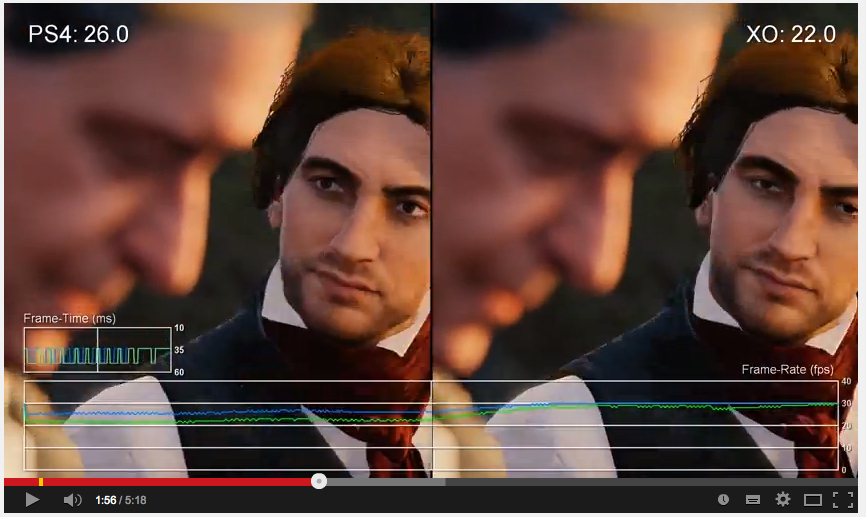

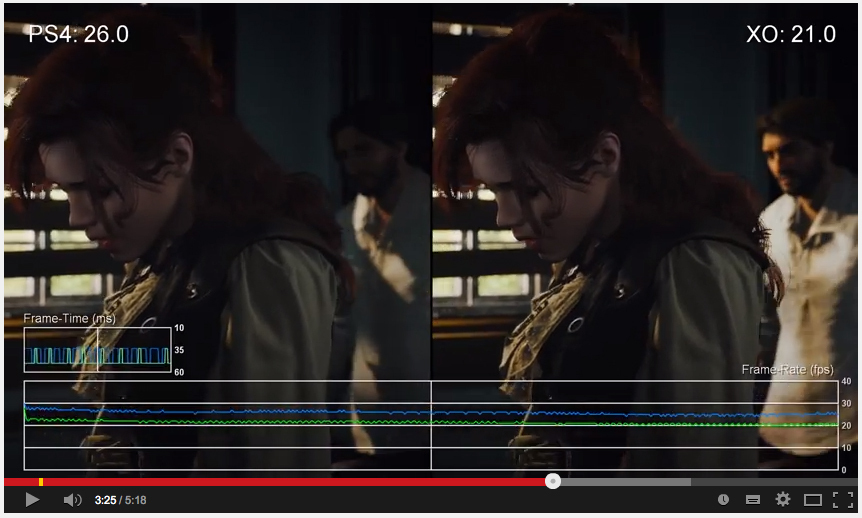

Clearly, a 9% increase accumulates across the 6 active game CPU cores.

I wonder whether the crowd AI is pinned to a few CPU cores or scheduled across all the CPU cores as they become available.

It does not work like that though. A per-core gain does not add up across cores: if the AI were locked to 2 cores, it would still only be 9% faster.
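To put rough numbers on that, here is a quick back-of-the-envelope sketch. It assumes the work splits evenly across the cores and that the 9% is a per-core gain; the function names and the 600 work units are made up for illustration and have nothing to do with how the game actually schedules anything.

```python
def frame_time(work_units, cores, per_core_speed):
    """Time to finish evenly split work: total work / (cores * speed per core)."""
    return work_units / (cores * per_core_speed)


def speedup(cores, gain=0.09, work_units=600.0):
    """Ratio of baseline time to time with every core `gain` faster."""
    base = frame_time(work_units, cores, 1.0)
    faster = frame_time(work_units, cores, 1.0 + gain)
    return base / faster


# Hypothetical comparison: AI locked to 2 cores vs. spread over 6 cores.
for n in (2, 6):
    print(n, round(speedup(n), 2))
```

Both lines print 1.09. The core count cancels out of the ratio, so under these assumptions the gain stays at 9% no matter how many cores share the work.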

Besides, performance sucks with or without crowds, so I doubt a CPU bottleneck is the reason for the shitty performance anyway.