As @LordOfChaos pointed out: off-the-shelf Navi won't feature an MCM design. Hopefully Navi is post-GCN and breaks out of that shell, surpassing its limits.

I wasn't aware Nvidia hit a core limit, or is this speculation?

In the paper they talk about needing to use multi-GPU modules to go above 128 SMs, and note that a 256-SM GPU has 4.5x the SMs of the largest single Nvidia GPUs out now.

Also, Nvidia moved from 128 CUDA cores per SM to 64 CUDA cores.

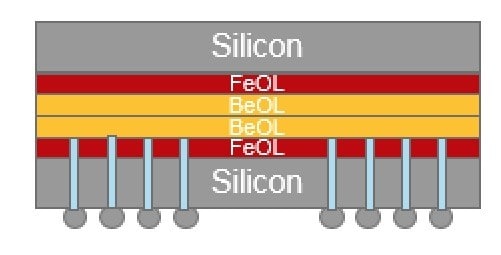

3.2 MCM-GPU and GPM Architecture

As discussed in Sections 1 and 2, moving forward beyond 128 SM counts will almost certainly require at least two GPMs in a GPU. Since smaller GPMs are significantly more cost-effective [31], in this paper we evaluate building a 256 SM GPU out of four GPMs of 64 SMs each. This way each GPM is configured very similarly to today's biggest GPUs. Area-wise each GPM is expected to be 40% - 60% smaller than today's biggest GPU assuming the process node shrinks to 10nm or 7nm. Each GPM consists of multiple SMs along with their private L1 caches. SMs are connected through the GPM-Xbar to a GPM memory subsystem comprising a local memory-side L2 cache and DRAM partition. The GPM-Xbar also provides connectivity to adjacent GPMs via on-package GRS [45]
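To put rough numbers on the "smaller GPMs are significantly more cost-effective" point, here is a minimal sketch using the simple Poisson yield model, Y = exp(-A * D0). The model choice, the defect density `D0`, and the 400 mm² comparison die are my own assumptions for illustration and are not from the paper; only the 4 x 64 SM configuration and the roughly 800 mm² reticle limit come from the quoted text.

```python
import math

def poisson_yield(area_mm2: float, defects_per_cm2: float) -> float:
    """Fraction of defect-free dies under the simple Poisson yield model."""
    area_cm2 = area_mm2 / 100.0  # 1 cm^2 = 100 mm^2
    return math.exp(-area_cm2 * defects_per_cm2)

# Configuration evaluated in the paper: four GPMs of 64 SMs each.
GPMS_PER_GPU = 4
SMS_PER_GPM = 64
total_sms = GPMS_PER_GPU * SMS_PER_GPM

# ASSUMPTION: illustrative defect density, not a real foundry figure.
D0 = 0.2  # defects per cm^2

big_die = poisson_yield(800.0, D0)    # die near the quoted reticle limit
small_die = poisson_yield(400.0, D0)  # hypothetical die of half that area

print(f"total SMs: {total_sms}")
print(f"yield at 800 mm^2: {big_die:.1%}")
print(f"yield at 400 mm^2: {small_die:.1%}")
```

Whatever value you pick for `D0`, the exponential means yield falls off faster than linearly with area, which is the intuition behind splitting one huge die into several smaller modules. Real cost models also account for packaging and for salvaging partially defective dies, which this sketch ignores.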

1 INTRODUCTION

GPU-based compute acceleration is the main vehicle propelling the performance of high performance computing (HPC) systems [12, 17, 29], machine learning and data analytics applications in large-scale cloud installations, and personal computing devices [15, 17, 35, 47]. In such devices, each computing node or computing device typically consists of a CPU with one or more GPU accelerators. The path forward in any of these domains, either to exascale performance in HPC, or to human-level artificial intelligence using deep convolutional neural networks, relies on the ability to continuously scale GPU performance [29, 47]. As a result, in such systems, each GPU has the maximum possible transistor count at the most advanced technology node, and uses state-of-the-art memory technology [17]. Until recently, transistor scaling improved single GPU performance by increasing the Streaming Multiprocessor (SM) count between GPU generations. However, transistor scaling has dramatically slowed down and is expected to eventually come to an end [7, 8]. Furthermore, optic and manufacturing limitations constrain the reticle size which in turn constrains the maximum die size (e.g. ≈ 800 mm² [18, 48]). Moreover, very large dies have extremely low yield due to large numbers of irreparable manufacturing faults [31]. This increases the cost of large monolithic GPUs to undesirable levels. Consequently, these trends limit future scaling of single GPU performance and potentially bring it to a halt. An alternate approach to scaling performance without exceeding the maximum chip size relies on multiple GPUs connected on a PCB, such as the Tesla K10 and K80 [10]. However, as we show in this paper, it is hard to scale GPU workloads on such "multi-GPU" systems, even if they scale very well on a single GPU. This is due

2.1 GPU Application Scalability

To understand the benefits of increasing the number of GPU SMs, Figure 2 shows performance as a function of the number of SMs on a GPU. The L2 cache and DRAM bandwidth capacities are scaled up proportionally with the SM count, i.e., 384 GB/s for a 32-SM GPU and 3 TB/s for a 256-SM GPU. The figure shows two different performance behaviors with increasing SM counts. First is the trend of applications with limited parallelism whose performance plateaus with increasing SM count (Limited Parallelism Apps). These applications exhibit poor performance scalability (15 of the total 48 applications evaluated) due to the lack of available parallelism (i.e. number of threads) to fully utilize larger number of SMs. On the other hand, we find that 33 of the 48 applications exhibit a high degree of parallelism and fully utilize a 256-SM GPU. Note that such a GPU is substantially larger (4.5×) than GPUs available today. For these High-Parallelism Apps, 87.8% of the linearly-scaled theoretical performance improvement can potentially be achieved if such a large GPU could be manufactured. Unfortunately, despite the application performance scalability with the increasing number of SMs, the observed performance gains are unrealizable with a monolithic single-die GPU design. This is because the slowdown in transistor scaling [8] eventually limits the number of SMs that can be integrated onto a given die area. Additionally, conventional photolithography technology limits the maximum possible reticle size and hence the maximum possible
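The scaling figures in that excerpt are easy to sanity-check. A quick sketch of the arithmetic, using only numbers quoted above (the variable names are mine):

```python
# Figures quoted in the paper excerpt above.
BASE_SMS = 32
BASE_BW_GBPS = 384.0   # GB/s for the 32-SM configuration
TARGET_SMS = 256

# Bandwidth is scaled proportionally with SM count.
bw_per_sm = BASE_BW_GBPS / BASE_SMS    # GB/s per SM
target_bw = bw_per_sm * TARGET_SMS     # GB/s for the 256-SM configuration

# "4.5x larger than GPUs available today" implies roughly this many SMs today.
implied_current_sms = TARGET_SMS / 4.5

print(f"bandwidth per SM: {bw_per_sm} GB/s")
print(f"256-SM bandwidth: {target_bw} GB/s (about {target_bw / 1000:.2f} TB/s)")
print(f"implied current SM count: {implied_current_sms:.1f}")
```

The proportional scaling works out to 12 GB/s per SM, which is consistent with the "3 TB/s" quoted for 256 SMs. The implied SM count for "GPUs available today" comes out a little under 57, which as far as I know lines up with the 56 SMs enabled on the Tesla P100, though the excerpt does not name the card it is comparing against.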

Our optimized MCM-GPU architecture achieves a 44.5% speedup over the largest possible monolithic GPU (assumed as a 128 SMs GPU), and comes within 10% of the performance of an unbuildable similarly sized monolithic GPU.
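To see what those two percentages imply relative to each other, here is a small sketch that normalizes the 128-SM monolithic GPU to 1.0. Reading "within 10%" as "at least 90% of the monolithic 256-SM performance" is my interpretation and an assumption, not something the quote spells out.

```python
# Baseline: the largest buildable monolithic GPU (128 SMs), normalized to 1.0.
MONO_128 = 1.0

# Quoted: the optimized MCM-GPU is 44.5% faster than that baseline.
mcm_256 = MONO_128 * (1.0 + 0.445)

# ASSUMPTION: "within 10%" is read as MCM >= 0.90 x the hypothetical
# monolithic 256-SM GPU, which bounds that GPU's performance from above.
mono_256_upper = mcm_256 / 0.90

print(f"MCM-GPU (256 SMs):            {mcm_256:.3f}x baseline")
print(f"monolithic 256 SMs, at most:  {mono_256_upper:.3f}x baseline")
```

Under that reading, doubling the SM count on an ideal monolithic die would give at most about 1.6x over the 128-SM part across the evaluated workloads, and the MCM design gives up only a small slice of that.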

https://www.pcgamer.com/rtx-2080-everything-you-need-to-know/

Nvidia has reworked the SMs (streaming multiprocessors) and trimmed things down from 128 CUDA cores per SM to 64 CUDA cores. The Pascal GP100 and Volta GV100 also use 64 CUDA cores per SM, so Nvidia has standardized on a new ratio of CUDA cores per SM. Each Turing SM also includes eight Tensor cores and one RT core, plus four texturing units. The SM is the fundamental building block for Turing, and can be replicated as needed.

For traditional games, the CUDA cores are the heart of the Turing architecture. Nvidia has made at least one big change relative to Pascal, with each SM able to simultaneously issue both floating-point (FP) and integer (INT) operations—and Tensor and RT operations as well. Nvidia says this makes the new CUDA cores "1.5 times faster" than the previous generation, at least in theory.

All Turing GPUs announced so far will be manufactured using TSMC's 12nm FinFET process. The TU104 used in the GeForce RTX 2080 has a maximum of 48 SMs and a 256-bit interface, with 13.6 billion transistors and a die size measuring 545mm². That's a huge chip, larger even than the GP102 used in the 1080 Ti (471mm² and 11.8 billion transistors), which likely explains part of the higher pricing. The GeForce RTX 2080 disables two SMs but keeps the full 256-bit GDDR6 configuration.
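Since the SM is described as the building block that gets replicated, the unit counts follow directly from the per-SM figures in the article. A minimal sketch of that arithmetic (the `TuringConfig` class and helper names are hypothetical, just for illustration):

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class TuringConfig:
    """Per-SM unit counts as quoted in the article; `sms` varies per chip."""
    sms: int
    cuda_per_sm: int = 64
    tensor_per_sm: int = 8
    rt_per_sm: int = 1
    tex_per_sm: int = 4

    def totals(self) -> dict:
        # Every unit type scales linearly with the number of enabled SMs.
        return {
            "cuda": self.sms * self.cuda_per_sm,
            "tensor": self.sms * self.tensor_per_sm,
            "rt": self.sms * self.rt_per_sm,
            "tex": self.sms * self.tex_per_sm,
        }

def density_mtr_per_mm2(transistors_billion: float, die_mm2: float) -> float:
    """Transistor density in millions of transistors per mm^2."""
    return transistors_billion * 1000.0 / die_mm2

full_tu104 = TuringConfig(sms=48)     # full chip
rtx_2080 = TuringConfig(sms=48 - 2)   # RTX 2080 disables two SMs

print("full TU104:", full_tu104.totals())
print("RTX 2080:  ", rtx_2080.totals())
print(f"TU104 density: {density_mtr_per_mm2(13.6, 545):.1f} MTr/mm^2")
print(f"GP102 density: {density_mtr_per_mm2(11.8, 471):.1f} MTr/mm^2")
```

The density comparison is a side calculation from the quoted transistor counts and die sizes: the two chips come out nearly identical per mm², so the TU104 is bigger mostly because it carries more transistors, not because it is packed more tightly.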