llien

Banned

No, dumbo. The 2 newest pics. December 2020

You've just failed to comprehend "7 newest games".

It surely didn't get worse after the Resident Evil 8 release, where even the 6800 XT beats the 3090...

More than enough.

I have a friend who wants to trade with me: he would take my 6900 XT and give me his Suprim X model, brand new and sealed (not the low hash rate one), plus a bit more than $650 US.

Now I am looking for something that runs all new and upcoming games at 2k 140 frames with the DLSS Quality option.

I checked fast-paced games like Cold War, and yeah, with DLSS it can even reach 180 frames.

But there are some games like Cyberpunk where it's around 90 frames with DLSS on Quality.

Now, for open-world or single-player games, I am ok with 80/90 frames if everything ultra settings. DLSS most of the time produces an even better quality image than native in my eyes (or let's say they are so close I can't tell the difference).

But in games like COD? I need a locked 144 frames as my monitor.

To be fair, the 6900 XT gets about the same results, but at native (in COD it sometimes goes below 144 on the 6900 XT).

The 6900 XT I have is a really nice one too, a water-cooled Asus card. But I am worried about the closed AIO: it will die at some point and then the card will have to be RMA'd, etc. (I can't even swap the AIO for something else because of the weird shape of the pump; otherwise it wouldn't have been a concern).

So I am wondering if the 3070 is fine as a 2k high-frame-rate card, or whether I should forget about the money and keep the 6900 XT.

The other reason, to be honest, is that I feel these cards (the 6900 XT, that is) are expensive too. So at the 3070's price, I feel almost no regret in spending this little compared to a super expensive card. Not sure if what I am saying makes sense to some of you lol

I can still do 1440p and 80+ fps in most games with a 1070, so I'd say yes.

Overkill for Ultra settings in next-gen games at 1440p? So you mean to say it'll do 144+fps?

Why are the 1080p and 1440p ComputerBase benchmarks missing from your post?

People really need to stop saying AMD is 1st in raster. It never was, the 3090 is ahead. The 3080TI is ahead as well. In every situation, in every resolution, ray tracing, no ray tracing. In EVERY case.

it's the typical mister racer mantra: "oh, my 4 yo gpu beats consoles just fine. It's future-proof"

I honestly don't know any pc guy with a 4 yo card. They're always upgrading, it's like a ritual in their cult or something...

I agree Ultra settings are overrated and I personally never shoot for it unless there's meaningful visual gain from it. But OP specifically mentioned "Ultra settings", "144" and "next-gen gaming" in the title, a 3070 simply won't be able to deliver 144fps on ultra settings in next-gen games.

I can still do 1440p and 80+ fps in most games with a 1070, so I'd say yes.

"Ultra settings" is generic jargon and it doesn't mean shit. If you do stupid, inefficient shit you can have a single setting, say "SSAA 8X", completely destroy your performance even on a 3090.

Yeah. There are tons of people on GAF with 1070s, 1080s or 1080 Tis.

So when you said the 3080 Ti was ahead in every resolution, you were just referring to the 3 slot air/liquid cooled factory OC versions?

They've only tested those models at 4k. You know, how the thousand dollar+ 4k cards run at CPU-bound 1080p isn't really of interest, since they're, you know, 4k cards. If you want every resolution, the other site has them.

ASUS GeForce RTX 3080 Ti STRIX LC Liquid Cooled Review

The ASUS RTX 3080 Ti STRIX LC is THE overkill RTX 3080 Ti. It comes with a pre-filled watercooling unit, that provides cooling for the GPU and memory chips. Thanks to a factory overclock to 1830 MHz, this is the fastest RTX 3080 Ti we're testing today, but also the most expensive.

www.techpowerup.com

Showing Nvidia ahead at every resolution, even with their selection of titles, where you have outliers massively favouring AMD and skewing the results. Without Valhalla the gap would be even bigger. Are we still pushing Hardware Unboxed claims from last year that RDNA2 is better at 1440p? Even their more recent tests show otherwise now.

The 6800 XT *is* ahead of the 3080 at 1440p, but it remains to be seen if the 6900 XT is ahead of the (stock) 3080 Ti.

I think that's a massive downgrade. Keep that 6900XT, and FSR is coming. Not to mention going from 16GB VRAM to 10GB.

Without a doubt it's a downgrade, it's just a question of how big a hit he is willing to take.

I too think it's a no-brainer keeping the 6900.

People really need to stop saying AMD is 1st in raster. It never was, the 3090 is ahead. The 3080TI is ahead as well. In every situation, in every resolution, ray tracing, no ray tracing. In EVERY case. DLSS doesn't need to work with every game, just the demanding ones. Which at this point it does. With the inclusion of Red Dead 2, we've got pretty much all the heavy titles covered with DLSS, with the exception of Horizon and the AMD-partnered Valhalla. DLSS is a pretty safe bet. Games are coming out monthly now and we have reached a point where it's not even announced that they use DLSS, it's just there, in the menu. Necromunda Hired Gun came out a few days ago, and people found out it has DLSS from reviews, because they saw it in the menu. It's starting to become natural to have DLSS in new games.

If I was building a new PC, it'd be either a 3090 or a 6900 XT. The 3090 for more VRAM and better RT performance. DLSS has very low support, while FSR will have an insane number of games supporting it going forward due to being GPU/API agnostic; even an Nvidia card can use it.

And the image should be more stable than DLSS in motion.

Well, officially 2K is 2048x1080, which is close to 1920x1080 (1080p). It's the equivalent of 4K being 4096x2160, while Ultra HD is 3840x2160.

DLSS is in nearly every big game released since last year. It's the exact opposite of very low support. It just gets added into more and more games. UE4 has it as a toggle and now Unity has it as well. If FSR is worse than any other proprietary solution, and even the UE5 one, nobody is gonna use it.

It's much closer to 1080p than 2560x1440.

I don't know why people started talking about "2K", I guess it's for convenience, but it's technically wrong.
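For anyone who wants to check the numbers, here is a quick sketch that compares the pixel counts of the resolutions mentioned above. The dictionary keys and helper names are just made up for illustration; the only inputs are the width/height figures already quoted in this thread.

```python
# Pixel counts for the resolutions discussed above.
# The labels and helper names are illustrative, not from any standard library.
RESOLUTIONS = {
    "2K": (2048, 1080),
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "Ultra HD": (3840, 2160),
    "4K": (4096, 2160),
}


def pixel_count(name):
    """Total number of pixels for a named resolution."""
    width, height = RESOLUTIONS[name]
    return width * height


def pixel_ratio(name_a, name_b):
    """How many times more pixels name_a has than name_b."""
    return pixel_count(name_a) / pixel_count(name_b)


if __name__ == "__main__":
    for name in RESOLUTIONS:
        print(f"{name:>8}: {pixel_count(name):>9,} pixels")
    print(f"2K vs 1080p: {pixel_ratio('2K', '1080p'):.2f}x")
    print(f"1440p vs 2K: {pixel_ratio('1440p', '2K'):.2f}x")
```

By pixel count, 2048x1080 is only about 7% larger than 1080p, while 2560x1440 has roughly two thirds more pixels than 2048x1080, which is why calling 1440p "2K" is misleading.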

As said before: GPU/API agnostic. It can work on any console, and can work on old Nvidia cards. It could work on Switch as well. So why wouldn't devs take advantage of that?

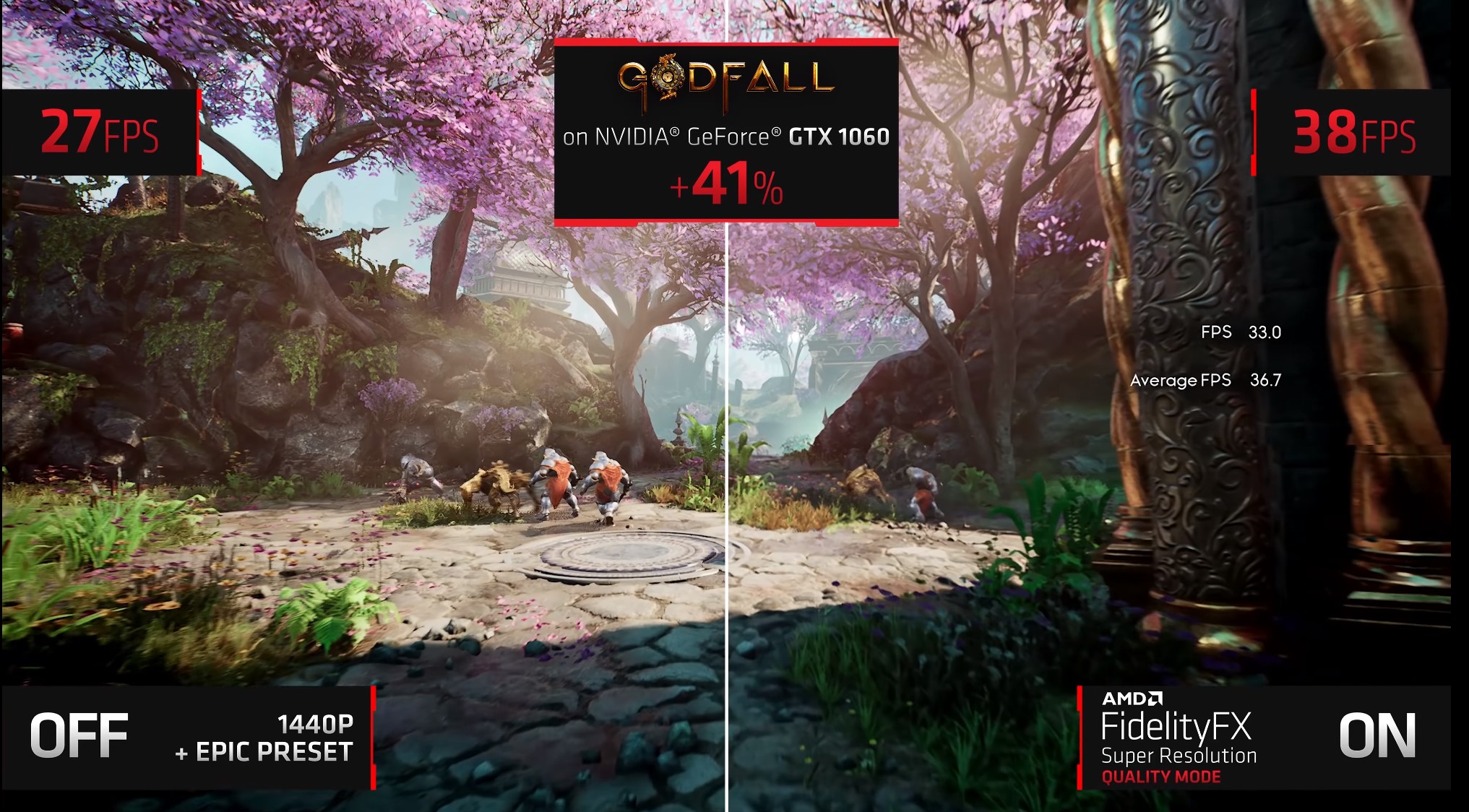

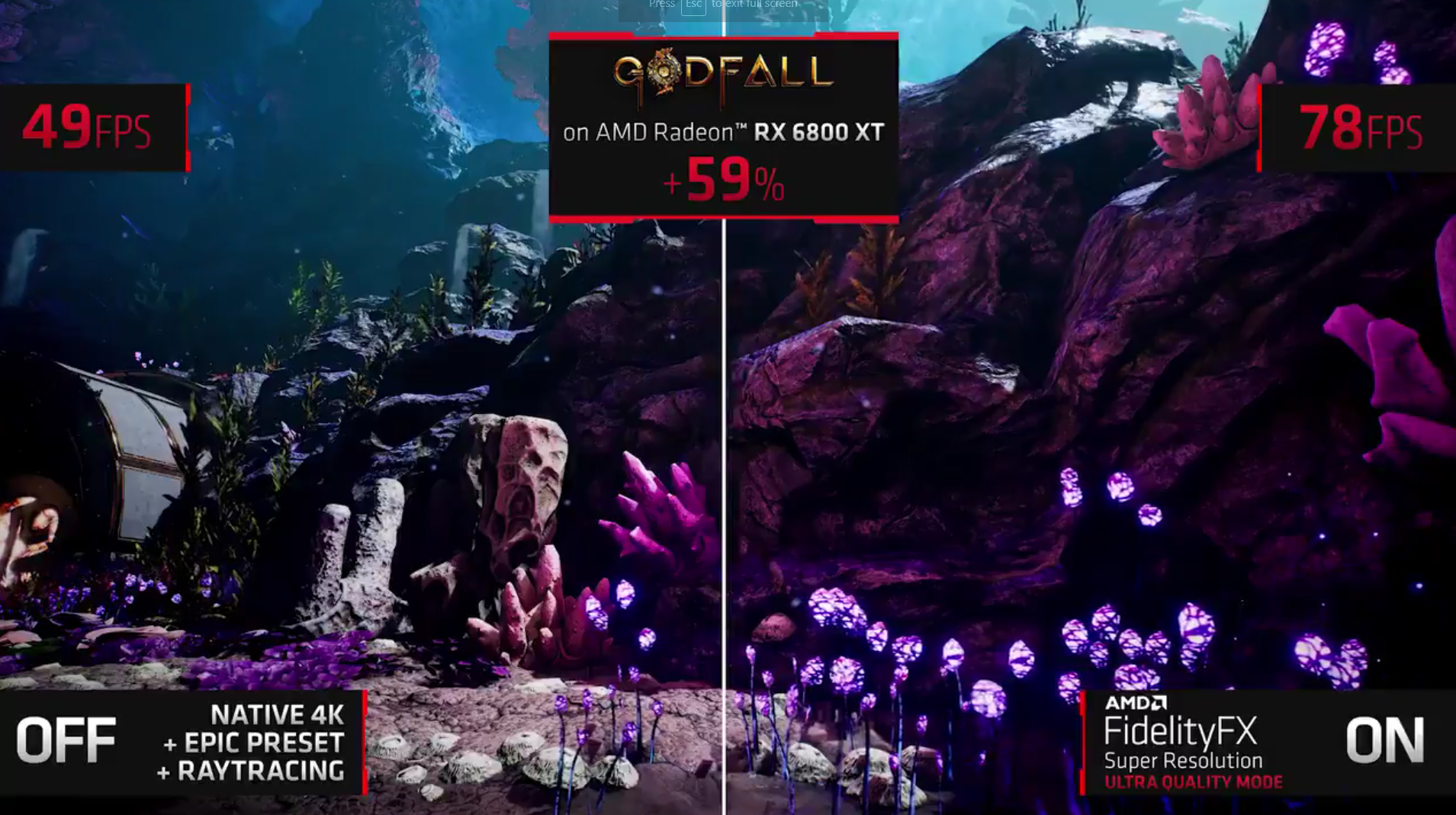

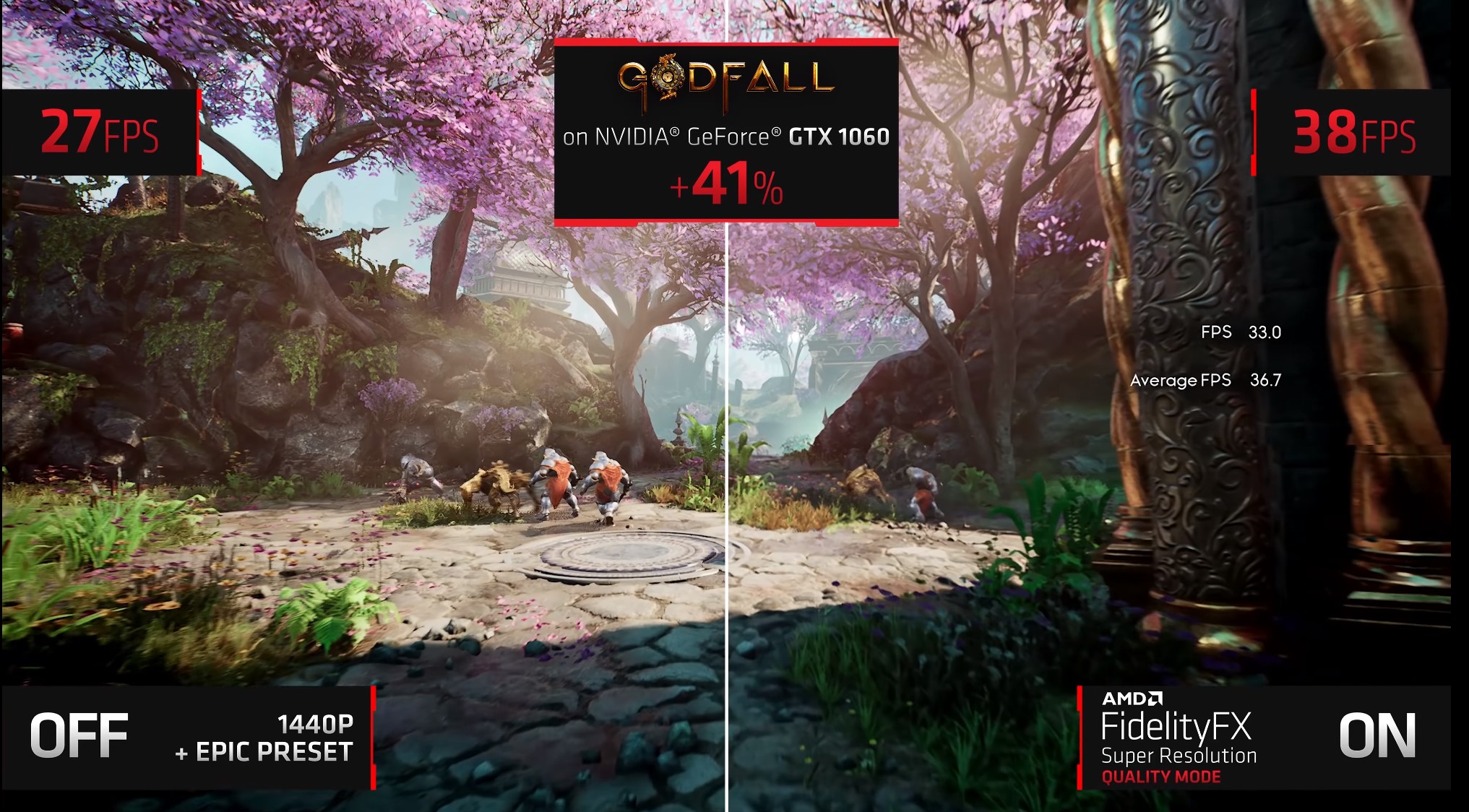

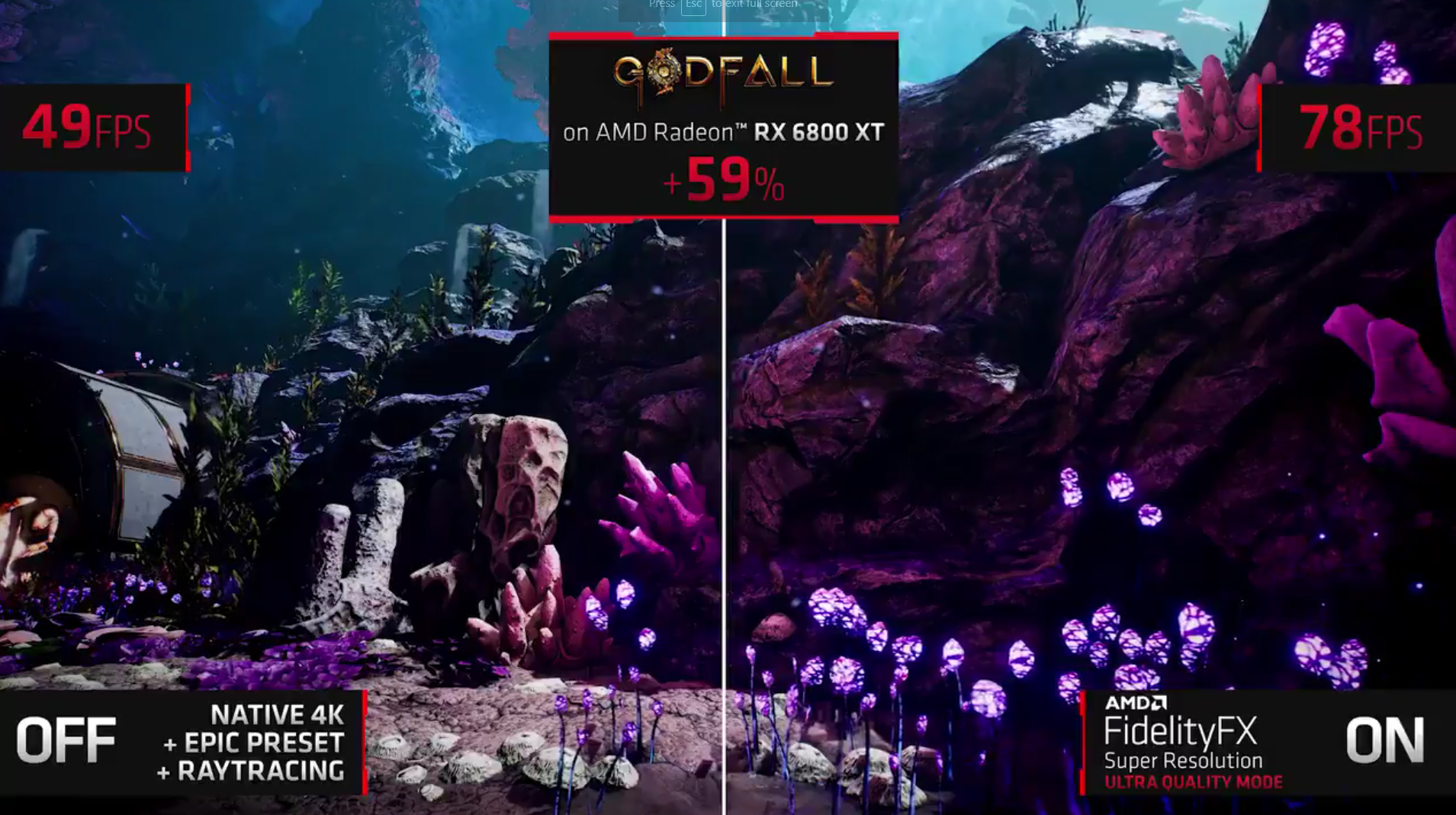

Because their own solution is better, as I've said. It remains to be seen how it is in practice, but what AMD showed in that comparison pic is horrendous. If every dev has a better looking and performing solution, why would they use AMD's? Switch will use DLSS.

About that PC master race thing: the most popular GPU in the Steam hardware survey (as flawed as that survey is) is the 1060.

Both PS5 and XSeX laugh at that.

You know this has to be put into the game by the devs like DLSS, right? It's not a one-driver-toggle, all-games-work type deal. I don't see it gaining much traction in the console space, they have been doing it better than what AMD have shown for years already.

As said before: GPU/API agnostic. It can work on any console, and can work on old nvidia cards. Could as well work on Switch. So why devs won't take advantage of that?

Is the 3070 enough for next gen gaming at 2k 144 ultra settings with DLSS quality and no ray tracing

all new and upcoming games at 2k 140 frames with the DLSS Quality

...

Now, for open-world or single-player games, I am ok with 80/90 frames if everything ultra settings.

More into FSR:

Made these two 4K screenshots, couldn't get a clean one for 1060 demonstration. Native 4K still looks slightly sharper, but the sacrifice for FPS is pretty minimal:

These are wallpapers on which they wrote the different tiers of FSR; they're not pictures showing actual FSR at work.

FTFY

the 3070 will match the 3090 with DLSS

Yeah, we have had supersampling for ages. You can do it many ways, like setting the in-game resolution slider above 100%, making a custom resolution, or using the AMD/Nvidia driver options (VSR/DSR), and it works with DLSS if you so choose. So yay for AMD adding something they already had anyway (just use VSR with FSR is what I'm implying).

So far only seen better implementation by Bluepoint on Demon's Souls and Insomniac temporal injection for Performance and Performance RT modes (Fidelity 4K is native 4K reconstructed to even higher than 4K and downsampled to have that unique, clean look with zero aliasing).

More than sure FSR will be used much more often than DLSS going forward.

He's just being a tool.

You as you wrote this:

Show me one person on GAF who said a "gt1080 was more than enough to beat consoles." I'll wait.

Can you please give me some backup for this? I'm not trying to argue; I want to verify that what you're saying is real, because if it is, I'm dropping the $3000.00 on the Sapphire Toxic, as well as getting a 5900x or maybe 5950x.

Trading a 6900 XT that beats the 3090 in the newest games for a roflcopter like a 2070, to play with a glorified TAA derivative aka "DLSS 2".

Note, the graph below refers to ALL games TPU normally tests with, not just the newest ones (only ComputerBase does "7 latest" kind of tests among the sites I visit).

TPU

You are commenting on a post with two links on two statements.

Can you please give me some backup for this? I'm not trying to argue-- I want to verify that what you're saying is real because if it is, I'm dropping the $3000.00 on the Sapphire Toxic, as well as getting a 5900x or maybe 5950x.