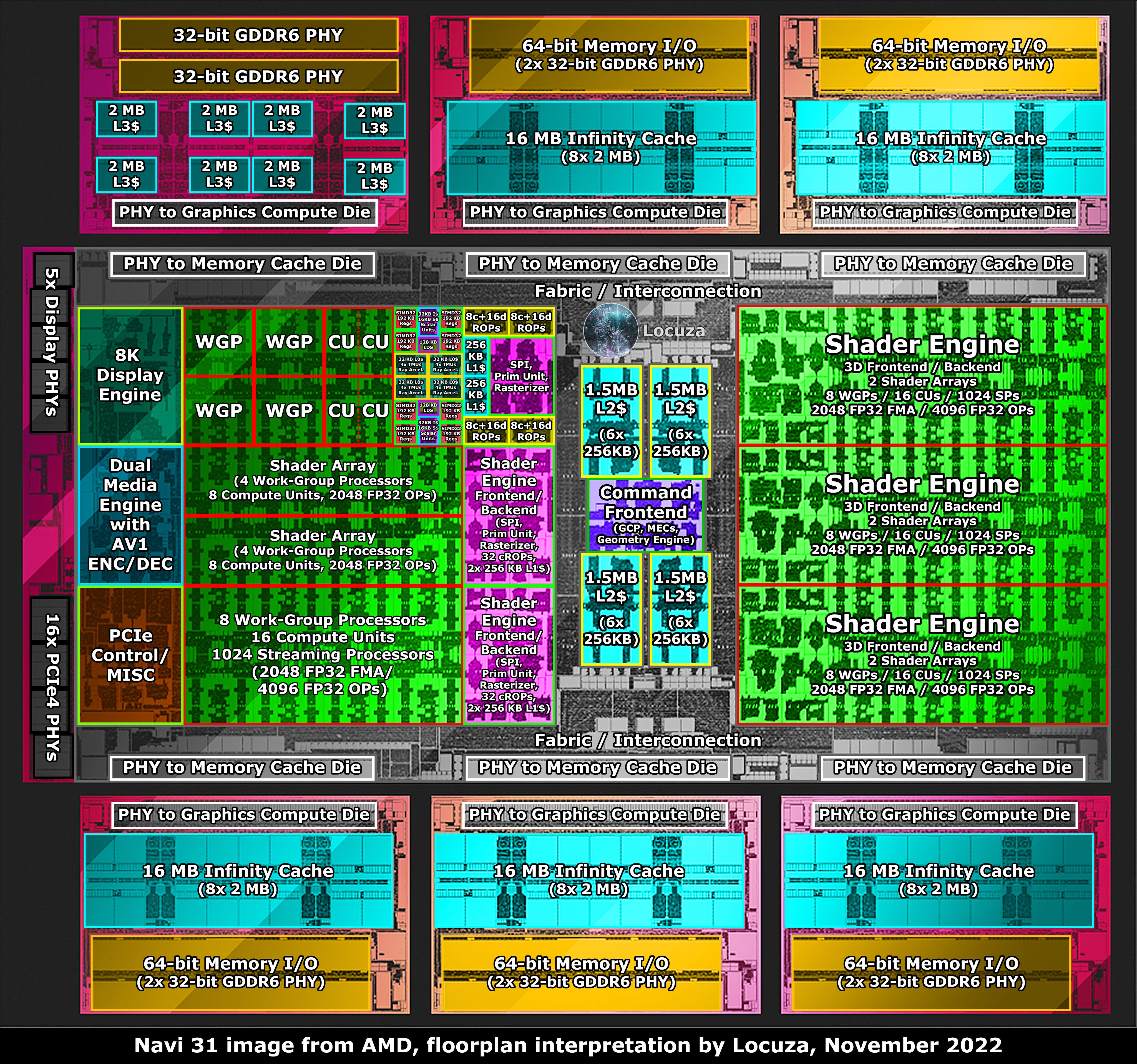

Didn't MLID say before that Canis is 16 CUs without any disabled?

Also, Orion is going to have an uneven setup regardless of whether it's 9 or 10 WGPs. It's 3 SEs, with 3 SAs each.

And 80 CUs (40 WGPs) for Magnus was the other leak, but MLID has been saying 68 CUs for Magnus, which would put it closer to the 25% CU advantage over Orion that MLID claims (it's 30%+ though). So 36 WGPs, 72 CUs total, 4 CUs disabled.

I personally think MS will use the AT3 die (Medusa Point Halo) for the cheaper S-tier SKU: 24 WGPs, 48 CUs total, enough to target 1440p/120 comfortably. And 36 WGPs, 72 CUs total for Magnus on AT2. MS stated "first party consoles", as in plural.
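For anyone who wants to sanity-check the WGP/CU numbers being thrown around, here's a quick sketch. It only assumes 2 CUs per WGP (which is what the 40 WGPs = 80 CUs leak implies); the `cu_counts` helper and the config labels are just made up for illustration, and all the figures are the rumored ones from this thread, nothing confirmed.

```python
# Assumption: 1 WGP = 2 CUs, as implied by "80 CUs (40 WGPs)".
CUS_PER_WGP = 2

def cu_counts(wgps, disabled_cus=0):
    """Return (total CUs on die, active CUs) for a given WGP count."""
    total = wgps * CUS_PER_WGP
    return total, total - disabled_cus

# Rumored configs discussed above (labels are illustrative, not official).
configs = [
    ("Magnus, other leak", 40, 0),
    ("Magnus, MLID (4 CUs disabled)", 36, 4),
    ("Cheaper SKU on AT3 (my guess)", 24, 0),
]

for name, wgps, disabled in configs:
    total, active = cu_counts(wgps, disabled)
    print(f"{name}: {wgps} WGPs, {total} CUs total, {active} active")
```

So MLID's 68 CUs lines up with a 36 WGP die with 4 CUs fused off, not with the 40 WGP leak.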

MS also needs a cheaper SKU for use on xCloud.