Tobimacoss

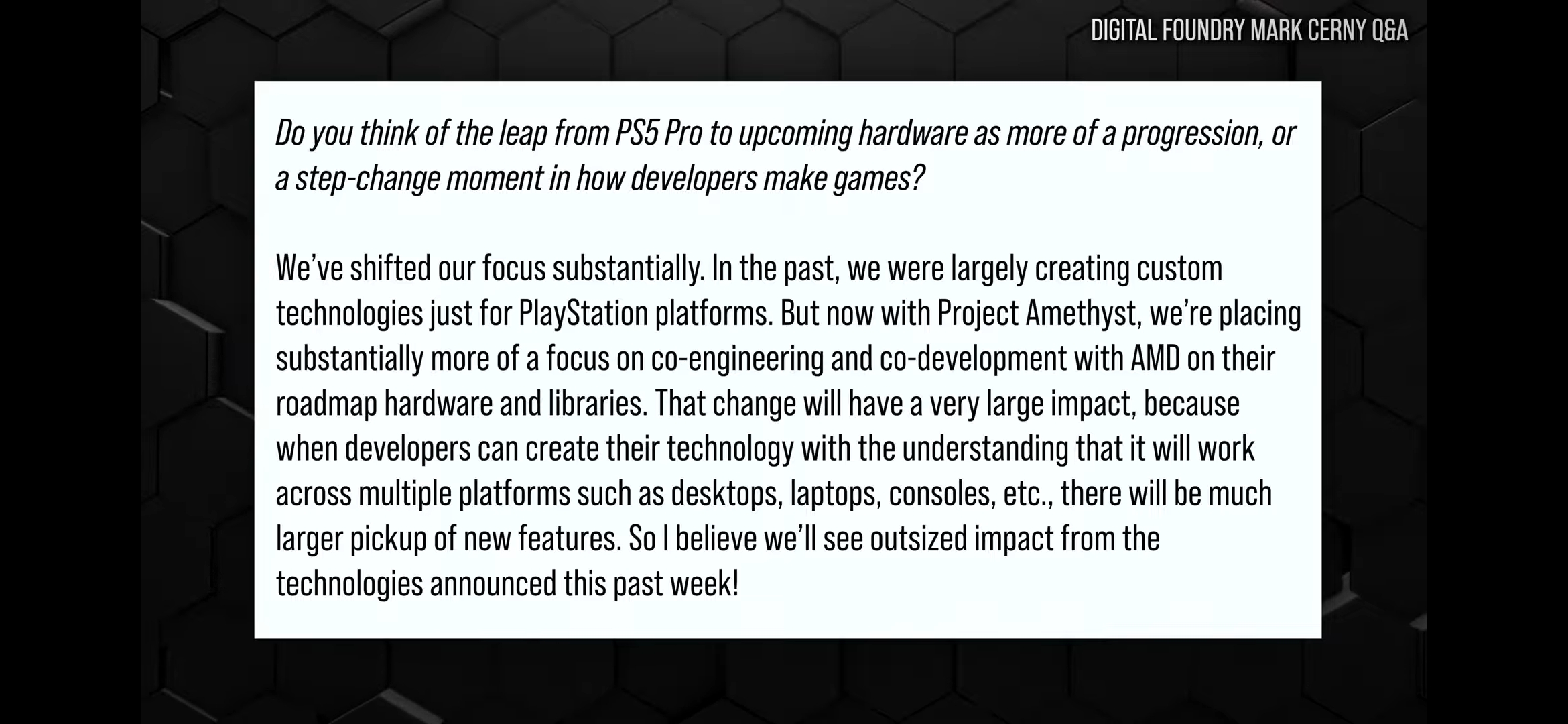

Member

And that's why a modular chiplet design helps save costs for both AMD and MS. They can use the 5 dies for various products, including discrete graphics. Less waste; everything they create becomes dual-purpose. That Magnus SOC looks massive. That can't be cheap.

I think it will turn out like this:

Magnus with AT4 = Xbox Laptops and Xbox Handhelds

Magnus with AT3 = Xbox S console and bulkier Gaming Xbox Laptops

Magnus with AT2 = Xbox X console and Xbox PCs

Magnus with AT1 = Xbox PCs

Magnus with AT0 = Xbox Cloud. They will pair each AT0 with 2-4 Magnus SOCs and run 2 instances of X profiles for Ultimate users at 4K, or 4 instances of S profiles for Premium and Essential users at 1440p/1080p

Then also use AT2, AT1, and AT0 for discrete graphics: basically 5080, 5090, and beyond tiers, or 6070-, 6080-, and 6090-tier discrete GPUs to compete with Nvidia's lineup.

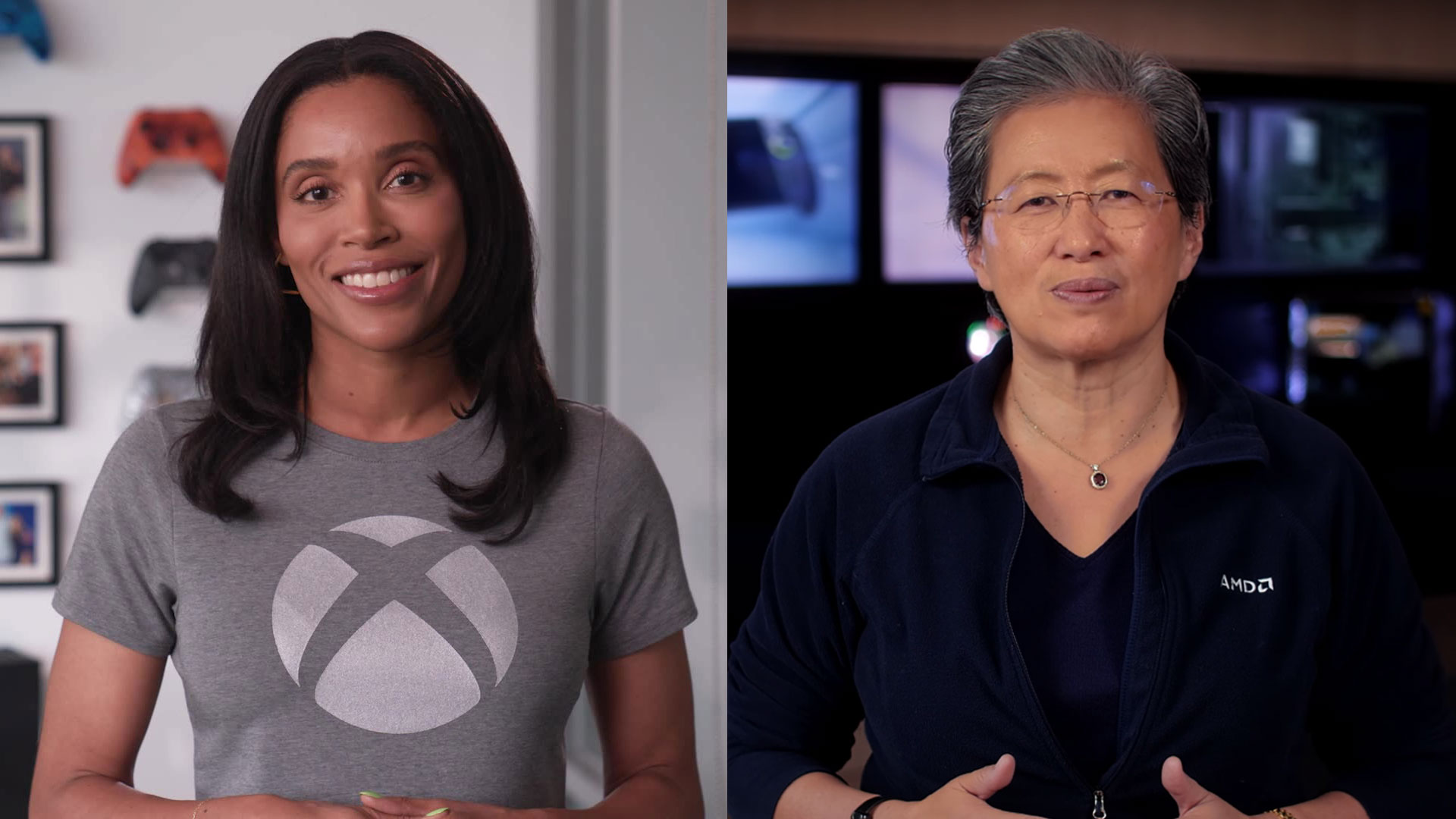

AMD and Xbox co-engineered this solution for the long term, for both companies. 5 GPU dies designed for 5 form factors, all using the same CPU SOC.

Xbox and AMD: Advancing the Next Generation of Gaming Together - Xbox Wire

This week, Xbox announced it is actively building its next-generation lineup across console, handheld, PC, cloud, and accessories. Find out more inside.

news.xbox.com

They mention a portfolio of devices multiple times.
