Nintendo Switch 2 vs PS4 Pro is the most fitting comparison: two systems with broadly similar performance ceilings, despite very different architectures and rendering approaches.

This isn't just old vs new: it's muscle vs brains. The PS4 Pro, with its 4.2 TFLOPS GCN GPU and 8GB of GDDR5 RAM, was a beast in its day. It handles high-res textures, heavy post-processing, and complex effects like volumetrics and alpha transparencies with confidence. Native or checkerboarded 4K was its calling card, but all of this runs on a dated Jaguar CPU and an architecture that's now well behind the curve.

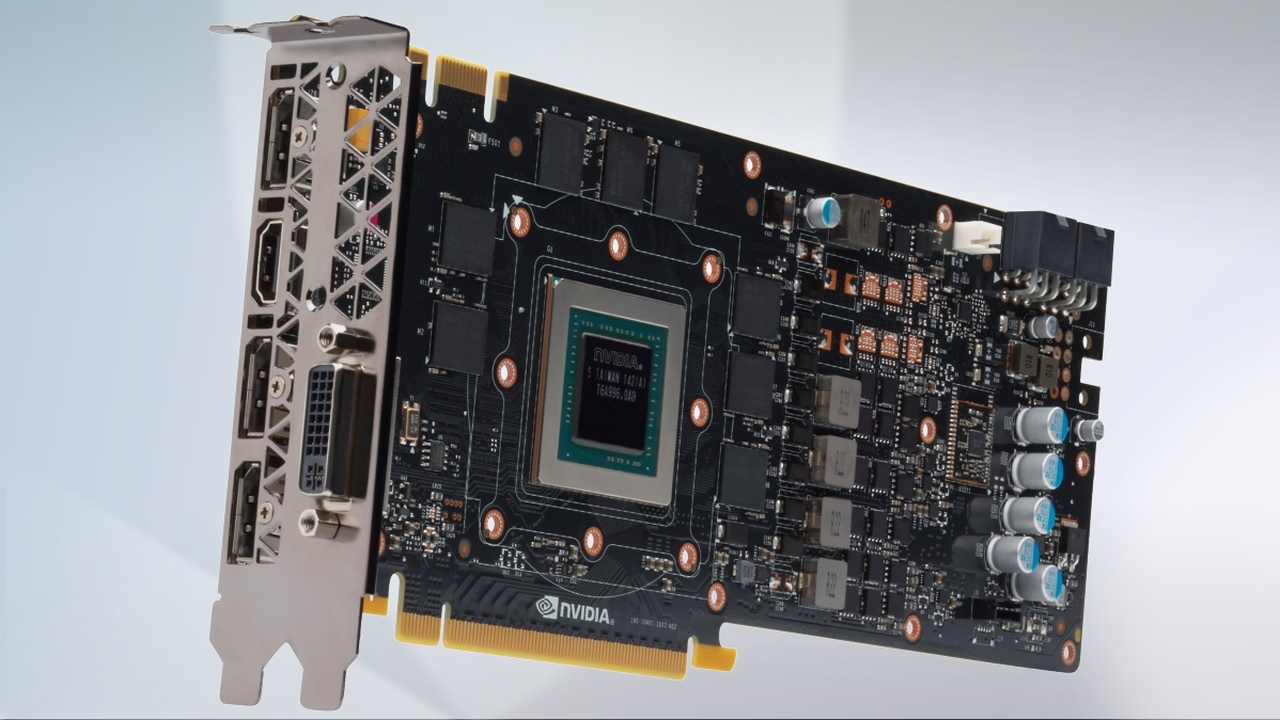

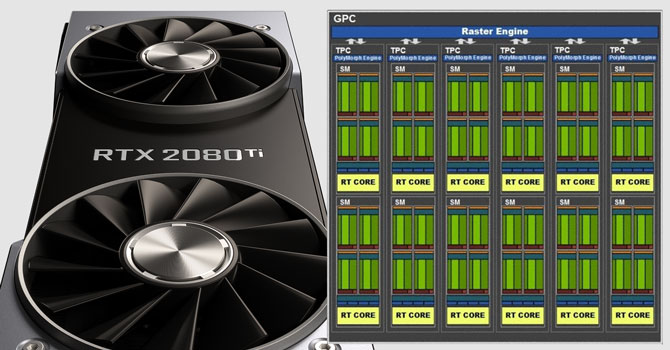

The Switch 2, meanwhile, takes a completely different route. Running a custom NVIDIA SoC with an Ampere-based GPU, it's all about efficiency and modern rendering. With DLSS in the mix, accelerated by dedicated Tensor cores, the console doesn't need brute force to punch above its weight.

In raw texture quality and bandwidth-heavy scenes, the PS4 Pro still pulls ahead: it's better at pushing high-res assets with fewer compromises. But in lighting and overall visual coherence, especially in motion, the Switch 2 can look more "next-gen," thanks to modern techniques like real-time global illumination and AI-powered reconstruction.

Particle density and effects are another battleground: the PS4 Pro delivers more of them, but the Switch 2 can approximate similar results with smarter shaders and DLSS-enhanced tricks, albeit sometimes at the cost of artifacting or edge softness.

Bottom line? The PS4 Pro is still impressive for raw power and static image quality. But the Switch 2 brings modern rendering tools to the fight: DLSS, better CPU efficiency, and scalable performance. For handheld gaming with "current-gen visuals," it's a game-changer. For raw asset fidelity on a 4K screen, the PS4 Pro still flexes harder.

All things considered, I'm pleasantly impressed by the Switch 2's hardware, but quite disappointed by the astronomical prices of some physical games: 'Mario Kart World' costs €89.99, and others like 'Donkey Kong Bananza' go for €79.99 (Italian prices).