The PS5 Pro has 6x better AI hardware than the XSX. Do you think it makes a difference in practical terms? But Xbox has a better console this gen, that's a fact, better VRR, better OS features, AI hardware.

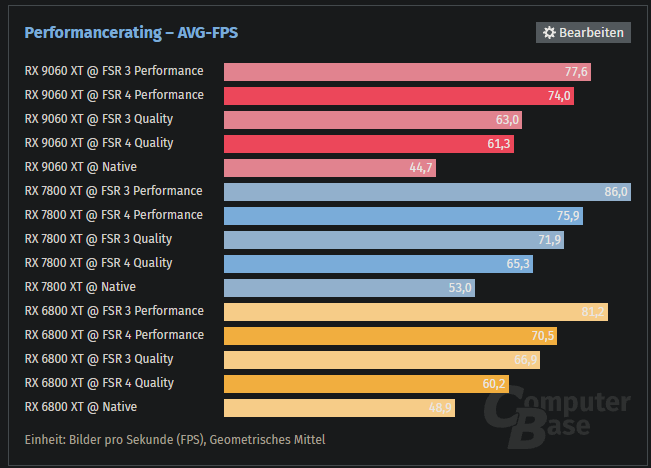

I'm actually expecting XS consoles to be FSR 4 capable.

Good luck implementing FSR4 on a Series S. Even in 30fps games I'm skeptical it would be possible. I have no idea why DF tried to run this so-called Xbox Series "simulation" and pin the blame on the base PS5, as if that's what's holding the XSX back from implementing it. It's a purely academic simulation, I guess, but suggesting the base PS5 is the reason we haven't seen it on XS is stupid. The base PS5 isn't holding the XSX back on an upscaler option, especially when there is a PS5 Pro out there and PC support. The Xbox Series hardware itself is what's holding FSR4 support back.

They would have been better off doing a PS5 Pro "equivalent" test, because at least that would have been somewhat useful in the real world.