vpance

Member

Everything we actually do know flies in the face of a sub 10TF machine.

/snip

It depends on where said quotes and leaks set their standards at though. At around 13TF Vega, lots of devs might feel that gives them enough power to make a nice jump. No one is going to say anything overly negative. Closest we got was that Platinum games studio guy.

As for PSVR2, it's possible they could go 1440p. But I doubt it.

I think he might be saying it due to AVX512 support, but I think Zen2 will have this extension for servers, so it would be at Sony's and MS's disposal as well.

And this? Any significance in relation to Scarlett?

LordOfChaos

Member

I think he is insinuating AVX512 extension in Arden. Tbh I expected this in both consoles as well as Zen2 server chips.

I'm reading that as Zen 3 (18.2) has AVX-512, Zen 2 (18.0) doesn't, and the Arden core has a custom stepping (18.h) that doesn't differentiate the two?

AVX-512 is a wide pathway and you have to step down clock speeds to use it. We're already fighting for die size on next gen, and even keeping an 8 core Zen 2's 32MB L3 cache isn't set in stone, so I'm not expecting AVX-512.

ethomaz

Banned

Can we stop with that 2x, dual-gpu bullshit?

RSX was 2 x GeForce 6800 and PS4 Pro was OG PS4 x 2 (butterfly setup for easy BC).

Sony has a precedent with this. They just need the proper lithography tech to pull this off.

Pro was made with 2 Shader Engines... each Shader Engine is identical to each other... Vega 64 for example has 4 identical Shader Engines... RX 5700 has 2 identical Shader Engines.

And RSX was not two GeForce 6800... c'mon... it was not even the standard nVidia setup at the time... the ALUs were different and did more tasks than 6800 or 7800.

We are starting to reach MisterXMedia levels of hallucination.

Negotiator

Banned

Yeah, I know it's not a dual GPU.

Amm....there is nothing dual GPU about RSX and Pro. Not a thing.

Pro is using "butterfly" (whatever that PR speak is) as a way to say "We doubled CUs between the two so that we can have rudimental BC support by disabling half a chip when running PS4 games".

As for PS4 dev kits, this is for 3rd party as I understand, because 1st party gets it months in advance.

Who's to say that Sony won't do the same once again with Navi CUs by doubling them in a single monolithic semi-custom GPU? 1st gen crappy 7nm lithography intended for PC enthusiasts that will pay an arm and a leg for a mid-range GPU? Sorry, but PC hardware price gouging philosophy doesn't apply to consoles. Thank god Cerny doesn't think that way.

PS3 dev kits with official SOC came more than 1 year before release, 2005.

Nope, the final PS3 specs (Cell 3.2 GHz, RSX) were completed in January 2006 (PS3 was released in November 2006):

The final PS4 specs (8-core Jaguar/Radeon APU) were completed in January 2013 (PS4 was released in November 2013):

ORBIS Devkits Roadmap/Types

Sony and Microsoft have had a lot of work with Orbis and Durango. Create a new hardware it’s a long running process, you have to build different versions of hardware and make changes along the core until the last version, the closer one to the retail product. We have created an article with...

vgleaks.com


Notice a pattern here?

PS5 final specs will likely be finalized in January 2020, the reveal will happen sometime in February (special, invite-only PS event for journalists), they will show the final form factor at E3 2020 and the retail console will be released in November 2020, like clockwork.

Negotiator

Banned

You're preaching to the choir.

Can we stop with that 2x, dual-gpu bullshit?

Pro was made with 2 Shader Engines... each Shader Engine is identical to each other... Vega 64 for example has 4 identical Shader Engines... RX 5700 has 2 identical Shader Engines.

And RSX was not two GeForce 6800... c'mon... it was not even the standard nVidia setup at time... the ALUs were different and do more tasks than 6800 or 7800.

RSX performed as 2 x GeForce 6800, that's the point. It wasn't a dual GPU.

ResilientBanana

Member

13TF is not happening in a $400 - $500 game console. I'd be pleasantly surprised if this was the case, but it's not. AMD's presentation told us everything we needed to know about how many TFLOPS were going to be achieved. The Secret Sauce in both cases is most likely RT. Expect NAVI lite numbers.

It depends on where said quotes and leaks set their standards at though. At around 13TF Vega, lots of devs might feel that gives them enough power to make a nice jump. No one is going to say anything overly negative. Closest we got was that Platinum games studio guy.

As for PSVR2, it's possible they could go 1440p. But I doubt it.

vpance

Member

13TF is not happening in a $400 - $500 game console. I'd be pleasantly surprised if this was the case, but it's not. AMD's presentation was everything we needed to know to know how many TFLOPS were going to be achieved. The Secret Sauce in both cases is most likely RT. Expect NAVI lite numbers.

13TF Vega is 8.5TF Navi. I put Vega numbers for a more familiar reference.

Negotiator

Banned

AVX512 is too costly silicon-wise (console CPU budget is traditionally small) and consoles are better off offloading SIMD tasks to the GPGPU.

It would be kinda stupid to compromise CPU clocks below 3.2 GHz (which will heavily affect ST/integer performance) to offer AVX512. Intel is still struggling with it.


There are a few facts about Gonzalo that we are aware of, so for anyone wondering why this should be PS5 or a console in general, here is what I got from APISAK and Komachi:

1. Gonzalo is the codename for a high performance console SOC.

ZG16702AE8JB2_32/10/18_13F8

2. We know it because its codename is defined with "G" (AMD decoded - console).

3. It was in ES1 (engineering sample) in January and QS in April (first letter in codename "Z").

4. It consists of 8 Zen2 cores clocked at 1.6GHz base and 3.2GHz boost, and a Navi 10 Lite GPU clocked at 1.8GHz.

5. We know it's Navi 10 Lite because 13e9 has been identified as the dev ID for Navi Lite on Chiphell back in January.

6. Why do we connect it to Ariel and PS5? Because Ariel has a 13e9 entry in PCI ID, and Ariel falls under Sony custom chip entries. According to APISAK, 13F8 is likely a revision of this chip (as the first version ran at 1GHz).

7. Why not Xbox or something else? Because Xbox has a codename in the PCI ID base as well - Arden (confirmed by Brad Sams in January) - and it's not something else because AMD hasn't said they won any other high performance semi-custom design bar next-gen consoles and Subor Z (which had Polaris and Zen1 this year; this kind of SOC is too expensive for a firm that went bankrupt).

8. Can it be Google's, Amazon's or Apple's chip? Doubt it. Google Stadia uses a discrete Vega 56 card and an Intel CPU, Amazon is just laying off its gaming division and Apple does not have a console rumored in the works. If any of them had, we would get leaks much earlier than when the chip is already in QS status.

So all in all, Gonzalo is a high performance monolithic chip confirmed to be for a console.

It ties up with Ariel and Navi 10 Lite, while MS's next gen console is codenamed Arden. What are the chances it's not PS5? IMO very small. Minuscule tbh.
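For anyone who wants to poke at the string themselves, here is a minimal Python sketch of the reading above. Only three things come from the post: "Z" as the sample stage, "G" as console, and the trailing 13F8 as the PCI device ID. Treating "Z" as QS, and the positional mapping of the numbers (16 as base clock, 32 as boost, 18 as GPU clock), are assumptions chosen to match items 3 and 4. The middle "10" is left unexplained, and `parse_codename` is a hypothetical helper, not an AMD tool.

```python
import re

# Lookup tables hold only what the post names; everything else maps to "unknown".
STAGE = {"Z": "QS"}            # assumption: "Z" marks the April qualification sample
SEGMENT = {"G": "console"}     # per the post: "G" decodes to console


def parse_codename(code: str) -> dict:
    """Split a leaked string such as 'ZG16702AE8JB2_32/10/18_13F8' into fields."""
    m = re.fullmatch(r"([A-Z0-9]+)_(\d+)/(\d+)/(\d+)_([0-9A-Fa-f]{4})", code)
    if m is None:
        raise ValueError(f"unrecognised codename: {code!r}")
    part, boost, unexplained, gpu, pci = m.groups()
    return {
        "stage": STAGE.get(part[0], "unknown"),
        "segment": SEGMENT.get(part[1], "unknown"),
        "base_ghz": int(part[2:4]) / 10,   # assumed position of the base clock
        "boost_ghz": int(boost) / 10,      # assumed: first slash-separated field
        "unexplained": unexplained,        # the post does not say what this is
        "gpu_ghz": int(gpu) / 10,          # assumed: last slash-separated field
        "pci_device_id": pci.lower(),
    }


gonzalo = parse_codename("ZG16702AE8JB2_32/10/18_13F8")
print(gonzalo)
```

If a different leak used another layout, the regex would simply reject it, which is safer than guessing.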


Sqrt_minus_one

Member

Not necessarily too expensive silicon and power wise: they could treat it like they treated AVX256 in the original Zen, splitting each instruction in two and executing it as two 128-bit instructions.

AVX512 is too costly silicon-wise (console CPU budget is traditionally small) and consoles are better off offloading SIMD tasks to the GPGPU.

It would be kinda stupid to compromise CPU clocks below 3.2 GHz (which will heavily affect ST/integer performance) to offer AVX512. Intel is still struggling with it.

AVX512 is also split into various groups of instructions, so in a custom console CPU you could support some instructions whilst ignoring the rest, i.e. partial AVX512 support. There are some instructions in that instruction set that I think would be beneficial to consoles.

xool

Member

AVX512 is too costly silicon-wise (console CPU budget is traditionally small) and consoles are better off offloading SIMD tasks to the GPGPU.

It would be kinda stupid to compromise CPU clocks below 3.2 GHz (which will heavily affect ST/integer performance) to offer AVX512. Intel is still struggling with it.

I don't understand why you think clocks would be limited by AVX512. Essentially it's just double the width of the SIMD we're used to (256-bit), introduced on the Xeon Phi to get the FLOPS up (and GPU-like efficiency for supercompute). Assuming they double the number of adders and multipliers, I don't see the issue with clocks. [not an expert]

Fast multipliers take up a bit of space (a lot by 1990s standards) but compared to GPU space requirements, or even as a percentage of CPU area, that extra die space is "peanuts" by today's standards.

Negotiator

Banned

I thought we were talking about true AVX512 support.

Not necessarily too expensive silicon and power wise, they could treat it like they treated AVX256 in the original Zen, split the instructions into 2 and execute as 2 128bit instructions.

AVX512 is also split into various groups of instructions in a custom console CPU you could support some instructions whilst ignoring the rest, so partial AVX512 support. There are some instructions that I think would be beneficial to consoles in that instruction set.

Jaguar also supports AVX256, but it only has a 128-bit FPU and many people complained about it.

True AVX256 support for Zen 2 is more than enough IMHO. They can use the GPGPU for more wide tasks (GCN with Warp64 is essentially a 2048-bit processor).

Intel CPUs heavily downclock in AVX workloads. This isn't a coincidence.

I don't understand why you think clocks would be limited by AVX512 - essentially it's just double width the SIMD we're used to (256bit) - introduced on the XeonPhi to get the FLOPS up.. (and GPU like efficiency for supercompute) - assuming they double the number of adders and multipliers I don't see the issue with clocks .[not an expert]

Fast multipliers take up a bit of space (a lot by 1990s standards) but compared to GPU space requirements, or even as a percentage of CPU area - that extra die space is "peanuts" by todays standards

xool

Member

But doesn't that defeat the main point of AVX512 ie double the float performance - if you just add instructions but don't add the functional units - without extra SIMD maths units performance doesn't shift.

Not necessarily too expensive silicon and power wise, they could treat it like they treated AVX256 in the original Zen, split the instructions into 2 and execute as 2 128bit instructions.

..

xool

Member

I didn't know this - is this just because they get hot or something else ?

Intel CPUs heavily downclock in AVX workloads. This isn't a coincidence.

[edit] googled it - what a shit show - well done intel ..

Negotiator

Banned

Yeah, they get very hot and toothpaste doesn't help either.

I didn't know this - is this just because they get hot or something else ?

FPU transistors are one of the hottest parts of a microchip. It's the same reason GPUs can easily reach 300W these days and of course their max clocks (~2 GHz) are much lower compared to high-end CPUs (5 GHz). It's a trade-off.

LordOfChaos

Member

And this? Any significance in relation to Scarlett?

Given that Microsoft already said Zen 2 specifically, their guess was just that, and wrong.

I'm not reading where that even says it has AVX-512: it said 18.2 did, 18.0 didn't, and Arden lists 18.h?

SlimySnake

Flashless at the Golden Globes

yep. this all makes too much sense for it to not be the ps5 chip.

i am guessing Navi 10 LITE is the 36CU version of the RX 5700XT chip.

36CU at 1.8ghz gives us 8.29 tflops. or roughly 10.25 GCN tflops. Disappointing.
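The 8.29 figure can be reproduced with the usual peak FP32 formula: CUs x 64 shader lanes x 2 FLOPs per clock (one fused multiply-add) x clock. A minimal sketch, assuming 64 lanes per CU as on GCN and Navi. The function name is made up for illustration, and the "GCN equivalent" ratio is simply back-computed from the 10.25 number in the post rather than taken from any official source.

```python
def fp32_tflops(compute_units: int, clock_ghz: float,
                lanes_per_cu: int = 64, flops_per_lane: int = 2) -> float:
    """Theoretical peak FP32 throughput in TFLOPS."""
    gflops = compute_units * lanes_per_cu * flops_per_lane * clock_ghz
    return gflops / 1000.0


navi_lite = fp32_tflops(36, 1.8)       # 36 CUs at 1.8 GHz, as guessed in the post
implied_ratio = 10.25 / navi_lite      # ratio implied by the post's "GCN tflops" figure

print(f"{navi_lite:.2f} TF Navi, implied GCN scaling x{implied_ratio:.3f}")
```

Peak TFLOPS says nothing about bandwidth, clocks under load or RT hardware, so treat it as a ceiling rather than a performance estimate.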

archy121

Member


Great summary.

Do we not need to bear in mind that the PS5 is delayed and these specs relate to a 2019 release?

Surely these can't be locked down with so much more time added to the release date.

In what areas are changes still feasible with the additional time?

Going smaller in nm with higher clocks seems to be the easiest improvement other than memory/HD capacity changes.

SonGoku

Member

I wouldn't be so happy if I were you. PS5 will be within spitting distance of Scarlett; if PS5 is shit, XB2 will be shit as well.

seems like 8-9 tflop is confirmed for ps5

Not exactly an exciting prospect

Wait, so one of the theories is that PS5 is dual GPU, like PS4 pro? Like dual 5700s?

Semantics.

I think that refers to the way the Pro GPU is made like a dual GPU of PS4. You can see it in the picture: if you draw a horizontal line in the middle, it looks like butterfly wings.

It's not dual, just symmetric.

based on Mark Cerny quote I also thought PS4P GPU is indeed dual GPU, and if you run PS4 pro games both chips are activated.

Not really, just a bunch of assumptions

Uninformed people

What's with all the talk about a dual soc like the PS4 Pro? where is this coming from?

10-11TF is doable on 7nm, eating losses on a big chip short term until it can be shrunk to 6nm (2021)

Seems like the only way 10TF+ is likely is if Sony goes 7nm EUV. With 6nm and 5nm right around the corner going with a tiny 7nm chip would be a mistake.

7nm EUV from the get go is preferable though

13TF Vega is 8.5TF Navi. I put Vega numbers for a more familiar reference.

Not even close to the mark, going by AMD official estimates:

13TF Vega = 10.4TF Navi (56CUs @1451Mhz)
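A quick sanity check of that conversion, as a sketch. The 1.25 scaling factor is an assumption inferred from the post's own numbers (13 / 10.4), and the helper names are hypothetical.

```python
def peak_tflops(cus: int, clock_mhz: float) -> float:
    """Peak FP32 TFLOPS, assuming 64 lanes per CU and 2 FLOPs per lane per clock."""
    return cus * 64 * 2 * clock_mhz / 1_000_000


def vega_to_navi(vega_tflops: float, navi_gain: float = 1.25) -> float:
    """Navi TFLOPS needed to match a Vega figure, under the assumed per-FLOP gain."""
    return vega_tflops / navi_gain


navi_needed = vega_to_navi(13.0)        # Navi TFLOPS matching a 13TF Vega devkit
example_config = peak_tflops(56, 1451)  # the 56 CU @ 1451 MHz configuration quoted

print(f"{navi_needed:.1f} TF needed, 56 CUs @ 1451 MHz gives {example_config:.1f} TF")
```

Changing `navi_gain` shows how sensitive the whole argument is to that one factor: the 8.5TF reading quoted above corresponds to a gain of roughly 1.53 instead.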


SlimySnake

Flashless at the Golden Globes

first Gonzalo leak with 1ghz was in january.

Great summary.

Do we not need to bare in mind the PS5 is delayed and these specs relate to a 2019 release ?

In what areas are changes still feasible with the additional time ?

Going smaller in nm with higher clocks seems to the easiest improvement other than memory/HD capacity changes.

second one came as late as April 2019 just a couple of months ago. I would say its pretty much locked in at this point.

LordOfChaos

Member

AMD's semicustom division seems happy to make these weird 'G' series SoCs for names that aren't household in the west. It's not this one, but something like it would run Windows and be able to run the Firestrike benchmark in the first place, because FreeBSD, the PS4's OS base and presumably the PS5's, doesn't.

AnandTech Forums: Technology, Hardware, Software, and Deals

www.anandtech.com


DemonCleaner

Member

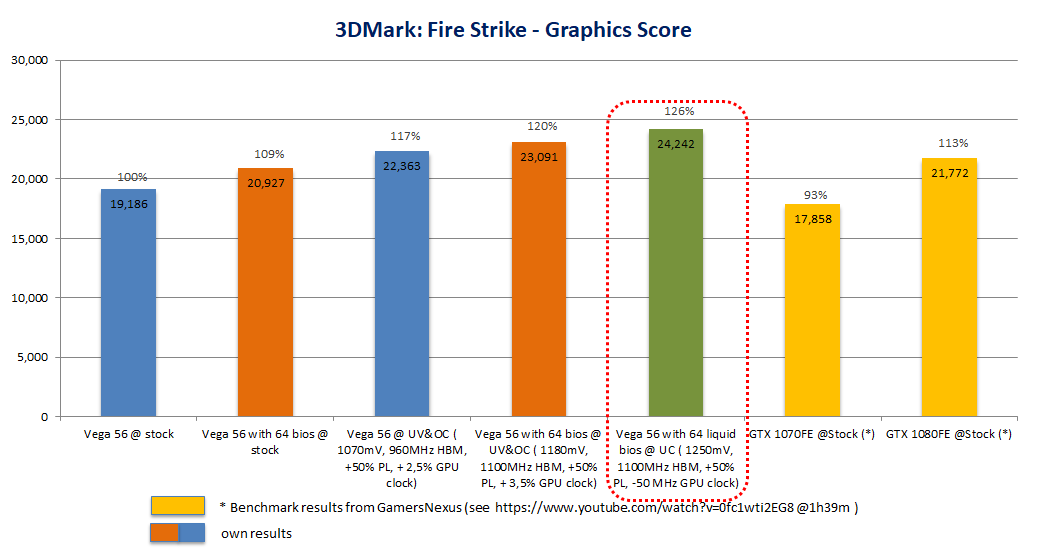

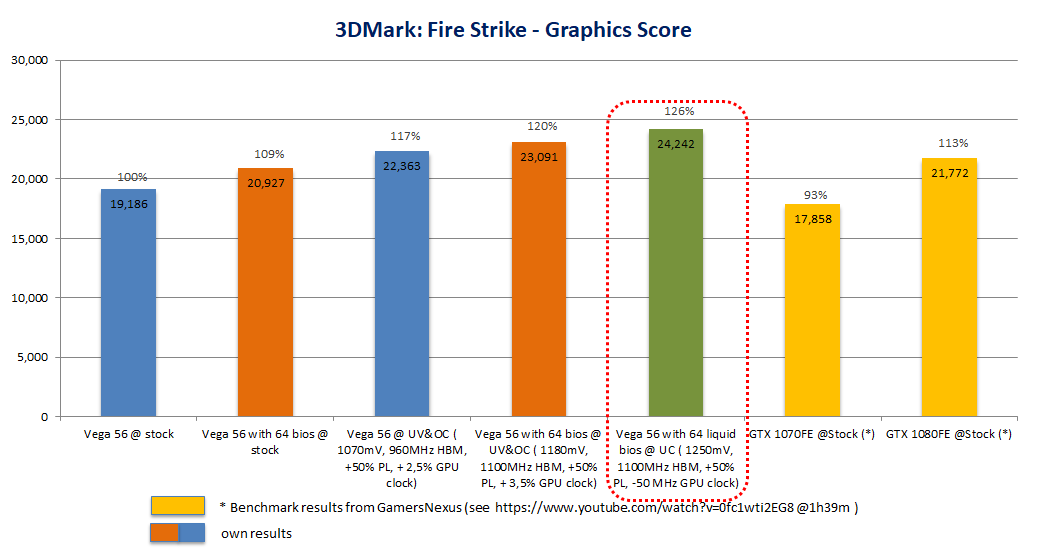

guys, to get a firestrike (combined) score of 20.000+ with a vega56 you have to overclock it to above gtx1080 levels and have a beefy CPU. the scores you're quoting are heavy overclocks.

i think many here are also confusing graphics and overall score.

i put my vega56 on water and put 400W through it (with the liquid bios) to get to 20K combined. i had a graphics score of over 24.000 while doing it.

still have the results:

stock combined scores for a factory vega56 must be around 16-17K depending on CPU

apisak is stating combined scores.


Sqrt_minus_one

Member

I thought we were talking about true AVX512 support.

Jaguar also supports AVX256, but it only has a 128-bit FPU and many people complained about it.

True AVX256 support for Zen 2 is more than enough IMHO. They can use the GPGPU for more wide tasks (GCN with Warp64 is essentially a 2048-bit processor).

Intel CPUs heavily downclock in AVX workloads. This isn't a coincidence.

But doesn't that defeat the main point of AVX512 ie double the float performance - if you just add instructions but don't add the functional units - without extra SIMD maths units performance doesn't shift.

AVX512 is not just a mechanism to operate on wide vectors, AVX512 also introduced new special instructions to speed up vector processing and these new instructions need not necessarily be run in 512 bit execution mode.

I am saying that I would not bet against some of these new instructions being useful in a console space.

For instance, AVX512 introduces scatter as well as gather instructions for vectors, whilst AVX256 only had a gather instruction. I am speculating that the scatter/gather pair could be useful in a console.

AVX512 also introduced new instructions to speed up neural network processing, the VNNI extension, could this be useful - perhaps.

I am not advocating a full 512bit wide execution pipeline within the CPU, just an examination of useful new instructions found in the AVX512 ISA and utilising them on a 256 bit vector unit.

AMD is likely building support for AVX512 in a future Zen version anyhow.
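To make the gather vs scatter distinction concrete, here is a plain-Python sketch of the semantics only. It is not intrinsics code and says nothing about performance; the function names are illustrative, and Python lists stand in for memory and vector registers.

```python
def gather(memory, indices):
    """Load memory[i] for every index into one 'vector' (non-contiguous loads)."""
    return [memory[i] for i in indices]


def scatter(memory, indices, values):
    """Store each lane of a 'vector' to memory[i] (non-contiguous stores)."""
    for i, v in zip(indices, values):
        memory[i] = v


mem = [10, 20, 30, 40, 50, 60, 70, 80]

lanes = gather(mem, [7, 0, 3, 3])   # one vector built from scattered addresses
scatter(mem, [1, 6], [-1, -2])      # one vector written back to scattered addresses

print(lanes, mem)
```

With only gather available, the write-back half has to be done one element at a time, which is the gap the scatter instructions close.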

DemonCleaner

Member

I'm a bit confused...

How did he run 3DMark on PS4?

Orbis / Gnm has a fallback layer for DX11 for lazy non-low level programming. so that's not impossible.

also the reason why we saw and still see so many shitty multiplatform conversions.

xool

Member


5. We know its Navi 10 Lite because 13e9 has been identified as dev ID for Navi Lite on Chiphell back in January.

I read the Apisak/Komachi tweets a couple of times and saw:

- 13e9 means NaviLite

- Gfx1000 means Navi10

So what does "Navi Lite" mean and what does it tell us? According to speculation on www.reddit.com/r/Amd/comments/c1xkd0/navi_10121421_and_lite_variants_spotted_by_apisak/ the "Lite" suffix might mean it's Navi as part of an APU (not discrete).

i am guessing Navi 10 LITE is the 36CU version of the RX 5700XT chip.

36CU at 1.8ghz gives us 8.29 tflops. or roughly 10.25 GCN tflops. Disappointing.

36 because Navi 10 tops out at 40CU (251mm2), and the expectation is to have a few disabled because of yields?

LordOfChaos

Member

Orbis / Gnm has a fallback layer for DX11 for lazy non-low level programming. so that's not impossible.

also the reason why we saw and still see so many shitty multiplatform conversions.

GNMX is the high level wrapper to GNM. There's no DX on PS hardware.

You may have read the sentence "This can be a familiar way to work if the developers are used to platforms like Direct3D 11"? It's not actually running DX11 anywhere, that's Windows proprietary

DemonCleaner

Member

GNMX is the high level wrapper to GNM. There's no DX on PS hardware.

You may have read the sentence "This can be a familiar way to work if the developers are used to platforms like Direct3D 11"? It's not actually running DX11 anywhere, that's Windows proprietary

well thanks. might have confused that. pretty sure i read several times that there's compatibility for D3D11 procedures and therefore easy code conversion.


SonGoku

Member

done

@SonGoku - can this excellent piece of detective work be nailed to the OP

5. We know its Navi 10 Lite because 13e9 has been identified as dev ID for Navi Lite on Chiphell back in January.

Can you clarify or elaborate on these two?

Ariel falls under Sony custom chip entries.

?

SonGoku, you are leaving out the hardware RT part confirmed in PS5 and Scarlett chips when comparing it to Vega 13TF.

Negotiator

Banned

AVX512 is not just a mechanism to operate on wide vectors, AVX512 also introduced new special instructions to speed up vector processing and these new instructions need not necessarily be run in 512 bit execution mode.

I am saying that I would not bet against some of these new instructions being useful in a console space.

For instance AVX512 introduces a scatter/gather instructions for vectors whilst AVX256 only had a gather instruction, I am speculating that the scatter gather instruction could be useful in a console.

AVX512 also introduced new instructions to speed up neural network processing, the VNNI extension, could this be useful - perhaps.

I am not advocating a full 512bit wide execution pipeline within the CPU, just an examination of useful new instructions found in the AVX512 ISA and utilising them on a 256 bit vector unit.

AMD is likely building support for AVX512 in a future Zen version anyhow.

Navi will probably support neural networks/deep learning acceleration, they'll need it for next-gen AI:

Here's One Area Where PS5 and Xbox Scarlett Could Make Huge Progress In

According to Youichiro Miyake, Square Enix’s lead AI researcher, the PlayStation 5, next Xbox, and next-gen gaming in general are poised to make substantial progress in AI, especially when it comes to creating NPC crowd tech. The AI researcher believes that while we haven’t seen large leaps in...

AMD’s Navi 7nm GPU Architecture To Reportedly Feature Dedicated AI Circuitry


What other uses could AVX512 have in a console? Cell SPUs emulation?

Zen uarch would need some heavy semi-custom modifications for that. For starters, all 8 cores would have to be in the same CCX (AMD still uses quad-core CCX) to properly emulate Cell's fast internal bus (EIB ~300GB/s) that connects everything together. Kinda like what Intel does with ring bus.

And they would also have to reduce the L2 cache latency to 1-cycle (SPU local store memory is almost as fast as accessing registers). Not sure if that's possible.

Other than that, I see no other benefits for AVX512 in a console environment.

Burrito Bandito

Banned

ps5 final silicon dev kits might be out if we get an april/may launch.

#teamApril1st

When's the last time a PS or Xbox launched outside of the Oct-Dec window? I seriously doubt we will see Q1 2020 for either console, especially not Sony, who has absolutely no reason to. That's a move someone looking to regain marketshare would make in desperation.

I am saying the comparison with 13TF Vega does not work. First, because Navi XT is 1.14x faster than Vega 64. Second, because any TF advantage in earlier dev kits will have to be used as headroom for the already confirmed hardware RT.

So ~8.5TF NAVI + hardware RT is certainly closer to Vega64 than Vega56, even though it misses 4TF.

2080 is "only" a 9.5TF GPU if we are getting so bent out of shape for TF.

Negotiator

Banned

GNMX is extremely similar to DX11 according to some devs. Sony offered this API to facilitate quick & dirty ports (mainly Unity-based indie games that run like crap aka sub-30 fps). Vulkan/DX12/GNM also share a lot of similarities, otherwise the porting process would have been a nightmare for devs.

GNMX is the high level wrapper to GNM. There's no DX on PS hardware.

You may have read the sentence "This can be a familiar way to work if the developers are used to platforms like Direct3D 11"? It's not actually running DX11 anywhere, that's Windows proprietary

Let's say that they managed to boot Windows somehow, even though the PS4 is not IBM PC compatible (not sure how many people realize this, the Aeolia southbridge is totally alien in PC terms). Linux Kernel needs over 5000 lines of code to change to be able to boot on a PS4.

Or maybe they run Linux and Wine/DXVK, which would most likely incur a huge performance hit and this would skew numbers quite a bit.

LordOfChaos

Member

well thanks. might have confused that. pretty sure i read several times that there's compatibility for D3D11 procedures.

GNMX makes it easier for a developer to move over from DX11, but we're still talking about translating Firestrike's DX11 calls to GNMX for one, and even then every Windows API and library call would be missing on FreeBSD.

Just keep your skeptic's hat on for this benchmark is all I'm saying - who would be able to modify Futuremark's closed source binary for one, or run a non FreeBSD OS on FreeBSD (write all the drivers for another OS just to run a few tests? Put it on an online result browser? Windows, moreover?) for two...

The easiest possible explanation here is that it's another semicustom APU for another oddball Windows box like this

Next-Gen PS5 & XSX |OT| Console tEch threaD

I think he is insinuating AVX512 extension in Arden. Tbh I expected this in both consoles as well as Zen2 server chips. And this? Any significance in relation to Scarlett?

The Subor Z+ console team has disbanded - but it's not game over yet

Last September we took an early look at the Z+, a Windows 10 games console from Chinese manufacturer Zhongshan Subor. A…

ethomaz

Banned

Maybe they put the APU in a PC for test purposes.

Orbis / Gnm has a fallback layer for DX11 for lazy non-low level programming. so that's not impossible.

also the reason why we saw and still see so many shitty multiplatform conversions.

PS4 did not run DX11... it is an API similar to DX11.

SonGoku

Member

Still equivalent to 10TF of GCN for rasterization performance, nowhere close to the 13TF found in devkits

So ~8.5TF NAVI + hardware RT is certainly closer to Vega64 than Vega56, even though it misses 4TF.

This theory has a bunch of holes in it and needs a bunch of assumptions to make it work:

- Is it real?

- It's not a Chinese gaming chip?

- How is it linked to PS5 other than PS4 chips? What's to say this isn't cashing in on that to make it believable

- April dev kits weren't Navi because? Didn't Gonzalo appear before that?

- It performs like 10TF GCN even though devkits report 13TF of unknown arch

- Why would Sony use a Latin last name for the APU code name?

10TF*

2080 is "only" 9.5TF GPU if we are getting so bent out of shape for TF.

We are talking of navi not Turing

8TF Navi would perform similar to 6TF Turing.

Negotiator

Banned

China is a huge country/market, who cares if one company went bankrupt? As the title says "it's not game over yet".

The Subor Z+ console team has disbanded - but it's not game over yet

Last September we took an early look at the Z+, a Windows 10 games console from Chinese manufacturer Zhongshan Subor. A…

www.eurogamer.net

There are probably other companies that would also need a gaming APU for the Chinese market.

ethomaz

Banned

I believe it will be a killer move if they do that.

When's the last time a PS or Xbox launched outside of the Oct-Dec window? I seriously doubt we will see Q1 2020 for either console, especially not Sony, who has absolutely no reason to. That's a move someone looking to regain market share would do in desperation.

There are only pros in this move:

- No holiday stock issues

- Two big selling periods in the first year

LordOfChaos

Member

The Subor Z+ console team has disbanded - but it's not game over yet

Last September we took an early look at the Z+, a Windows 10 games console from Chinese manufacturer Zhongshan Subor. A…

www.eurogamer.net

A semicustom part in the same vein as that is what I suggested, not that exact part or company

I mean, what's the explanation for this APU running a Windows benchmark when Playstation is FreeBSD based? The closest plausible explanation if you squint would be that they also run Linux on it for testing and ran it through Wine, but then you'd expect such a performance drop, and to even land between the Vegas...

And why would they need to do that anyways, FreeBSD is what they know and customize and run on PS hardware for years.

There's so much about that you need to squint really hard to make it seem plausible, vs it just being a semicustom part for another obscure Windows box.

ethomaz

Banned

That is why I asked.. seems weird to see 3DMark benchmarks for PS4.

GNMX makes it easier for a developer to move over from DX11, but we're still talking about translating Fire Strike's DX11 calls to GNMX for one, and even then every Windows API and library call would be missing on FreeBSD.

Just keep your skeptic's hat on for this benchmark is all I'm saying. Who would be able to modify Futuremark's closed source binary for one, or run a non-FreeBSD OS on a FreeBSD machine for two (write all the drivers for another OS just to run a few tests? Put it on an online result browser? Windows, moreover?)...

The easiest possible explanation here is that it's another semicustom APU for another oddball Windows box like this

Next-Gen PS5 & XSX |OT| Console tEch threaD

I think he is insinuating AVX512 extension in Arden. Tbh I expected this in both consoles as well as Zen2 server chips. And this? Any significance in relation to Scarlett?

www.neogaf.com

But maybe for test only before they had the full PS4 hardware they used the APU in a PC with Windows and so they did the benchmarks.

I believe there is no issue installing Windows on a machine with PS4's APU because it is fully compatible.

Of course the final PS4 machine can perform better all around than that 3DMark Frankenstein test.

Dabaus

Banned

Sony have been quiet, haven't they?

ps5 final silicon dev kits might be out if we get an april/may launch.

#teamApril1st

What if that were to happen but it was only 8.5-9 Tflops, 399? Game over for Xbox division?

vpance

Member

I wouldn't be so happy if I were you, PS5 will be within spitting distance of Scarlett, if PS5 is shit XB2 will be shit as well.

Not exactly an exciting prospect

It's not dual, just symmetric

Not really, just a bunch of assumptions

Uninformed people

10-11TF is doable on 7nm, eating losses on a big chip short term until it can be shrunk to 6nm (2021)

7nm EUV from the get go is preferable though

Not even close to the mark, going by AMD official estimates:

13TF Vega = 10.4TF Navi (56CUs @1451MHz)

My bad, I meant GCN.

I suspect going near 400mm2 7nm will be a no no. They probably don't want to trigger EPA regulations/tariffs or something as far as CE devices go. Times have changed from PS3 days.
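For anyone who wants to sanity-check the "10.4TF Navi (56CUs @1451MHz)" figure quoted above, here is a rough sketch of the usual peak-FP32 arithmetic: compute units × 64 shaders per CU × 2 FLOPs per clock (one fused multiply-add) × clock speed. The function name `peak_tflops` is made up for illustration, and the 13TF-to-10.4TF equivalence is simply the estimate from the quoted post, not something the code derives.

```python
SHADERS_PER_CU = 64              # stream processors per compute unit (GCN and RDNA)
FLOPS_PER_SHADER_PER_CLOCK = 2   # one fused multiply-add counts as 2 FLOPs


def peak_tflops(compute_units: int, clock_mhz: float) -> float:
    """Theoretical peak FP32 throughput in TFLOPS (hypothetical helper)."""
    flops_per_clock = compute_units * SHADERS_PER_CU * FLOPS_PER_SHADER_PER_CLOCK
    return flops_per_clock * clock_mhz * 1e6 / 1e12


# Configuration quoted in the thread: 56 CUs at 1451 MHz.
navi_tf = peak_tflops(56, 1451)

# Ratio implied by the quoted "13TF Vega = 10.4TF Navi" estimate.
gcn_tf_per_navi_tf = 13.0 / navi_tf

print(f"Navi peak: {navi_tf:.1f} TF")
print(f"Implied GCN TF per Navi TF: {gcn_tf_per_navi_tf:.2f}")
```

This is peak paper throughput only. It says nothing about real game performance, which is the whole reason the Navi-vs-GCN conversion factor is being argued about here.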

xool

Member

I thought the Apisak etc work looked reliable - but that doesn't make it guaranteed, right

Still equivalent to 10TF of GCN for rasterization performance, nowhere close to the 13TF found in devkits

...

But the simple explanation is : we have 13TF dev kits that exceed even the ~8TF RDNA>GCN TF conversion because/if dev kits are/were running more powerful hardware to ease development before optimization.
