
Gen.Grievous

Member

Hmm, took this screenshot from Gamers Nexus:

It looks like the FE's front fan is exhausting hot air right into the CPU tower. I guess the FE is a no-no for me, as I'm using a tower cooler for my CPU.

You really think Nvidia didn't test this? Unless you're running with a crappy stock cooler (which I hope no sane person does with a budget to get a 3000 series card) or have an insane RAM overclock you will be fine.

Jack Videogames

Member

8GB on 1080p so the new 3070 and 3080 may struggle with ram if played at 4k

RPS says 4gb at 1080p, 8gb at 4K

Crysis Remastered PC requirements are finally here

The original Crysis was famous for melting PCs everywhere, but the PC requirements for its retooled successor Crysis Re…

GHG

Member

You really think Nvidia didn't test this? Unless you're running with a crappy stock cooler (which I hope no sane person does with a budget to get a 3000 series card) or have an insane RAM overclock you will be fine.

Unfortunately, these parts are sometimes tested predominantly on open test benches, because the manufacturers know that's how most reviewers and outlets benchmark cards. So some of the AIBs in particular design coolers that perform best on a test bench, and that cooling "performance" doesn't always carry over when the same cooler is placed in an enclosed case.

Even when a cooler is tested in a case, it would be useful to know which case and what fan configuration was used (including which case fans and what RPM they were spinning at), but the manufacturers never tell us that.

So basically we have no idea what impact this new cooler will have on case temperatures, CPU temps and airflow until these cards are in people's hands and they can test them for themselves.

Makoto-Yuki

Banned

Hmm, took this screenshot from Gamers Nexus:

It looks like the FE's front fan is exhausting hot air right into the CPU tower. I guess the FE is a no-no for me, as I'm using a tower cooler for my CPU.

i doubt this will affect your cooling/performance. if anything it might be 1-2C warmer. it's better to direct that warm air up through a fan to exhaust it than just push the warm air out into and around the case. the air flow will be more efficient and any added temperature on the CPU will be mitigated by the cooler case temp. if you're really worried then you'd put another intake directly in front of the CPU cooler (you should be doing this anyway...) to increase airflow. that image doesn't have a 3rd fan at the top front.

looking at the design of the FE it will do a better job of directing the airflow not just by the fan orientation but the solid metal around the fans. on the current FE cards the fans push the air out the sides but that just isn't possible on this card.

forgive my crude drawings but this is how my airflow is right now with a 2080 FE

and how it would be with a 3080 FE

J3nga

Member

You really think Nvidia didn't test this? Unless you're running with a crappy stock cooler (which I hope no sane person does with a budget to get a 3000 series card) or have an insane RAM overclock you will be fine.

Well, maybe it's designed specifically for AIOs. I have a Dark Rock 4 on my 3700X, which doesn't run that hot, but those i7/i9 Coffee Lake/Ice Lake chips can reach some high temps, though most people are probably using AIO solutions for those CPUs.

i doubt this will affect your cooling/performance. if anything it might be 1-2C warmer. it's better to direct that warm air up through a fan to exhaust it than just push the warm air out into and around the case. the air flow will be more efficient and any added temperature on the CPU will be mitigated by the cooler case temp. if you're really worried then you'd put another intake directly in front of the CPU cooler (you should be doing this anyway...) to increase airflow. that image doesn't have a 3rd fan at the top front.

looking at the design of the FE it will do a better job of directing the airflow not just by the fan orientation but the solid metal around the fans. on the current FE cards the fans push the air out the sides but that just isn't possible on this card.

GPUs exhaust some really hot air, so I doubt it would be just 1-2C warmer. I'm no physicist, so I can't say for sure what impact it would have, but I think it's worth keeping in mind if the FE is one of your options.

skneogaf

Member

RPS says 4gb at 1080p, 8gb at 4K

Crysis Remastered PC requirements are finally here

The original Crysis was famous for melting PCs everywhere, but the PC requirements for its retooled successor Crysis Re…

www.rockpapershotgun.com

Crysis Remastered system requirements won't melt your PC

The spit-and-shine re-release is still scheduled to release Sep 19.

Edit.

They seem to have changed it now, thankfully.

Armorian

Banned

i doubt this will affect your cooling/performance. if anything it might be 1-2C warmer. it's better to direct that warm air up through a fan to exhaust it than just push the warm air out into and around the case. the air flow will be more efficient and any added temperature on the CPU will be mitigated by the cooler case temp. if you're really worried then you'd put another intake directly in front of the CPU cooler (you should be doing this anyway...) to increase airflow. that image doesn't have a 3rd fan at the top front.

looking at the design of the FE it will do a better job of directing the airflow not just by the fan orientation but the solid metal around the fans. on the current FE cards the fans push the air out the sides but that just isn't possible on this card.

forgive my crude drawings but this is how my airflow is right now with a 2080 FE

and how it would be with a 3080 FE

In most GPUs other than blowers airflow works like this, so the CPU fan is getting hot air anyway (I'm not sure about the Nvidia FE 2xxx series, maybe it works as you described).

Agent_4Seven

Tears of Nintendo

Agent_4Seven A big reason why they went with 4K so heavily is because when you have so many cores the only way to get proper utilisation out of them is to increase resolution. That's the easiest & cheapest way to make use of them, anything else is going to be a massive pain and probably not done for various (game-dev economic) reasons. That's why Vega 64 was so good at 4K but was so far behind at lower resolutions as well - it had a lot of CUs which get automatically put to work as you increase resolution but they otherwise require much more fine-tuning on the devs' part in order to use them.

It's all cool, but with DLSS 2.0 and internal resolution sliders there's really, really no need for native 4K monitors or screen resolution, and even with Nvidia's own DSR there's no need for that.

As for rasterisation, they will keep increasing it because they have to - you still need shaders to do so much work & also for RT because it scales that perf as well. So even if they want to get rid of them, they can't. I think you will still see a significant boost to older more traditional games, but especially those who were compute-heavy. So any game that did really well with Vega for example, you can expect Ampere to smash them too.

As far as I am aware, there was no Async Compute in any of the games I've mentioned, for example, or in many other games, and it's the same story with Vulkan. The 2000 series only saw huge gains over Pascal in Async Compute / Vulkan-heavy games.

I don't know, if I had a 1080 Ti I'd have a hard time justifying a 3080 too, simply because 1080 Ti is still a beast & RTX is still not prevalent.

Exactly. I don't care about RT, and the 2080 Super / 2080 Ti's pathetic performance gain over the 1080 Ti is... well, quite frankly not worth even $100-200, let alone $700-1500.

On the other hand, I always upgrade for specific games (otherwise what's the point?), so while I upgraded to AMD for Deus Ex: MD, I'm looking at doing the same for Cyberpunk 2077. I just know how much I'm gonna love it & I definitely want to take advantage of RTX in that one. Otherwise? Meh. There will be better models than the 3080 and at better prices, within a year.

And here lies the problem. This game is 4 months away, there are no system requirements for each common resolution, FPS and settings, and NVIDIA didn't show how well even the current build of the game runs on a 3080. We literally know nothing about how this game will perform on even currently available cards, let alone on the 3000 series. So basically what you're doing is buying a 3000 card in the hope that it can run the game at whatever screen resolution, FPS and settings you want. You're gambling at this point, throwing your money at a "fix" for a possible problem without knowing whether that "fix" will even fix it. Idk, it's just the way I see it.

And what did NVIDIA show? DOOM effin' Eternal, a game which runs perfectly fine even on a potato PC with Ultra Nightmare settings at 1080p. What a fuckin' showing for a new "Flagship" GPU, bra-vo.

skneogaf

Member

GPU cooling has always been a little bit strange: Founders Edition cards used to suck in air and blow it out the back of the case, while non-Founders cards would just push the heat off the heatsink into the case.

It's about time we saw air being pushed through a heatsink, which seems to be the best approach for airflow, although current motherboard designs may need to be reworked if it's going to be standard in the future.

I remember Linus doing a video where he used GPU PCIe extension "things", and maybe some of us will need to use a lower slot with an extension so as not to saturate the CPU cooler and/or the RAM modules with hot air.

GHG

Member

All this talk of GPU coolers and airflow makes me miss the simplicity of blower coolers. Unless you want to overclock, the only downside of those coolers is noise. As long as it's getting enough cool air it's all good; you don't need to worry about it dumping a shit load of hot air back into your case.

Nemesisuuu

Member

Ulysses 31

Member

Rikkori

Member

Gorgeous! Loving the workflow.

We literally know nothing about how this game will perform on even currently available cards, let alone on 3000 series. So basially what you're doing is buying 3000 card in hope that it can run the game in whatever screen resolution, FPS and settings you want it to run. So you're basically gambling at this point and throwing your money at a "fix" for a possible problem and you don't know if you can even fix it with that "fix" or not. Idk, it's just the way I see it.

It's not really gambling because I know two very important things: a) I want a faster GPU than the one I have & b) I'm going to play Cyberpunk 2077 regardless. Therefore my options are constrained. AMD is out, because no DLSS & CUDA (which I want for other things). 3070 is out because 8 GB. 3090 is out because it's too expensive. Used GPUs are out because my local market sucks. So it's really down to - do I buy a 3080 10GB at launch or do I wait and hope the 20 GB launches before November? But then I probably won't want to pay the extra.

So as you can see, there's no gambling involved, just thinking.

Btw, you can't compare Turing vs Pascal to Turing vs Ampere, the change to arch is substantial and the 2xFP32 are the important part for the games I mentioned. This is why I say it's like Vega vs Pascal instead. And I would say they very well do use Async, all of them (at least all the Nixxes games for sure). Vulkan itself is meaningless, it's down to devs & their engine. I remember the issues with The Surge 2 switching to Vulkan and then a lot of people crying about how it's not running as well as Doom etc. and for them to go back to DX11 because The Surge 1 ran better. It's a mis-diagnosis of the situation to attribute so much good "optimisation" to the API rather than the developers & the goals and targets set.

Deus Ex: Mankind Divided - Yes (see Nixxes CEO GDC talk about DX12 switch for this, applies to SotTR too)

Quantum Break - Probably? (need to watch their Northlight engine talks again)

Horizon: Zero Dawn - Yes

Shadow of the Tomb Raider - Yes

Metro: Exodus - Yes

Detroit: Become Human - No idea

Agent_4Seven

Tears of Nintendo

It's not really gambling because I know two very important things: a) I want a faster GPU than the one I have & b) I'm going to play Cyberpunk 2077 regardless. Therefore my options are constrained. AMD is out, because no DLSS & CUDA (which I want for other things). 3070 is out because 8 GB. 3090 is out because it's too expensive. Used GPUs are out because my local market sucks. So it's really down to - do I buy a 3080 10GB at launch or do I wait and hope the 20 GB launches before November? But then I probably won't want to pay the extra.

So as you can see, there's no gambling involved, just thinking.

Just curious, which GPU do you have now / had before (if you already sold it)?

Btw, you can't compare Turing vs Pascal to Turing vs Ampere, the change to arch is substantial and the 2xFP32 are the important part for the games I mentioned. This is why I say it's like Vega vs Pascal instead. And I would say they very well do use Async, all of them (at least all the Nixxes games for sure). Vulkan itself is meaningless, it's down to devs & their engine. I remember the issues with The Surge 2 switching to Vulkan and then a lot of people crying about how it's not running as well as Doom etc. and for them to go back to DX11 because The Surge 1 ran better. It's a mis-diagnosis of the situation to attribute so much good "optimisation" to the API rather than the developers & the goals and targets set.

We'll see in about a week+ from now

Rikkori

Member

Just curious, which GPU do you have now / had before (if you already sold it)?

We'll see in about a week+ from now

RX480 now, Vega 64 before.

Agent_4Seven

Tears of Nintendo

RX480 now, Vega 64 before.

In your case the performance gain will be massive. I wish I could say that about myself and my 1080 Ti with absolute certainty.

longdi

Banned

In terms of cooler noise and performance, the GeForce RTX 3080 operates at a peak temperature of 78C when hitting its peak TBP of 320W with a noise output of just 30dBA. For comparison, the Turing Founders Edition coolers peak out at 81C with a noise output of 32dBA when hitting their TBP of 240W (RTX 2080 SUPER). In NVIDIA's own testing, they reveal that the GeForce RTX 3080 averages at around 1920 MHz with a GPU power draw of 310W and a peak temperature of 76C.

This is also where NVIDIA gets its 1.9x efficiency figure from as the RTX 3080 can deliver over 100 FPS while being cooler and quiet versus the 60 FPS of its Turing gen predecessor.

NVIDIA touts this PCB as an overclocking marvel with unprecedented GPU overclock headroom that most users can leverage from to gain even faster performance. But as pointed out earlier by us, the Founders Edition PCB is not the reference design and that will come with a standard rectangular PCB. Water block manufacturers have also confirmed this which we reported here.

That's a nice stock boost clock, meaning the 3080 is about 33 TF in use.

Add a little OC, +100 MHz with faster, louder fan speeds, and it should run at 2.02 GHz, making it a 35.2 TF card.
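For anyone who wants to check those numbers, here is a rough sketch of the usual back-of-the-envelope formula: shader cores times 2 FLOPs per clock (one fused multiply-add) times clock speed. The 8704 CUDA core count for the 3080 is an assumption brought in for the example, it isn't stated in the post above, and the function name is made up for illustration.

```python
# Theoretical peak FP32 throughput: cores x 2 FLOPs per clock (one FMA) x clock.
# ASSUMPTION: 8704 CUDA cores for the RTX 3080 (not given in the post above).
RTX_3080_CUDA_CORES = 8704


def fp32_tflops(cuda_cores: int, clock_mhz: float) -> float:
    """Return theoretical peak FP32 TFLOPS at the given core clock in MHz."""
    flops = cuda_cores * 2 * clock_mhz * 1e6
    return flops / 1e12


stock = fp32_tflops(RTX_3080_CUDA_CORES, 1920)        # NVIDIA's quoted average clock
overclocked = fp32_tflops(RTX_3080_CUDA_CORES, 2020)  # the +100 MHz scenario
print(f"{stock:.1f} TF at 1920 MHz, {overclocked:.1f} TF at 2020 MHz")
```

This is a paper figure only; it says nothing about how much of that throughput a real game can actually use.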

A 3080 is as powerful as SeX + PS5 + One X + PS4 Pro + PS4 + One combined

Oh, and don't buy the FE if you want to add a water block later... damn son!

Md Ray

Member

A 3080 is as powerful as SeX + PS5 + One X + PS4 Pro + PS4 + One combined

Not really. TFLOPs are irrelevant.

I thought this was obvious by now.

2080 Ti: 14.3 TFlops

3070: 20.4 TFlops

Going by NVIDIA's own perf numbers, the 2080 Ti performs more or less the same as the 3070 with 30% fewer TFlops. Put the other way, the 3070 performs more or less the same as the 2080 Ti even though it has ~43% more TFlops. By your logic, shouldn't the 3070 be ~43% more powerful than the 2080 Ti?
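The two percentages come from the same pair of numbers, just taken against different baselines. A quick sketch of the arithmetic, using only the TFlops figures quoted in the post (the helper names are made up for illustration):

```python
# TFlops figures as quoted in the post above.
TFLOPS_2080_TI = 14.3
TFLOPS_3070 = 20.4


def percent_more(value: float, baseline: float) -> float:
    """How much larger `value` is than `baseline`, in percent of `baseline`."""
    return (value / baseline - 1) * 100


def percent_fewer(value: float, baseline: float) -> float:
    """How much smaller `value` is than `baseline`, in percent of `baseline`."""
    return (1 - value / baseline) * 100


more = percent_more(TFLOPS_3070, TFLOPS_2080_TI)    # 3070 relative to the 2080 Ti
fewer = percent_fewer(TFLOPS_2080_TI, TFLOPS_3070)  # 2080 Ti relative to the 3070
print(f"3070 has {more:.0f}% more TFlops; 2080 Ti has {fewer:.0f}% fewer")
```

Same gap, different denominator, which is why "30% fewer" and "~43% more" are both right.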

nemiroff

Gold Member

Hmm, took this screenshot from Gamers Nexus:

It looks like the FE's front fan is exhausting hot air right into the CPU tower. I guess the FE is a no-no for me, as I'm using a tower cooler for my CPU.

Ok, but you didn't tell us GN's thoughts about it. So what did they say?

J3nga

Member

Ok, but you didn't tell us GN's thoughts about it. So what did they say?

Timestamped it

He didn't say anything specific. A guy who overclocks CPUs using nitrogen is unlikely to be a fan of tower coolers, so maybe it simply slipped his mind, I really don't know. It looks like a good cooling solution IF you're using an AIO, since a lot of the heat is exhausted rather than being trapped under the backplate, and I assume memory will see a big decrease in temperature. I just don't think feeding your tower hot air is the best thing in the world. Testing will tell, I guess.

nemiroff

Gold Member

Timestamped it

He didn't say anything specific. A guy who overclocks CPUs using nitrogen is unlikely to be a fan of tower coolers, so maybe it simply slipped his mind, I really don't know. It looks like a good cooling solution IF you're using an AIO, since a lot of the heat is exhausted rather than being trapped under the backplate, and I assume memory will see a big decrease in temperature. I just don't think feeding your tower hot air is the best thing in the world. Testing will tell, I guess.

Thanks. I watched the entire segment. Their conclusion: Not necessarily a bad thing, not necessarily a good thing.. Literally on a case by case basis.

I still have no idea what to go for. Right now, it's ROG Strix, Gaming Trio or FE. If we don't get reviews and benchmarks before launch, it's either going to be a lottery or 2021 (they will sell out quickly).. Scary times, lol

J3nga

Member

Thanks. I watched the entire segment. Their conclusion: Not necessarily a bad thing, not necessarily a good thing.. Literally on a case by case basis.

I still have no idea what to go for. Right now, it's ROG Strix, Gaming Trio or FE. If we don't get reviews and benchmarks before launch, it's either going to be a lottery or 2021 (they will sell out quickly).. Scary times, lol

Well, the embargo for the FE drops on the 14th (that's 3 days before launch) and for partner cards on the 17th (launch date), so you'll have time to weigh the pros and cons of each.

nemiroff

Gold Member

Well, the embargo for the FE drops on the 14th (that's 3 days before launch) and for partner cards on the 17th (launch date), so you'll have time to weigh the pros and cons of each.

But will (pre-)ordering be off the table until release..?

J3nga

Member

But will (pre-)ordering be off the table until release..?

I don't know, and NVIDIA didn't specify. You can sign up on NVIDIA's site to be notified once sales go live; whether that will be before the 17th or on it is yet to be seen.

pesaddict

Banned

Ouch !

www.kotaku.com.au

CrustyBritches

Gold Member

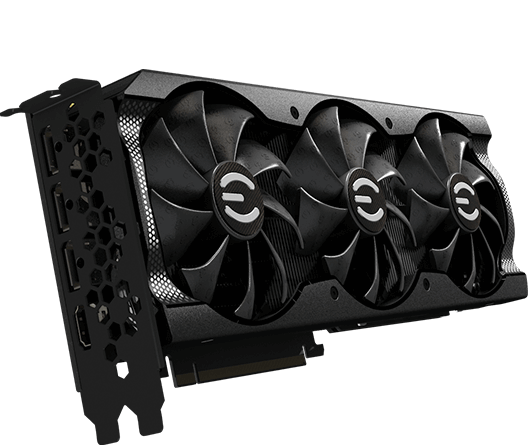

I like the look of the new EVGA cards. The curves and offset fan of the 3070 LED model are more appealing than the regular black, but I would probably disable the lighting, as I'm kind of OD'd on that stuff lately.

GHG

Member

I like the look of the new EVGA cards. The curves and offset fan of the 3070 LED model are more appealing than the regular black, but I would probably disable the lighting, as I'm kind of OD'd on that stuff lately.

Really dislike the EVGA cards on the whole this time round. The red plastic present on all of their cards pisses me off, it's too opinionated. I don't understand why they couldn't keep their cards colour neutral like they have in previous generations.

grfunkulus

Banned

You need at least a 750 W PSU to run the 3080

Recommended. I'm sure with a good 650 W supply (RMX 650 by Corsair) and only running games without a lot of additional drives/RGB/etc., you can get away with it. My current build is showing 460-something watts with a 9700K and 2080. The 3080 could be maybe 100 watts higher, or lower if I undervolt the GPU, which I do because it reduces heat and barely affects performance if you do it right. I'll sacrifice the CPU overclock or turn it down a bit. The Noctua cooler will be working overtime to keep the CPU cool with the Founders cooler design anyway.

So I'll probably be at ~550 W peak, which isn't the ideal of 70% load, but it's not 95% or something crazy either.
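To put that in numbers, here is a minimal sketch of the load calculation, using the ~550 W estimate from the post and comparing a 650 W unit against the recommended 750 W. The function name is made up for illustration, and the 550 W figure is the poster's own estimate, not a measurement.

```python
def psu_load_percent(draw_watts: float, psu_rated_watts: float) -> float:
    """Return system draw as a percentage of the PSU's rated output."""
    return draw_watts / psu_rated_watts * 100


estimated_peak_w = 550  # the poster's estimated peak draw with a 3080

for rated_w in (650, 750):
    load = psu_load_percent(estimated_peak_w, rated_w)
    print(f"{rated_w} W PSU: {load:.0f}% load at {estimated_peak_w} W")
```

That works out to roughly 85% on the 650 W unit and 73% on a 750 W one, so the recommended size lands close to the 70% target while the 650 W unit runs noticeably above it.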

skneogaf

Member

I'm definitely looking for one with more than one HDMI out, as I've lost the ability to use the USB-C adapter that I used for my Oculus Rift.

I've found the Asus and Gigabyte cards support more than one.

The amount of RAM on the 3090 has made me think about how game developers aren't utilising the excessive amount of RAM we have in PCs, whether system RAM or VRAM.

DeaDPo0L84

Member

I think it's either the FE or Asus Strix for me. If the Asus goes up for preorder a few days before the 17th, I'll just go that route. All the rest of the cards are terrible.

BluRayHiDef

Banned

Really dislike the EVGA cards on the whole this time round. The red plastic present on all of their cards pisses me off, it's too opinionated. I don't understand why they couldn't keep their cards colour neutral like they have in previous generations.

The XC3 version of the 3090 is color neutral.

BluRayHiDef

Banned

I've got a 750W EVGA PSU, a 3800X, 16GB of RAM, and an SSD and an HDD. Am I going to have enough juice for a 3080? Or do I need a beefier PSU?

Here you go, matey.

Unknown Soldier

Member

I really hope they release the Marbles demo so people can play with it.

00_Zer0

Member

I'm taking the 17th off of work and heading to Microcenter an hr before they open, just to make sure I get a 3080. Got a friend wants to buy my 1080ti for $150 (he currently has a 970), and I'll be installing it in his machine then as well.

I have a Microcenter near me and I texted them on their customer support line about availability of the 3080's on the 17th and this was their reply:

"We do not have an estimated arrival date at this time. I would recommend checking our website for any updates."

I know that I can see the inventory at my local store when they decide to post it, but I don't know if I want to take a chance with them when they might not have the card I want. My first choice is a FE card which may be scarce, who knows?

I signed up on Nvidia's site to be notified when I can order it from them, but I worry about their website crashing once it goes up for sale.

I also signed up for notifications on nowinstock.net and gearinstock.com to be told when the 3080 becomes available. I am not sure how reliable these two sites are or if they are a scam. Does anyone else know of any legit item tracker sites?

I'm pretty much trying to cover all my bases to get the 3080 on launch day before they are all sold out.

CrustyBritches

Gold Member

Really dislike the EVGA cards on the whole this time round. The red plastic present on all of their cards pisses me off, it's too opinionated. I don't understand why they couldn't keep their cards colour neutral like they have in previous generations.

Like BluRayHiDef was saying, they do have the XC3 as well, but I'm more into the curves and offset fan look of the FTW3. Of course, the look of the actual card isn't the most important factor, but depending on the case you have it can matter. I have the ThermalTake Core V21 with mobo in horizontal position, and placed on the table where it's visible anytime I'm using it, so I don't mind a good-looking card.

Rentahamster

Rodent Whores

Really dislike the EVGA cards on the whole this time round. The red plastic present on all of their cards pisses me off, it's too opinionated. I don't understand why they couldn't keep their cards colour neutral like they have in previous generations.

Agreed. Unless the red part is changeable somehow, it's gonna end up clashing with a lot of systems in terms of color scheme.