
Rikkori

Member

GaviotaGrande

Banned

It was only 1200 EUR.

It lasted 2 years.

I don't get why it's funny.

P.S. I like this meme

Patrick S.

Banned

Cool, some uninformed person from France bought my RTX Titan for more than I paid for it. I feel good.

Why buy prebuilt shit? How many watts do you have on the PSU? I am sure there is going to be some extension cable which is going to be 2x 6-pin to 12-pin or something like that, but you cannot have a 450W PSU....

Just wait till he claims a refund through PayPal and you have lost both the card and the money.

M1chl

Currently Gif and Meme Champion

Just wait till he claims a refund through PayPal and you have lost both the card and the money.

This was payment through IBAN : )

Patrick S.

Banned

Jack Videogames

Member

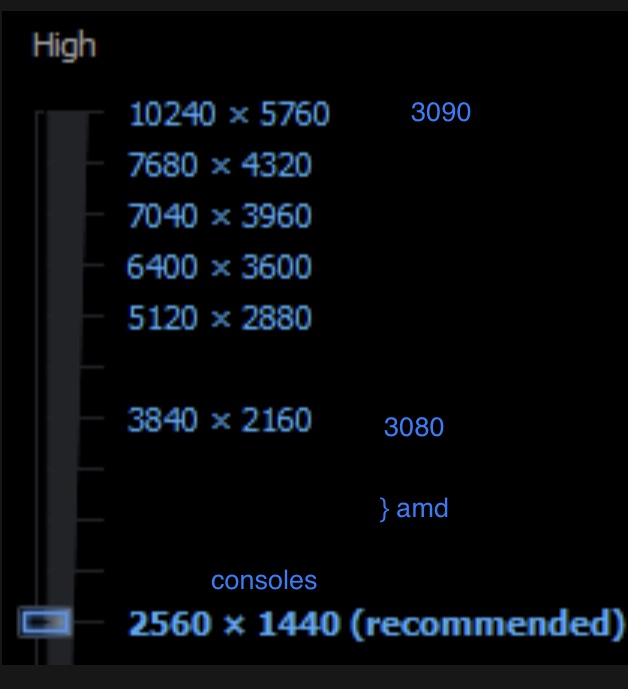

Recently I've started doubting if the 3080 is such a worthwhile upgrade to my 1080 Non-TI because of the vram amount.

But let's say I buy it - what would be the worst case scenario in 4 years time if I'm mostly going to play at 4k 60fps? Having to lower textures from Ultra to V. High? Having to lower rendering quality to 95%?

I'm an enthusiast gamer but I'm not a techhead. Is the 3080 likely to last me 4 years?


Seriously? I'm not going to spend a single minute to play with settings, but the 2080 Ti part is obviously a mix of middle and high settings. Additionally the card is massively overclocked. 2080 Ti does not reach 180-200% 2080 performance. Neither in this world nor in hell. Maybe in LN2 Land.

bargeparty

Member

Let's not upgrade cause vram? Just disregarding all the other benefits?

BluRayHiDef

Banned

Recently I've started doubting if the 3080 is such a worthwhile upgrade to my 1080 Non-TI because of the vram amount.

But let's say I buy it - what would be the worst case scenario in 4 years time if I'm mostly going to play at 4k 60fps? Having to lower textures from Ultra to V. High? Having to lower rendering quality to 95%?

I'm an enthusiast gamer but I'm not a techhead. Is the 3080 likely to last me 4 years?

Get a 3090 or wait for the rumored 3080 20GB variant.

Rentahamster

Rodent Whores

Rikkori

Member

Ampere details: https://videocardz.com/newz/nvidia-details-geforce-rtx-30-ampere-architecture

3080 Fortnite RTX with and without DLSS, 4K Epic Settings


Dampf

Member

Ampere details: https://videocardz.com/newz/nvidia-details-geforce-rtx-30-ampere-architecture

3080 Fortnite RTX with and without DLSS, 4K Epic Settings

Jesus christ, only 28 FPS at native 4K in Fortnite on a 3080? Those RT effects must be sucking a lot. Doesn't Epic settings in 4K run at well above 60 FPS on a 2080 or something?

Tripolygon

Banned

Some good breakdown of the difference compared to Turing in the thread.


Rikkori

Member

Jesus christ, only 28 FPS at native 4K in Fortnite on a 3080? Those RT effects must be sucking a lot. Doesn't Epic settings in 4K run at well above 60 FPS on a 2080 or something?

Not unexpected; UE4 with RTX going full hog takes a lot of horsepower. Don't forget this classic:

Tripolygon

Banned

DeaDPo0L84

Member

Didn't Jensen mention the fe cards light up? Also will there be a review roundup before launch on the 17th? I know 4k is getting all the attention but I'm curious about 1440p/120hz.

Desaccorde

Member

So what should we deduce from Ryan Smith's Final Words tweet? Sorry, I am illiterate on the tech behind GPUs.

YCoCg

Member

Let's not upgrade cause vram? Just disregarding all the other benefits?

...if there's cards coming later on which have more VRAM then yes?

Lethal01

Banned

But........SSD.

Are very important, which is why we are getting RTXIO

INC

Member

Didn't Jensen mention the fe cards light up? Also will there be a review roundup before launch on the 17th? I know 4k is getting all the attention but I'm curious about 1440p/120hz.

This is where my head's at. I couldn't care less about 4K; 1440p and VR are what I wanna see more attention on.

Unknown Soldier

Member

So what should we deduce from Ryan Smith's Final Words tweet? Sorry, I am illiterate on the tech behind GPUs.

I think it's interesting the consoles have "dedicated blocks" for their speedy I/O, and Nvidia has RTX I/O. So...what will Big Navi have? Imagine if it's nothing...I wouldn't put it past AMD to fail to include their console tech on their PC video cards.

bargeparty

Member

...if there's cards coming later on which have more VRAM then yes?

That's only part of what I'm seeing on this forum. Some are questioning if the performance will be worth it, really?

Whatever.

HeisenbergFX4

Gold Member

Prices

Amazon.com: ZOTAC Gaming GeForce RTX 3080 Trinity 10GB GDDR6X 320-bit 19 Gbps PCIE 4.0 Gaming Graphics Card, IceStorm 2.0 Advanced Cooling, Spectra 2.0 RGB Lighting, ZT-A30800D-10P

Amazon.com: ZOTAC GAMING GeForce RTX 3090 Trinity 24GB GDDR6X 384-bit 19.5 Gbps PCIE 4.0 Gaming Graphics Card, IceStorm 2.0 Advanced Cooling, SPECTRA 2.0 RGB Lighting, ZT-A30900D-10P

fersnake

Member

Never get tired of this meme.

It's a classic now xD

MightySquirrel

Banned

Prices

Nah, that's just Amazon doing its usual early adopters stuff.

https://www.overclockers.co.uk/Zotac-GeForce-RTX-3080-Trinity-10GB-GDDR6X-PCI-Express-Graphics-Card-GX-122-ZT.html

longdi

Banned

Damn, I'm still conflicted: 3080 now or 3080S/Ti 9 months later.

The 10GB limitation is pissing my balls off.

Will 3080S/Ti have 20GB or 12GB?

Is 3080S/Ti an upscaled 3080, hence 20GB, or a downscaled 3090, hence 12GB?

If it is the latter, then 3080 looks good to go.

Do you believe Nvidia will give us 20GB on a non-Titan-priced GPU?

Rentahamster

Rodent Whores

Damn, I'm still conflicted: 3080 now or 3080S/Ti 9 months later.

How much do you really expect the resale value of a 3080 to drop in nine months, realistically? Assuming AMD doesn't bring out a card that competes with it.

Unknown Soldier

Member

Prices

Not likely to be the real final prices. You can preorder if you feel like it, and if the price doesn't adjust before it ships just cancel it. I just threw in a 3090 preorder, worst thing that happens is I have to cancel it. Not a big deal.

longdi

Banned

How much do you really expect the resale value of a 3080 to drop in nine months, realistically? Assuming AMD doesn't bring out a card that competes with it.

I expect the 3080S/Ti to fill in the $1099 price gap, so prices aren't a big factor.

I guess it's more the performance: will the S/Ti version give the same 30% uplift as expected of a Ti, especially with bigger VRAM? Next gen games will get into full swing late 2021 imo.

Times are ...confusing and conflicting.

3080 is already using the big die unlike recent x80s..is there more headroom left? Will VRAM be even more critical?

While I like to buy once and forget for 3 years, you may be right on the resale thing. Ampere could be all about reselling now.


Rentahamster

Rodent Whores

I expect the 3080S/Ti to fill in the $1099 price gap, so prices aren't a big factor.

I guess it's more the performance: will the S/Ti version give the same 30% uplift as expected of a Ti, especially with bigger VRAM? Next gen games will get into full swing late 2021 imo.

Times are ...confusing and conflicting.

3080 is already using the big die unlike recent x80s..is there more headroom left? Will VRAM be even more critical?

While I like to buy once and forget for 3 years, you may be right on the resale thing. Ampere could be all about reselling now.

Save yourself the anguish and just buy a 3080 now and sell it once the 3080 Super leaks start coming out.

Jigga117

Member

Don't be naive.

It's an amazing feature.

Self burning fuse of sorts.

Buy this thing, later on buy bumped version.

Some would buy it even if it was just a piece of wood with NV sticker on it.

Yep, hi, I am that guy.

BluRayHiDef

Banned

Damn, I'm still conflicted: 3080 now or 3080S/Ti 9 months later.

The 10GB limitation is pissing my balls off.

Will 3080S/Ti have 20GB or 12GB?

Is 3080S/Ti an upscaled 3080, hence 20GB, or a downscaled 3090, hence 12GB?

If it is the latter, then 3080 looks good to go.

Do you believe Nvidia will give us 20GB on a non-Titan-priced GPU?

Just get a 3090 and call it a day.

ZywyPL

Banned

New benchmarking tools from Nvidia that can measure everything under the sun with one exception - can you guess what it is?

SSD I/O bandwidth? ;p

geordiemp

Member

Pretty impressed to see Nvidia get greater transistor density on Samsung 8N than AMD got on their first two attempts on TSMC 7.

3080/3090 = 44.6 MT/mm^2

3070 = 44.3 MT/mm^2

5700/XT = 41 MT/mm^2

Radeon VII = 39.97 MT/mm^2
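For anyone who wants to check density figures like these, here is a minimal sketch of the arithmetic: transistor count divided by die area, expressed in millions of transistors per mm^2. The transistor counts and die areas in the table are commonly cited public figures, not numbers from this thread, so treat them as assumptions; the function and dictionary names are made up for illustration.

```python
# Transistor density in millions of transistors per mm^2 (MT/mm^2).
# The counts and die areas below are commonly cited public figures,
# NOT taken from this thread; treat them as assumptions.


def density_mt_per_mm2(transistors: float, die_area_mm2: float) -> float:
    """Return transistor density in MT/mm^2."""
    if die_area_mm2 <= 0:
        raise ValueError("die area must be positive")
    return transistors / 1e6 / die_area_mm2


# name -> (transistor count, die area in mm^2), assumed values
CHIPS = {
    "GA104 (3070)": (17.4e9, 392.5),
    "Navi 10 (5700/XT)": (10.3e9, 251.0),
    "Vega 20 (Radeon VII)": (13.23e9, 331.0),
}

for name, (count, area) in CHIPS.items():
    print(f"{name}: {density_mt_per_mm2(count, area):.2f} MT/mm^2")
```

Small differences in the assumed transistor count or die area shift the result by a few tenths, which is probably why different sources quote slightly different densities for the same chip.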

All the nodes have a similar pitch of around 30 nm and similar densities, including Intel's.

The sauce is the gate technology: at TSMC 7nm the fin is supposedly 6 nm wide, and it's the gate material technology as well as how it's made that gives the frequency and power performance.

How good the Samsung node is will be seen in frequencies and power use.

7nm is just marketing, as is 8 nm.


Sleepwalker

Member

Never get tired of this meme.

Hate that I speak Spanish and can't enjoy it at all

Agent_4Seven

Tears of Nintendo

After carefully examining specs (RAM bandwidth and memory data rates) of 2080 Super, 2080Ti, 3080 and even RTX Titan, I just cannot believe that 3080 will deliver huge performance gains in rasterization and in native 1440p / 4K. And I'm talking about 60-90 FPS difference (at the very least) for both min and max frame rates in not Async Compute / Vulkan games of which there are very few and that's even assuming that you're playing them, planning to play them or care about them at all. Sure, you can make games load faster via RTX I/O boost, but it won't give you massive boost in frame rates. Also, who cares if 3000 series is x2 better when it comes to RT performance if 99,9% of games are not even using RT or DLSS for that matter, and you can't force DLSS to work in not supported games.

I don't care about 4K performance (I have a 1440p 144Hz monitor), and I don't care about RT until at least 70% of all AAA, AA and even B level games are using RT. DLSS on the other hand is a really cool feature to have, but it also needs to be supported much more widely, or at the very least support for older demanding games needs to be added. I can sell my 1080Ti for $380 (I bought it for something like $430+ 2.5 years ago) right now, easily, but for now and without seeing real world benchmarks, I just don't think that the 3080 will be a significant enough upgrade in terms of rasterization performance in native 1440p / 4K in the most demanding games out there - Deus Ex: Mankind Divided, Quantum Break, Horizon: Zero Dawn, Shadow of the Tomb Raider, Metro: Exodus, Detroit: Become Human and a bunch of other old and also very demanding games, not to mention the ones we haven't even played yet, let alone seen.

NVIDIA again trying to sell us the promise of the future which will be achievable and somewhat utilized when it comes to RT and DLSS not today, not tomorrow, not next month and next year, but maybe 5-6 years from now, during which we'll get at least 2 new generation of GPUs from both (or even triple if Intel's GPUs will be very good and competitive enough) sides. What they should focus on also and more heavily, is huge performance gains in rasterization cuz overwhelming majority of games will still be from 70% to 100% raster, so lighting, reflections, global illumination etc. etc. etc. will be faked in games and what's the point in RT and DLSS if your new $700-1500 card can't even run these games with min 100+FPS without RT and DLSS cuz they don't have support for it or Async Compute / Vulkan?

September 14th can't come soon enough.


Rikkori

Member

As for rasterisation, they will keep increasing it because they have to - you still need shaders to do so much work & also for RT because it scales that perf as well. So even if they want to get rid of them, they can't. I think you will still see a significant boost to older more traditional games, but especially those who were compute-heavy. So any game that did really well with Vega for example, you can expect Ampere to smash them too.

I don't know, if I had a 1080 Ti I'd have a hard time justifying a 3080 too, simply because 1080 Ti is still a beast & RTX is still not prevalent. On the other hand, I always upgrade for specific games (otherwise what's the point?), so while I upgraded to AMD for Deus Ex: MD, I'm looking at doing the same for Cyberpunk 2077. I just know how much I'm gonna love it & I definitely want to take advantage of RTX in that one. Otherwise? Meh. There will be better models than the 3080 and at better prices, within a year.

Roman Empire

Member

You need at least a 750 W PSU to run the 3080