dampflokfreund

Red Dead Redemption looks better... and seems to have a bigger draw distance as well.

Are you serious? It looks so much worse than Zelda U.

Looks worse and runs at sub-30 fps much of the time. Was that game even native 720p?

To my eyes it looks much better, since it doesn't have the messed-up, unphysical bloom effect that is present on most characters in Wii U first-party games. Makes me want to take sandpaper and grind the characters down until they reflect light in a natural way.

You forgot to mention that only half of its memory is used for games, that it's only half as fast, and that a 119-page GPU feature & power analysis thread pointed out the GPU being less powerful after all.

Simply not true. Wii U has more / faster / better structured memory. 4x more. A faster GPU with more modern shader capabilities. And it's capable of running great-looking games at 60fps.

Seriously, Bayo 2 looks amazing and is a day 1 purchase but it looks nothing like GoW3. Hell, there aren't many games on PS4 that look as good yet!

I don't understand why everyone is saying Mario Kart 8 has some of the best graphics. My GF bought it, and when I played it, there was nothing about the graphics that screamed "this is better than PS3". If you are comparing it to the old versions of the same game, then yes, it's a big jump, but not compared to what's been done on PS3/360.

M°°nblade;116975675 said: You forgot to mention that only half of its memory is used for games, that it's only half as fast, and that a 119-page GPU feature & power analysis thread pointed out the GPU being less powerful after all.

It is a big jump if you notice framerates/resolutions. The Sega racing games and Banjo-Kazooie: Nuts & Bolts are comparable to Nintendo's look, and those games were sub-30fps/720p, while MK8 is 60fps/720p, with much better lighting and shadows.
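To put numbers on the 30 vs. 60fps difference, here's a back-of-the-envelope sketch. It assumes native 1280x720 output and ignores overdraw, AA and everything else a real renderer does; all it shows is that 60fps halves the time available per frame and doubles the pixels per second compared to 30fps.

```python
# Rough per-frame time budget and pixel throughput at 720p.
# Assumption: native 1280x720 output; overdraw, AA and post-processing ignored.

WIDTH, HEIGHT = 1280, 720


def frame_budget_ms(fps: float) -> float:
    """Milliseconds available to render one frame at the given framerate."""
    return 1000.0 / fps


def pixels_per_second(fps: float, width: int = WIDTH, height: int = HEIGHT) -> int:
    """Final-image pixels produced per second (no overdraw or AA counted)."""
    return int(width * height * fps)


for fps in (30, 60):
    print(f"{fps} fps: {frame_budget_ms(fps):.2f} ms/frame, "
          f"{pixels_per_second(fps) / 1e6:.1f} Mpixels/s")
```

That prints roughly 33.33 ms vs. 16.67 ms per frame, and 27.6 vs. 55.3 Mpixels/s.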

This thread is just gonna go in circles, with people posting pics of sub-30fps games to compare them to 60fps games. Not to mention the comparisons between completely different styles.

Yes, there has been, and it left the Wii U with a 160-ALU / 176 GFLOPS GPU, which is significantly less than what both the Xbox 360 and the PS3 have. People can believe all they want, but there is no evidence that it's really more powerful.

I am quite familiar with that thread. Are you suggesting that any kind of consensus has ever been reached there?

M°°nblade;116977952 said: Yes, there has been, and it left the Wii U with a 160-ALU / 176 GFLOPS GPU, which is significantly less than what both the Xbox 360 and the PS3 have. People can believe all they want, but there is no evidence that it's really more powerful.

It is not when you look at the fact that the geometry is piss-poor, with some objects having a much lower poly count than GT and Forza on PS360. Nintendo achieved 60 fps by butchering a bunch of stuff like AA and poly count. So yeah, MK8 proves nothing to me.

There's no reasoning with loons who keep going on about Shin'en, 1080p and DR, DR, DR when the damn game has very low-poly objects and looks like absolute garbage. Fourth Storm was the most reasonable poster in that thread, and his estimate of 176 GFLOPS was independently corroborated by another trusted forum poster who had earlier been sceptical of it. I will take their word, and the fact that there is no game on the Wii U rivalling GoW3 or U3, as proof that the Wii U GPU is 176 GFLOPS.
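For reference, 176 GFLOPS is what the usual theoretical-peak formula gives if you assume 160 ALUs, one multiply-add (2 FLOPs) per ALU per clock, and a 550MHz GPU clock. The clock and the 2-FLOPs-per-clock figure are assumptions here, not something settled in this thread, and a peak number like this says nothing about real-world efficiency.

```python
def peak_gflops(alus: int, clock_mhz: float, flops_per_alu_per_clock: int = 2) -> float:
    """Theoretical peak = ALUs x FLOPs per ALU per clock x clock.

    flops_per_alu_per_clock=2 assumes one multiply-add per ALU per cycle.
    """
    return alus * flops_per_alu_per_clock * clock_mhz / 1000.0


# 160 ALUs is the figure quoted in the thread; 550 MHz is an assumed clock.
print(peak_gflops(alus=160, clock_mhz=550))  # 176.0
```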

The GPU has a lower theoretical peak, but it's still faster in real-world applications. For starters, it's a true unified shader architecture, whereas RSX still had dedicated vertex and pixel shader units, and Xenos had some sort of proto-unified shaders where not every shader unit supports the same operations, making it pretty much impossible for developers to max it out and potentially causing stalls. Also, the Wii U architecture relies heavily on local stores/caches and DMA, so main memory bandwidth isn't really all that important.

Me neither. But having higher-res textures seems like something that can be explained by having a larger amount of memory available.

Whereabouts in the thread is this, out of curiosity? I've read a few pages but didn't really see anything.

Being able to "switch on" PC textures in NFS:MW would suggest otherwise, but I'm not really knowledgeable enough on the tech side to know.

Bayonetta 2 runs at a rock-solid 60FPS, while GOW3 fluctuates down to 30, probably even lower in certain scenes.

4x more memory, but only just over double for the game. The PS360 use 45-60MB for the OS iirc, while the Wii U reserves 1GB.

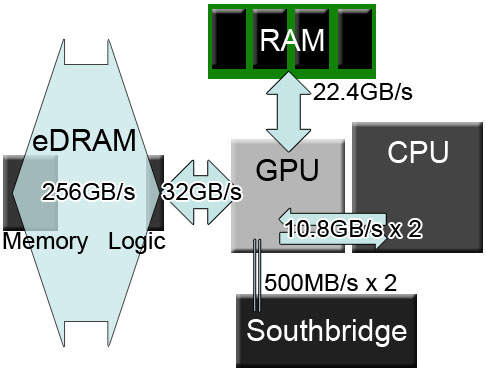

And "better structured" has to be defined. I'm absolutely sure it has a better memory controller, which allows lower latencies and higher efficiency than the PS360, but its RAM modules only have a total of 12.8GB/s bandwidth for a unified CPU/GPU pool, while the PS3 had two pools of ~20GB/s each and the 360 had a unified ~23GB/s pool plus 10MB of very fast eDRAM for the GPU alone. Sure, real-world efficiencies will bring them closer, but I don't think enough to close that gap.

The 32MB eDRAM will no doubt help, but it's like the XBO problem on crack: fast eDRAM, but limited speed to the rest of the memory compared to the others.
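The 12.8GB/s figure falls out of the simple peak-bandwidth formula (transfer rate x bus width in bytes). The sketch below assumes DDR3-1600 on a 64-bit bus for the Wii U's main RAM, which the thread doesn't state, and reuses the post's approximate ~20 and ~23GB/s numbers for the others. It ignores the eDRAM pools and real-world efficiency entirely.

```python
def peak_bandwidth_gbs(transfers_mt_s: float, bus_width_bits: int) -> float:
    """Theoretical peak bandwidth in GB/s: transfers/s x bytes per transfer."""
    return transfers_mt_s * (bus_width_bits / 8) / 1000.0


# Assumption: Wii U main RAM is DDR3-1600 on a 64-bit bus.
wiiu = peak_bandwidth_gbs(1600, 64)  # 12.8 GB/s

# Rough figures from the post above (main RAM only, eDRAM not counted).
others = {
    "PS3 (per pool)": 20.0,
    "360 (unified)": 23.0,
}

print(f"Wii U main RAM: {wiiu:.1f} GB/s")
for name, gbs in others.items():
    print(f"{name}: {gbs:.1f} GB/s = {gbs / wiiu:.2f}x Wii U")
```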

But those systems only use 32-42 MB for their OS and didn't reserve 1000MB for it like the Wii U does, so total memory is a useless metric since games don't benefit from it. It's only about 2x the amount for in-game memory usage.

It's 4x total memory. Not all of the PS360's memory is available to games either.

I agree with all that, yet there's still little basis for Clockwork5 to say it's more powerful.

Also, as I pointed out on the previous page, it makes no sense to say "less powerful" unless you specify which aspect of graphics processing output you're talking about. I'm sure you know from that thread that the general consensus was that, in this case, fewer shaders/flops didn't mean 'worse'. Those shaders are much more modern, and flop count alone is not an accurate yardstick when you're comparing GPUs from different generations.

Lol, the fuck? Does GT or Forza have to calculate what items 11 other computer players are going to get, in addition to directing them? The physics that go along with using said items? Shadows cast on individual pieces of Koopa shells as they break? Level geometry that isn't static? There are so many other factors that go into Mario Kart.

Perhaps you missed the video I posted on the previous page?

https://www.youtube.com/watch?v=OAHup4CXF9E&feature=youtu.be&t=22m30s

M°°nblade;116980757 said: But those systems only use 32-42 MB for their OS and didn't reserve 1000MB for it like the Wii U does, so total memory is a useless metric since games don't benefit from it. It's only about 2x the amount for in-game memory usage.

M°°nblade;116980757 said: I agree with all that, yet there's still little basis for Clockwork5 to say it's more powerful.

360 uses 32MB and PS3's OS footprint varies depending on what optional programs you choose to run with your game.

Ah, they shrank the OS a few times; I wasn't up to date. That's even more to my point, though. The Wii U does not give 4x more memory to games; it's just over double.
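The "4x total vs. just over 2x for games" arithmetic, as a quick sketch. It assumes 2GB total with 1GB reserved for the OS on the Wii U, and 512MB total on PS3/360 with the 32-42MB OS reservation mentioned above. PS3's real footprint varies, so treat the result as a range rather than an exact figure.

```python
# Memory figures in MB.
# Assumptions: Wii U has 2GB total with 1GB reserved for the OS;
# PS3/360 have 512MB total, with an OS reservation of roughly 32-42MB.
WIIU_TOTAL, WIIU_OS = 2048, 1024
PS360_TOTAL = 512


def game_ratio(ps360_os_mb: int) -> float:
    """How many times more memory a Wii U game gets than a PS360 game."""
    return (WIIU_TOTAL - WIIU_OS) / (PS360_TOTAL - ps360_os_mb)


print(f"total: {WIIU_TOTAL / PS360_TOTAL:.1f}x")
for os_mb in (32, 42):
    print(f"for games (PS360 OS = {os_mb}MB): {game_ratio(os_mb):.2f}x")
```

That works out to 4.0x in total, but only roughly 2.1-2.2x for games.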

You don't constantly max out the memory bus; you mostly need high bandwidth to deal with spikes. As I just wrote, Wii U relies on local stores and DMA. It's not just MEM1 (the eDRAM): CPU, GPU, IO processor, audio DSP and video encoder all have large dedicated local caches, which help reduce or even eliminate those spikes.

I wonder why the Wii U needs so much RAM just for the OS. I wonder if they will ever lower it and give it back for games?

Bayonetta 2 is much, much more impressive than God of War 3. I don't even get the "look at dem big dudes fighting" argument. Scale is fun, but it doesn't make your game technically superior in any way.

People still thinking that is actual gameplay and not an in-engine scene.

The discussion is not about games' technical superiority though. Otherwise GTA5 would win easily. How Rockstar managed to pull that off on both 360 AND PS3 is a miracle.

If you want to compare the consoles' power, you should look at the hardware, not at the games.

Didn't Aonuma say it WAS gameplay, just that they moved the camera around to give it a cinematic/cutscene look?

The lack of power is overrated to me because Nintendo games have unique art styles rather than going for photo realism. I think in the long run, it will pay off for Nintendo.

Aonuma confirmed it was all in-game running on a Wii U.

Yes, it was "in game" (i.e. in-engine/real-time on Wii U), but probably wasn't "gameplay" (i.e. someone actually playing part of the game). It might have been, but Aonuma's translated statement seems to have meant in-game rather than gameplay.

Probably? Lol. God of War 3 runs at an unlocked framerate, with the framerate rarely dipping below 30FPS and averaging somewhere around 40 to 50 FPS.

Of course, if you had actually played the game, you would know this. But please, continue making more uninformed statements about games you clearly haven't even played.

Wii U has more RAM and a much better GPU, but a much worse CPU.

The difference is hard to assess, it's more complicated than just "it's X times more powerful".

But it's certainly closer to the competition's past gen than their current gen.

Lol, I've never played it? Perhaps you want me to go into detail on the Hercules gloves? Or maybe the final boss fight? Or how about the Cerberus boss battle that takes place before repositioning the stone hands? I played through that button-mashing POS last year.

Also, here's a video, buddy:

https://www.youtube.com/watch?v=kYnydQ4t0aU

It dips to 35 FPS in this video alone, and below 30 at the beginning of the video.

Sorry, you sound like someone who hasn't played it. If you had played the game, you wouldn't be lumping it with other 30FPS games like TLoU and Uncharted.

So either you played it and you're making disingenuous claims about it being "30FPS", or you haven't played it and you're uninformed. Either way, you're a fool.

The CPU isn't much worse, it's just very different. It's far more efficient, and on some types of code it actually performs much better.