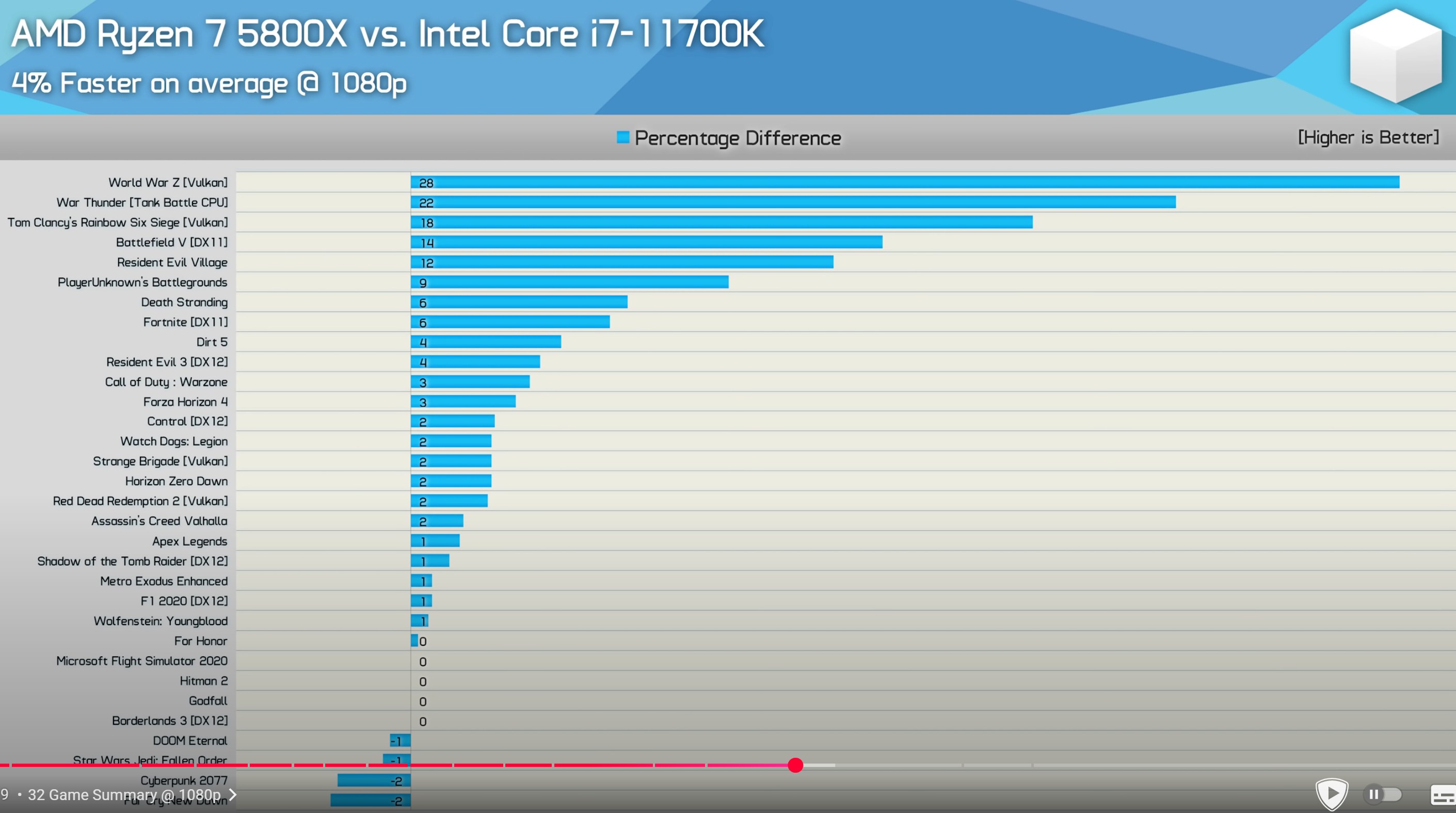

It's a shame how many people were fooled into buying Zen 2 CPUs like the 3600 by clueless YouTubers who look at all the wrong things when reviewing CPUs. I remember being in the market around that time, and everyone was shitting on Intel's 11th gen CPUs for being power hungry and running hot, while praising the Zen 2 CPUs for running cooler and drawing less power. The Zen 2 chips were actually more expensive too. I only ended up going with the Intel i7-11700K because it was $50 cheaper, and $100 cheaper with the mobo. And yet it's held up far better than these trashy Zen 2 CPUs.

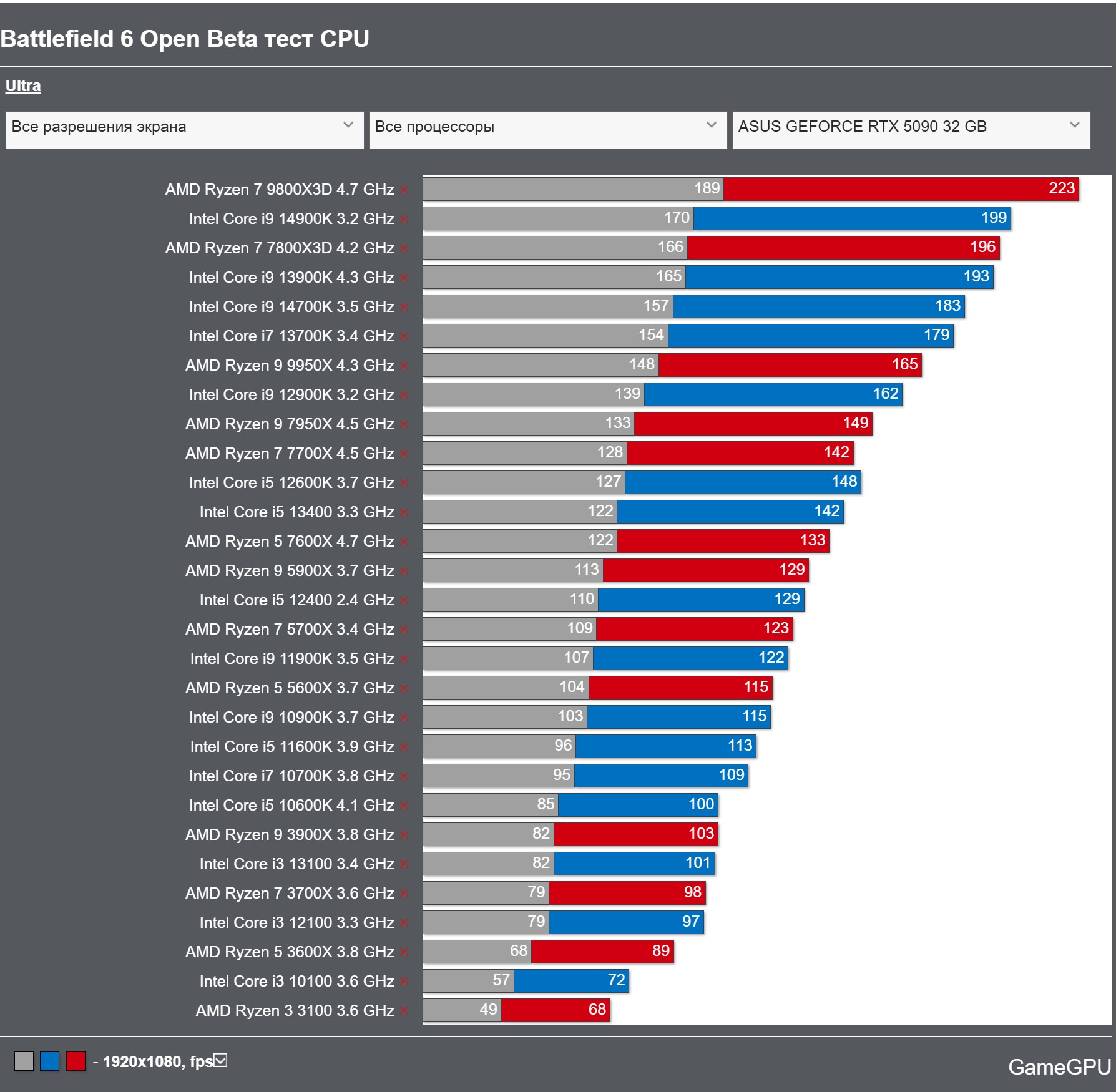

It started around Starfield, when people were struggling to hit 60 fps in cities while I'm like, nah, I'm good. Then path tracing dropped in Cyberpunk soon after, and people were shocked to see my 3080 run it at 60 fps.

I've had zero issues running CPU-heavy games like Gotham Knights, Starfield, and Space Marine 2. Even the ones that perform poorly in cities, like Jedi Survivor and Dragon's Dogma 2, ran at a perfect 60 fps with all RT effects enabled, both during gameplay and in the open world.

I mean, look at how the 8700K is outperforming 5000 series CPUs in Starfield. This was 2 years ago. And people got upset at Todd Howard for telling them to upgrade their CPUs.