Reizo Ryuu


Wait, which one is Navi 33? It sounds kinda weird if they're going with 8GB of VRAM for the 7700XT.

It might be to do with the availability of 3 GB/4 GB memory modules, as Navi 33 only has a 128-bit bus.
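For context on why the bus width caps the capacity: each GDDR6 chip has a 32-bit interface, so a 128-bit bus carries four chips, and total VRAM is just chip count times per-chip density. A minimal sketch, assuming one chip per 32-bit slice unless clamshell mode (two chips sharing each slice) is used. The 3 GB and 4 GB densities are the hypothetical modules from the reply, not confirmed parts for this card:

```python
def vram_gb(bus_width_bits: int, module_gb: int, clamshell: bool = False) -> int:
    """Total VRAM for a GDDR6-style bus, assuming a 32-bit interface per chip."""
    chips = bus_width_bits // 32      # one chip per 32-bit slice of the bus
    if clamshell:
        chips *= 2                    # clamshell: two chips (each running x16) share a slice
    return chips * module_gb


for density in (2, 3, 4):
    print(f"128-bit bus, {density} GB modules: {vram_gb(128, density)} GB")
```

With common 2 GB chips that gives the rumoured 8 GB; only denser modules or a clamshell layout would get past it.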

For all the talk of the 4090, I feel most people are forgetting that over 90% of card sales are the 50/60 midrange models.

Having the best is prestigious, but having more sales can bring in more revenue.

It was the lack of DLSS and ray tracing that held AMD back. If they can compete now, Nvidia will need to cut prices.

He literally says more than 2x improvement in RT in the linked tweet. Even AMD have stated that their CUs have "enhanced ray-tracing capabilities".

https://wccftech.com/amd-rdna-3-rad...er-2x-rt-performance-amazing-tbp-aib-testing/

2x RT performance compared to RDNA 2, when also having 2x more rasterization performance, is not good news. It suggests they'd be using the same type of RT accelerator or, if it's better, one held back by MCM latencies. This would put their RT performance at 3090 level. That's not something to hang your hat on.
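The underlying point is about ratios: if raster and RT frame rates both double, the share of performance you keep with RT switched on stays exactly where it was, so the relative RT gap doesn't close. A toy sketch with made-up frame rates (hypothetical numbers for illustration, not benchmarks):

```python
def rt_retention(raster_fps: float, rt_fps: float) -> float:
    """Fraction of the raster frame rate kept with ray tracing enabled."""
    return rt_fps / raster_fps


# Hypothetical old-generation card: 100 fps raster, 50 fps with RT on.
old = rt_retention(100.0, 50.0)
# "2x RT" alongside "2x raster": both numbers simply double.
new = rt_retention(200.0, 100.0)
print(old, new)  # 0.5 0.5
```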

But you know, rumours and all, it's pretty much all crap until it's benched by sites.

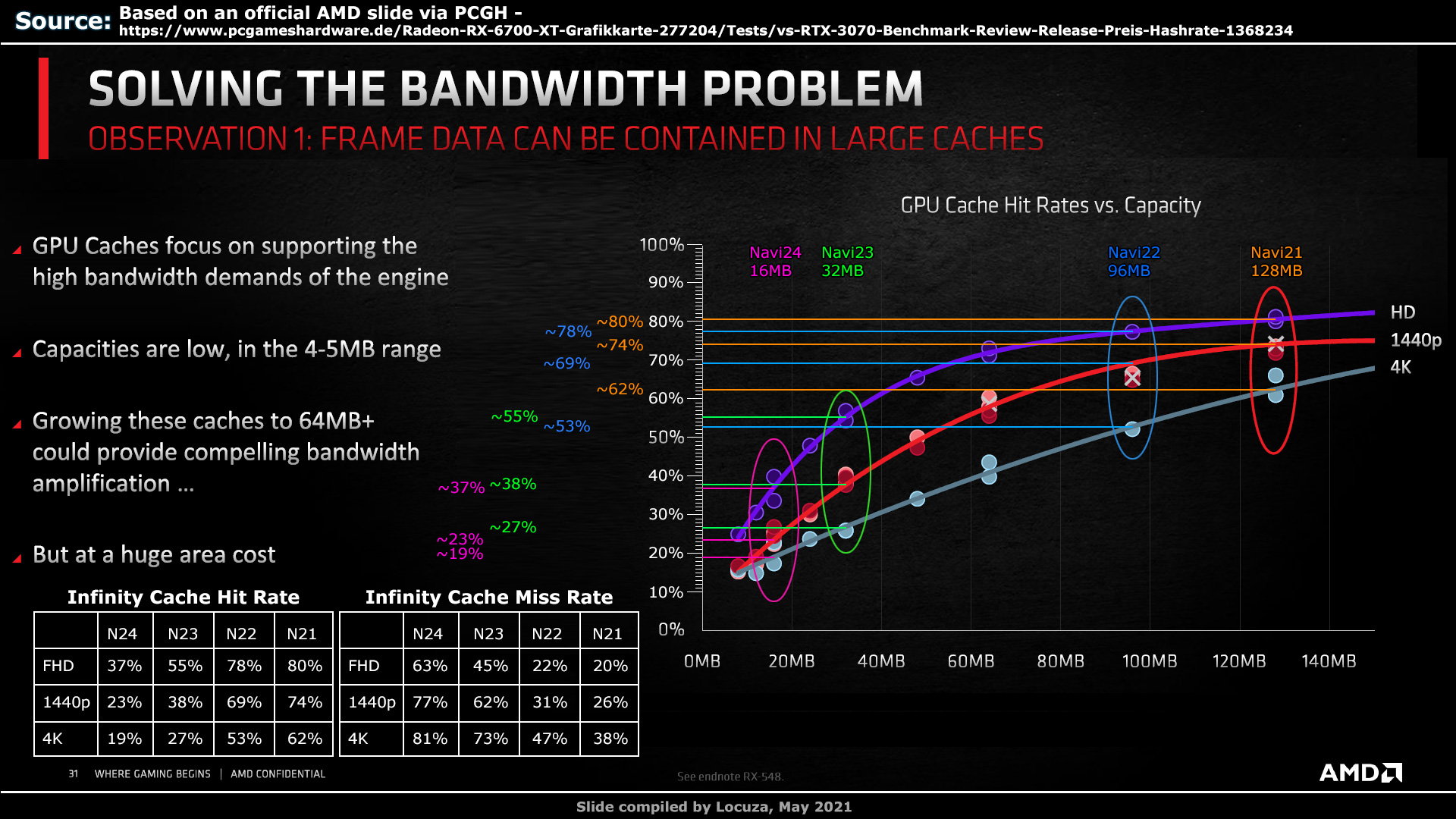

But each cache block was halved in size, meaning there are twice the cache blocks for the same cache amount and therefore twice the bandwidth per MB. So while there's 3/4 of the cache (96MB out of 128MB), it's working at 2 × 3/4 = 3/2 of the total bandwidth, so there's actually 50% higher bandwidth clock-for-clock when comparing Navi 21's 128MB to Navi 31's 96MB. Navi 21 had ~2TB/s on Infinity Cache, so Navi 31 should have ~3TB/s.

Actually RDNA3 is rumored to only have 96MB Infinity Cache, less than 128MB.
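Redoing that estimate with the post's own inputs (96MB vs 128MB, blocks half the size, ~2TB/s for Navi 21) and the assumption that bandwidth scales with the number of cache blocks: 96/128 is 3/4, doubled is 3/2, which lands at about 3TB/s. A small sketch using exact fractions so the ratio prints cleanly; all inputs are rumoured figures from the thread, not confirmed specs:

```python
from fractions import Fraction

# Inputs as stated in the thread (rumours at the time, not confirmed specs).
NAVI21_CACHE_MB = 128
NAVI31_CACHE_MB = 96
NAVI21_CACHE_BW_TBPS = 2.0   # "~2TB/s" Infinity Cache bandwidth for Navi 21
BLOCKS_PER_MB_SCALE = 2      # blocks halved in size -> twice as many blocks per MB


def relative_bandwidth(new_mb: int, old_mb: int, blocks_per_mb_scale: int) -> Fraction:
    """Clock-for-clock bandwidth ratio, assuming bandwidth scales with block count."""
    capacity_ratio = Fraction(new_mb, old_mb)   # 96/128 = 3/4
    return capacity_ratio * blocks_per_mb_scale  # (3/4) * 2 = 3/2


ratio = relative_bandwidth(NAVI31_CACHE_MB, NAVI21_CACHE_MB, BLOCKS_PER_MB_SCALE)
print(ratio, float(ratio) * NAVI21_CACHE_BW_TBPS)  # 3/2 3.0
```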

RGT did say they were using a new generation of Infinity Cache, which is apparently much better, hence the smaller size.

LOL. MLiD said last month that Intel will close their GPU division because it was not successful.

Both he and MLiD get a lot of shit, but having followed them for years, on graphics cards they've been spot on.

Me.

How many people here who are hoping for AMD to be competitive in performance and aggressive in price are actually planning on buying a Radeon GPU?

Or are you just hoping AMD brings the heat so that you can buy a GeForce GPU at a discount?

If it's the latter, I've got a 4080 12GB-shaped bridge to sell you.

Because of where I think they will be investing their money: I don't see brand-new tech like DLSS 3 here; I see it going to raw perf.

Why would you think that?

Ada and RDNA3 are on the same node.

AMD doesn't have the node advantage anymore.

Both are gonna be boosting to 3000MHz, so what could AMD possibly do to actually take the lead from Nvidia?

Beating the RTX 4090 even in pure raster will be a tall order.

Maybe in those AMD-favoring titles like Valhalla and Far Cry, but in general and on average, the 4090 isn't losing this battle.

In terms of consoles, I don't see them using RDNA 3; I see them using RDNA 4, especially if the refreshes don't come out till 2024.

I love the tension of these wars. There's always the undercurrent of lurking console fans eyeing up the AMD offering, even though it couldn't possibly fit into a console product (in terms of size, power draw or cost).

The 7900XT will be my first PC, so me, I guess.

FSR 3.0 could be better than DLSS 3.0 when used at lower framerates.

Nvidia has priced the 4080 so high, I think they fucked themselves on 4060 and 4070 pricing. Who the hell wants to pay $600 for a 60-series card??

Only thing is I don't see FSR 3.0 coming anywhere close to DLSS 3.

So effectively RDNA2 all over again?

We will see, but I'd be more surprised if the 7900XT loses in any rasterized game that isn't just straight Nvidia-favored.

So effectively RDNA2 all over again?

They might match and even beat Ada in some games no doubt.

But we know how big the GCD and MCDs are, we know the nodes being used.

We have a good guess of how high the thing can boost.

It has 3x 8-pins, so that's approx 450-500W TDP.
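Where that figure comes from: the PCIe specifications rate an 8-pin auxiliary connector at 150W and an x16 slot at 75W, so three 8-pins allow roughly 450W from the connectors and about 525W in total while staying in spec. A quick sketch of that budget (the board power AMD actually ships is not known from the thread):

```python
EIGHT_PIN_W = 150  # PCIe 8-pin auxiliary power connector rating
SLOT_W = 75        # PCIe x16 slot power rating


def board_power_ceiling(num_8pin: int, include_slot: bool = True) -> int:
    """In-spec power available to a card with the given number of 8-pin connectors."""
    return num_8pin * EIGHT_PIN_W + (SLOT_W if include_slot else 0)


print(board_power_ceiling(3, include_slot=False))  # 450
print(board_power_ceiling(3))                      # 525
print(board_power_ceiling(2))                      # 375
```

The same arithmetic gives 375W for a two 8-pin board, which happens to match the 375W figure that comes up later in the thread.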

At best, in pure raster, it will match a 4090 in synthetics.

RT performance hopefully on par with an xx70 Ampere card, maybe even a 3080 10G.

A few AMD-favoring games will give the edge to the 7900XT too... but beyond that, the 7900XT is once again going to be a borderline nonexistent card.

I'd rather look at the 7600/7700/7800 XT and hope they're priced competitively, cuz Nvidia's got no answer to those right now.

At the top end, forget about it.


20 to 40% faster than the 4090?

At 450W with 1.5x perf/watt, the uplift will be 2.25x the 6900XT, which is 20% faster than a 4090 in raster.

In the very best case, the perf-per-watt gain was said to be >50%, and the last time AMD said that, it was actually 64% going from the 5700XT to the 6900XT. So we can say that 450W with 1.64x perf per watt is 2.46x the 6900XT, and 30% faster than the 4090 in raster.

Personally, I think 375W is more likely, and it will probably roughly match the 4090 in raster, but let's wait 2 weeks and we will know.
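The numbers in that post follow from one formula: relative performance = (board power / 6900XT board power) × perf-per-watt gain, with the 6900XT at its 300W reference board power and performance assumed to scale linearly with power (a generous assumption). The thread never states the 4090's position directly; the 1.875x-a-6900XT figure below is backed out of the post's own "2.25x is 20% faster" claim, so treat it as an assumption:

```python
BASE_POWER_W = 300.0       # 6900XT reference total board power
RTX4090_VS_6900XT = 1.875  # assumption implied by the post: 2.25 / 1.2


def uplift(power_w: float, perf_per_watt_gain: float) -> float:
    """Performance relative to a 6900XT, assuming it scales linearly with power."""
    return (power_w / BASE_POWER_W) * perf_per_watt_gain


for power_w, ppw in [(450, 1.5), (450, 1.64), (375, 1.5)]:
    rel = uplift(power_w, ppw)
    vs_4090 = rel / RTX4090_VS_6900XT - 1.0
    print(f"{power_w}W @ {ppw}x perf/W: {rel:.2f}x 6900XT, {vs_4090:+.0%} vs 4090")
```

That reproduces the post's 20%, the "30%" case (about 31% unrounded), and the "roughly match" result at 375W.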

Are you drunk or just illiterate? I said 20-30% on the basis of 450W and a lower/upper perf-per-watt improvement. It is just maths. I also said I don't think 450W will happen, so around a 4090 at 375W feels more likely.

20 to 40% faster than the 4090?

Are you drunk?

This kind of mentality is the reason Nvidia wants $900 as the standard price for mid-range cards. The trash 4080 12GB is not even half as fast as the 4090 in some games, so effectively Nvidia is offering overpriced, underperforming trash, and Nvidia shills are OK with it.

I just did one, and no regrets. Might return my 6700XT for a 6800XT though. $300 difference though, so unsure.

If I build a PC, it's probably a 100% foregone conclusion that AMD will get my money for both CPU and GPU.

I will certainly buy an AMD GPU if the price and performance are better.

Gonna laugh if Nvidia is able to brute-force its way to outperforming AMD's MCM.

And AMD drivers are probably going to have more issues than usual, given it's their first MCM.

20-30% faster than a 4090 at 450W... PCB leaks have shown the 7900XT with 3x 8-pin, which translates to approx 450W.

I always thought this take was really cynical, but now I think there may be more than a kernel of truth to it. Like, I see so many mentions along the lines of "we need competition to bring prices down". Reading between the lines a bit, I must ask: do we need "competition" to get lower prices, or do we just need someone to release cards at better prices?

Me, I'm much more likely to buy Radeon, but I'm well invested because I use Linux exclusively at home. Ever since the drivers went open source, the experience has been fantastic. Some stuff comes late, like the 6000 series not working right for a few months (I never experienced it firsthand; I buy mid-low range and it was worked out by then), but once things are sorted I practically forget that drivers even exist. O_O I have not installed a GPU driver even once in the last 4 years. The reason I use Linux in the first place is the low maintenance, so I'm all about it. Nvidia actually works well on Linux, though. So I'm not completely locked in; I just had a great experience with the drivers rolled in and want to stick with that.

It's not cynical, it's very much a reality.

The PCMR has been using AMD, and ATi before them, as a lever to keep Nvidia prices in check.

Radeon GPUs have been better value for money for literally decades, and at every turn they've been spurned in favour of Nvidia. The driver problems of early-to-mid-2000s ATi have been memed so badly it's turned into a self-fulfilling prophecy.

When RV770 and Cypress launched around the time of Tesla and Fermi, ATi had their highest market share, which was barely 50%. But they had parity in market share at a significantly lower ASP, so they never really made any profit. No profit means software support takes longer.

It's not any of the consumer's business to care about that, of course, but a lot of the problems were overstated by the press and in forums like the original GAF and Beyond3D. There are even reports of actual paid shills arranged by Nvidia in partnership with AEG to push the agenda. All of this hamstrung competition by starving Radeon of the volume needed to compete.

Right now people are suddenly acting shocked and disturbed at Nvidia's monopolistic tendencies as if they hadn't been doing this since their inception as a company. And now they're longing for AMD to come to the rescue yet again.

"Please compete so we can have sane prices in the GPU market".

Well I'm sorry, but corporations exist to make money. AMD are not your friend. Why should they undercut Nvidia by any significant margin? Why should they leave money on the table? It has never worked for them before. 9700 Pro was an excellent card, didn't win ATi any meaningful market share. HD4870 was half the price of the GTX 280. Hardly anyone cared. HD5870 absolutely mopped the floor with Fermi. Nobody cared. The R9 290X offered GTX Titan performance for half the price - nobody bought it. The Fury X offered 980 Ti performance at a steep discount. Nobody bought one. The RX480 and RX580 were, are and remain to this day a better product than the GTX 1060 (did we all forget that the 1060 3GB was also a cut down version of the 6GB in more than just VRAM lol?) - the 1060 still absolutely murdered the RX480 in terms of marketshare and mindshare.

At all turns people applauded Radeon for "Being good value", but then turned around and bought Nvidia anyway.

The market is in this state because of a lack of competition. That has been fuelled, by and large, by PCMR elitism and a hilariously bizarre loyalty to a company that has openly shown contempt for its fans for decades.

Apologies for the sanctimonious rant, but I have a sinking feeling AMD won't play ball any more. We had a chance to support them to keep Nvidia in check many times before, but that time has passed. AMD wants their profits and ASPs up. They have no incentive to engage in the rat race to the bottom, because it never achieved anything for them. So be prepared to just get shafted. PC gaming will become more and more niche as shit just keeps getting jacked up in price.

Now I want to make a poll here about whether people would buy a 6800XT or choose a 3070ti for just a liiiiitle more.

O_O Kinda dread to see the results.

Why bother making a poll? Look at the Steam Hardware Survey.

6800 XT: 0.16%

3070 Ti: 1.07%

Whatever NeoGAF thinks, this is the reality. Ampere dominated sales.
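Putting the two survey shares quoted above side by side as a ratio. These are shares of surveyed Steam systems (installed base among Steam users), so they are only a proxy for sales:

```python
# Steam Hardware Survey shares as quoted in the post (percent of surveyed systems).
shares = {"RX 6800 XT": 0.16, "RTX 3070 Ti": 1.07}

ratio = shares["RTX 3070 Ti"] / shares["RX 6800 XT"]
print(f"3070 Ti : 6800 XT is roughly {ratio:.1f} : 1")
```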

Nvidia dominates the mid-range as well. It's not even a competition: AMD is like a theoretical alternative to Nvidia, but Nvidia keeps eating away at the market share. They have high-end AND mid-range sales dominance for discrete GPUs.

At this point, except for a minority of loud AMD fans, people are cheering for AMD to be competitive so that Nvidia drops prices and they can buy Nvidia cards. Almost nothing AMD can do will get them out of this market share hole, sadly for them. They know it; they didn't produce a whole lot of RDNA 2 cards, as even the 3090 outsold their entire range of cards. It was the very definition of a paper launch, even though AMD marketing hyped the crowd into believing it would not be the case and that they would surpass Nvidia in availability. It was a bunch of bullshit. Same for their MSRP: you had to be incredibly lucky to score one on amd.com, and their AIBs had disgusting prices compared to Ampere.

Discrete GPUs are no longer (and never were) AMD's bread and butter. To compete with Nvidia in production output, they would have to eat into the silicon they allocate to higher-margin products like console APUs and CPUs.

So at this point, why would AMD eat away at their already small margins if they know it'll only trigger a price war with a company that has way deeper pockets? They're not running a charity here.

Edit: you're also maybe a tad too hopeful that they'll not only match or exceed in rasterization, but also match in ray tracing and ML? You do know that an MCM solution will already require a lot of work to split the workload and be invisible to the API, while also trying to somehow keep latencies low? All these ray tracing/ML solutions require super fast small transfers of data, which an MCM crossbar will slow down as the data needs to hop from node to node. The more chiplets, the slower it becomes. CPU tasks are typically unaffected by chiplets, while GPUs take big hits. Even Apple's insane 2.5TB/s link (vs AMD MI200's 800GB/s) didn't help there, as the CPU performance was effectively doubled while the GPU only gained ~50%. That's with 2 chiplets. More than that and yikes.
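One way to quantify that last comparison is scaling efficiency: the speedup divided by the number of dies, where 1.0 means perfectly linear scaling. A minimal sketch using only the figures as described in the post (2 dies, CPU roughly 2x, GPU roughly 1.5x); these are the poster's characterisations, not measurements made here:

```python
def scaling_efficiency(speedup: float, num_dies: int) -> float:
    """Speedup achieved per die, relative to perfect linear scaling (1.0 = perfect)."""
    return speedup / num_dies


# Figures as described in the post for a two-die package.
print(scaling_efficiency(2.0, 2))  # CPU "effectively doubled" -> 1.0
print(scaling_efficiency(1.5, 2))  # GPU "~+50%" -> 0.75
```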

Nvidia released the 1080 Ti and 3080 at $699 due to worrying about what AMD had coming. We've now gone from a $700 3080 to a $1200 4080. And Nvidia came to their senses on releasing a $900 4070, maybe 4070 Ti. I believe Nvidia had to release at higher prices to not have their partners get crushed on their 3000 series inventory. Rumor was that before the 4000 series launch reveal, there was over a billion in inventory of 3000 series cards.

AMD doesn't seem to have as much stock to sell through and could sell cards at reasonable prices. They don't even need to match or exceed the Nvidia models: 90% of the performance for 20-30% less. Maybe even a much lower power draw? DLSS and ray tracing were winners for Nvidia, and made spending extra worth it in many people's eyes. We don't know what AMD has in store, but we're heading into a major market downturn and people will be tightening their belts. People are going nuts over the 4090, but some people are always crazy and blow money just to have the best. Chip manufacturing will have more free capacity as long as China doesn't try moving in on Taiwan. People as a whole will be less likely to drop as much money on a new GPU as they were during the two-year drought. The used market will also affect pricing: you can get a used RTX 3080 for under $500 now, or a used RTX 3070 for just over $300.
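What "90% of the performance for 20-30% less" works out to in perf per dollar, taking the Nvidia card as the baseline. Relative performance and the discount are the only inputs; the actual prices cancel out:

```python
def perf_per_dollar_gain(relative_perf: float, price_discount: float) -> float:
    """Perf-per-dollar advantage over the baseline card (0.125 means 12.5% better)."""
    return relative_perf / (1.0 - price_discount) - 1.0


for discount in (0.20, 0.30):
    gain = perf_per_dollar_gain(0.90, discount)
    print(f"90% perf at {discount:.0%} less: {gain:+.1%} perf per dollar")
```

That comes to roughly 12.5% to 28.6% more performance per dollar.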

I'm just hoping that they can continue to be competitive with Nvidia and bring solid prices. If the new cards are just absolutely insane, then I'll purchase the 7000 series. I am happy with my 6800 XT purchase thus far, and that was my first AMD GPU purchase. I wish everyone would hope AMD and Intel do well in the GPU space. That will mean more options, better tech and better prices for us... the consumers. Anything else is clown behavior, IMO.


The board at least has a 450W rating; likely the power slider will be unlocked up to 450W regardless of what the shipped state is.

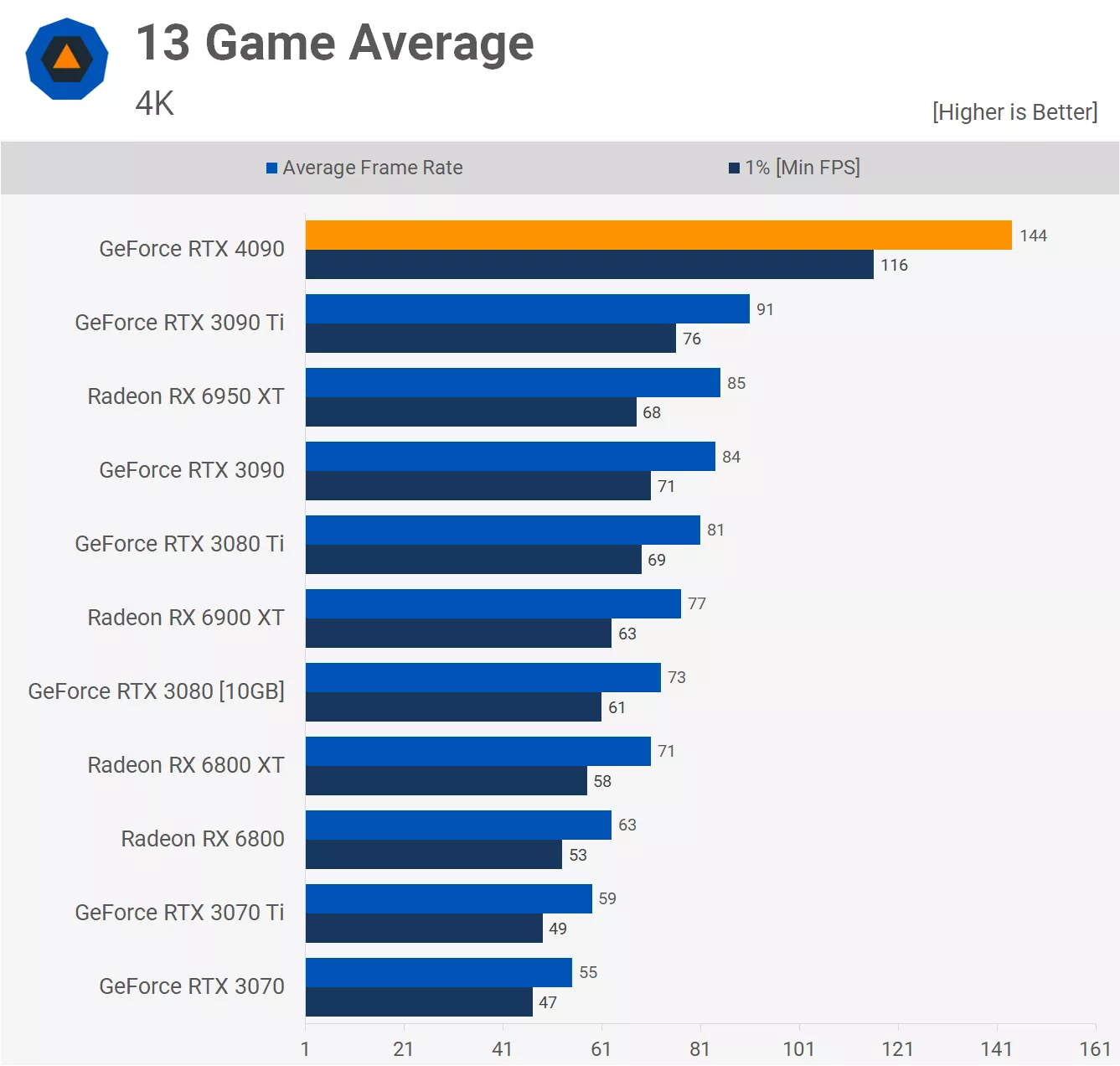

That would put the 7900XT at like a 180fps 13-game average at 4K.

Yeah I want whatever you are on.


Based on what I've watched (I might have missed something), MLiD has consistently said that RDNA3 will be good but that it will not beat Lovelace.

...but people like MLiD dream of AMD performing some magic that will destroy all their competition (blah blah Infinity Cache). And there are people who fell for that fan marketing.

Do people really think that if AMD is aggressive with prices, Nvidia is gonna lower their price the day after?

How would they justify that with PR talk??

And has something similar ever even happened? AMD having aggressive prices and Nvidia swallowing their pride and lowering their price the day/week after?

AMD don't have as much Radeon stock for a few reasons:

1) Nobody buys them: their market share has been hard-capped at like 20% for the better part of a decade.

2) They have a limited number of wafers to build out their entire tech portfolio, including Radeon, Instinct, CPU, Xilinx, APUs and consoles. Out of those things, CPUs are the most profitable. Simple as.

As I've said before, they have no incentive to play the game to try and win market share, because the market has proven time and time again that it doesn't care. Heck, we've got tech journalists unironically trying to shill Intel Arc, when AMD and Radeon have been present and competing on a shoestring budget forever.

If they do decide to try, because of Nvidia's unique vulnerability this generation, I will be pleasantly surprised. But this is the last chance. If nobody buys in, the market is fucked forever. Intel might be relevant, but I doubt they'll do much better than Radeon.