If they beat a 4080 they'll just price it just as badly. Or at best, offer a $50 discount.

Any chance of a 7800 beating a 4080 16GB in rasterization??

Weren't people saying that without DLSS 3 that card kinda stinks on its own?

Imagine if AMD can surpass their performance at a $200-300 lower price.

I'm pretty sure that many people don't give 2 fucks about RTX parity...


YeulEmeralda

Linux User

There are driver issues if you play old games and use emulators (and yes, I bought a PC to play Deadly Premonition).

GymWolf

Member

Slightly beating in raster but with inferior RTX and DLSS tech tho.

If they beat a 4080 they'll just price it just as badly. Or at best, offer a $50 discount.

I and many others don't care about RTX, but many people who build high-end machines do.

Sure, if they beat a 4080 16GB by A LOT then we can discuss, but it's probably not gonna happen.

Also, Nvidia has that tech to remaster old games with RTX; it looks horrible to me, but people here were jerking off over it.


Crayon

Member

Any chance of a 7800 beating a 4080 16GB in rasterization??

Weren't people saying that without DLSS 3 that card kinda stinks on its own?

Imagine if AMD can surpass their performance at a $200-300 lower price.

I'm pretty sure that many people don't give 2 fucks about RTX parity...

They were in the ballpark last time so I guess it's a coin toss. Keep in mind the swing from games that favor one or the other can be way bigger than the average difference.

GymWolf

Member

Too bad AMD's biggest wins are in Ubisoft games, of all games.

They were in the ballpark last time so I guess it's a coin toss. Keep in mind the swing from games that favor one or the other can be way bigger than the average difference.

Joking, but not by that much

I honestly feel like there isn't a single game where the performance penalty from RTX is actually worth it.

Slightly beating in raster but with inferior RTX and DLSS tech tho.

I and many others don't care about RTX, but many people who build high-end machines do.

Sure, if they beat a 4080 16GB by A LOT then we can discuss, but it's probably not gonna happen.

Also, Nvidia has that tech to remaster old games with RTX; it looks horrible to me, but people here were jerking off over it.

When you're playing the game, there is no major visual difference.

Crayon

Member

I honestly feel like there isn't a single game where the performance penalty from RTX is actually worth it.

When you're playing the game, there is no major visual difference.

I like to think I have a good eye, and I appreciate the effects, but tbh I would fail the Pepsi challenge in most scenes in most games.

RT reflections are the effect that is clearly superior to the tricks we have now. But rasterized light and shadow tricks are pretty fuckin good. The performance hit is absolutely savage when the game already has all that painstaking work put into the non-rt effects.

The real age of ray tracing begins when more games come out that REQUIRE RT. That's when developers can get their light and shadow done way more quickly and we see the true benefit (easier development) instead of the marketed benefit (betar grafiks).

BattleScar

Member

You make the mistake of assuming AMD cares about gaining market share.

Slightly beating in raster but with inferior RTX and DLSS tech tho.

I and many others don't care about RTX, but many people who build high-end machines do.

Sure, if they beat a 4080 16GB by A LOT then we can discuss, but it's probably not gonna happen.

Also, Nvidia has that tech to remaster old games with RTX; it looks horrible to me, but people here were jerking off over it.

Whatever they price it at, it will sell.

GymWolf

Member

Shut your globally space reflected mouth, you rasterized filth.

I honestly feel like there isn't a single game where the performance penalty from RTX is actually worth it.

When you're playing the game, there is no major visual difference.

But yeah, fuck rtx


Crayon

Member

You make the mistake of assuming AMD cares about gaining market share.

Whatever they price it at, it will sell.

That's a big question mark for me. AMD and partners might be happier keeping margins up instead of moving more and more product with less money made on each one.

Another way to look at it is that maybe they don't want to be the cheap option in the long term, but back to an equally good option.

SolidQ

Member

https://www.anandtech.com/show/2679 - This is relevant to RDNA3

01011001

Banned

I honestly feel like there isn't a single game where the performance penalty from RTX is actually worth it.

When you're playing the game, there is no major visual difference.

that's when DLSS comes in

Control with RT on looks drastically better than with it off. same with Spider-Man.

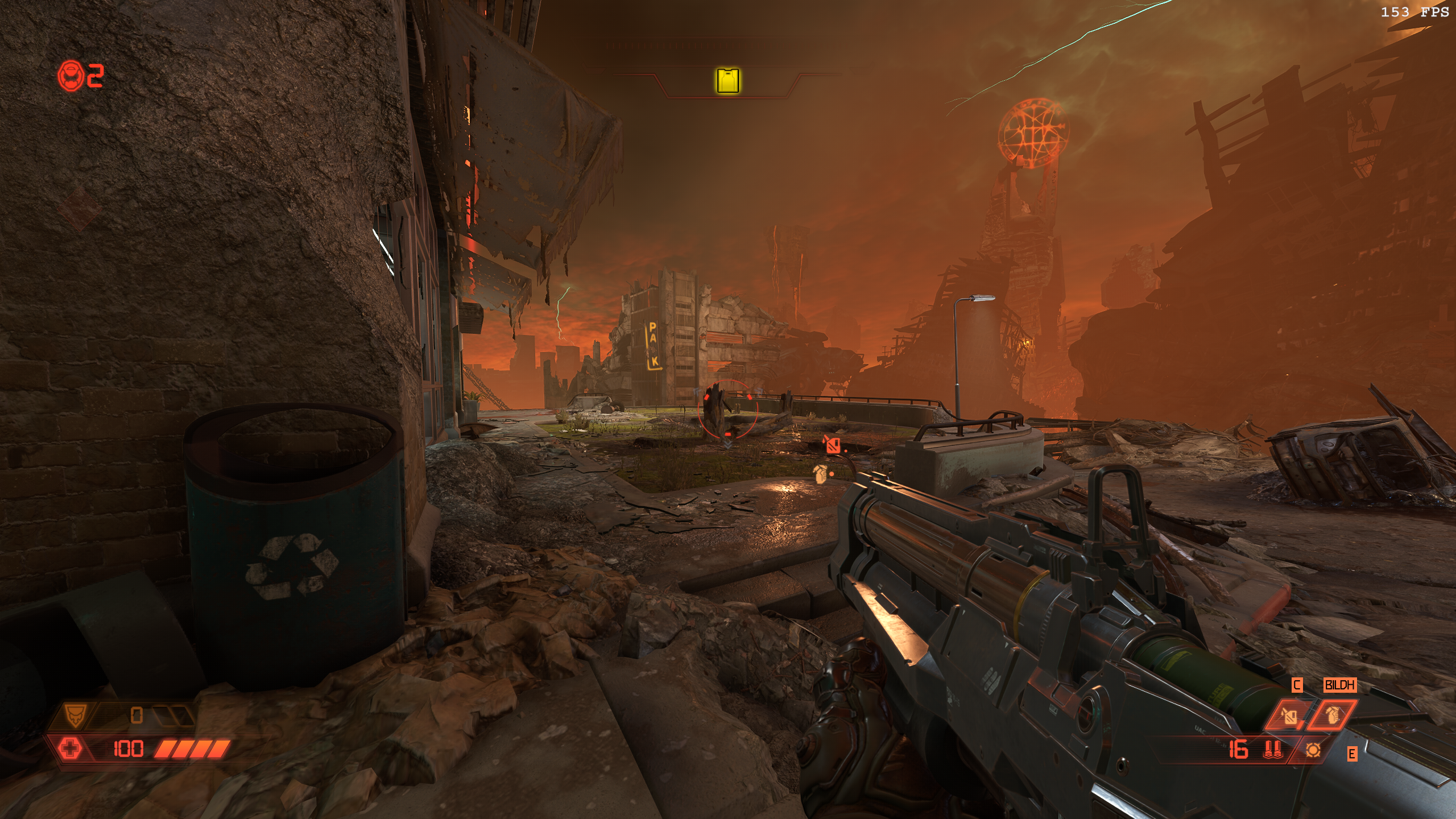

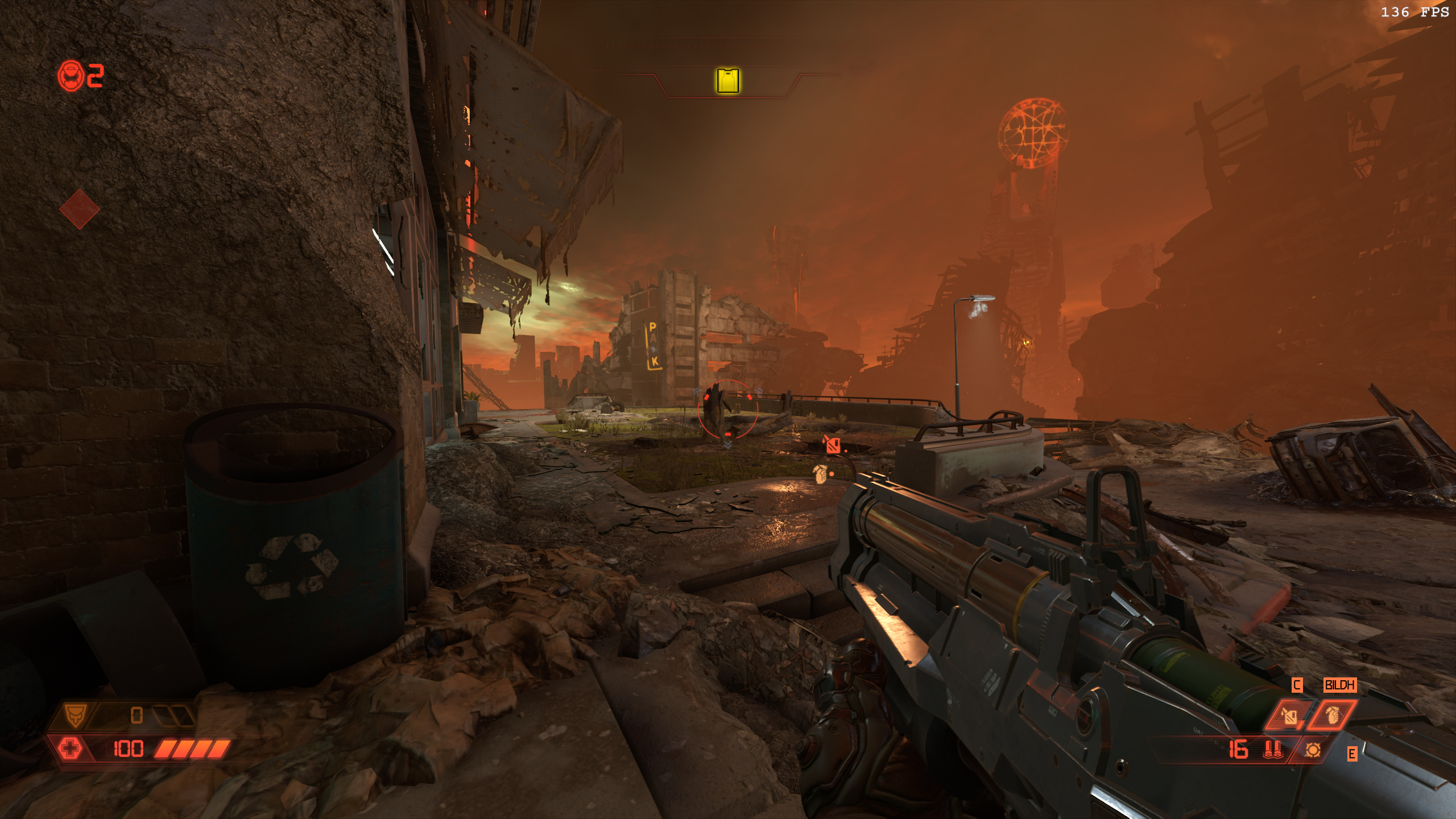

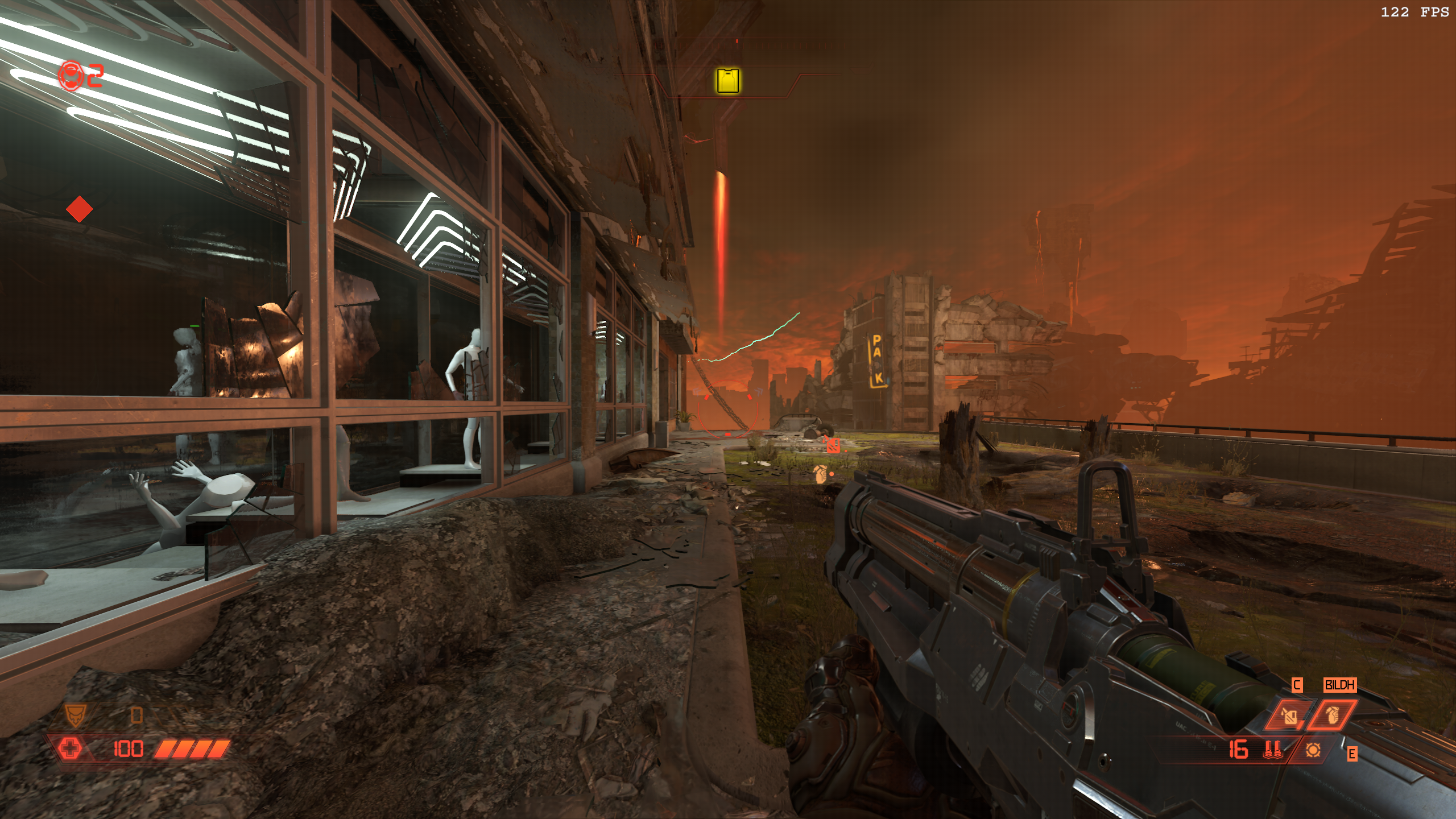

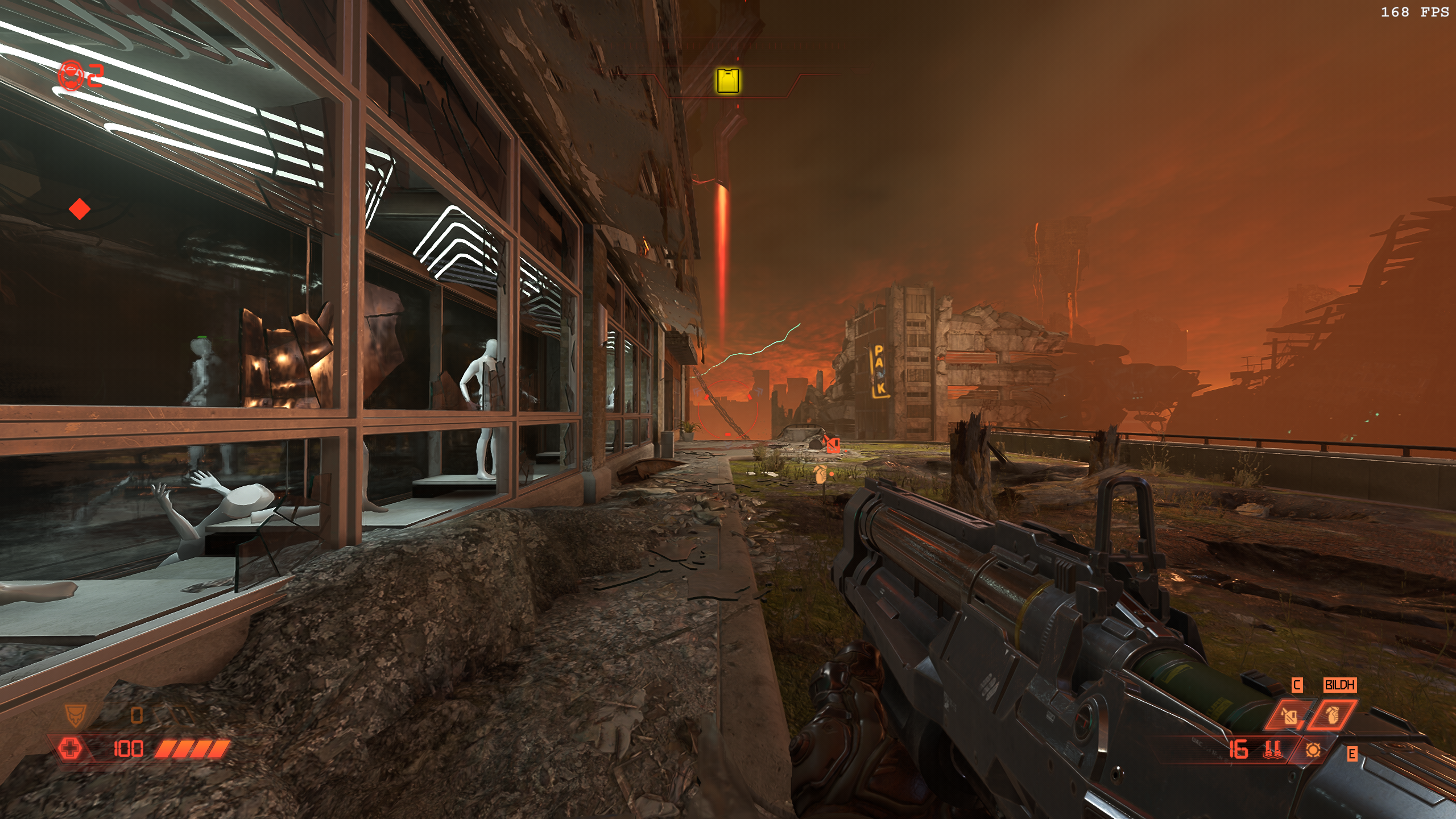

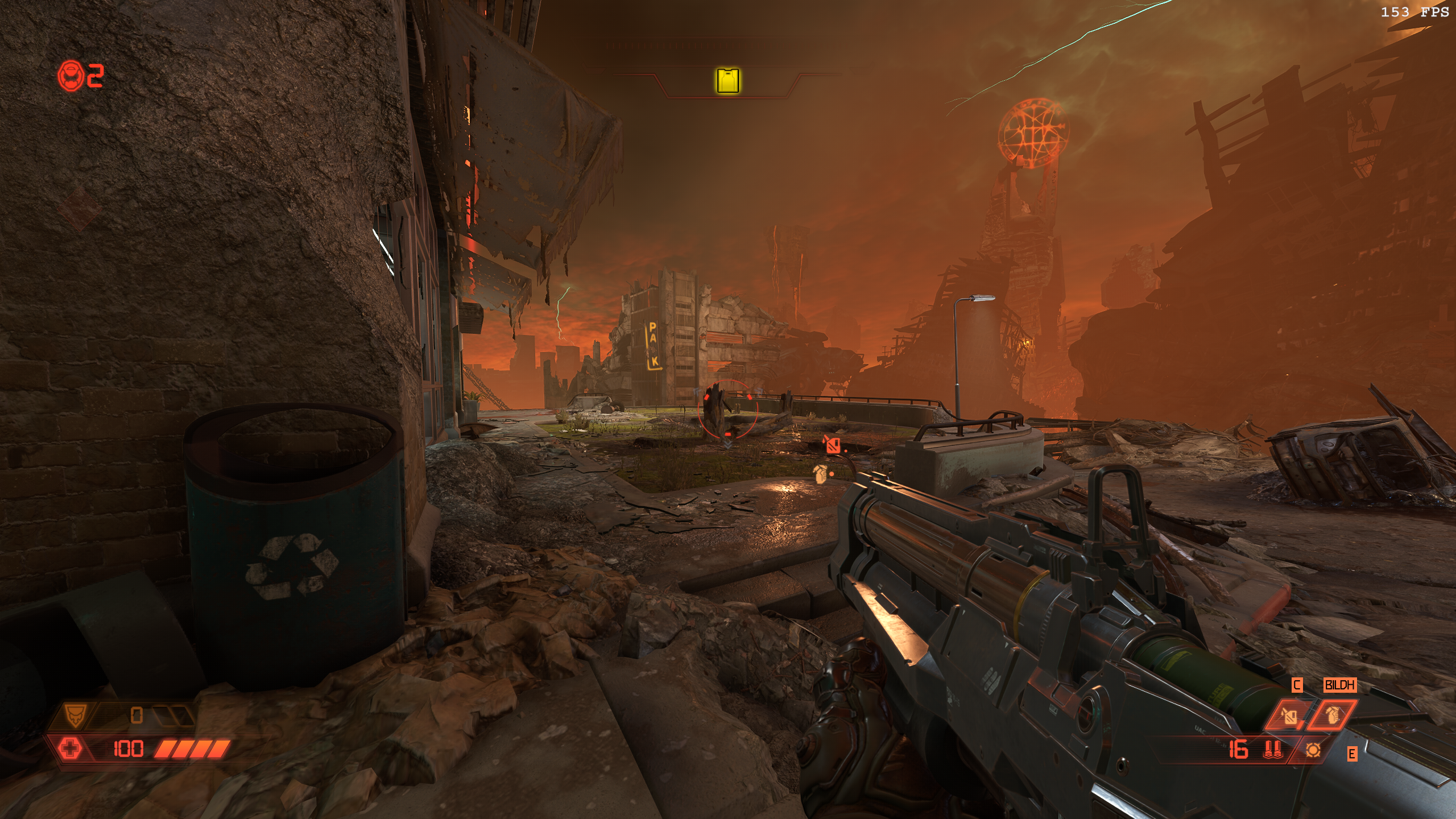

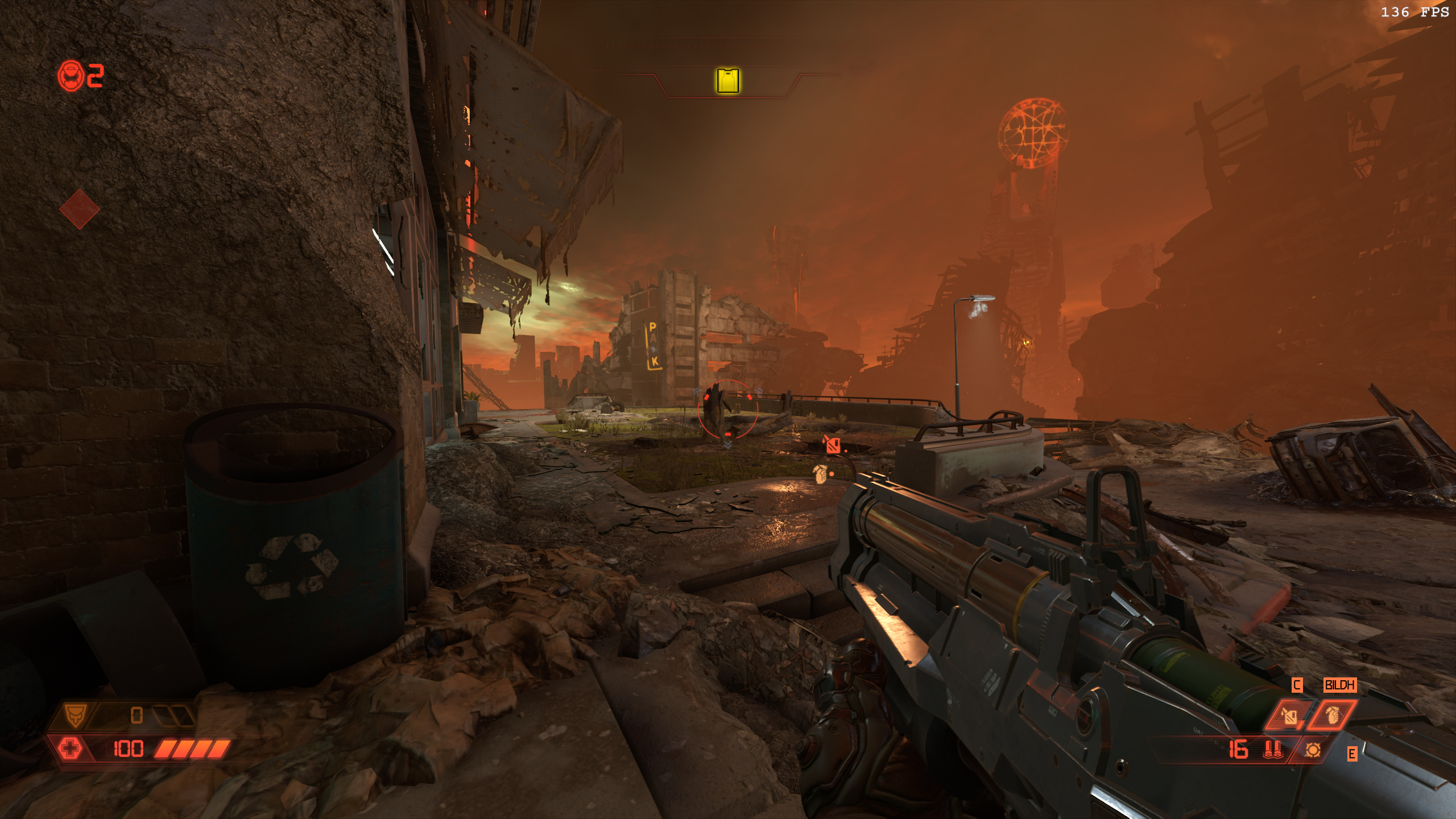

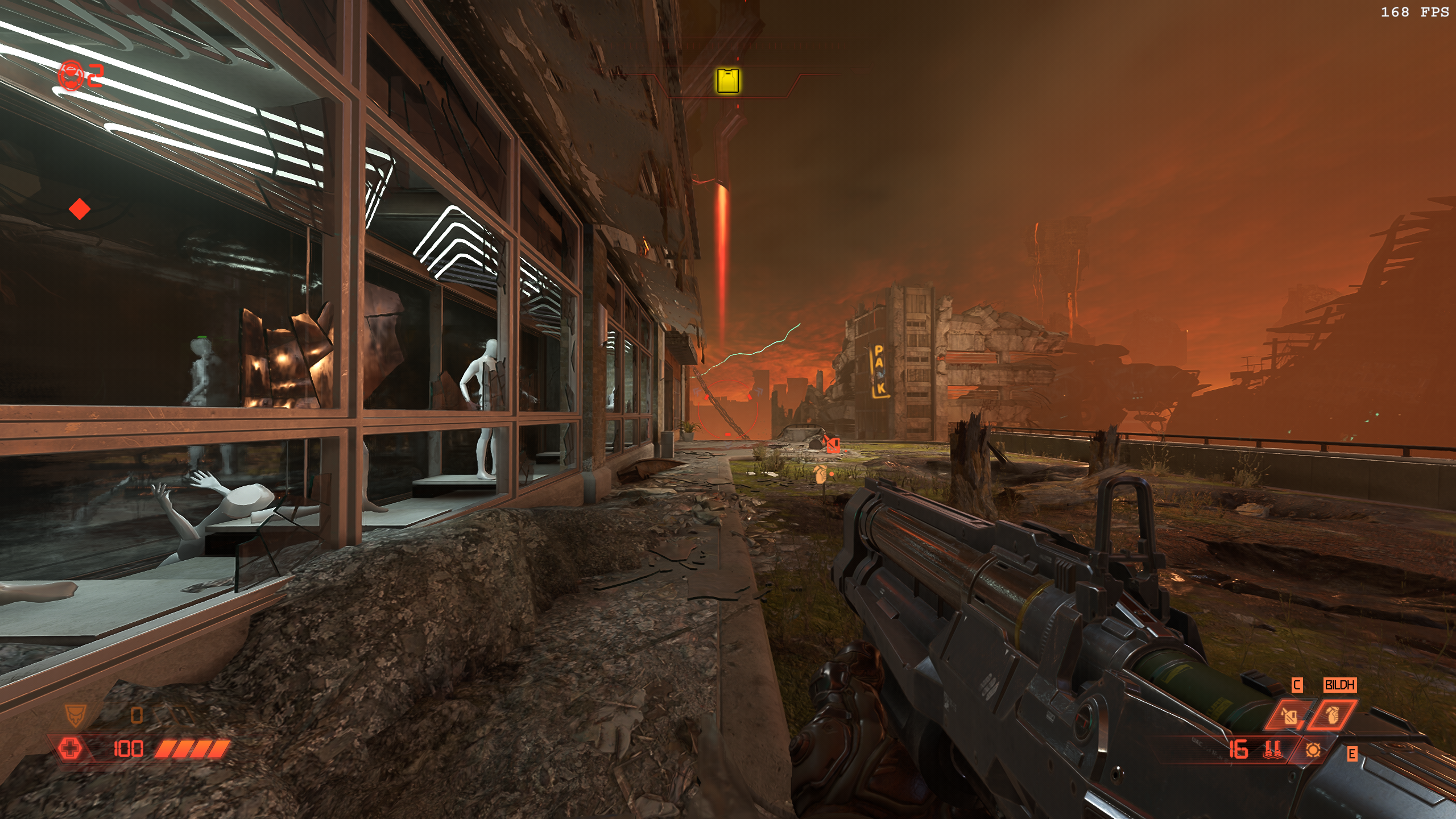

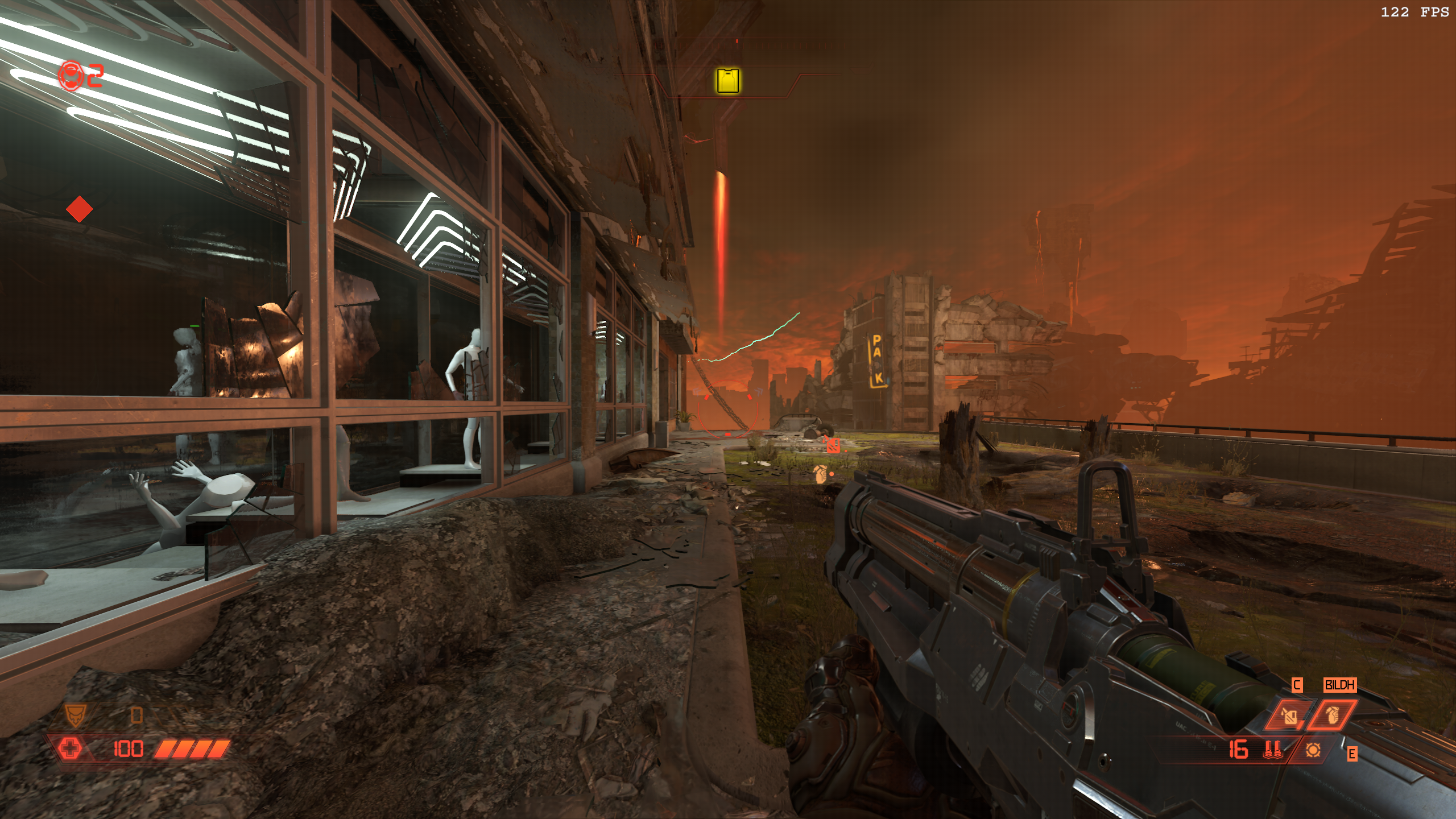

some levels in Doom Eternal look amazing with RT (especially that underwater station in the DLC) and Doom Eternal runs fast as hell even with RT on. iD Tech 7 is floppin its massive dick on the table as usual

TheRedRiders

Member

Anyone know how reliable Kepler is?

Control is probably the best example of RT making a substantial visual difference.

that's when DLSS comes in

small image quality penalty for a huge settings boost and good performance.

Control with RT on looks drastically better than with it off. same with Spider-Man.

some levels in Doom Eternal look amazing with RT (especially that underwater station in the DLC) and Doom Eternal runs fast as hell even with RT on. iD Tech 7 is floppin its massive dick on the table as usual

I could barely tell the difference in Doom Eternal other than some slightly shiny and reflective surfaces.


01011001

Banned

Control is probably the best example of RT making a substantial visual difference.

I could barely tell the difference in Doom Eternal other than some slightly shiny and reflective surfaces.

I mean in Doom Eternal basically every reflective surface gets RT reflections, and in some levels that adds a lot. It's just cool when you run through a level and see enemies behind you thanks to a big reflective window n stuff.

Crayon

Member

Anyone know how reliable Kepler is?

...must... resist.... buying... hype.....

MUST RESIST

BY THE POWER OF CHRIST I REBUKE THEE, HYPE

Anyone know how reliable Kepler is?

If this is right then we've got strong competition for the 4090.

01011001

Banned

Control is probably the best example of RT making a substantial visual difference.

I could barely tell the difference in Doom Eternal other than some slightly shiny and reflective surfaces.

I mean in Doom Eternal basically every reflective surface gets RT reflections, and in some levels that adds a lot. It's just cool when you run through a level and see enemies behind you thanks to a big reflective window n stuff.

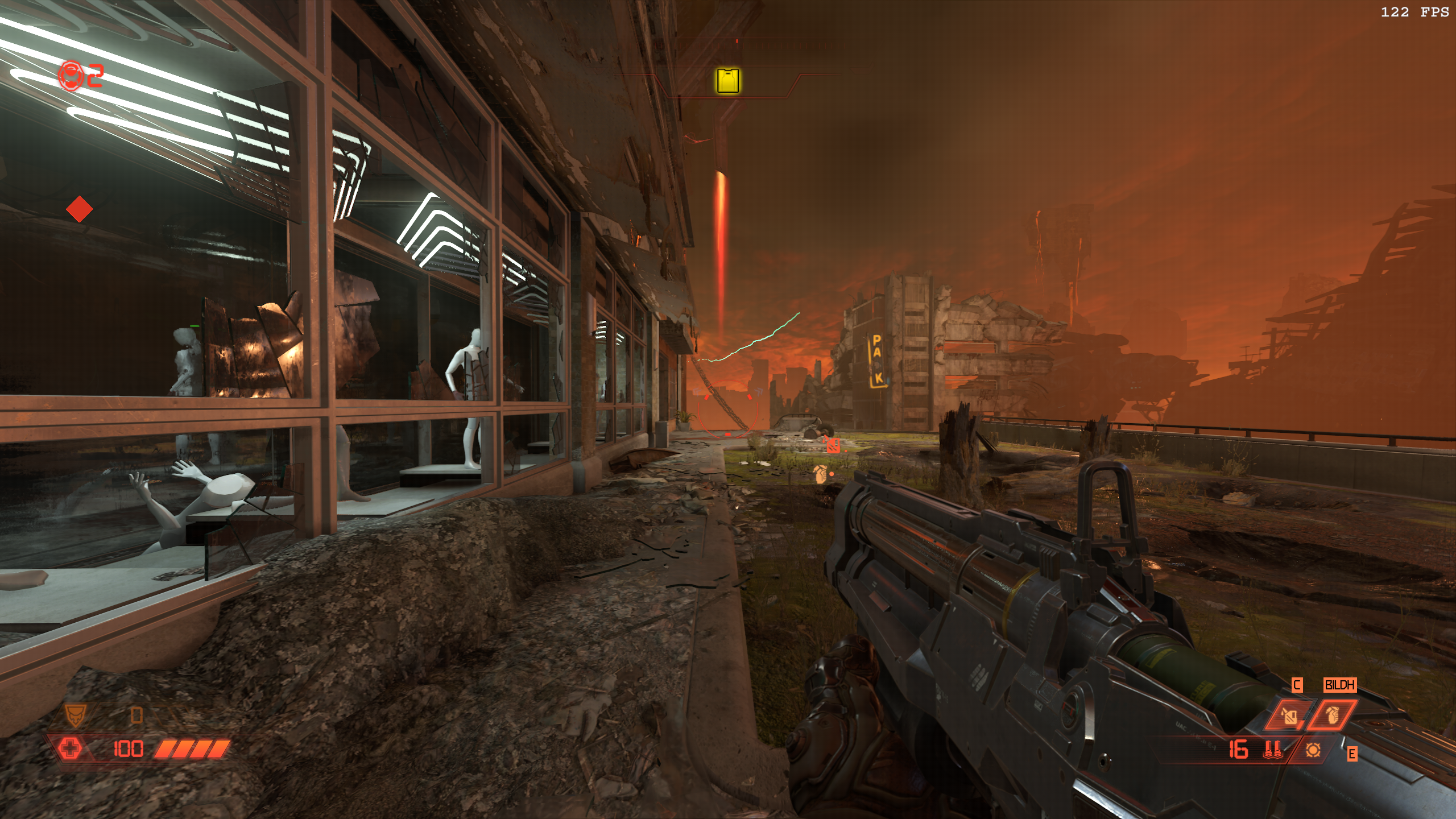

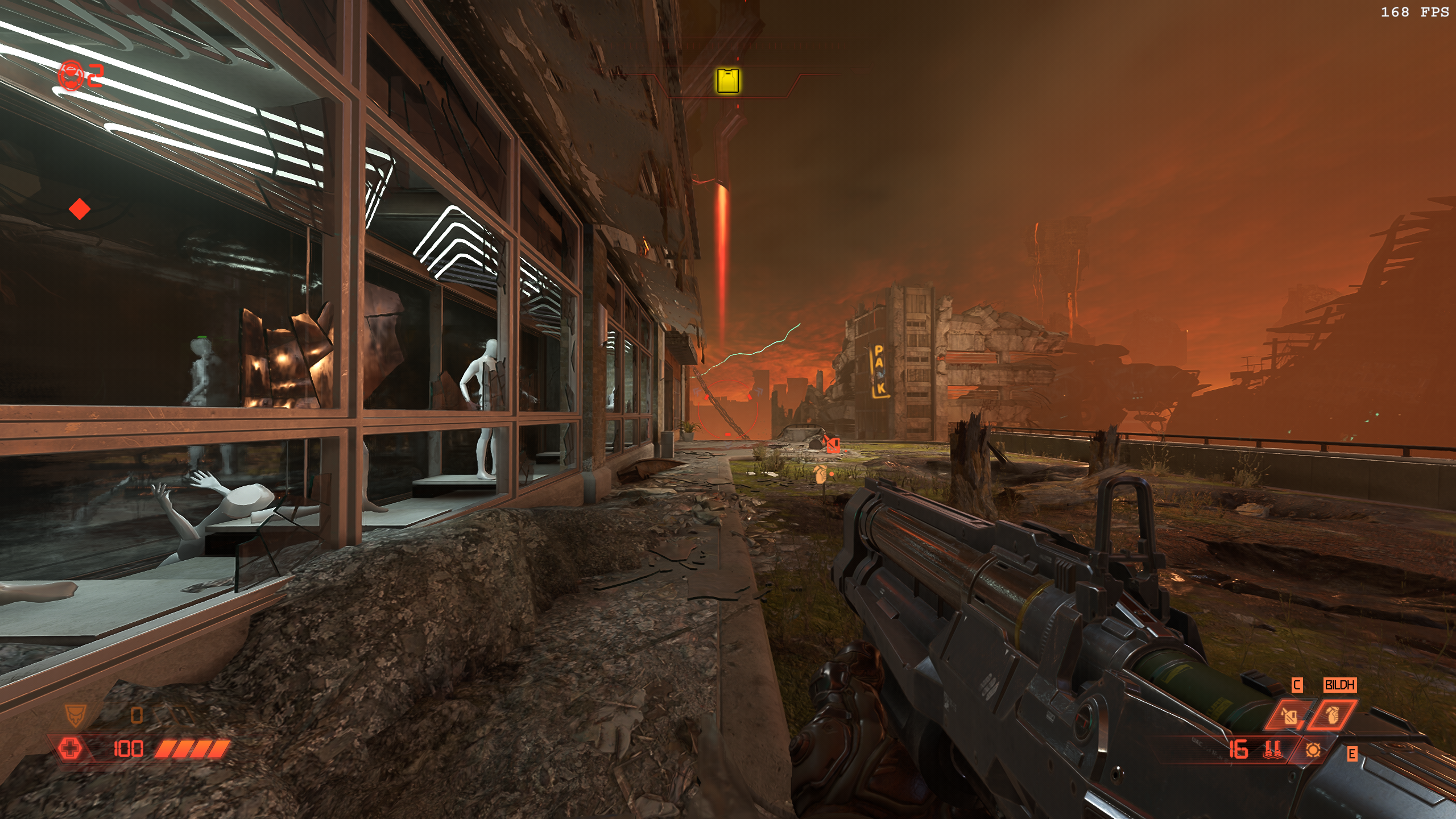

like, you can't really argue against that on a 1440p screen running an RTX 3060 Ti and reaching basically my max monitor refresh reliably at a mix of Ultra and Nightmare settings with RT on and DLSS on Quality (which looks really nice in this game as well btw thanks to the sharpness slider, which all games should have)
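As a rough illustration of what "DLSS on Quality" at 1440p actually renders internally, here is a minimal Python sketch. The per-axis scale factors are the commonly cited DLSS 2 preset defaults (Quality ≈ 2/3, Balanced ≈ 0.58, Performance 0.5, Ultra Performance ≈ 1/3); treat them as assumptions, since individual games can use different values. `render_resolution` is a hypothetical helper, not an Nvidia API.

```python
# Commonly cited per-axis render-scale factors for DLSS 2 presets.
# Assumption: these are typical defaults; a game may override them.
DLSS_SCALE = {
    "Quality": 2 / 3,
    "Balanced": 0.58,
    "Performance": 0.5,
    "Ultra Performance": 1 / 3,
}


def render_resolution(width: int, height: int, preset: str) -> tuple[int, int]:
    """Approximate internal resolution that DLSS upscales to (width, height)."""
    scale = DLSS_SCALE[preset]
    return round(width * scale), round(height * scale)


if __name__ == "__main__":
    native_pixels = 2560 * 1440
    for preset in DLSS_SCALE:
        w, h = render_resolution(2560, 1440, preset)
        share = (w * h) / native_pixels
        print(f"{preset:>17}: {w}x{h} ({share:.0%} of native pixels)")
```

Under these assumptions, Quality at 2560x1440 renders about 1707x960 and Performance renders 1280x720, i.e. roughly 44% and 25% of the native pixel count, which is where the performance headroom for RT comes from.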

so RT for me in Doom Eternal is an absolute no-brainer if you have a somewhat decent PC, and even on lower end Nvidia cards thanks to DLSS you can try it at least

and now behold, the massive dick of iD Tech 7

slapping you across the face with its glory!

(the filmgrain effect looks really bad in screenshots lol)

EDIT: and just for comparison's sake, and to show why you should use DLSS over TAA in many, many games:

DLSS [Quality] Stationary

TAA Stationary

DLSS [Quality] Strafing Right

TAA Strafing Right

and as a bonus:

DLSS [Performance] Strafing Right


Silver Wattle

Member

Anyone know how reliable Kepler is?

Nothing new here, and calling the 4080 "lowly" is apt, it's an overpriced pos compared to the 4090.

FingerBang

Member

Anyone know how reliable Kepler is?

Don't know how reliable he is but I think he has a point. N31 seems to be for the rumored 7900 XT and 7950 XT, and we should expect the former to be quite a bit faster than a 4080 16GB if the latter can trade blows with a 4090.

...but I really doubt those are going to be cheap. If they priced the 7900 XT at around $1000 and the 7950 XT at $1200 it'd still be great though

Crayon

Member

The launch price is one thing but there's also price drops and sales. Here's a 6600 for $219:

PowerColor Fighter AMD Radeon RX 6600 Graphics Card with 8GB GDDR6 Memory https://a.co/d/5AhQyj3

That was $329 a year ago when it came out. 329 is a lot for that imo, but 229 is a good deal.

A 7600 that will outrun a PS5 might be regularly available under $250 two years from now with a price drop and the occasional sale. Maybe a 7700 XT for a little over $400?

Two years seems a ways out but that 6600 is still in play right now. It's current and will be in its class for another year.

If someone can sell it for that low after launching at $329, and the MCD can help avoid a price hike, 7000 cards will be a good buy a year after release even if the launch price is disappointing. Just like 6000 cards.


GymWolf

Member

umm... didn't the 6000 series kinda fail in terms of sales? So failing again is not completely off the table.

You make the mistake of assuming AMD cares about gaining market share.

Whatever they price it at, it will sell.

But i could be wrong.

SantaC

Member

It was sold out for a year due to the pandemic.

umm... didn't the 6000 series kinda fail in terms of sales? So failing again is not completely off the table.

But i could be wrong.

winjer

Member

umm... didn't the 6000 series kinda fail in terms of sales? So failing again is not completely off the table.

But i could be wrong.

Not really. It's complicated.

The 6000 series was released during the crypto boom, while COVID restrictions were increasing demand for chips.

AMD had allocated as much as it could of TSMC's N7 node. But it had a lot of products to make: Zen 3, PS5, Series S/X and GPUs.

In the case of consoles, there were contracts, so AMD had to make these chips to sell to Sony and MS.

On the case of Zen3 CPUs, these were the best CPUs on the market until Alder Lake, and they were selling very well.

But the thing is, CPUs are much more profitable than GPUs. At the time, a 5800X had an MSRP similar to a 6700 XT's.

But the 5800X's 7nm compute die uses only 4.15 billion transistors. The chip for the 6700 XT uses 17.2 billion. So AMD can get a lot more CPU chips than GPU chips out of a wafer.

Also, the CPU has fewer parts. It has a package, cooler, pins, IOD, IHS, and not much more. But a GPU will have a bigger cooler, GDDR6, voltage regulation, packaging, etc.

So AMD made the logical decision and made more CPUs, and less GPUs.
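The wafer argument can be put in rough numbers with the common gross dies-per-wafer approximation. The die areas below (about 81 mm² for one Zen 3 compute die and about 335 mm² for the 6700 XT's Navi 22) are approximate public figures and are assumptions of this sketch. It also ignores defect yield, scribe lines and edge exclusion, all of which penalize the bigger die even further.

```python
import math


def dies_per_wafer(die_area_mm2: float, wafer_diameter_mm: float = 300.0) -> int:
    """Gross dies-per-wafer estimate: wafer area / die area, minus an edge-loss term.

    Ignores defects, scribe lines and edge exclusion, so real numbers are lower.
    """
    radius = wafer_diameter_mm / 2
    gross = math.pi * radius**2 / die_area_mm2
    edge_loss = math.pi * wafer_diameter_mm / math.sqrt(2 * die_area_mm2)
    return int(gross - edge_loss)


# Approximate die areas (assumption: rounded public figures).
ZEN3_CCD_MM2 = 80.7   # one 8-core Zen 3 compute die, as in the 5800X
NAVI22_MM2 = 335.0    # the 6700 XT GPU die

if __name__ == "__main__":
    cpu = dies_per_wafer(ZEN3_CCD_MM2)
    gpu = dies_per_wafer(NAVI22_MM2)
    print(f"Zen 3 CCD: ~{cpu} candidate dies per 300 mm wafer")
    print(f"Navi 22:   ~{gpu} candidate dies per 300 mm wafer")
    print(f"ratio:     ~{cpu / gpu:.1f}x")
```

With these inputs the estimate comes out at roughly 800 CPU dies versus roughly 175 GPU dies per wafer, so each N7 wafer spent on GPUs yields far fewer sellable products at a similar MSRP.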

To make things worse, the few GPUs that AMD made were bought mostly by miners. So even fewer ended up in gamers' hands.

Buggy Loop

Gold Member

So it was effectively a paper launch, with so few units that they even lost market share. Nvidia cards were also scooped up for crypto, yet Nvidia still managed to gain a good portion of the Steam Hardware Survey.

Now show us pics with RTX off.

like, you can't really argue against that on a 1440p screen running an RTX 3060 Ti and reaching basically my max monitor refresh reliably at a mix of Ultra and Nightmare settings with RT on and DLSS on Quality (which looks really nice in this game as well btw thanks to the sharpness slider, which all games should have)

so RT for me in Doom Eternal is an absolute no-brainer if you have a somewhat decent PC, and even on lower end Nvidia cards thanks to DLSS you can try it at least

and now behold, the massive dick of iD Tech 7

slapping you across the face with its glory!

(the filmgrain effect looks really bad in screenshots lol)

EDIT: and just for comparison's sake, and to show why you should use DLSS over TAA in many, many games:

DLSS [Quality] Stationary

TAA Stationary

DLSS [Quality] Strafing Right

TAA Strafing Right

and as a bonus:

DLSS [Performance] Strafing Right

In most games there is barely any visual difference.

The Doom Eternal engine runs fantastic though even though it's a visually simplistic game.

KungFucius

King Snowflake

That is good because I like fighting for high-end cards at launch and ending up with my 4th choice every couple of years until I finally get something that is so good for my age that I don't care anymore.

Someone needs to check the benchmarks for A Plague Tale: Requiem... the first "big" game released after the 4090.

People thinking that a 4090 is gonna be enough for everything at 4k120 without dlss are in for a REALLY rude awakening...

Like i say every single time, heavy (or in this case fucking broken) games are still gonna be released in the future, and requiem doesn't even have rtx...

Their pricing is due to their excess inventory of 3000 series cards. When that inventory is gone, I would expect the 12GB card and lower to show up and take their place at more reasonable prices, but still more expensive than last gen. If fucking Lego can raise prices by 25% for fucking plastic, Nvidia can do the same for their GPUs. Plus they can always slap Ti on the thing and

Nvidia has priced the 4080 so high, I think they fucked themselves on 4060 and 4070 pricing. Who the hell wants to pay $600 for a 60 series card??

Only thing is I don't see FSR 3.0 coming anywhere close to DLSS 3.

They have to do the last bit consistently to gain on the high end, and with that the mindshare on the lower end. If someone buys a 4090 and AMD's 7900 XT is close to it across the board for $600 less, it will get their attention. If they do it again with the 8900 XT they might buy it, if again with the 9900 XT, etc. But if they do it once and Nvidia comes out with a monster 5090 that they cannot touch with the 8900 XT, then they are back to where they are now.

The only way for AMD to gain market share is by aggressively undercutting Nvidia or bringing out products that are significantly superior to Nvidia's.

We were jerking off because it can also be used to enhance VR porn.

Also, Nvidia has that tech to remaster old games with RTX; it looks horrible to me, but people here were jerking off over it.

SportsFan581

Member

So it was effectively a paper launch, with so few units that they even lost market share. Nvidia cards were also scooped up for crypto, yet Nvidia still managed to gain a good portion of the Steam Hardware Survey.

Not to mention Nvidia was even more overpriced at retail and is still above MSRP for the lower part of the stack. It really surprised me that the 6600 didn't fare better, since it never got the price markup that a lot of other cards did and was frequently in stock for $350-$375 throughout the shortage.

AncientOrigin

Member

Yes, that is the right thought. There are only a few days left until everything will be known. And maybe you don't even need the most expensive card; the mid-tier cards are probably stronger than a 3090, so you will have lots of options.

Got a 3090 the other day and sent it back for a refund. Wanna see what AMD shows. If the flagship is around $1200 then I'll get that, as it should easily outperform a 3090 for a few hundred more.

Yea exactly. I hate it as I can't even use my PC without a GPU, but I'd rather get the best for my money by simply waiting a bit longer.

Yes, that is the right thought. There are only a few days left until everything will be known. And maybe you don't even need the most expensive card; the mid-tier cards are probably stronger than a 3090, so you will have lots of options.

BattleScar

Member

Failing sales-wise does not mean not being profitable.

umm... didn't the 6000 series kinda fail in terms of sales? So failing again is not completely off the table.

But i could be wrong.

Low-volume, High-margin is a perfectly viable business model. Certainly better than being low-volume, low-margin that they've been forced to deal with for the last decade.

As I've said a million times before. They've tried to offer unequivocally better value products than Nvidia and people have consistently performed all sorts of mental gymnastics to buy Nvidia instead.

They have no incentive to enter the rat race. And they certainly won't do it because PC gamers are sad that they're getting shafted by the market conditions they themselves propagated.

I honestly feel like there isn't a single game where the performance penalty from RTX is actually worth it.

When you're playing the game, there is no major visual difference.

RTX did wonders for Minecraft.

01011001

Banned

Now show us pics with RTX off.

In most games there is barely any visual difference.

The Doom Eternal engine runs fantastic though even though it's a visually simplistic game.

of course with RTX off I'll get way better performance but everything above 144 fps on a 144hz screen is wasted potential for a game like this.

I'm not over here needing framerates of 2x my refresh to optimise input lag like CS:GO players do
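The "anything above 144 fps on a 144 Hz screen is wasted" point is easy to put in numbers. This hypothetical sketch only converts frame rates into per-frame time budgets and compares them with a fixed refresh rate; it assumes no VRR and ignores render queues and the rest of the input-latency chain.

```python
def frame_time_ms(fps: float) -> float:
    """Milliseconds available to render one frame at a given frame rate."""
    return 1000.0 / fps


def frames_per_refresh(render_fps: float, refresh_hz: float) -> float:
    """Average number of newly rendered frames per panel refresh.

    Values above 1.0 mean the GPU renders more frames than the panel can show
    in full; the surplus mostly shortens latency rather than adding visible frames.
    """
    return render_fps / refresh_hz


if __name__ == "__main__":
    refresh = 144
    for fps in (60, 144, 288):
        print(
            f"{fps:>3} fps: {frame_time_ms(fps):5.2f} ms per frame, "
            f"{frames_per_refresh(fps, refresh):.2f} frames per {refresh} Hz refresh"
        )
```

Going from 144 to 288 fps cuts the per-frame render time from about 6.9 ms to about 3.5 ms, which is the kind of latency margin competitive CS:GO players chase, but it adds no extra visible frames on a 144 Hz panel.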

and of course you see a difference... screen space reflections are disgusting, and with RT Doom Eternal gets rid of that shit, so one of the worst eyesores of modern graphics gone is absolutely worth it for me to not run at 2x my monitor refresh


SportsFan581

Member

Failing sales wise does not mean not being profitable.

Low-volume, High-margin is a perfectly viable business model. Certainly better than being low-volume, low-margin that they've been forced to deal with for the last decade.

As I've said a million times before. They've tried to offer unequivocally better value products than Nvidia and people have consistently performed all sorts of mental gymnastics to buy Nvidia instead.

They have no incentive to enter the rat race. And they certainly won't do it because PC gamers are sad that they're getting shafted by the market conditions they themselves propagated.

Nothing says they can't do a bit of both though. They would not necessarily need to position themselves aggressively at every price point, but trying to design something that gives them the best bang for the buck possible on the lower-end could certainly help. Would have to still be good business for them and the AIBs. They need another one of those cards that is just an absolute no brainer for the average gamer, they've done it in the past.


YeulEmeralda

Linux User

People going with Nvidia is not mental gymnastics.

Fact is Nvidia has been the market leader for 20 years and I invite you to look objectively into why this is. Their R&D can't be matched. Every time AMD becomes a threat Nvidia pulls something out of their hat and AMD is left reacting to it. See DLSS or ray tracing.


I care and I don't build high-end machines. Would love to use RT but the performance hit is savage.

I and many others don't care about RTX, but many people who build high-end machines do.

Ray tracing is literally the reason why I'd consider an upgrade in the first place. Without RT – and with real heavy hitters with DLSS – my 2070 Super is still quite capable. So non-RT performance isn't really that interesting to me and if DLSS is getting still more frequently implemented than FSR2 (which it seems as of now) there's little AMD can do to convince me except for 100% more performance than my 2070S for 500 EUR tops, because the lack of DLSS and performance thereof has to be mitigated by a huge performance surplus.

GymWolf

Member

My 2070 Super is pretty good with decently optimized titles, but it sweats a lot with broken/overly heavy games like Gotham Knights and Plague Tale 2 (both with DLSS).

I care and I don't build high-end machines. Would love to use RT but the performance hit is savage.

Ray tracing is literally the reason why I'd consider an upgrade in the first place. Without RT – and with real heavy hitters with DLSS – my 2070 Super is still quite capable. So non-RT performance isn't really that interesting to me and if DLSS is getting still more frequently implemented than FSR2 (which it seems as of now) there's little AMD can do to convince me except for 100% more performance than my 2070S for 500 EUR tops, because the lack of DLSS and performance thereof has to be mitigated by a huge performance surplus.

I had to settle for 45 fps to avoid dropping the details and resolution too far in GK, and it still dips now and then.

And i'm lucky because like i said i give 2 fucks and a half about rtx.


SportsFan581

Member

People going with Nvidia is not mental gymnastics.

Fact is Nvidia has been the market leader for 20 years and I invite you to look objectively into why this is. Their R&D can't be matched. Every time AMD becomes a threat Nvidia pulls something out of their hat and AMD is left reacting to it. See DLSS or ray tracing.

You can't deny that. They always support their cards extremely well also. Game specific patches get released often, sometimes specifically for very old cards.

Their excellence has put them where they are. Would still like to see some meaningful competition though, especially with Nvidia's new take that it's not worth it to make console-like GPUs. I'm still deciding whether to upgrade to an XSX or upgrade the old gaming PC; a new budget king would be much appreciated.


People going with Nvidia is not mental gymnastics.

Fact is Nvidia has been the market leader for 20 years and I invite you to look objectively into why this is. Their R&D can't be matched. Every time AMD becomes a threat Nvidia pulls something out of their hat and AMD is left reacting to it. See DLSS or ray tracing.

This is BS.

20 years ago the 9700pro launched with proper fp32 and the ability to run AA and AF at playable framerates. When FX did finally release it could only handle FP24 so when playing stuff like Source engine games (Half-Life 2 being a big one at the time) if it didn't run in compatibility mode with worse image quality it ran like absolute dog shit. Then the 9800XT released and cemented ATi. X800 vs 6800 was a close run thing. X1800 vs 7800 was a slight NV advantage but the refreshes of X1900 and X1950 vs the 7900 was ATi all the way.

After that the 2000 and 3000 series were significantly worse than the 8000 and 9000 series NV parts and this is where NV really started to gain market share and snowball. AMD had a great chance with the 4000 to actually take the crown but instead went for a 'small die' strategy which meant RV770 was tiny but was able to be around 90% of GTX 280 performance. They should have made an RV790 with 1,200 shaders and just taken the performance crown. 5000 series was also faster than the 480 and 5000 series brought with it angle independent AF which was a huge IQ improvement.

6000 series was a bit of a side step and NV made a good leap with 500 series but again AMD took the crown with the 7000 series (which for some reason was about 33% underclocked at launch with parts capable of 30% OCs easily) and even when the 680 came out OC vs OC Tahiti was faster. 290X was faster than Titan and the 780Ti came out with a full die to compete but price-wise AMD was the better perf/$. Unfortunately, what happened is that the issues in the 2000 and 3000 series stuck, so even when AMD offered better perf/$ with the 4000, 5000, 7000 and 200 series NV still sold more. That led to stuff like Fiji not being as fast as the 980Ti and then Vega 64 being a big letdown. RDNA was a good arch but AMD never released a properly top tier part and then we arrive at RDNA2 where it is competitive in raster but NV does have a few more features and is much better at RT although it comes at a very large premium.

But no. NV have not been the market leader for 20 years.

That definitely helps with older hardware.

And I'm lucky because like I said I give 2 fucks and a half about RTX.

Just testing Chernobylite and half the time there is not a single difference even on side by side screenshots. Yet the performance is still tanked to the ground.

When there's a scene however where every effect applies it looks brilliant. Just saw a Dying Light 2 video and it looks totally transformed with RT.

DonkeyPunchJr

World’s Biggest Weeb

Exactly how I feel as well. Ray tracing is where the performance increase is most needed. How many non-RT games are there that challenge current high-end GPUs?

I care and I don't build high-end machines. Would love to use RT but the performance hit is savage.

Ray tracing is literally the reason why I'd consider an upgrade in the first place. Without RT – and with real heavy hitters with DLSS – my 2070 Super is still quite capable. So non-RT performance isn't really that interesting to me and if DLSS is getting still more frequently implemented than FSR2 (which it seems as of now) there's little AMD can do to convince me except for 100% more performance than my 2070S for 500 EUR tops, because the lack of DLSS and performance thereof has to be mitigated by a huge performance surplus.

"It's 3% faster in raster and 30% slower in ray tracing….but who cares about ray tracing?" is not a convincing argument.

Other than reflective surfaces there is no graphical improvement with Doom Eternal RT. And in most cases, that's all RT offers.

of course with RTX off I'll get way better performance but everything above 144 fps on a 144hz screen is wasted potential for a game like this.

I'm not over here needing framerates of 2x my refresh to optimise input lag like CS:GO players do

and of course you see a difference... screen space reflections are disgusting, and with RT Doom Eternal gets rid of that shit, so one of the worst eyesores of modern graphics gone is absolutely worth it for me to not run at 2x my monitor refresh

Full raytraced shadows and lighting looks good but we only see this in very simplistic games such as Quake 2.

winjer

Member

This is BS.

20 years ago the 9700pro launched with proper fp32 and the ability to run AA and AF at playable framerates. When FX did finally release it could only handle FP24 so when playing stuff like Source engine games (Half-Life 2 being a big one at the time) if it didn't run in compatibility mode with worse image quality it ran like absolute dog shit. Then the 9800XT released and cemented ATi.

The R300 is the one that only supported FP24.

NV30 is the one that supported FP32 or FP16.
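To make the FP16/FP24/FP32 difference concrete, this sketch rounds a value to each format's mantissa width. The bit layouts assumed here are 1/5/10 (sign/exponent/mantissa) for FP16, 1/7/16 for R300's FP24, and 1/8/23 for FP32. Exponent range, denormals and special values are ignored, so it only models precision, not how either GPU actually executed shaders; `FloatFormat` is a hypothetical helper.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class FloatFormat:
    name: str
    exponent_bits: int
    mantissa_bits: int  # stored fraction bits; an implicit leading 1 adds one more

    @property
    def epsilon(self) -> float:
        """Gap between 1.0 and the next representable value."""
        return 2.0 ** -self.mantissa_bits

    @property
    def decimal_digits(self) -> float:
        """Approximate number of significant decimal digits."""
        return (self.mantissa_bits + 1) * math.log10(2)

    def round_mantissa(self, x: float) -> float:
        """Round x to this format's precision (exponent range is not modeled)."""
        if x == 0.0:
            return 0.0
        m, e = math.frexp(x)  # x == m * 2**e with 0.5 <= |m| < 1
        scale = 2 ** (self.mantissa_bits + 1)
        return math.ldexp(round(m * scale) / scale, e)


FORMATS = [
    FloatFormat("FP16 (partial precision)", 5, 10),
    FloatFormat("FP24 (R300)", 7, 16),
    FloatFormat("FP32 (full precision)", 8, 23),
]

if __name__ == "__main__":
    for f in FORMATS:
        print(
            f"{f.name:<25} eps={f.epsilon:.2e}  ~{f.decimal_digits:.1f} digits  "
            f"1/3 -> {f.round_mantissa(1 / 3):.12f}"
        )
```

For example, 1/3 rounds to 0.333251953125 in FP16, while FP24 keeps about five significant digits and FP32 about seven, which is why FP24 was enough for HL2's shaders while FP16 carried an image-quality cost.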

01011001

Banned

Other than reflective surfaces there is no graphical improvement with Doom Eternal RT. And in most cases, that's all RT offers.

Full raytraced shadows and lighting looks good but we only see this in very simplistic games such as Quake 2.

the worst thing modern game graphics do is use screen space based effects, the worst of those is BY FAR Screen Space Reflections...

RT reflections get rid of that crap and replace them with accurate ones that aren't turning into a glitched looking mess as soon as things are overlapping or you turn the camera in a wrong way.

so RT reflections rightfully get used here as the lighting in the game is already great looking

and like I said, why wouldn't I use it? I'm already running mostly nightmare settings, at a higher Framerate than my panel supports.

and as I showed in the screenshots DLSS looks noticeably better than native + TAA both while standing still and in motion. so using DLSS to reach that 144fps is not only no big deal, I would leave image quality on the table if I didn't use it!

hell even DLSS in Performance mode only looks marginally worse than native + TAA

DLSS Performance

DLSS Quality

TAA Native


lukilladog

Member

This is BS.

20 years ago the 9700pro launched with proper fp32 and the ability to run AA and AF at playable framerates. When FX did finally release it could only handle FP24 so when playing stuff like Source engine games (Half-Life 2 being a big one at the time) if it didn't run in compatibility mode with worse image quality it ran like absolute dog shit. Then the 9800XT released and cemented ATi. X800 vs 6800 was a close run thing. X1800 vs 7800 was a slight NV advantage but the refreshes of X1900 and X1950 vs the 7900 was ATi all the way.

After that the 2000 and 3000 series were significantly worse than the 8000 and 9000 series NV parts and this is where NV really started to gain market share and snowball. AMD had a great chance with the 4000 to actually take the crown but instead went for a 'small die' strategy which meant RV770 was tiny but was able to be around 90% of GTX 280 performance. They should have made an RV790 with 1,200 shaders and just taken the performance crown. 5000 series was also faster than the 480 and 5000 series brought with it angle independent AF which was a huge IQ improvement.

6000 series was a bit of a side step and NV made a good leap with 500 series but again AMD took the crown with the 7000 series (which for some reason was about 33% underclocked at launch with parts capable of 30% OCs easily) and even when the 680 came out OC vs OC Tahiti was faster. 290X was faster than Titan and the 780Ti came out with a full die to compete but price-wise AMD was the better perf/$. Unfortunately, what happened is that the issues in the 2000 and 3000 series stuck, so even when AMD offered better perf/$ with the 4000, 5000, 7000 and 200 series NV still sold more. That led to stuff like Fiji not being as fast as the 980Ti and then Vega 64 being a big letdown. RDNA was a good arch but AMD never released a properly top tier part and then we arrive at RDNA2 where it is competitive in raster but NV does have a few more features and is much better at RT although it comes at a very large premium.

But no. NV have not been the market leader for 20 years.

Yep, people like to cherry pick and forget about things like unified shaders, tessellation, Forward+ (which is still used in Forza Horizon, and should be used more, honestly), TressFX (or whatever it was called), and without the Mantle API push we would probably still be sitting on some DX11 plus extensions. Also I think Nvidia is still being creamed with RBAR, and the software suite is leagues better.

Ps. - And that Radeon shader post-process injection is like the father of all other post-process ideas like FXAA, SweetFX/ReShade, and even DLSS. Ha, I still have a clip on my channel:


Just went back and double checked. You are correct. HL2 was FP24 by default, but NV30 had to either use FP32, which it was awfully slow in, or FP16, which had an IQ impact and was still only about on par with 9700 Pro FP24 performance.

The R300 is the one that only supported FP24.

NV30 is the one that supported FP32 or FP16.