rodrigolfp

Haptic Gamepads 4 Life

Your arguments are always just "why is it not ROG Ally X?"

In your dreams maybe.

We see you coming in Switch 2 threads not to inform anyone on the Switch 2 question with logical reasons why they made the decision; you're here to say "Well well well, on PC handhelds, if you didn't know..."

The biggest PC handheld cocksucker on neogaf. Never misses a chance to promote.

Not really. Even Switch 1 clocks went up, e.g. when loading. They updated the Switch software for that. 1.8-2 GHz should not be a problem for that ARM CPU. Yes, power usage would go up, but a good cooling solution should handle that.

1 GHz to 2 GHz seems like a lot of overclocking. Don't think Nintendo wants lawsuits from people's houses being burnt down.

Criticising an aspect that is better on one vs another =/= cocksucking/promoting. You seem personally attacked by SW2 criticism.

Why even come into this thread with this kind of crud answer "x86 handheld PCs CPUs run at 2+GHz and don't burn hand"

To say I think Nintendo fucked up with the SW2 "design" if temps are a problem for a fully clocked ARM CPU in handheld mode.

Discussing technical aspects = trolling. Noted.

I give up

Continue trolling every Switch 2 thread for all I care. What a fucking idiot

Wishing a console to be something else than it is, including its form factor, yes it's fucking trolling

Wait, how do you know that??

Curiously enough, the clock speeds in docked mode are actually lower than in handheld mode

Reviews wishing aspects of games/gaming machines were something else/better is fucking trolling. Noted too.

Yeah. Why not give a $1000+ PS5 Pro Pro in a big case with a 5090 as an option for consumers, as some other past thread suggested??

I wisH plAysTAtioN 5 wAS MuCh moRe POWerfUL lIKe A 5090, I MeaN i caN Fit It iN a 15L CAse, WhaT's THE EXCusE?

Gone for a while but not forgotten. Back on June the 30th by popular demand.

they says runs well in own vid you post oohoohoo

I'm talking specifically about the Cyberpunk port where the DF comparison showed the Switch 2 version resolving more distant detail at times than even the Series S version.

From all the media videos I've passively seen, frustums/draw distances look set up like Vita games compared to typical PS4 home console renders IMO, and lots of items look like they are statically lit immediately outside the foreground on Switch 1 and 2 game versions, and lots of facet edges on random items too.

Maybe it is just early days and development pipelines are still too much in Switch 1 development, but even with Switch 2 ports having performance issues they also still look like they aren't carrying a full home console workload, yet IMO.

This has become my strategy as well. Mostly platformers or side-scrollers and RPGs; outside of the big, big ones, I've been playing on my handheld and I really enjoy it. I seem to play it more often.

In handheld mode I play smaller games, like Dead Cells for example. Big screen games don't feel good enough for me and I prefer playing them on TV.

Bro doesn't know how clockspeed works on a computer

x86 handheld PCs CPUs run at 2+GHz and don't burn hands but that ARM CPU will burn houses??

It's not an overclock. It's default clocks.

Most PC CPUs are not being overclocked by 100%. There is a reason why when people do extreme OC they have extreme cooling to go with it.

A computer can't set a house on fire by running at a high clock speed. If CPU/GPU temps get too high, it will thermal throttle and forcefully slow itself down. If temps still rise, it will force a shutdown before any kind of physical damage can occur.

Please enlighten me good sir.
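For anyone who wants the throttle-then-shutdown behaviour from the post above spelled out, here is a rough sketch. Every name and number in it (`ThermalPolicy`, `next_clock`, the temperature thresholds, the clock steps) is made up for illustration; real firmware on the Switch 2 or any PC handheld will differ.

```python
from dataclasses import dataclass


@dataclass
class ThermalPolicy:
    # All values are hypothetical, chosen only to illustrate the idea.
    throttle_c: float = 85.0   # start slowing down at or above this temperature
    shutdown_c: float = 105.0  # force power-off at or above this temperature
    min_mhz: int = 400         # lowest clock the chip will drop to
    max_mhz: int = 2000        # default (non-overclocked) maximum clock
    step_mhz: int = 200        # how much to change the clock per control tick


def next_clock(policy: ThermalPolicy, temp_c: float, clock_mhz: int) -> int:
    """Return the clock for the next tick in MHz, or 0 for a forced shutdown."""
    if temp_c >= policy.shutdown_c:
        # Critical trip point: cut power before physical damage can occur.
        return 0
    if temp_c >= policy.throttle_c:
        # Too hot: step the clock down, but never below the floor.
        return max(policy.min_mhz, clock_mhz - policy.step_mhz)
    # Cool enough: step back up toward the default maximum.
    return min(policy.max_mhz, clock_mhz + policy.step_mhz)
```

Calling `next_clock` once per tick with the current sensor reading gives the slow-down-first, shut-down-last behaviour. It also shows why a console that wants identical performance in every room temperature would rather pick a fixed clock it can always sustain.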

Why not design close enough and not suck in clock/heat aspect??

That would make it suck even more.

hookers?

You're going overboard, mate. If he bothers you then use the ignore function. Don't say you're giving up and then continue responding. For what it's worth, I agree with rodrigolfp. I prefer the Steam Deck and ASUS ROG Ally design and functionality to the Nintendo Switch 2. You don't have to agree with that, but this is a forum where people are allowed to engage in constructive discourse. You were the one who responded with apparent hate/disgust because someone brought up a tangential point in regard to the OP. Your reaction was the real disruption in this thread.

You don't need to because of how they nailed the design. Children to adults can comfortably play on them without issue.

You see me go into every Steamdeck thread saying how I would prefer a smaller form factor? No? Take example.

How does coming into a thread with a legit question from OP help in any way by saying how PC handhelds can clock higher? How is this of any help? Bigger heatsink, well "NiNtenDo shOudL haVE biGgeR heATsiNK!! Hur hur hur" Oh yea very helpful information. Brilliant collaboration and exchange of knowledge. We really need more posters like that! /s

"Constructive discourse"... HA! Another way of saying shitposting.

The OP made a comment about CPU usage not improving (and in fact, worsening) when docked. The general consensus is that this was necessary to prevent the device from getting too warm/hot in docked mode, which would make it uncomfortable to pull off of the dock to use in handheld mode. His remark, which is valid, was that if Nintendo had marginally increased the footprint of the Switch 2, they could have implemented a better heatsink or cooling solution that would allow the CPU to run at higher clock speeds when it is docked while still keeping the device cool enough to comfortably transition back to handheld mode.

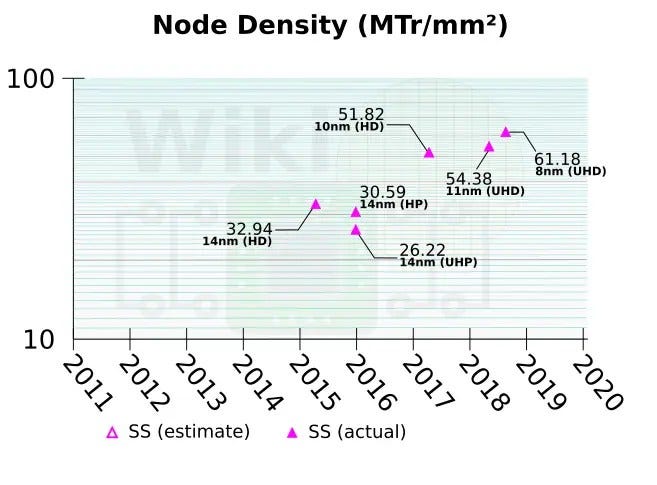

Even without increasing the size, if they had used 5nm transistors instead of 8nm transistors, this would have also allowed the CPU to run at a higher clock while docked without sacrificing cooling. The smaller transistors would have increased performance per watt while also improving power efficiency (and thermals). Additionally, that would have freed up space internally, meaning thermal hotspots would have decreased. There are ways Nintendo could have improved the design to eliminate this issue. Talking about that isn't a bad thing. Nobody is telling you that you can't like Nintendo or your Nintendo Switch 2. Take a mental health day, mate. You're going off the rails.

I'm of the opinion they probably should have made the Switch 2 a touch thicker for a slightly bigger battery and ported the design to some variant of 5/4nm. An extra few mm of thickness won't affect the portability that much, especially considering the overall size of the device. Naturally that would probably have tacked on an extra $60 or so to the BOM, not including paying Nvidia for the node port costs either, as Nvidia didn't do any Tegra version of Ada.

Likely Nintendo didn't want to eat the cost or price it that high, but the performance and battery gains would have been significant. It will likely get ported to a much more efficient node for the eventual refresh.

In my opinion, Nintendo should add a mode where they heavily overclock the CPU in docked mode, to at least 2 GHz or higher for titles like that; that would help immensely. Handheld will continue to run shitty like that, but at least in docked mode it would run decently.

The "worsened" part is just DF's claim and not confirmed by the dev kits. Devs on Famiboards have said this is erroneous.

Nonsense.

They have a wattage limit in docked mode, just like any PC handheld has a limitation. Regardless of these fucking "theoretical" clock speed performances, they cannot hold max clocks on CPU & GPU. Steam Deck, ROG Ally, you name it.

This is a closed platform. CPU clock speed in handheld = docked; this is to remove headaches for devs across both modes. Also, for the umpteenth time, LPDDR5X limitation. Useless to raise clocks needlessly when you're using the bandwidth efficiently already.

Nintendo makes a closed platform. FFS

The fucking thing cannot throttle like your PC shit bricks. From a Canadian in winter cold room temperatures to some south of Spain >50°C hellhole, performance has to hold up constantly.

Nobody knows really. Nvidia always uses low densities, and at already-low clock speeds these things don't scale with node improvements like you see in most node benchmarks. The diminishing returns of smaller nodes are real for wide, low-density, low-frequency mobile chipsets.

Cool dream bruh

Write in your diary how much better you would have designed this over Nvidia engineers, then please, go and @ them on twitter with your ideas. Or better yet, print that on paper and attach it to your CV and send to Jensen. Clearly they missed the brain power that you have when designing an APU. What the fuck do they know after all.

See naguanatak's post.

This has nothing to do with OP. You go and buy that next gen PC handheld with all of those improvements you're dreaming of. Happy for you. I'm sure a 10" wide 3" thick monster can finally clock so high you're gonna have a good 90°C hand warmer for winter.

As if I give a flying fuck about your advice lol. Read the fucking room. Nobody gives a shit about your "what ifs"

Go back to PC handheld threads and circle jerk all you want. Here and here

But isn't there no end to these suggestions? Nintendo could have waited ten years and the switch 2 would have been much more powerful. They could have used top of the line components and sold it for 1.500€ and it would have been much more powerful.

Yet, a lot of people complain about the price of the device as it is.

There is no pleasing everyone. I - and many others - are very happy with what we've got. Would I prefer having a couple of mm more in thickness to have a better cooling system? Yeah... Would I prefer having a chip in the dock or some overclocking to have a more powerful device? Yeah...

We can ruminate all day about what could have been, but some of the remarks in here and in other threads are just not in good faith it seems. And I have to agree...there are people who always bring up some other device that they love and when challenged it always comes down to 'you want an echo chamber of praise and love, this is a forum for discussion, live with it'

Same as in game OTs when people are constantly shitting on a game... Then when questioned say that people who like said game can leave if they only want to read praise... Not suggesting that the people in this conversation are trolls, but the number of people going into threads just to shit on games or devices is through the roof these days

That would reduce the battery down to 30 mins.

Time wasn't really a problem in this case. T239 was designed all the way back in 2021. Porting to a new node doesn't take that long. The costs are probably the reason though: TSMC 4/5nm are both vastly more expensive compared to Samsung 8nm, like over double. Plus, asking Nvidia to do it would have probably come with a price tag. The costs for the console are already up there, and the leap over Switch 1 is already significant, so it makes sense to stick with 8nm for the first version of the console. The thicker console and better node speculation is just wishful thinking on my part. Well, true wishful thinking would have been a Tegra variant of Ada, but that never happened.

I absolutely understand these ideas and suggestions, but price is a huge factor and they went way higher than I initially thought.

Then you have to consider that they have to stop designing and start planning/manufacturing somewhere. You can't just toss a couple of years R&D aside and say 'ok, we take the smaller chip, make the device thicker and toss in a bigger battery'. By the time you have finished R&D, design and are able to start manufacturing, some new components will be available and people suggest or even demand that you make the device 50-100€ more expensive.

Nobody is quite claiming to be able to do a better job than nVIDIA did, but nVIDIA could have, just like they would have for PS3 if Sony had not run to them at the last minute with a reduced budget, having already blown theirs without finishing Toshiba's GPU design.

Write in your diary how much better you would have designed this over Nvidia engineers, then please, go and @ them on twitter with your ideas. Or better yet, print that on paper and attach it to your CV and send to Jensen. Clearly they missed the brain power that you have when designing an APU. What the fuck do they know after all.

It doesn't. However, my reading ability is in question.

What does the battery life matter in docked mode?

I don't know why you guys are expecting PS5 level power on Switch 2.

The system was designed around being a hybrid; the decision was made in order to keep it cool and easy to use.

Maybe my expectations were very different from yours. What I wanted was a big upgrade over the original Switch, which is exactly what I got.

I don't think that people are asking for PS5 level power on the Switch 2. I could be wrong, but I didn't see those posts myself. I think the point was that the expectation for docked mode is that it boosts performance across the board, but for some reason Nintendo has decided to reduce clock speeds on the CPU in docked mode (although the GPU does get a boost). I don't know the real-world performance impact the reduced clock speeds are having. If the impact is zero (or so limited as to be indistinguishable from zero), then this is much ado about nothing. I know the OP posted an example, but I am not taking a single example as gospel truth of a widespread issue when that could also be chalked up to poor optimization by the developer.

CDPR, similar to Bethesda, always have technical issues every time they release a game.

This was the case with Witcher 3 when it first came out; even the PS5 version had issues.

No matter the power, these devs can't release without suffering from some kind of technical issues.

So you're saying nobody should be able to have a discussion about how to make products better because there are always ways products could be better? This is a discussion forum. People are allowed to discuss. If you don't like the conversation you don't have to participate...

We are not arguing about different revisions of 3nm, but Samsung optimised 10nm (which is what the 8nm node we mention is) vs 3-5nm TSMC (3nm being available but out of Nintendo's self imposed conservative budgeting reach). I am not saying to expect 100% clock speed improvements, but a decent clock speed boost or an even bigger power draw reduction yes.

Do we have the two chips to compare in front of us? No, but let's be honest, it is very darn likely. Nintendo wanted to hoard SoCs with the cheapest manufacturing process as soon as possible instead of getting to the end of 2024 and being only able to start manufacturing the volume they intended at a much larger cost with rushed orders for a mid 2025 launch.

nVIDIA had Ampere ported to TSMC 7nm and Blackwell now is on their optimised 5nm (4N) process. I am not finding sources that do not generally show Samsung processes being behind the "equivalent" TSMC ones, and optimised 10nm vs 5nm TSMC, mmmh… I find it hard to believe it is as much of a wash as you say (otherwise Apple would not spend so much money to be on the very latest process from TSMC for their A series SoCs either):

The SoC, based on the leaks, was finalised a few years ago (active development starting in 2019 and the chip being finalised around 2021) and under instruction of their client. Manufacturing tech was not forced on them, feature set (what from Ampere, what from Ada or what became Blackwell, etc…).
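For the node argument, the usual first-order relation for dynamic (switching) power is worth keeping in mind. This is the generic textbook CMOS approximation, not anything measured on T239, and it ignores leakage entirely:

$$
P_{\text{dyn}} \approx \alpha \, C \, V^{2} f
$$

Here $\alpha$ is the activity factor, $C$ the switched capacitance, $V$ the supply voltage and $f$ the clock frequency. A denser node tends to lower $C$ and lets the chip hit the same $f$ at a lower $V$, and since $V$ is squared, that is where "a decent clock speed boost or an even bigger power draw reduction" would come from. How much of that a wide, low-clocked mobile design actually gets is exactly the part being argued about in this thread.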

All I suggested is that there are people on here not discussing or suggesting, but merely shitting on anything that isn't their preferred way of playing/device/game... whatever.

It is absolutely fair to compare a new device to an existing device to see what compromises were made. It is fair to critique either device. What was offered in this thread was critique. Who gets to decide when someone is genuinely critiquing a product and who is "merely shitting on anything that isn't their preferred way of playing"? Is it Buggy Loop? Because I will create my own account suicide thread right now if that's the case.

Show me where rodrigolfp was just shit-posting and I'll retract my comments. He has an opinion, and he's entitled to it. You and Buggy Loop are free to disagree, but the only one that made a scene in here was Buggy Loop. He flipped out because he has seen multiple posts comparing the Nintendo Switch 2 to the Steam Deck. That's going to happen. They're similar devices. I compare my Steam Deck to my Nintendo Switch, and to my PlayStation Portable, my PlayStation Vita, and my ASUS ROG Ally. If I had a Nintendo Switch 2 I would be doing the same thing.

This is one of the easiest ways to judge how poorly or well something is made: by comparing it to an existing product that is similar. How do the aesthetics compare? What about the build quality? How about hardware/software? Real-world performance? All of that is valid to discuss in this forum, and your piggybacking off of the discussion I had with a guy who was losing his marbles for no reason makes it seem like you disagree with the discourse taking place at all. Otherwise, why did you respond to me?