Just bought it. I was never a fan of Brothers, but I adore It Takes Two. It's good to go back and see Josef Fares plant the seeds for what was to come. I'm appreciating the little details of controlling the two brothers a lot more now than I did ten years ago. Go figure.

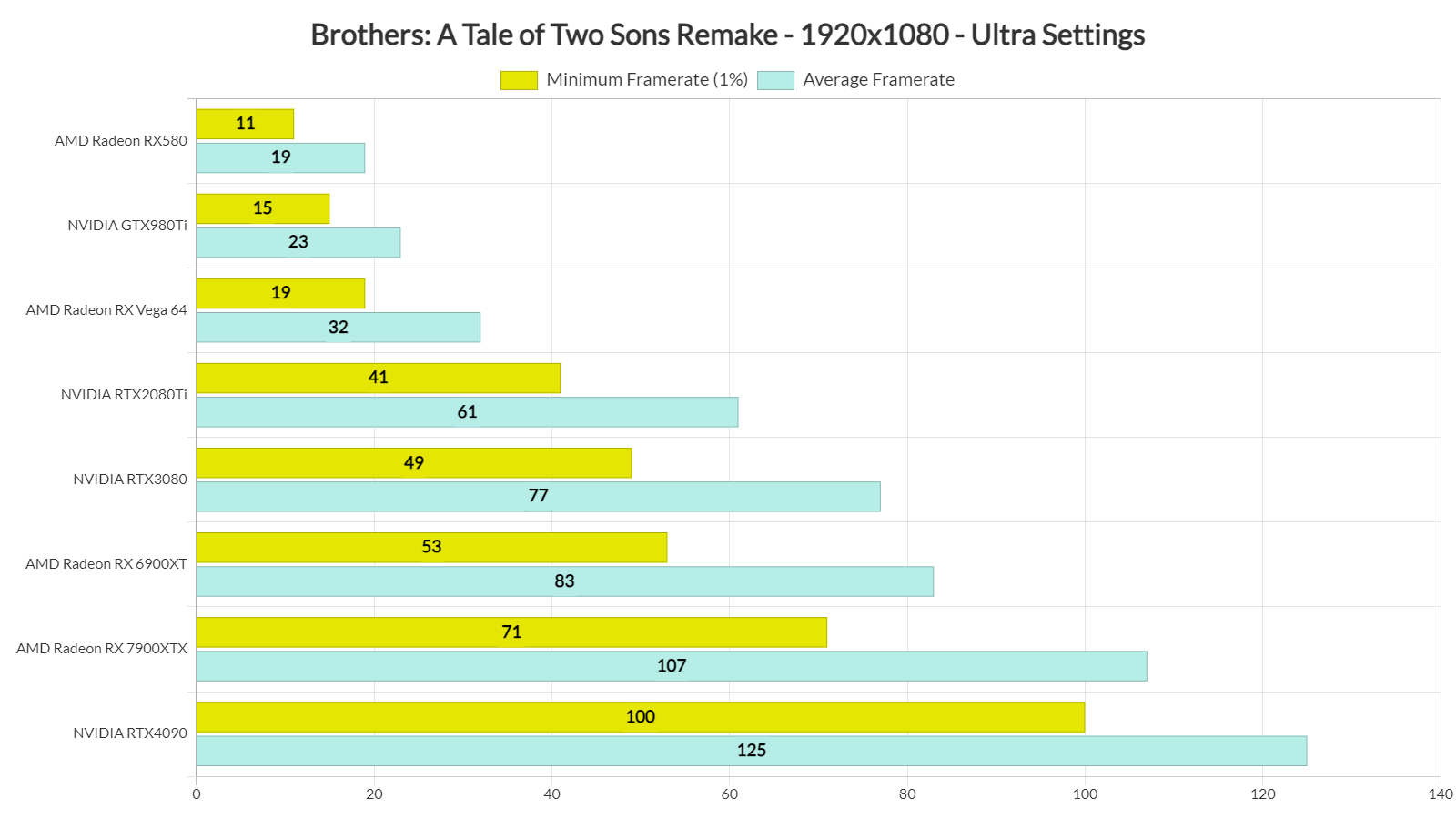

DF wasn't lying. The game is very heavy on the GPU. CPU usage is non-existent, but my 3080 runs it at 30-50 fps maxed out at 4K with DLSS Quality. I don't know why people say VRR or G-Sync hides frame drops; I can notice every fucking frame drop on my LG CX even with G-Sync engaged. So I just capped it to 30 fps, because it's much smoother that way.

Not surprised consoles are running it at 1440p and 30 fps. The game is beautiful and very pleasing to look at, but I'm not seeing why it's so expensive on the GPU. Other UE5 games I've played aren't this heavy.