Utter nonsense...

Show me any TAA in any game that even gets within a mile of what DLSS does.

DLSS can reconstruct a friggin 2160p image from a 1080p source that looks indistinguishable from native resolution, or even better in the small details.

No TAA solution in any game has ever managed to produce more than a muddy mess in that situation.

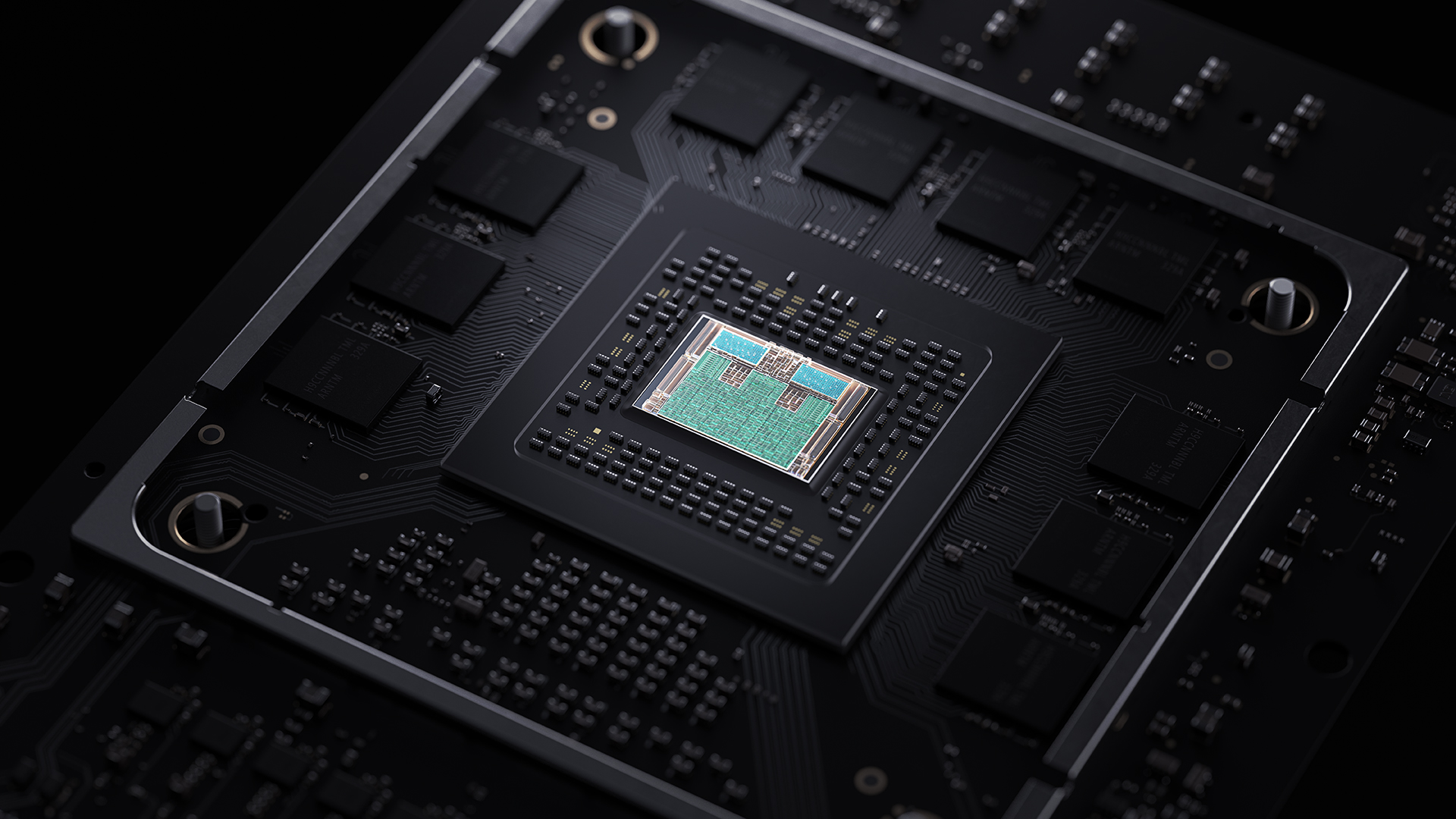

And while it is software... are you seriously denying that software with dedicated silicon runs several orders of magnitude faster?!?

You're announcing that no car will ever be able to do the quarter mile in under a minute while standing in front of a Ferrari. You do realize that DLSS 2.0 is already out there, together with a giant number of comparison videos and analyses which all invalidate your claims?