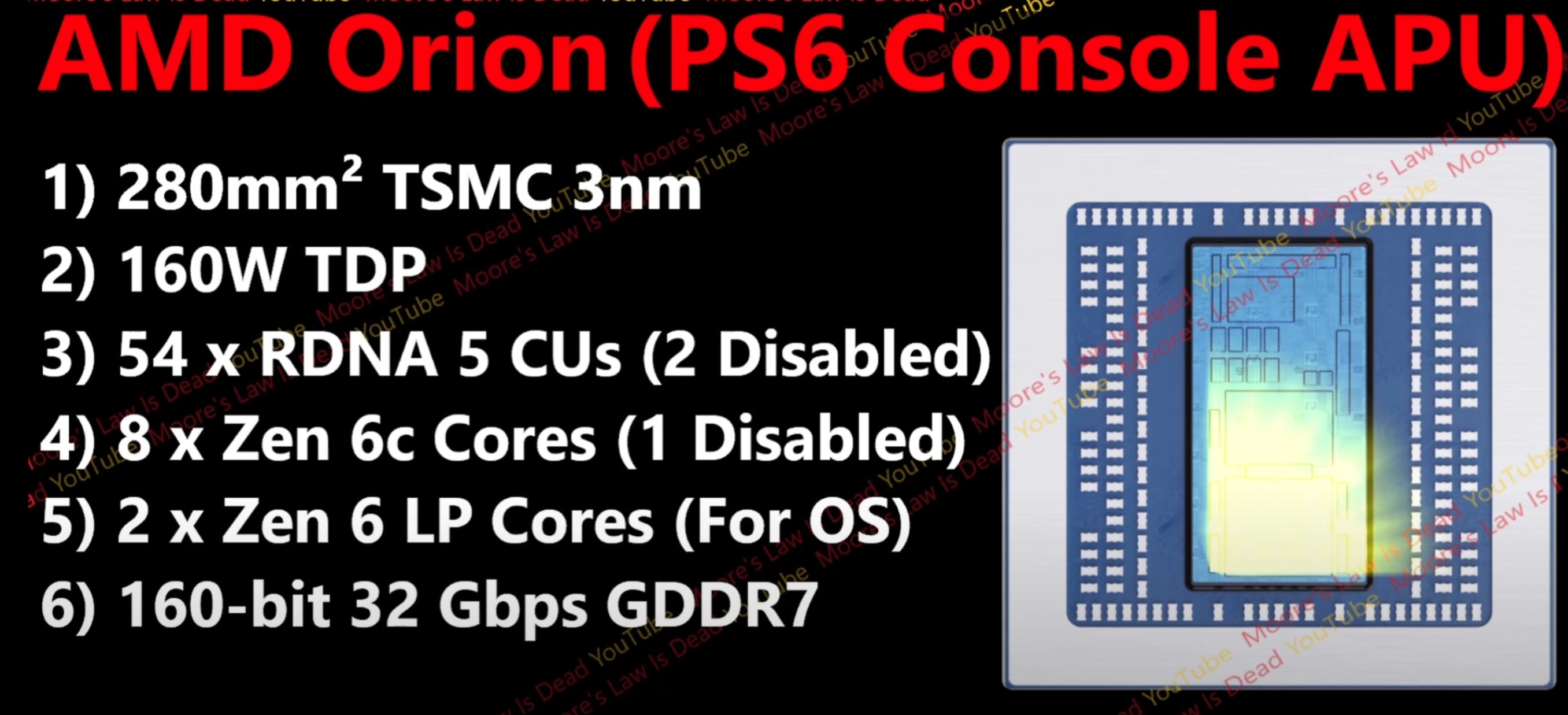

[MLiD] PS6 Full Specs Leak: RTX 5090 Ray Tracing & Next-Gen AI w/ AMD Orion!

- Thread starter Lunatic_Gamer

- Rumor Hardware Hype

VolticArchangel

Member

Raster performance is honestly underwhelming, especially for a console that's releasing in Q4 2027.

pacman4000

Member

> Don't feel like getting quoted by wccftech and all the other tech rags today

It would be funny if wccftech quoted you on the above.

Tobimacoss

Member

> Don't feel like getting quoted by wccftech and all the other tech rags today

Oh, you will get quoted regardless.

"Kepler refuses to comment on the content of MLID's video, heavily implying that MLID doesn't know what he's talking about".

VolticArchangel

Member

> Don't feel like getting quoted by wccftech and all the other tech rags today

"LADIES AND GENTLEMEN, MY NAME IS PAUL, AND WELCOME TO ANOTHER REDGAMINGTECH.COM VIDEO!"

peish

Member

Pick one.

- 160W TDP

- 5090 Performance

160 W TDP for CPU and GPU

160-bit GDDR7 for CPU and GPU

vs

600 W TDP for GPU

512-bit GDDR7 for GPU

What a retord

Gamer79

Predicts the worst decade for Sony starting 2022

> 160 W TDP, 640 GB/s, and under 40 TF? This is supposed to match the 5090 in RT performance?

It's the "secret sauce", aka bullshit.
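For context on the 640 GB/s figure: peak GDDR bandwidth is the bus width in bits divided by 8, times the per-pin data rate. A quick sketch, assuming 32 Gbps GDDR7 on the rumored 160-bit bus (that rate is what 640 GB/s implies; it isn't stated in the thread) and the RTX 5090's 512-bit bus at 28 Gbps:

```python
def peak_bandwidth_gbs(bus_width_bits: int, gbps_per_pin: float) -> float:
    """Peak memory bandwidth in GB/s: bytes per transfer times per-pin rate."""
    return bus_width_bits / 8 * gbps_per_pin

# Rumored PS6 bus; 32 Gbps per pin is an assumption, not a leaked figure.
ps6 = peak_bandwidth_gbs(160, 32.0)
# RTX 5090: 512-bit GDDR7 at 28 Gbps.
rtx_5090 = peak_bandwidth_gbs(512, 28.0)

print(f"PS6 (rumor): {ps6:.0f} GB/s")         # 640 GB/s
print(f"RTX 5090:    {rtx_5090:.0f} GB/s")    # 1792 GB/s
print(f"Ratio:       {rtx_5090 / ps6:.2f}x")  # 2.80x
```

On top of that raw gap, the console's bandwidth is shared with the CPU, while the 5090's is not.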

March Climber

Gold Member

Anyone believing this is going to be highly disappointed.....

Tobimacoss

Member

If Canis has 16 CUs, then that means 8 WGPs, right? So 8 vs 27 (1 disabled, so 26 active). PS6 will be 3.25 times the GPU power of the PS6 handheld. Series X was 3 times the Series S, but with almost equivalent CPUs.
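The arithmetic behind the 3.25x figure, as a sketch: RDNA-style GPUs pair CUs into workgroup processors (WGPs), so the ratio is 26 active WGPs over 8. It only holds at equal clocks; the clock parameters below are placeholders because neither chip's clocks are known, and treating width times clock as "GPU power" ignores bandwidth, caches and power limits.

```python
CUS_PER_WGP = 2  # RDNA-style GPUs pair CUs into workgroup processors (WGPs)

def active_wgps(total_wgps: int, disabled_wgps: int) -> int:
    """WGPs left after the ones fused off for yield."""
    return total_wgps - disabled_wgps

def width_ratio(wgps_a: int, wgps_b: int,
                clock_a_ghz: float = 1.0, clock_b_ghz: float = 1.0) -> float:
    """Relative shader throughput as width times clock; equal clocks by default."""
    return (wgps_a * clock_a_ghz) / (wgps_b * clock_b_ghz)

handheld = 16 // CUS_PER_WGP  # rumored Canis: 16 CUs -> 8 WGPs
ps6 = active_wgps(27, 1)      # rumored PS6: 27 WGPs, 1 disabled -> 26
print(width_ratio(ps6, handheld))  # 3.25 at equal clocks
```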

Zathalus

Member

Oh, I see what has happened: he sees x amount of RT performance over the base PS5 and then just multiplies the FPS from a benchmark. He did the same with the PS5 Pro. That's not really how that works; you get an improved RT pipeline, not the entire FPS number multiplied by a certain amount. That's how people vastly inflated the PS5 Pro's RT performance as well. Assuming this information is even correct.
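One way to put numbers on that objection is Amdahl's law (our framing, not one used in the video): if RT work takes a fraction p of the frame time and only that part gets S times faster, the whole frame speeds up by 1 / ((1 - p) + p / S), not by S. The RT shares below are illustrative placeholders; 12x is the multiplier discussed in the thread.

```python
def frame_speedup(rt_fraction: float, rt_speedup: float) -> float:
    """Amdahl's law: overall speedup when only the RT share of a frame gets faster."""
    return 1.0 / ((1.0 - rt_fraction) + rt_fraction / rt_speedup)

# Hypothetical: RT is 40% of frame time (hybrid RT) and gets 12x faster.
print(round(frame_speedup(0.4, 12.0), 2))  # 1.58
# Hypothetical: RT is 90% of frame time (path tracing) and gets 12x faster.
print(round(frame_speedup(0.9, 12.0), 2))  # 5.71
```

This simple model ignores that the non-RT part of the frame also speeds up on newer hardware, but it shows why a 12x RT figure cannot simply be applied to a benchmark's FPS.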

Tobimacoss

Member

> Oh I see what has happened, he sees x amount of RT performance over the base PS5 and then just multiplies the FPS from a benchmark. He did the same with the PS5 Pro. That's not really how that works, you have an improved RT pipeline, not the entire FPS number being multiplied by a certain amount. That's how people vastly inflated the PS5 Pro RT performance as well. Assuming this information is even correct.

That's exactly what he does, but I think, according to him, this (12x) would play out more in purely path-traced games. Otherwise 6x.

ChorizoPicozo

Member

"Sony is NOT compromising performance next generation".

Of course they are. They are trying to sell a console in order to make bank on games and MTXs through their storefront.

pacman4000

Member

> So 8 vs 27 (1 disabled). PS6 will be 3.25 times the GPU power of the PS6 handheld.

Yeah, 'cause CU count is the only thing that determines GPU performance. Come on now.

StreetsofBeige

Gold Member

I'm just curious from a tech perspective: when Sony/MS do their CU thing and have a few to spare in case some go down, what happens if it loses so many CUs that the backups can't cover for them?

Does the system and game totally bomb out? Or does the game keep working, like on a gimped PC, just at shittier performance?

Tobimacoss

Member

> Yeah, 'cause CU count is the only thing that determines GPU performance. Come on now.

Well, obviously the clocks will determine the final result, but the smaller the gap, the better for the devs, technically.

Think about it like this: TSMC's claimed transistor density for N3P is 224 MTr/mm², and Apple SoCs are also in this range. So if the chip is 280 mm², it should land somewhere around 224 MTr/mm² × 280 mm² ≈ 62.7B transistors. A 9070 XT is 53.9B transistors, but that is for the GPU alone. This chip will also include the entire CPU and the rest of the SoC, which is maybe 30-40%(?) of the die area if you eyeball it. IMO, if you expect this to massively outperform some ~200 mm² 3nm RTX 6060 or 9060 XT successor, you're setting yourself up for disappointment.
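The transistor budget above, written out as a sketch. The inputs are the post's own numbers; the model assumes the headline density applies uniformly across the die, which is optimistic, since SRAM, analog and I/O blocks don't reach a process's peak logic density.

```python
density_mtr_per_mm2 = 224  # TSMC's claimed N3P density, per the post
die_area_mm2 = 280         # rumored die size, per the post
total_btr = density_mtr_per_mm2 * die_area_mm2 / 1000  # billions of transistors

for cpu_soc_share in (0.30, 0.40):  # the post's eyeballed CPU/SoC share of the die
    gpu_btr = total_btr * (1 - cpu_soc_share)
    print(f"{cpu_soc_share:.0%} non-GPU -> ~{gpu_btr:.1f}B transistors for the GPU")

print(f"total ~{total_btr:.1f}B vs 53.9B for a 9070 XT (GPU only)")
```

Under these assumptions the GPU portion lands somewhere around 38-44B transistors, well short of a full 9070 XT's budget.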

pacman4000

Member

> Well, obviously the clocks will determine the final result, but the smaller the gap, the better for the devs, technically.

It's a >10 times difference (hopefully 20 times...) in power draw. That's really all you need to know.

(Power draw and performance have nowhere near a linear relationship, but the difference will be huge, no doubt about that. Good thing most graphics tasks are highly scalable, then.)
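To put the "nowhere near linear" remark in rough numbers: a common first-order model says dynamic power scales with C·V²·f, and since voltage has to climb with clock speed in the usual operating range, power grows roughly with the cube of frequency. The sketch assumes that idealized cube law; real voltage/frequency curves, leakage and memory power make it messier.

```python
def relative_clock_at_power(power_ratio: float) -> float:
    """Clock scaling for a given power ratio, assuming P ~ f**3 (V roughly proportional to f)."""
    return power_ratio ** (1 / 3)

def relative_power_at_clock(clock_ratio: float) -> float:
    """Power scaling for a given clock ratio under the same cube-law assumption."""
    return clock_ratio ** 3

# A 10x power budget buys only ~2.15x clock under this model, not 10x.
print(round(relative_clock_at_power(10.0), 2))  # 2.15
# Conversely, dropping clocks to 80% cuts power to about half.
print(round(relative_power_at_clock(0.8), 3))   # 0.512
```

This is also why a bigger power budget tends to go into a wider GPU rather than much higher clocks: adding units at the same clock scales power closer to linearly.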

viveks86

Member

> I'm just curious from a tech perspective, when Sony/MS do their CU thing and they got a few to spare in case some go down, what happens if it loses so many CUs the backups can't fulfill it?
>
> Does the system and game totally bomb out? Or the game can still work but it's like someone with a gimped PC and it can still work but at shittier performance?

They are nonfunctional (and possibly defective), but included to increase manufacturing yield and reduce production costs. For all practical purposes, pretend the disabled CUs don't exist. No CUs disabled typically means a super-high-quality chip and, as a result, the highest cost to produce. Those end up being the ultimate/flagship models in a GPU series.

Post manufacturing, no CUs should go down. There are no spares/backups.
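To illustrate why shipping with disabled units raises yield: under a simple textbook model where each unit is independently defective with probability p, the share of dies that are sellable with at most k units fused off follows the binomial distribution. The 2% per-WGP defect rate is a made-up number, and the model ignores defects outside the shader array.

```python
from math import comb

def yield_with_spares(n_units: int, max_disabled: int, p_defect: float) -> float:
    """Probability that at most `max_disabled` of `n_units` units are defective."""
    return sum(
        comb(n_units, k) * p_defect**k * (1 - p_defect) ** (n_units - k)
        for k in range(max_disabled + 1)
    )

# Hypothetical 2% defect rate per WGP on a 27-WGP die.
for spares in (0, 1, 2):
    print(f"{spares} WGP(s) may be disabled -> {yield_with_spares(27, spares, 0.02):.1%} usable dies")
```

With these made-up inputs, allowing a single disabled WGP raises the usable fraction substantially. As noted above, the choice is made once at the factory: the disabled units are fused off for good and never act as runtime backups.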

pacman4000

Member

> It is pointless to buy PS6 if you will just see their exclusives be ported to PC and other consoles.

Yeah, 'cause everyone already has a capable gaming PC or an equally capable console. PS6 is totally pointless.

Stuart360

Member

> Is it just me, or does 9070/5080 level in almost 3 years, for an 8-year gen, seem really fucking low?

Were you expecting 6090 level or something?

I'll bet anything a 4090 will still eat it for breakfast raw-power-wise, probably a 4080 too.

Which for a console would still be good.

As with every new console gen coming up, keep your expectations in check, and don't believe everything you read or watch from clueless YouTubers.

Black_Stride

do not tempt fate do not contrain Wonder Woman's thighs do not do not

5090 Performance?

In 2027 for 160W?

If Nvidia announced this we would laugh at them.

160W for RTX 5090 performance from the 2026 next gen, let's call it the RTX 6070, would be called bullshit straight up; we wouldn't even entertain it.

Why are we gonna believe a console could do that?

Physiognomonics

Member

3 shader engines and 2 deactivated CUs don't go together. TDP should be higher, and bandwidth is way too low.

Fake or highly modified.

Logically it's going to have 2 shader engines and 4 deactivated CUs.

sncvsrtoip

Member

Bandwidth and TDP are just terrible, as predicted. Time to move to PC. I'll buy this weakling but will only play one first-party game on it per year.

sncvsrtoip

Member

> PS6 is going to be like a 5090 with 40 GB RAM?

Nope. With this bandwidth it will be something like 30% faster than the Pro in raster, and even more bandwidth-limited than the Pro.

Oblivion78

Neo Member

Never bet against Cerny

NuclearDiarrhea

Member

> If the next console has even half of the 5090's RT performance, I will eat my own shit live on cam, but if not, someone else will have to do it. Question is, who?

I'm game.

TheRedRiders

Member

I have a hunch only half this information is true, the sketchy stuff being the TDP and RAM. TDP is likely going to be similar to the PS5's, and RAM is likely going to be 24 GB.

I expect the RT uplift to be huge, with 54 CUs as well. This looks very promising.

SplunkyMunkey

Member

I probably would have changed it to:

10-core high-powered X3D CPU, 1 LP core disabled for the OS, good for CPU-demanding games

320-bit GDDR7 @ 720 GB/s

58-CU GPU with 2 disabled and 20 MB L2 cache; 40-50 GB of RAM

40-50 TF of performance

Probably around 200-300 W TDP

Should be enough for at least somewhere between 5080 and 5090 level, and it would definitely be more powerful than a 6090 XT and an RTX 4090.

The ninja farmer

Member

Yeah. For such huge step-ups while being 'efficient', some of this doesn't sound right, and things like bandwidth and power seem off, at least compared to the levels and direction the last few PlayStation consoles took. They did work on efficiency there, especially with the PS5.

During the summer they were saying 40-48 CUs for the PS6. Now it's in the 50s? As a response to the next Xbox chip? I'm not sure that's how Sony or AMD design a chip, with a quick 'add more X' so as not to look weaker than the opposition.

Doesn't history say the next PlayStation will be about X700 or X700 XT level, with some Sony tweaks and bits? E.g., the PS5 being about a 6700, then a 7700 for the Pro, as the equivalent AMD cards.

But perhaps with boosted machine learning and ray tracing parts?

> 25% seems like a huge number compared to all the ones he's been using up to that point. He could have very easily put down

'Huge' is a weird term to use here in any context.

It'd be the second-smallest delta we've had between two same-gen consoles in the last 30 years (the smallest being the current gen), and that's without normalizing for diminishing returns (i.e., 25% in the PS1 era was significantly more notable than it is today).

I mean, I get that as differences disappear we're narrowing the focus of how we talk about numbers in enthusiast circles, but in practice, hardware differences might as well not exist at this point, given how little impact they have on the end-user experience.

Now, the number is obviously pulled out of thin air, so it's about as believable as all the FUD that happens every generation a few years out, but that's all 'if' it actually materialized.

Tobimacoss

Member

> I probably would have changed it to:
>
> 10-core high-powered X3D CPU, 1 LP core disabled for the OS, good for CPU-demanding games
>
> 320-bit GDDR7 @ 720 GB/s
>
> 58-CU GPU with 2 disabled and 20 MB L2 cache; 40-50 GB of RAM
>
> 40-50 TF of performance
>
> Probably around 200-300 W TDP
>
> Should be enough for at least somewhere between 5080 and 5090 level, and it would definitely be more powerful than a 6090 XT and an RTX 4090.

And for $499, right?

sncvsrtoip

Member

> No.
>
> 9070 to 5080 performance in raster. Amount of RAM is still not set in stone.

You can forget about 5080 level with this bandwidth; it will be closer to a 5070 (the base one, not the Ti).

pacman4000

Member

The video is silly on so many levels, but one thing worth mentioning is that TDP figures are kinda pointless if your next sentence is "clocks are not decided".

The TFLOPS figure is dumb as well, since it includes dual issue and he then compares it to single-issue PS5 Pro numbers. "Wow, wow, 3 times higher". One would think we had left that stupidity behind by now...

I think MLiD has proven that he has access to some valid information/sources. The problem is that he is not satisfied with that. He just has to add his own, often less than stellar, analysis and speculation, and even downright hyping.

The sad thing is that he seems unaware that his reputation would be a lot better if he were just the "reliable leaker without the bullshit" guy instead. But hey, maybe "entertainment" is his main priority, and if so, he's doing a pretty good job.
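Why dual issue muddies the comparison: the usual peak-FP32 formula is CUs × 64 lanes × 2 FLOPs per fused multiply-add × clock, and counting RDNA3-style dual issue doubles the paper figure again without the hardware getting any wider. The base PS5 (36 CUs at up to 2.23 GHz, no dual issue) is a known data point; the 52-CU, 2.5 GHz inputs are purely hypothetical, since clocks are reportedly not decided.

```python
def peak_tflops(cus: int, clock_ghz: float, dual_issue: bool = False) -> float:
    """Paper peak FP32 TFLOPS for an AMD-style GPU."""
    lanes_per_cu = 64   # FP32 lanes per CU
    flops_per_lane = 2  # one fused multiply-add counts as 2 FLOPs
    issue = 2 if dual_issue else 1
    return cus * lanes_per_cu * flops_per_lane * issue * clock_ghz / 1000

print(f"PS5: {peak_tflops(36, 2.23):.2f} TF")  # 10.28 TF

# Hypothetical GPU: the same hardware quoted both ways.
single = peak_tflops(52, 2.5)
dual = peak_tflops(52, 2.5, dual_issue=True)
print(f"single-issue {single:.1f} TF vs dual-issue {dual:.1f} TF")
```

A like-for-like comparison has to use the same counting method on both sides; mixing a dual-issue number with a single-issue one inflates the apparent gap by up to 2x.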

Kaiserstark

Member

160W TDP still seems low, same as in his last video. The CU count got a small bump; I think it was 40-48 CUs. 2.5-3x PS5 raster performance is definitely believable. I don't believe his RT and memory claims either.

> Bandwidth and tdp just terrible, well as predicted, time to move to pc, will buy this weakling but will only play 1 first party game on it per year

Kepler said a few weeks ago that the 160W TDP wasn't accurate, so seeing MLID continue to say it makes you wonder what other things he got wrong.

160-bit memory bus and that 160W TDP...

pacman4000

Member

> And for $499 right?

Spot on. It's so easy to make up specifications if you don't have to care about the economics.

I think they should go for a AT0-sized GPU, 16 Zen6 cores and 128 GB of GDDR7. Call it "PS6 Kutaragi Edition", I don't care.

sncvsrtoip

Member

> Kepler said a few weeks ago that the 160W TDP wasn't accurate, so seeing MLID continue to say that makes you wonder what other things he got wrong.

It's wrong because AMD oversells the TDP of their products, not because MLID's info is wrong.

SolidQ

Member

> it will be closer to 5070 (base, no ti version)

An RDNA5 CU is a different story, and the 9070 XT is already faster than the RTX 5070 with the same bandwidth.