I'm sure this is from the same source as the PS5 unified CPU cache. I'm sure 4 exclusive deals were kept secret for the last 6 months that would have destroyed any goodwill from this deal. Add to that, do you think Microsoft would have paid 7.5 billion if everything in the next 5 years was a Sony exclusive in one way or another, full or timed? lol


- Status

- Not open for further replies.

MS bought Bethesda, so what? It'll be years before an exclusive game from them is released, Crash 4 is happening right now.

Years? Good chance we see Starfield in the next 12 months considering how much Sony wanted that exclusive contract. Must have been pretty far along to get to that stage and show off. Take PS5 development off the table and it shaves time off too.

Neo Blaster

Member

Years? Good chance we see Starfield in the next 12 months considering how much Sony wanted that exclusive contract. Must have been pretty far along to get to that stage and show off. Take PS5 development off the table and it shaves time off too.

Not a single official shot from the game so far. We may even see something within that time frame, but the actual release is far away.

ToadMan

Member

Years? Good chance we see Starfield in the next 12 months considering how much Sony wanted that exclusive contract. Must have been pretty far along to get to that stage and show off. Take PS5 development off the table and it shaves time off too.

Well 12 months is possible I guess, given COVID and the acquisition set it back. So let's see it then - when we do it'll be worth a comment. Until then it's a name and little else...So what is there to say?

May as well talk about Halo Inf

FranXico

Member

PS5: Possible patch to get 1800p

Patch is already undergoing certification. It's more than "possible".

3liteDragon

Member

FatKingBallman

Banned

LiquidRex

Member

A really cool but short sneak peek trailer. Aaaaaand it's gone (copyright strike from Zenimax).

What was it?


SSfox

Member

Guys, I want to share this link. It's not directly related, but I thought I should post it here.

Harada Katsuhiro (for those who don't know, he's been the Tekken director for 25 years) created a new YouTube channel where he discusses stuff (video game stuff will probably be a big part of it), and he's going to invite people on. His first guest is Ken Kutaragi (for those who don't know, he is the father of PlayStation).

FranXico

Member

Is he hyping FidelityFX again? No thanks.

Thirty7ven

Banned

MS bought Bethesda, so what? It'll be years before an exclusive game from them is released, Crash 4 is happening right now.

Bethesda acquisition is a big dick move and for Xbox fans it's a great sign that finally the future of the platform will be better than the past.

But the effort being put into this to somehow turn it into some sort of check mate move on the industry is pure premature ejaculation. Which is nothing new for the community.

TheRedRiders

Member

Is he hyping FidelityFX again? No thanks.

His video is entirely based on these threads.

SportsFan581

Member

Do read the rest of the posts before one is so quick to hit reply

Why do that? It's much better to type first and think later.

DeepEnigma

Gold Member

Why do that? It's much better to type first and think later.

Neo Blaster

Member

What we arguing about today boys

How Xbox fans are once again 'waiting for E3'...oops, for Bethesda exclusives.

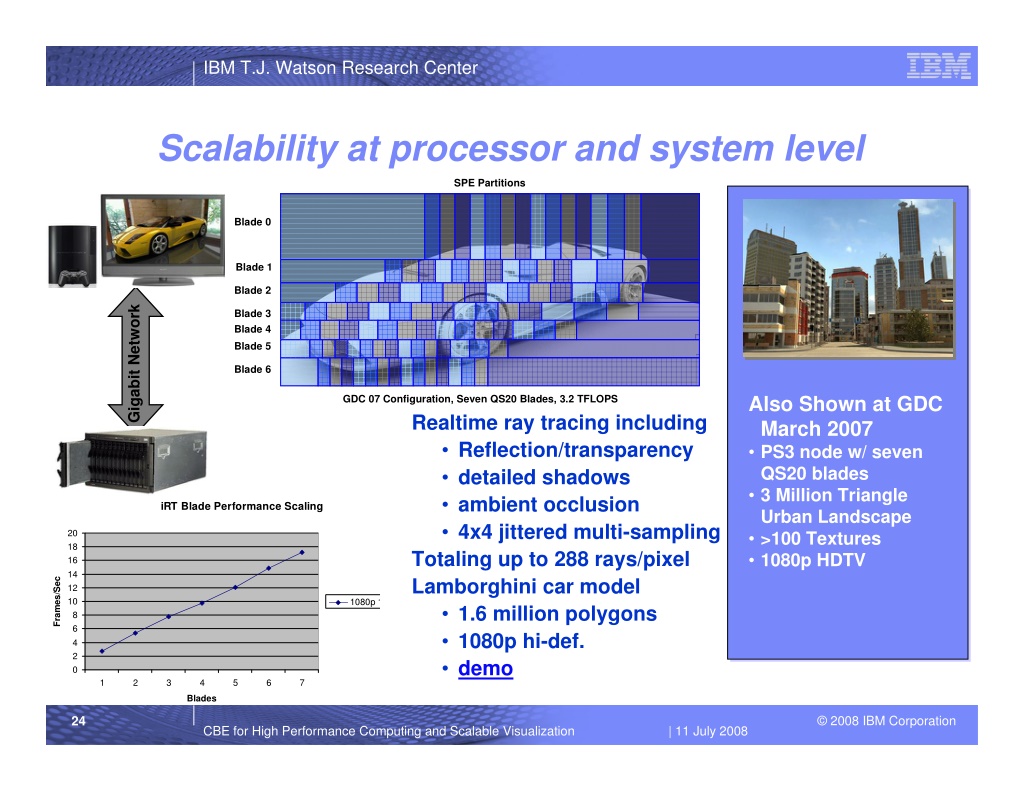

It's similar to the SPU of a CELL...

CELL is two different types of cores (heterogeneous); the SPU is but one, and it would take at least a dozen Tempest Engines (each equivalent to eight SPUs) to run that urban landscape demo -- which means a PS3 didn't produce that RT'd imagery by itself. It was actually assisted over a network by seven QS20 CELL-based blades (14 CELL processors)...

One of the visualization architects responsible for the demo wrote on his blog:

Sounds sweet... Sweeney would be all over something like that. Years ago he voiced:

I'd like to think that if a "CGPU" of Sweeney's (and your) description is the future of rendering, then a CELL-based CGPU may be biding its time given that:

- CELL demonstrated it could trounce a top-of-the-line GPU at software-based RT despite being significantly disadvantaged in terms of transistors and flops (in line with Sweeney's circa '99 prediction for '06-7: "CPU's driving the rendering process"... "3D chips will likely be deemed a waste of silicon")

- the PlayStation Shader Language (PSSL) is based on the same ANSI C standard superheads from MIT used to mask CELL's complexity

- SPUs are programmable in C++ languages so SPU support of PSSL's C++ structs and members can be added with little or no hassle, which means the benefits of PSSL would likely extend to a massive many-core CELL-based CGPU designed to run shaders across a legion of SPUs and CUs using a single simple shader language

- entries [0015], [0016], [0017] [0052] of this patent and entries [0017], [0018], [0033] of this patent say that the described methods for backwards compatibility can be implemented on "new" processors in various ways

- IBM's open-source customizable A2I processor core for SoC devices has a number of features (it can run in little endian mode; addresses x86 emulation) that make it a prime candidate to replace CELL's PPE (had instructions for translating little endian data; addressed x86, PS1, 2, PSP emulation; A2I's LE mode would add PS4, 5, assumably 6 emulation)

- FreeBSD (PS4's OS based on 9.0, PS5 presumed to be 12.0 based) supports PowerPC; A2I is a PowerPC core with little (x86) and big (PPC) endian support; An A2I/CELL-based PS7 could run a little endian OS carried over from an x86-based PS6 or run an entirely new one written with little or big endian byte ordering

- FreeBSD now only supports LLVM's Clang compiler

- Clang/LLVM (frontend/backend compilers, SIE made the full switch to Clang x86 frontend during PS4 dev) support PowerPC (frontend), CELL SPU (backend courtesy of Aerospace corp.) and Radeon (backend)

- AMD's open-source Clang/LLVM-based compiler with support for PowerPC offloading to Radeon under Linux can serve as a reference for an SIE Clang/LLVM-based compiler that supports PPC (A2I)/CELL (SPU) offloading to Radeon under FreeBSD (Linux and FreeBSD were cut from the same cloth)

Interestingly, some folks at Pixar seem to think an architecture that melds CPU and GPU characteristics would be the preferred option for path-tracers, and anticipate such a chip may appear by 2026:

IMO, the unfortunate thing about their future outlook is that the pros and cons of the "CPU vs. GPU" debate were rendered moot over a decade ago. I recall Kutaragi saying that he expected CELL to morph into a sort of integrated CGPU at some juncture and wanted Sony to make a business of selling them:

Too bad SCE wasn't able to startup that business and become a successful vendor of CELL-based CGPUs back then. There's no telling how customer feedback from the likes of Pixar might've influenced future designs or what of those designs might've trickled down to PS consoles -- but all isn't lost. If SIE were to bring CELL out of "cryopreservation" for a CGPU, there are at least two coders (one of which is an authority on CELL) who'd jump at the chance to help shape its feature set and topology...

- Mike Acton (CELL aficionado, former Insomniac Games Engine Director, current Director of DOTS architecture at Unity (career timestamped)) had a few things he wanted to see implemented that would bring CELL's capabilities closer to those of today's GPUs

- Michael Kopietz wants to play with a "Monster!" -- a 728 SPU monster...

The 'Monsters Inc.' slide says SPEs can replace specialized hardware. I'm not much of a techy, but I presume SIE would skip on ML hardware so the algorithms could benefit from the theoretical higher clock frequency, wide SPU parallelism and massive internal bandwidth of a "CELL2". A BCPNN study showed that CELL was extremely performant vs. a top-tier x86 CPU from its era due mainly to the chip's internal bus (EIB) bandwidth.

I think a CELL2 with a bus(es) broad enough to harbor and speedy enough to shuttle a few TB/s of data around internally to hundreds of high frequency SPEs to drastically accelerate ML (and other workloads in parallel), would be better than having dedicated ML hardware (and other dedicated hardware) constrained by GPU memory interface bandwidth limits and taking up space that could otherwise go to more TMUs or ROPs on the integrated GPU.

The re-emergence of CELL would not only give SIE an opportunity to further strengthen its collaborative ties with Epic and possibly establish a new one with Unity, but also allow Sony to pick up where it left off with ZEGO (a.k.a the BCU-100, used to accelerate CG production workflows with Houdini Batch -- the Spiderman/GT mash-up for example) and become a seller of CGPUs to Pixar and other studios. I'm sure if SIE really wanted to, it could come up with something in collaboration with all interested parties to satisfy creatives of all stripes in the game and film industries -- from Polyphony to Pixar...

The thought of a future PS console potentially running a first or third-party game engine that drives something like the above Pixar KPCNN in real-time intrigues me. My wild imagination envisions a many-core CELL2 juggling:

- geometry processing (Nanite on SPUs in UE5's case)

- physically accurate expressions of face, hair, gestures, movement, collision, destruction, fluid dynamics, etc.

- primary ray casts

- BVH traversal (video of scene shown on the page) for multi-bounce path-tracing

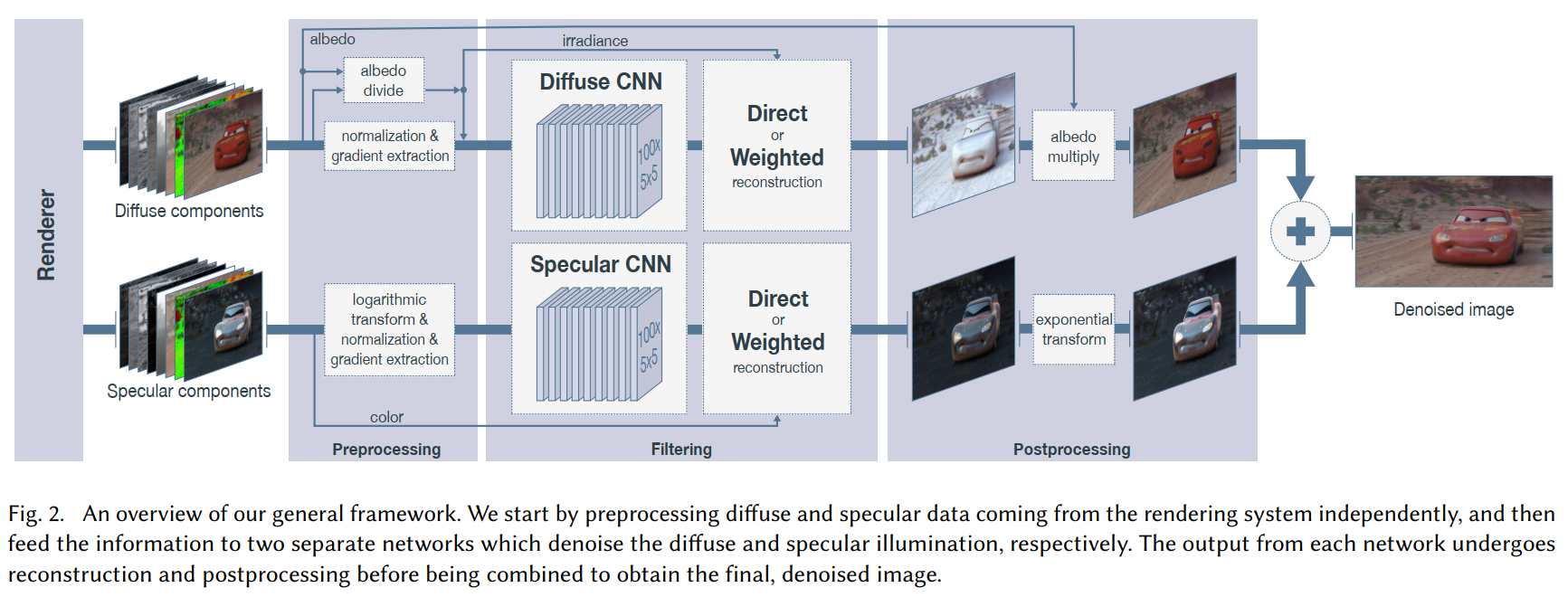

- SPU-based shading and pre/post-processing of diffuse and specular data (Lumen on SPUs in UE5's case)

- Monte Carlo random number generation for random sampling of rays

- filtering (denoising) the diffuse and specular ray data via two convolutional neural networks (CELL has a library for convolution functions, consumes neural networks of all types and is flexible in how it processes them)

while an integrated GPU from the previous gen (or bare spec next gen entry level GPU) dedicates every single flop of compute it has to the trivial tasks of merging the diffuse/specular components of frames that were rendered and denoised by SPUs; then displaying the composited frame in native resolution (the GPU would only do these two tasks and provide for backwards compatibility; in bc mode Super-Resolution could be done by SPEs accessing the framebuffer to work their magic on pixels)

Given that George Lucas and Steven Spielberg used to discuss the future of CG with Kutaragi, I think a hybrid rendering system of this sort would've been front of mind for a Kutaragi in the era of CGPUs. With SPUs acting as a second GPU compute resource for every compute intensive rendering workload, the GPU would be free to fly at absurdly high framerates. Kaz wants fully path-traced visuals (timestamped) in native 4K at 240 fps for his GT series (for VR I guess); I suspect a CGPU with 728 enhanced SPEs at 91.2 GFLOPS per SPE (3x the ALU clocked at 3.8 GHz) would give it to him. Maybe we'd even get that photorealistic army of screaming orcs Kutaragi was talking about too (timestamped).
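For anyone who wants to sanity-check the 91.2 GFLOPS figure, here is a rough back-of-the-envelope sketch. It assumes the original SPE does 8 single-precision flops per cycle (4 SIMD lanes with fused multiply-add), which matches the commonly quoted 25.6 GFLOPS at 3.2 GHz; the function names are made up for illustration and the result is a theoretical peak, not a measured number.

```python
# Peak-throughput arithmetic for the hypothetical "enhanced SPE" described above.
# Assumption: an original SPE issues 8 single-precision flops per cycle
# (4 SIMD lanes x 2 flops for a fused multiply-add).
FLOPS_PER_CYCLE_ORIGINAL_SPE = 8


def spe_gflops(clock_ghz, alu_multiplier=1):
    """Theoretical peak GFLOPS of one SPE at the given clock and ALU scaling."""
    return FLOPS_PER_CYCLE_ORIGINAL_SPE * alu_multiplier * clock_ghz


def chip_tflops(spe_count, per_spe_gflops):
    """Theoretical peak TFLOPS of a chip with spe_count identical SPEs."""
    return spe_count * per_spe_gflops / 1000.0


original = spe_gflops(3.2)                    # PS3-era SPE
enhanced = spe_gflops(3.8, alu_multiplier=3)  # "3x the ALU clocked at 3.8 GHz"
total = chip_tflops(728, enhanced)            # the 728-SPE "Monster"

print(f"original SPE: {original:.1f} GFLOPS")
print(f"enhanced SPE: {enhanced:.1f} GFLOPS")
print(f"728 SPEs:     {total:.1f} TFLOPS")
```

So under those assumptions the 728-SPE part works out to roughly 66.4 TFLOPS of single-precision peak, before counting anything the integrated GPU adds.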

Realistically though, the current regime has a good thing going with their "PC cycle incrementalism bolstered by PS3's post-mortem" approach to hardware; so they'll likely work with AMD to come up with a Larrabee-like x86/Radeon design influenced by aspects of CELL's EIB rings. So long as it's $399, can upscale to the native res of the day, run previous gen games at ~60 fps and show a marginal increase in character behavior/world simulation over what we have today, it'll be met with praise from consumers.

Personally, I'd love to see them do more than just balance price and performance on a dusty 56-year-old model born of Moore's law. I'd be elated if they balanced the two on one of CELL's models, because unlike the "modern" CPUs an AMD sourced CGPU would descend from, CELL:

- doesn't waste half its die area on level cache; more of it goes to ALU (in red) instead to help achieve multiples of performance with greater power efficiency

- was intended to outpace the performance of Moore's law and designed to break free of the programming model the law gave rise to

- was designed to effectively challenge Gelsinger's law and Hofstee's corollary (two bosom buddies of Moore's law that use cache size, hardware branch prediction etc. etc. to limit traditional processor design performance gains to 1.4x (i.e. ~40%) despite getting 2x the transistors, and drive power efficiency down by ~40% -- and they won't be going anywhere without fundamental changes in chip design)
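To put the 1.4x-for-2x figure in the last bullet into numbers, here is a minimal sketch. It assumes performance of a traditional core scales with the square root of its transistor count, which is one common way to model that figure; the bullet itself only gives the single 2x data point, so the curve and the function name are my assumption.

```python
import math


def modelled_speedup(transistor_ratio):
    """Speedup of a traditional core design under an ASSUMED square-root model:
    performance grows with the square root of the transistor budget."""
    return math.sqrt(transistor_ratio)


# Doubling the transistors gives about 1.41x, i.e. the ~40% quoted above.
# Under the same assumption it takes 4x the transistors to double performance.
for ratio in (2, 4, 8):
    print(f"{ratio}x transistors -> {modelled_speedup(ratio):.2f}x performance")
```

If that model holds, each extra doubling of the transistor budget buys the same ~40%, which is the diminishing return the post argues CELL was designed to sidestep.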

All things being equal (node, transistor count, size, modern instruction sets, iGPU) it seems to me that a CELL/Radeon CGPU would be way more performant and much more power efficient than a Larrabee-like x86/Radeon CGPU for what I presume would be similar costs. That means a lot more bang for my buck and I'm all for that kind of "balance".

Hopefully the merits of CELL won't continue to go ignored.

Impressive amount of information! Didn't know IBM was still improving on PowerPC and its ISA, thought it was dead. Like you I too believe Sony is biding their time with the x86

SSfox

Member

God of War 2 was unbelievable on PlayStation 2. I remember I finished the game, restarted it right after, finished it, restarted again, around 15 times without getting bored one bit. What a masterpiece. I've only done this for very, very few exceptional games in my life.

I remember one day I was at a friend's house playing GoW2, and his older brother passed by and was instantly shocked. He was about to go to work, but he stayed a bit because he wanted to watch more of that masterpiece of a game.


MS bought Bethesda, so what? It'll be years before an exclusive game from them is released, Crash 4 is happening right now.

Try months lol.

assurdum

Banned

What the hell kind of absurdity is this channel saying?

Neo Blaster

Member

Try months lol.

demigod

Member

Try months lol.

What exclusive game are they getting this year?

Ass of Can Whooping

Member

What exclusive game are they getting this year?

Deathloop and Ghostwire

Oh wait sorry lol

DeepEnigma

Gold Member

Deathloop and Ghostwire

Oh wait sorry lol

Damn, Microsoft buys these companies and Sony's getting exclusive games from them first.

ethomaz

Banned

How Xbox fans are once again 'waiting for E3'...oops, for Bethesda exclusives.

Uncle Phil and the forever waiting game... every year, every interview.

ethomaz

Banned

Try months lol.

From their release schedule only Bethesda PS exclusives are being released this year.

But hey there is always some top secret to be revealed.


Rea

Member


sncvsrtoip

Member

1.2x faster in 120fps mode than rtx2070oc, 2x faster loading times than pc with nvme ssd


Imtjnotu

Member

1.2x faster in 120fps mode than rtx2070oc, 2x faster loading times than pc with nvme ssd

Wait did ign give him the boot?

sncvsrtoip

Member

Wait did ign give him the boot?

Nah, he said he will still be creating content, also for his own channel.


Barakov

Gold Member

1.2x faster in 120fps mode than rtx2070oc, 2x faster loading times than pc with nvme ssd

The PS5's SSD continues to impress.

Bernd Lauert

Banned

1.2x faster in 120fps mode than rtx2070oc, 2x faster loading times than pc with nvme ssd

Why is he running the game at 1440p on the PC and then comparing it to the 1080p mode on PS5?

The PS5's SSD continues to impress.

The load times on the PS4 Pro HDD were already really impressive. There is not that much to win here. Pure SSD speed and one memory pool meets a PC with a slower NVMe drive + DX11 (bad multithreading for GPU related work) + split memory pool.

Edit:

And does he mention at some point what CPU he uses? Zen+ vs Zen 2 would be quite a bit unfair.

I hate it when someone compares a console with a PC and doesn't provide the specs of the PC (at least CPU, GPU and memory specs).


MasterCornholio

Member

1.2x faster in 120fps mode than rtx2070oc, 2x faster loading times than pc with nvme ssd

Seems like it's very nice on PS5. Pretty interesting the differences between the load times.

MasterCornholio

Member

Why is he running the game at 1440p on the PC and then comparing it to the 1080p mode on PS5?

Did he use a PC that's a lot more powerful than the PS5?

Ass of Can Whooping

Member

Fake?

Mixer lol

LiquidRex

Member

SlimySnake

Flashless at the Golden Globes

1.2x faster in 120fps mode than rtx2070oc, 2x faster loading times than pc with nvme ssd

isn't that a 10 tflops gpu?

1.2x = 12 tflops. ps5 12 tflops confirmed.

demigod

Member

Why is he running the game at 1440p on the PC and then comparing it to the 1080p mode on PS5?

It's hilarious that you didn't watch the video and just went off that comment from a fanboy in his video.

DeadOblivion78

Banned

This guy has to be living a fantasy in his head.

That account has probably never looked at Xbox sales in Japan.

Barakov

Gold Member

Great Hair

Banned

This guy has to be living a fantasy in his head.

Nippon won't allow any hostile takeover!
