To be precise, every downclock has a negative impact, even if it leads to just a 1% performance loss.

You can obviously argue that this "worst case" of only having an 18% TF advantage on the Xbox Series X is the usual case, but it's simply not guaranteed that the PS5 GPU is running at 2.23GHz all the time.

It doesn't have to be. But if it's 99.9% of the time, that 0.1% becomes negligible.
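
For anyone wondering where that 18% figure comes from, here's a rough sketch of the usual peak-TF arithmetic. The PS5 numbers (36 CUs, 2.23GHz cap) are the ones used in this thread; the XSX configuration (52 CUs at a fixed 1.825GHz) and the 64 lanes x 2 FLOPs per clock per CU are assumptions taken from the publicly quoted specs, and `peak_tflops` / `xsx_advantage_pct` are just names made up for illustration.

```python
# Peak FP32 throughput, assuming an RDNA 2-style GPU:
# CUs * 64 lanes per CU * 2 FLOPs per lane per clock (fused multiply-add) * clock.
LANES_PER_CU = 64                 # assumption: publicly quoted CU width
FLOPS_PER_LANE_PER_CLOCK = 2      # assumption: one FMA counted as 2 FLOPs


def peak_tflops(compute_units: int, clock_ghz: float) -> float:
    """Theoretical peak in TFLOPs; says nothing about real-game performance."""
    gflops = compute_units * LANES_PER_CU * FLOPS_PER_LANE_PER_CLOCK * clock_ghz
    return gflops / 1000.0


ps5_peak = peak_tflops(36, 2.23)    # 36 CUs at the 2.23GHz cap
xsx_peak = peak_tflops(52, 1.825)   # assumed: 52 CUs at a fixed 1.825GHz


def xsx_advantage_pct(ps5_clock_ghz: float) -> float:
    """XSX peak-TF advantage (in %) for a given PS5 GPU clock."""
    return (xsx_peak / peak_tflops(36, ps5_clock_ghz) - 1.0) * 100.0


print(f"PS5 peak: {ps5_peak:.2f} TF, XSX peak: {xsx_peak:.2f} TF")
print(f"XSX advantage at 2.23GHz: {xsx_advantage_pct(2.23):.1f}%")
```

Plugging a lower PS5 clock into `xsx_advantage_pct` shows how the paper gap widens with a downclock, but it's only paper TF either way.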

Since we don't have clock numbers, we can't tell whether current games already dip a bit below the 2.23 GHz mark here and there, and if not, how many next-gen games will push the clocks down?

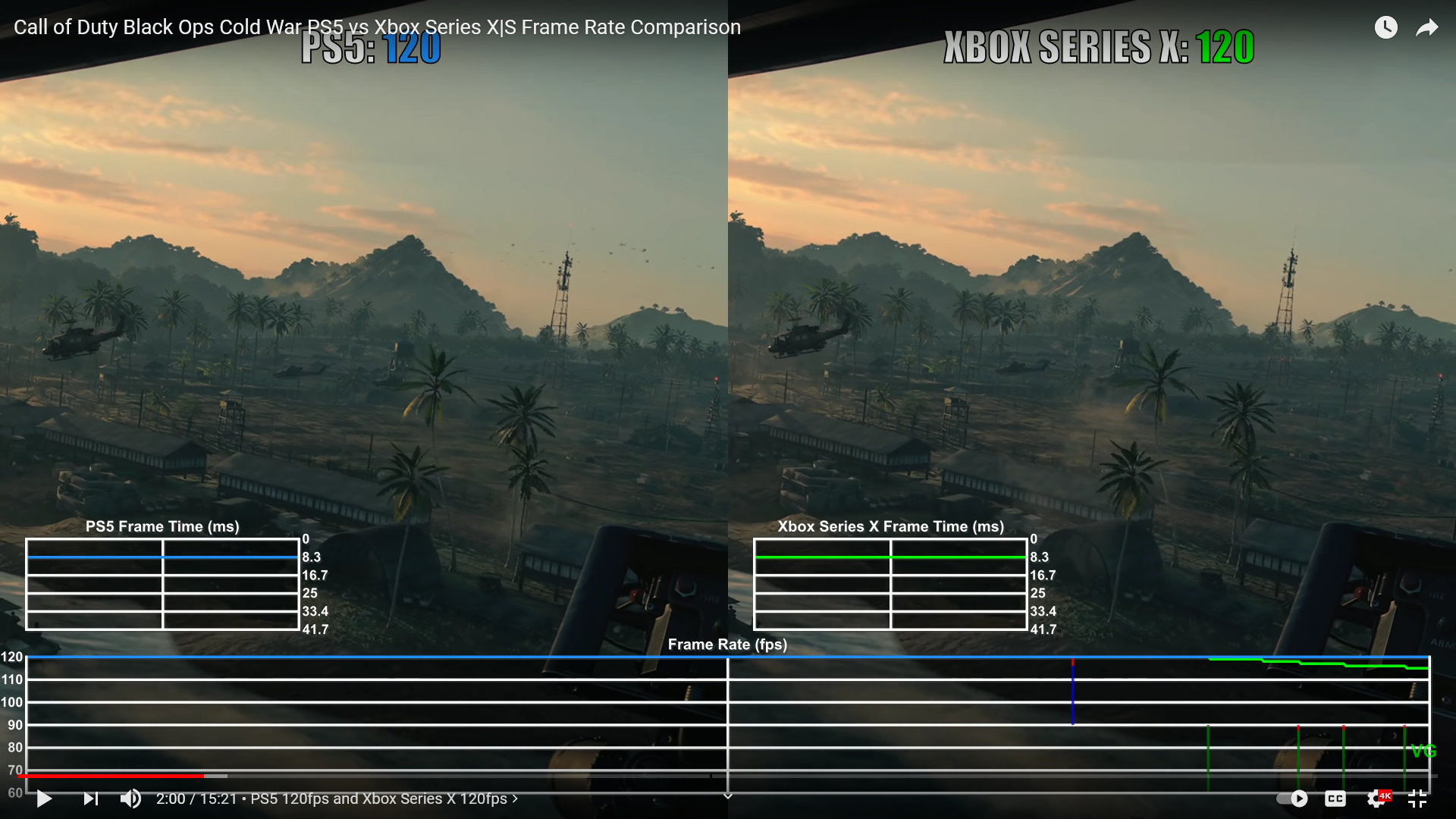

We can indeed look at the performance of current games on PS5 versus XSX versus PC and gain some confidence that if any downclocking is occurring, it simply isn't meaningful to overall performance. In almost every game tested, the PS5 GPU outperforms a PC desktop GPU with similar TF numbers. Likewise, in most non-BC titles those 10TF on PS5 are delivering higher performance than the 12TF on XSX... so again, any downclocking on the PS5 GPU doesn't appear to be meaningful to real-world performance.

Mark Cerny stated that they expect the GPU to spend most of its time at or "close" to that frequency, and that when downclocking occurs they expect it to be pretty "minor".

Another statement was that reducing power by 10% only takes a couple of percent lower clock speed.
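
As a sanity check on that power claim, here's a back-of-the-envelope sketch. It assumes the textbook approximation that dynamic power scales roughly with the cube of frequency near the top of the voltage/frequency curve (P ~ f * V^2, with V rising about linearly with f). That exponent is an assumption for illustration, not a figure from Sony, and `clock_scale_for_power_scale` is a made-up helper name.

```python
def clock_scale_for_power_scale(power_scale: float, exponent: float = 3.0) -> float:
    """Clock multiplier needed to hit a given power multiplier,
    assuming power ~ clock ** exponent (exponent=3 is a rough rule of thumb)."""
    return power_scale ** (1.0 / exponent)


MAX_CLOCK_GHZ = 2.23  # PS5 GPU clock cap discussed in this thread

scale = clock_scale_for_power_scale(0.90)      # target: 10% less power
clock_drop_pct = (1.0 - scale) * 100.0
reduced_clock_ghz = MAX_CLOCK_GHZ * scale

print(f"Clock drop for 10% less power: {clock_drop_pct:.1f}%")
print(f"Resulting clock: {reduced_clock_ghz:.2f} GHz")
```

Under that assumption a 10% power cut costs somewhere around 3 to 4% of clock speed, which is at least in the same ballpark as "a couple of percent". The real curve depends on the silicon, so treat this as plausibility only.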

However all of that is of course not very precise and is based on "expectations", even if they are coming from Sony.

It's not like companies are right all the time or don't inject a bit of (too) optimistic marketing.

Of course, but when the performance in real games consistently shows the GPU outperforming equivalent-TF desktop GPUs and even the 12TF XSX GPU, there's strong reason to simply believe that Mark Cerny wasn't lying or spouting optimistic marketing (and historical precedent should tell you that Mark Cerny rarely does, for that matter).

There's also the notion that the Road to PS5 talk was originally intended for devs. So there's very little reason to mislead devs with a statement like that. They are the ones who will be working with the hardware at the end of the day and will very quickly and very easily be able to call stuff like that out as BS if it was.

Normally, technical experts like Andrew Goossen on the Xbox side and Mark Cerny on the PS side speak plainly, concisely and factually. They aren't company PR people, so they aren't at all given to spouting marketing spiel. So it seems odd to want to dismiss their comments on the basis of "it could be marketing". These aren't the guys that do that.

If it was Spencer or Jim Ryan, then sure.

Now, based on the claims and how it should fare, I wouldn't expect major downclocking to occur, but next-gen games, which also stress the CPU, could pass the threshold and consistently lower the clocks.

The CPU doesn't impact PS5's variable clocks. The clock frequency isn't varied on the basis of power; i.e. it's not measuring consumed power and adjusting frequency to keep within a threshold. It's deterministically adjusting frequency on the basis of GPU workload and GPU hardware occupancy. So whatever the CPU workload it won't impact GPU clocks.

It kinda seems like you might be conflating SmartShift with the GPU's variable clocks. SmartShift will raise the power ceiling for the CPU if the GPU is idle, but I don't think it works the same the other way around, because the GPU clocks are capped at the top end based not on overall APU power but on GPU stability limitations (as attested to by Cerny himself).

So I doubt the CPU running flat out would reduce the GPU power ceiling, as a) I would argue that would be poor design, and b) it simply doesn't need to, because the cooling system capacity will be sized for peak CPU and GPU power consumption (which with the variable GPU clock regime is still way below that of a fixed clock GPU).

On avg. the PS5 might run at 2.15 GHz in one game, 2.07 GHz in another.

That's not how it works. The GPU clock frequency adjustment is performed on the basis of GPU workload. So it will change rapidly and many times within the time span of rendering a single frame.

So for a 30fps game, you could see the clocks adjust up and down multiple times within that 33.3ms frame time.
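
To put rough numbers on that, here's a small sketch of what a per-frame clock trace might look like and why a brief dip barely moves the average. The segment durations and the 2.10GHz dip are entirely made up for illustration (nobody outside Sony and devs has real clock traces), and both function names are hypothetical.

```python
def frame_time_ms(fps: float) -> float:
    """Time budget for one frame in milliseconds."""
    return 1000.0 / fps


def time_weighted_clock_ghz(segments):
    """Average clock over a frame.

    segments: list of (duration_ms, clock_ghz) pairs covering the frame.
    """
    total_ms = sum(duration for duration, _ in segments)
    return sum(duration * clock for duration, clock in segments) / total_ms


frame_ms = frame_time_ms(30)  # about 33.3ms at 30fps

# Hypothetical trace: at the 2.23GHz cap except for a 1ms dip to 2.10GHz.
segments = [
    (20.0, 2.23),
    (1.0, 2.10),               # made-up brief dip during a heavy pass
    (frame_ms - 21.0, 2.23),
]

avg_clock = time_weighted_clock_ghz(segments)
print(f"Frame time: {frame_ms:.1f} ms, average clock: {avg_clock:.3f} GHz")
```

With those made-up numbers the frame average stays within a few MHz of the cap, which is the point: a per-game "average clock" hides the fact that the adjustment happens at a much finer time scale.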

So when Cerny says the GPU will be at max clocks most of the time, it's pretty clear he knows what he's talking about.

They never said a missing light source! Some lie this is, Series X simply had poorer raytracing on COD than PS5, and the missing alpha effects on guns were there for everyone to see.

The 36 CUs are not a hypothesis, they are real, so I don't get your point there. And the variable clocks dont with a 1.8GHz fixed clock is even more confusing, what do you mean here?

I guess that English probably isn't your first language, so it's clear you misunderstood the statement I made.

What I'm saying is that the PS5's variable clock regime will perform better than a PS5 that was set with fixed clocks.

i.e.

Let's say that Cerny went the same route as Xbox with PS5 (i.e. a hypothetical PS5), and spec'd their machine as 36CUs with a fixed 1.8GHz clock speed. This would obviously perform significantly worse than the actual 36CU PS5 GPU with its variable clock of up to 2.23GHz.

Yes, variable clocks also benefit the front end, ROPs and cache bandwidth, but regardless, you cannot point to any one of these and say conclusively that "this" or "that" hardware feature running at higher clocks is the reason the PS5 provides more stable framerates across a range of different titles.

Every game is different, will have a different performance profile, and will be bottlenecked in different parts of the system at different points throughout the game. It's way more complex than just saying, higher PS5 clocks ==> higher average framerates in games with dynamic resolution.