52 CUs lead to a larger chip and fixed clock rates come with the downside of unused clock potential.

That is one reason why every CPU and GPU nowadays clocks dynamically depending on the circumstances. The PS5 uses similar principles, even though its parameters are different and it follows a reference model for deterministic behaviour.

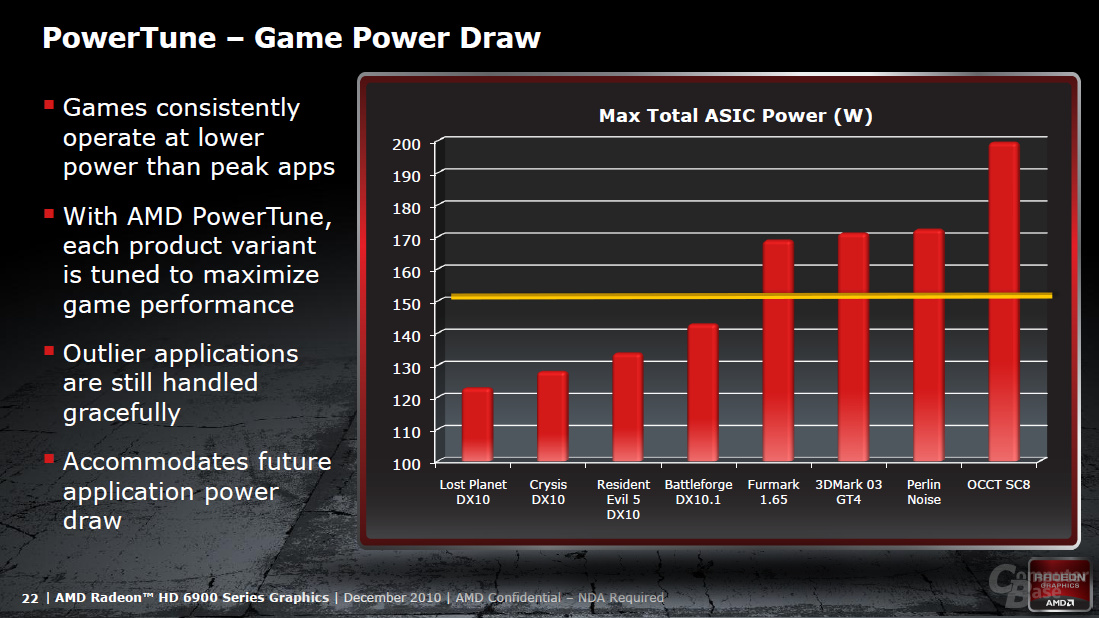

Just as an example, when the GPU is clocking at 2.23GHz it could consume 170W in one game and 200W in another.

That's because, depending on the game, more or fewer transistors will switch.

Logic which is not in active use is also aggressively clock gated, saving energy.

Now some dev studio might develop a game which behaves similarly to a power virus and would consume 250W at 2.23GHz, so they would need to reduce the frequency to 1.9GHz to be at 200W again, if that's the level they want their power supply and cooling solution to be at.

If one is using a fixed clock target, one must plan for the worst-case scenario, with most transistors switching, and design the cooling solution around that.

That strategy is of course tuned purely around the worst case. Most games don't behave that way, so the performance potential of many applications suffers, leading to 1.9GHz for all games.

Instead it obviously makes sense to dynamically downclock the machine when a certain threshold is reached.

Now most games can run at 2.23GHz and benefit from better performance, while the worst-case scenarios are also covered: there the machine will automatically downclock to 1.9GHz to keep the 200W target.
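To make the fixed-versus-dynamic comparison concrete, here is a minimal sketch in Python. The power model (power scaling as frequency raised to some exponent) and the exponent value are assumptions, picked only so that the illustrative numbers line up (250W at 2.23GHz landing near 200W at 1.9GHz). None of this is measured PS5 data, and the function and variable names are made up for the example.

```python
# Illustrative sketch only: the model and exponent are assumptions, not PS5 data.
F_MAX_GHZ = 2.23      # top GPU clock from the example
POWER_CAP_W = 200.0   # example power/cooling budget
EXPONENT = 1.4        # hypothetical, fitted so 250 W -> ~1.9 GHz at the cap


def capped_clock(power_at_fmax_w, exponent=EXPONENT):
    """Highest clock (GHz) that keeps a workload at or under the power cap."""
    if power_at_fmax_w <= POWER_CAP_W:
        return F_MAX_GHZ  # under budget: no downclock needed
    # Invert P(f) = P(f_max) * (f / f_max) ** exponent for P(f) = cap.
    return F_MAX_GHZ * (POWER_CAP_W / power_at_fmax_w) ** (1.0 / exponent)


# Power each workload would draw at 2.23 GHz (numbers from the example above).
games = {"game A": 170.0, "game B": 200.0, "power virus": 250.0}

dynamic_clocks = {name: capped_clock(watts) for name, watts in games.items()}
# A fixed clock has to be safe for the worst case, so every game gets the minimum.
fixed_clock = min(dynamic_clocks.values())

for name, clock in dynamic_clocks.items():
    print(f"{name}: dynamic {clock:.2f} GHz, fixed {fixed_clock:.2f} GHz")
```

With these made-up inputs, only the power-virus case drops to about 1.9GHz under the dynamic scheme, while the fixed scheme holds every game there.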

This is just an extreme illustration of the idea. As Mark Cerny said, and as is also evident from the power/frequency curves in GPU measurements, if you run at the top frequency levels of a design, and the PS5 sits at a high spot, efficiency can be hugely improved by lowering the frequency by just a couple of percent.
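A rough way to see why a small clock reduction buys a lot near the top of the curve: dynamic power is commonly approximated as proportional to C·V²·f, and if voltage has to rise roughly in step with frequency in that region (an assumption for this sketch, not a PS5 measurement), power grows with about the cube of the clock. The snippet tabulates that textbook approximation; the `relative_power` helper and its exponent are illustrative.

```python
def relative_power(freq_scale, exponent=3.0):
    """Power relative to full clock, assuming P ~ f**exponent (cubic by default)."""
    return freq_scale ** exponent


for drop_pct in (1, 2, 3, 5):
    scale = 1.0 - drop_pct / 100.0
    saving_pct = (1.0 - relative_power(scale)) * 100.0
    print(f"{drop_pct}% lower clock -> about {saving_pct:.1f}% less power")
```

Under this assumption a 3% lower clock comes out at just under 9% less power, which fits the shape of the argument even though real silicon will not follow a clean cubic.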

And that point about efficiency comes from Mark Cerny himself, if he counts as a trustworthy person for the people here. He further stated that they expect the processing units to stay at or close to max frequency most of the time, meaning that yes, the clock goes down and may even go down consistently, but they expect the downclock to be pretty minor.

Which ties into my initial statement that 18% is the worst-case (i.e. smallest) advantage for the XSX, with the potential that it may be larger.

Later I mentioned 20, 22 or 25% just as examples, and also to state that even with larger downclocks it's hard to tell in final games without precise data.
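The 18% figure and the larger examples can be checked with the usual peak-FP32 formula (CUs × 64 shaders × 2 FLOPs per clock × clock). The sketch below assumes public spec figures that are not spelled out in this post: 36 CUs for the PS5 and 1.825GHz for the XSX's 52 CUs. The function names are just for illustration.

```python
SHADERS_PER_CU = 64   # shaders per CU on these GPUs
FLOPS_PER_CLOCK = 2   # one fused multiply-add per shader per clock

XSX_CUS, XSX_GHZ = 52, 1.825   # public spec figures (assumed here)
PS5_CUS, PS5_MAX_GHZ = 36, 2.23


def tflops(cus, ghz):
    """Peak FP32 throughput in TFLOPS."""
    return cus * SHADERS_PER_CU * FLOPS_PER_CLOCK * ghz / 1000.0


def xsx_advantage(ps5_ghz):
    """XSX peak-compute lead over the PS5 as a fraction (0.18 means 18%)."""
    return tflops(XSX_CUS, XSX_GHZ) / tflops(PS5_CUS, ps5_ghz) - 1.0


def ps5_clock_for(advantage):
    """PS5 clock (GHz) at which the XSX lead equals the given fraction."""
    return tflops(XSX_CUS, XSX_GHZ) / (1.0 + advantage) / tflops(PS5_CUS, 1.0)


print(f"at {PS5_MAX_GHZ} GHz: {xsx_advantage(PS5_MAX_GHZ):.1%}")
for adv in (0.20, 0.22, 0.25):
    print(f"{adv:.0%} lead corresponds to about {ps5_clock_for(adv):.2f} GHz")
```

At 2.23GHz this gives roughly 18.2%, and a 25% lead would correspond to a PS5 clock of about 2.11GHz. These are peak-throughput ratios only and say nothing about real game performance.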

Now how it will develop over the years is something I definitely can't foresee, and I take Mark Cerny's claim as an orientation point, as many others do.

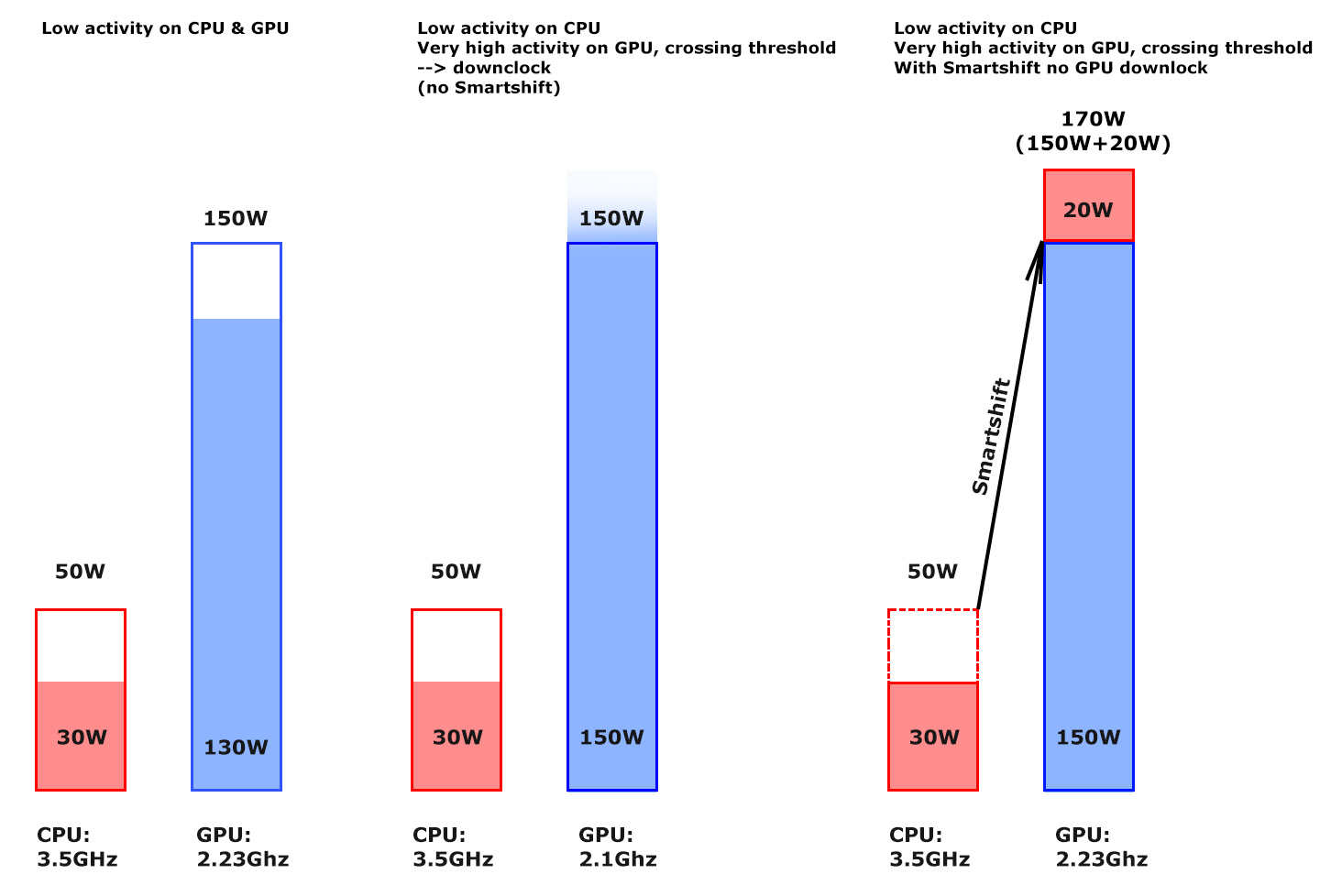

According to the diagram and Mark Cerny's presentation, SmartShift appears to work only in one direction, giving power to the GPU if extra power is available.

This means that in practice the total GPU budget is tied to the CPU utilization.

It will never go below a certain threshold, let's say 150W, but when both units are stressed the chances of a necessary GPU downclock could be much higher, because the GPU can't use free power from the CPU side anymore.

Which is why I made the point that with next generation games, which stress both the GPU and the CPU more, we may see the PS5 GPU clocking lower than 2.23GHz.
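The one-way behaviour described here can be sketched as a simple budget rule. This is a toy model of that reading of the diagram, not how SmartShift is actually implemented; the 150W floor is the "let's say" figure from above and the 50W CPU budget is purely hypothetical.

```python
GPU_FLOOR_W = 150.0   # guaranteed GPU budget ("let's say 150W")
CPU_BUDGET_W = 50.0   # hypothetical CPU budget, not an official figure


def gpu_budget(cpu_draw_w):
    """GPU power budget when unused CPU power can only flow to the GPU."""
    # Clamp at zero: power moves in one direction only, CPU to GPU.
    spare = max(0.0, CPU_BUDGET_W - cpu_draw_w)
    return GPU_FLOOR_W + spare


for cpu_draw in (20.0, 35.0, 50.0):
    print(f"CPU draws {cpu_draw:.0f} W -> GPU may use up to {gpu_budget(cpu_draw):.0f} W")
```

As the CPU draw approaches its own budget, the GPU falls back to its floor, which is where a downclock becomes more likely for a GPU-heavy load.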

Here is something which I don't really get.

I just said that if the threshold is exceeded the GPU has to downclock, and the avg. clock rates for that time period will be lower.

You say "not at all" but at the same time you are basically saying the same thing, that the system will need to downclock the GPU in cases where the threshold is exceeded, just adding the stance that it should happen very rarely.

I have the feeling we basically agree on many points, but my statements are being taken further than I meant them.