3liteDragon

Member

PS5 is developers' favorite console, according to GDC poll

State of the Game Industry 2021 reveals a lot, from preferred console to VR decline

I think we're all aware how bad traditional rasterization can look. Lots of the time it looks great, though. Personally, I'm a fan of bad lighting 'cause it reflects my soul.

Nothing @VFXVeteran said was wrong, though. I can understand why he's frustrated. A lot of people are circling the drain and promoting 'subjectivity' as a reason why rasterization can compete with proper ray-tracing solutions. Once people get properly acclimated to RT, I guarantee you they're gonna balk at these flawed notions. The tricks just become woefully apparent, and everything looks wrong.

Rasterization can produce accurate outcomes, but there is a reason most FX and animation studios for film are using RT solutions. You can look at Pixar films pre-2013 vs. post-2013 to see the difference. Also Disney with Big Hero 6. For game development, most of it is faked, and devs would most likely want to use the extra compute elsewhere, at least until RT processes mature. I think Epic's software solution or something like that would be the way devs would go. I've seen experiments of baking the RT info into the shader. Basically, you would cast a large number of rays and store that info in the shader, then at runtime cast a minimal number of rays to recall the mass of RT info baked into the shader. I'm probably butchering this; I gotta find that video.

Spot on.
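The "bake many rays offline, cast few at runtime" idea is broadly how precomputed lighting (lightmaps, irradiance probes) works. Here's a minimal Python sketch of that general principle, assuming a made-up scene (one sphere floating over a ground plane under a uniform sky) and hypothetical function names: the bake casts thousands of cosine-weighted rays per probe to measure sky visibility, and the runtime casts none at all, it just interpolates the stored values (a real hybrid would add a few runtime rays on top). It's not the specific technique from the video mentioned, which isn't identified here.

```python
import bisect
import math
import random

# Hypothetical scene: a ground plane at y = 0 under a uniform white sky,
# with one spherical occluder floating above the origin.
SPHERE_CENTER = (0.0, 1.0, 0.0)
SPHERE_RADIUS = 0.5


def ray_hits_sphere(origin, direction):
    """True if the ray origin + t * direction (t > 0) hits the sphere.
    `direction` must be unit length; `origin` is assumed outside the sphere."""
    ox = origin[0] - SPHERE_CENTER[0]
    oy = origin[1] - SPHERE_CENTER[1]
    oz = origin[2] - SPHERE_CENTER[2]
    dx, dy, dz = direction
    b = ox * dx + oy * dy + oz * dz
    c = ox * ox + oy * oy + oz * oz - SPHERE_RADIUS ** 2
    disc = b * b - c
    if disc < 0.0:
        return False
    # Far intersection distance; positive means the sphere is in front of the ray.
    return -b + math.sqrt(disc) > 0.0


def cosine_sample_hemisphere(rng):
    """Random unit direction around the +y normal, cosine-weighted."""
    u1, u2 = rng.random(), rng.random()
    r = math.sqrt(u1)
    phi = 2.0 * math.pi * u2
    return (r * math.cos(phi), math.sqrt(max(0.0, 1.0 - u1)), r * math.sin(phi))


def bake_probes(xs, rays_per_probe, seed=0):
    """Offline step: many rays per probe, store the fraction that reach the sky."""
    rng = random.Random(seed)
    baked = []
    for x in xs:
        origin = (x, 0.0, 0.0)
        open_rays = sum(
            1 for _ in range(rays_per_probe)
            if not ray_hits_sphere(origin, cosine_sample_hemisphere(rng))
        )
        baked.append(open_rays / rays_per_probe)
    return baked


def lookup(xs, baked, x):
    """Runtime step: no rays at all, just linear interpolation between probes."""
    if x <= xs[0]:
        return baked[0]
    if x >= xs[-1]:
        return baked[-1]
    i = bisect.bisect_right(xs, x) - 1
    t = (x - xs[i]) / (xs[i + 1] - xs[i])
    return baked[i] * (1.0 - t) + baked[i + 1] * t


if __name__ == "__main__":
    probe_xs = [-2.0, -1.0, 0.0, 1.0, 2.0]
    baked = bake_probes(probe_xs, rays_per_probe=4000)  # expensive, done offline
    print("baked sky visibility:", [round(v, 3) for v in baked])
    print("runtime lookup at x=0.5:", round(lookup(probe_xs, baked, 0.5), 3))
```

The probe right under the sphere should come out around 0.75 visibility (the sphere blocks a 30-degree cone straight up), while the outer probes are much closer to fully open.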

Well, according to @VFXVeteran, the BRDF term and the lighting terms are independent. So using PBR with non-RT lighting models can still produce materials that look just as accurate and realistic. So RT doesn't seem relevant to this.
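For reference, this split maps onto the standard rendering equation for reflection at a surface point $\mathbf{x}$ with normal $\mathbf{n}$. It's the textbook form, not a claim about how any particular engine evaluates it:

```latex
L_o(\mathbf{x}, \omega_o) = L_e(\mathbf{x}, \omega_o)
  + \int_{\Omega} f_r(\mathbf{x}, \omega_i, \omega_o)\,
    L_i(\mathbf{x}, \omega_i)\,(\omega_i \cdot \mathbf{n})\, d\omega_i
```

The BRDF $f_r$ (the material) and the incoming radiance $L_i$ (the lighting) appear as separate factors, so a PBR material model can be paired with either RT or non-RT ways of estimating $L_i$. What changes is how accurately $L_i$, and therefore the integral, gets computed.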

Agreed, many people see the fancy words "ray tracing" and then start shitting on rasterization. But they don't realize that ray-traced games are still a hybrid of rasterization and ray tracing. Full ray tracing is very computationally expensive for gaming.

Absolutely. Agreed.

It absolutely does! And again it just goes to expose the silly reductive reasoning of some who only seem to consider things within this artificial and very binary dichotomy of RT vs non-RT games.

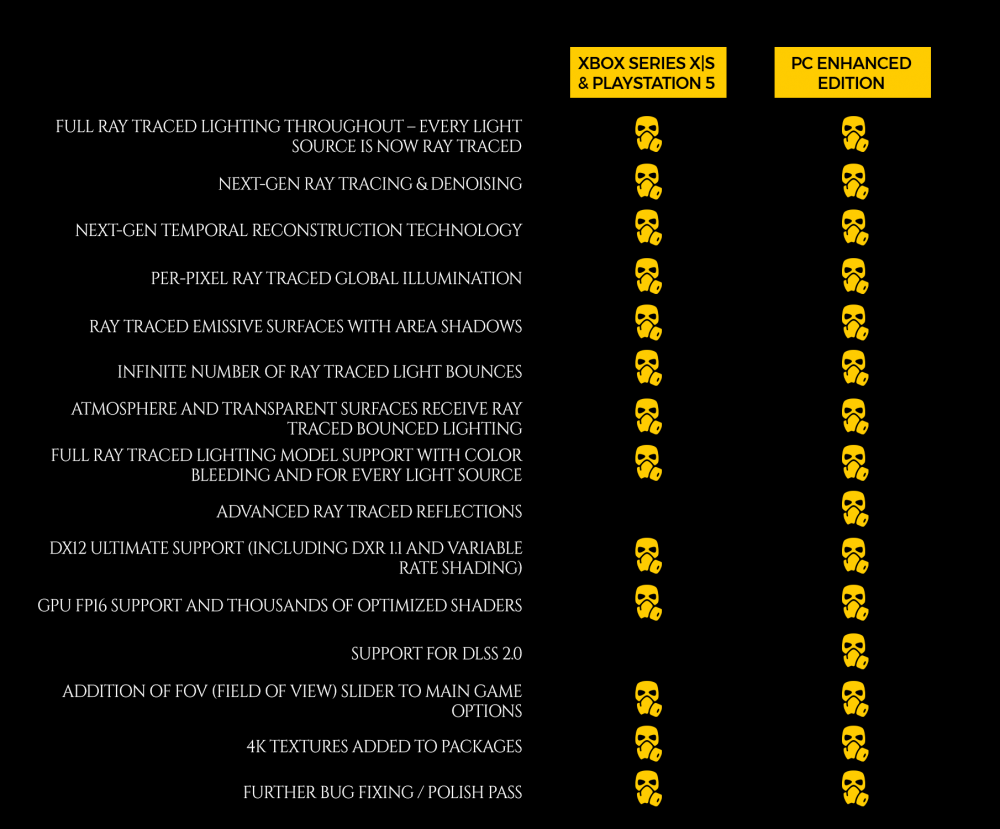

This recent Metro news is a great example of how RT can be improved on a graduated scale based on one of many factors, e.g., the number of ray bounces considered in the simulation.

RT quality can also be adjusted using many other variables, for example the ray sampling rate, i.e., the number of rays per pixel.
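A toy Python sketch of those two knobs, under made-up assumptions (a "furnace" where every surface reflects half the light, and an idealized noisy estimator standing in for real ray casts): capping the bounce count truncates a geometric series, so some light goes missing, while fewer rays per pixel keeps the average correct but noisier, with noise shrinking roughly as 1/sqrt(rays). It doesn't model how Metro or any specific engine works.

```python
import math
import random


def bounce_truncated_radiance(albedo, max_bounces):
    """Toy 'white furnace': every bounce re-reflects a fraction `albedo` of the
    light. Summing bounces 0..max_bounces gives a truncated geometric series;
    the infinite-bounce answer is 1 / (1 - albedo)."""
    return sum(albedo ** k for k in range(max_bounces + 1))


def noisy_pixel_estimate(true_value, rays_per_pixel, rng):
    """Average `rays_per_pixel` random samples whose mean is `true_value`.
    Each sample is 2 * true_value * U with U uniform on [0, 1), so the
    estimate is unbiased but noisy."""
    total = sum(2.0 * true_value * rng.random() for _ in range(rays_per_pixel))
    return total / rays_per_pixel


def rms_error(true_value, rays_per_pixel, trials=2000, seed=1):
    """Measure the RMS noise of the estimator over many trial pixels."""
    rng = random.Random(seed)
    squared = 0.0
    for _ in range(trials):
        err = noisy_pixel_estimate(true_value, rays_per_pixel, rng) - true_value
        squared += err * err
    return math.sqrt(squared / trials)


if __name__ == "__main__":
    for bounces in (0, 1, 2, 4, 8):
        print(f"{bounces} bounces:", round(bounce_truncated_radiance(0.5, bounces), 4))
    for spp in (1, 4, 16, 64):
        print(f"{spp:>2} rays/pixel: RMS noise", round(rms_error(1.0, spp), 3))
```

Quadrupling the rays per pixel only halves the noise, which is part of why real-time RT leans so heavily on denoising.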

We're in the infancy of RT for real-time applications, so people disingenuously pretending that RT as we have it now is the best it'll ever be are just as bad and obtuse as those who tried to pretend that traditional non-RT lighting effects haven't improved at all in the past 20 years (and aren't still continuously improving).


I think I read somewhere that those Pixar movies can take almost 25 hours to render one frame. We are not going to get there any time soon. Maybe at 100 TFLOPS. Maybe not even then.
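Taking the ~25-hours-per-frame figure at face value, here's a back-of-the-envelope Python check of how far that is from a real-time frame budget. It ignores that film frames are rendered on large CPU farms at higher resolutions and sample counts, so it only illustrates the order of magnitude, not a literal hardware target.

```python
OFFLINE_HOURS_PER_FRAME = 25  # figure quoted in the post, not verified here


def speedup_needed(offline_hours, target_fps):
    """How many times faster a single frame must render to hit target_fps."""
    offline_seconds = offline_hours * 3600
    # The frame budget is 1 / target_fps seconds, so the ratio is seconds * fps.
    return offline_seconds * target_fps


if __name__ == "__main__":
    for fps in (30, 60):
        budget_ms = 1000 / fps
        print(f"{fps} fps: {budget_ms:.1f} ms budget, "
              f"{speedup_needed(OFFLINE_HOURS_PER_FRAME, fps):,}x faster needed")
```

At 60 fps that's a gap of about 5.4 million times, which is why games fake or approximate so much of what film renderers compute.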

The ray tracing performance hit on RTX cards is already pretty bad, and it's even worse on AMD cards. The PS5 and XSX are basically performing on par with the 2060 in Watch Dogs. That's a 6 TFLOPS GPU. No thanks.

I am OK with some devs like the Metro guys using RT for everything. It will be a good case study to compare with games using standard rasterization, but there is so, so much more we should be doing with those TFLOPS than just trying to get the most accurate picture. Let's get to photorealism first, then let's add some actual physics in games for destruction and other levels of interactivity, then lots of NPCs and the fancy visual effects we see in movies, and then we can worry about doing all of that accurately.

This is UE4 running in real time. No ray tracing. Let's get to this first.

We seem to be talking about two different things, and you also seem to have misunderstood Alex. What Alex meant when he said "Metro has the best lighting in a video game" is the accuracy of the lighting. It's objectively the best. What you're talking about is subjective, and I even agree with you that there are many better-looking games out there compared to Metro. But when we talk about the accuracy of lighting, Metro is king. No need to shit on Alex for saying an objective truth.

What? RT-only Metro is coming out on PS5 too. Literally the only thing missing is RT reflections. Everything else is ray traced, and their next full game is already confirmed to be RT-only.

This isn't about Lumen vs. RT GI. Dynamic GI is dynamic GI; who cares how you get there? The end result is what matters, and right now the 'gamey' games I showed look better than the Metro RT GI lighting Alex is jerking off over. I am not just telling everyone they look better; I literally posted screenshots to invite people to see how they look better. Anyone with a pair of eyes would look at those screenshots and know that they look better. It has fuck all to do with ray tracing or Lumen. Just your eyes.

A year ago, back when we thought Hellblade 2 was real-time, I thought these were the best graphics I'd ever seen. There is no RT here. None whatsoever. It just looks amazing to my eyes, not because a PS5 dev made it or because it's using Lumen, which it's not. It looks amazing because it looks photorealistic, and the cinematography is approaching Hollywood quality.

Quite frankly, I couldn't care less about dynamic GI. Most games don't need it, not even open-world games with a dynamic time of day. The sun doesn't move that fast. I say go ahead and bake it in. We need to first get to photorealistic graphics. Then we can worry about dynamic GI.
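A rough Python sketch of why a slowly moving sun can still work with baked lighting, assuming a hypothetical 24-minute in-game day at 30 fps and GI pre-baked every 15 degrees of sun angle (all three numbers made up for illustration): the sun moves a tiny amount per frame, and the runtime just blends the two nearest bakes. This is one possible approach to time-of-day GI, not how any specific game mentioned here does it; `blend_keyframes` is a hypothetical helper.

```python
def sun_degrees_per_frame(day_length_minutes, fps):
    """Sun rotation per rendered frame for a full 360-degree day cycle."""
    return 360.0 / (day_length_minutes * 60.0 * fps)


def blend_keyframes(sun_angle_deg, spacing_deg):
    """Pick the two baked GI snapshots that bracket the sun angle and the
    blend weight for the second one. Snapshots are assumed every `spacing_deg`."""
    count = round(360.0 / spacing_deg)
    position = (sun_angle_deg % 360.0) / spacing_deg
    first = int(position) % count
    second = (first + 1) % count  # wraps around at the end of the day
    weight = position - int(position)
    return first, second, weight


if __name__ == "__main__":
    # Hypothetical 24-minute in-game day at 30 fps.
    print(f"{sun_degrees_per_frame(24, 30):.5f} degrees per frame")
    # Blend baked snapshots taken every 15 degrees (24 bakes per day).
    print(blend_keyframes(37.5, 15))  # halfway between snapshots 2 and 3
```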

Yep, it's one of those cases where we will look at current games ten years from now and think "wait, we thought this looked great?" after we've gotten accustomed to years of fully ray-traced games. Rasterized lighting always has a shitton of compromises, but we don't care because we don't know any better. Once we do know better, we will never want to go back.

Half of this forum ignores you, don't worry



Because the actual show doesn't look better.

Every material around us absorbs, diffuses, and reflects light in its own way, and is able to transfer some of its color (wavelengths) to another material (RT).

The characteristics of the material and its color are the result of the action of light on matter.
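A toy Python illustration of the color-transfer point, assuming made-up RGB albedos and ignoring geometry, distance falloff, and everything else a real renderer handles: light reflected off a red wall carries mostly red, so a white floor lit by that bounce picks up a red tint (color bleeding).

```python
def reflect(light_rgb, albedo_rgb):
    """Per-channel reflection: each color band is scaled by the surface albedo."""
    return tuple(light * albedo for light, albedo in zip(light_rgb, albedo_rgb))


WHITE_LIGHT = (1.0, 1.0, 1.0)
RED_WALL = (0.8, 0.1, 0.1)     # hypothetical albedo: reflects mostly red
WHITE_FLOOR = (0.8, 0.8, 0.8)  # hypothetical albedo: neutral

direct = reflect(WHITE_LIGHT, WHITE_FLOOR)                      # light -> floor
bounced = reflect(reflect(WHITE_LIGHT, RED_WALL), WHITE_FLOOR)  # light -> wall -> floor

if __name__ == "__main__":
    print("direct :", [round(c, 2) for c in direct])   # neutral
    print("bounced:", [round(c, 2) for c in bounced])  # red-tinted
```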

But in games, we also have the artistic aspect.

So, one could never say for sure what the best-looking game or the best lighting in the business is, etc.? Is that what you're saying?

Wut....?!?

In a discussion about how people subjectively appreciate lighting in games, he's trying to use terms in a fucking rendering equation to prove his own subjective opinion correct.

His knowledge and technical arguments aren't technically incorrect, but in the context of the wider discussion, they're irrelevant, because he's trying to change the scope of the entire discussion to fit a narrow technical definition of lighting that applies only to himself.

You're also making the mistake of misunderstanding/misrepresenting the arguments presented in the thread, because this:

Isn't even part of the discussion, nor is it a valid argument anyone has made.

The main thrust of the arguments presented is that both RT and non-RT lighting in games are less important than the end image that the lighting approach produces. And that what people consider to be "the best" is based on subjective qualities, because whether it's RT or non-RT lighting, the quality of the end result still largely depends on the artistic input that crafts the final scene... thus it's an artistic measure, not an objective technical one.

My link to download the 2 GB 4K State of Play gameplay showcase trailer:

Enjoy!

uptobox.com

Yeah, it's going to be a tough time for people expecting a massive jump in visual quality whilst retaining 60 fps.

Absolutely bizarre rendering setup. Alex's counts are 1080p with TAA upscaling to 4K. He asked Housemarque, and they told him they use TAA upscaling to get to 1440p and then use checkerboarding to get to 4K.

To me, that just goes to show that 60 fps in these next-gen games is going to be very GPU-hungry. If we want to see a big jump in visuals, we need to settle for 30 fps.
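A rough Python sketch of the numbers behind this setup, assuming standard 16:9 resolutions and that GPU cost scales with pixels shaded (a big simplification): 4K is 4x the pixels of 1080p and 1440p about 1.78x, and checkerboarding generally shades roughly half of the target's pixels per frame. It says nothing about Housemarque's actual pipeline beyond what's quoted above.

```python
# Standard 16:9 render sizes (assumed; the post only names them).
RESOLUTIONS = {
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "4K": (3840, 2160),
}


def pixel_count(name):
    width, height = RESOLUTIONS[name]
    return width * height


def frame_budget_ms(fps):
    """Milliseconds available to render one frame at a given frame rate."""
    return 1000.0 / fps


if __name__ == "__main__":
    base = pixel_count("1080p")
    for name in RESOLUTIONS:
        print(f"{name}: {pixel_count(name):,} px ({pixel_count(name) / base:.2f}x 1080p)")
    # Checkerboard rendering typically shades about half the target's pixels per frame.
    print(f"4K checkerboard: ~{pixel_count('4K') // 2:,} px shaded per frame")
    for fps in (30, 60):
        print(f"{fps} fps: {frame_budget_ms(fps):.1f} ms per frame")
```

Halving the frame rate doubles the per-frame budget from about 16.7 ms to 33.3 ms, which is the trade-off being argued over here.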


If anything, it makes me think we'll see 60 fps even more this gen. Developers are getting tons of R&D on upscaling this time around, and we'll see all kinds of cool tricks pop up, plus the inevitable hardware-specific additions in the Pro versions of these consoles.

You have to compare 60 now vs. 60 then, and 30 now vs. 30 then. Different games need different targets, and how a game gets to the final image is a curiosity; it doesn't mean anything.

Right. Last gen's 60 fps-only titles don't exactly look all that amazing, especially when we talk about the best-looking games of the gen.

But ever since this gen's specs were announced and people saw that CPU boost, I see a lot of people wanting 60 fps to become the standard next gen. Well, if it does, then expect games to look only marginally better than last gen.

BF6 should be 60 fps. Let's see what they can do, but if Returnal is the best we can do at 60 fps and devs focus on 60 fps, then we are in for a disappointing gen.

Well, unless AMD's FSR is at DLSS level of quality. Then upscaling from 1080p would look better, and even 60 fps games could push some serious visuals.

We don't make games. The best WE can do is keyboard-nitpick devs on GAF.

I ain't confident, sadly.

I'm quite confident FSR will be good; you have AMD, Microsoft, and likely Sony all working to achieve something like it, as it would be a boon to all of them.

One of the things that is helping games like R&C and Demon's Souls is that these games were initially shown in real time, or as real-time captures. Not just in-engine, and definitely not pre-rendered. This allowed us to be blown away at the reveal while still keeping expectations in check, because the graphics were real. No concept renders like we've seen in the past. It allows a game like R&C to outperform its reveal footage as launch nears.

This bodes really well for Horizon and Gran Turismo, among others. What we saw last year should be baseline expectations for the finished product. That's fantastic, because those were really strong baselines.

COD Modern Warfare 2019 is a good example of a last-gen 60 fps game that is up there in visuals. In my opinion, it's more impressive than the latest Doom games, even though I haven't played either of them. COD Cold War is a straight-up downgrade in many aspects, including visuals.


So, how do you objectively compare games between each other?

Yeah, MW is a looker, especially the night levels. Hell, I thought the space COD (Infinite Warfare) looked stunning, but you almost never see them brought up in graphics discussions.

No, that's not correct. Maybe for some games, but for a fast-paced shooter like this, absolutely not. Even then, 60 fps+ all the way for me, hopefully this gen.

Yeah, I am fine with online shooters being 60 fps, just like they were last gen. Wouldn't want to play Warzone and Battlefield at 30 fps.

I could definitely see 120 fps modes with resolutions lower than 1080p. Wouldn't be surprised if it's around 720p. I kinda want that if AMD's Super Resolution is good enough.
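Why ~720p is a plausible guess for 120 fps modes, as a rough Python sketch that assumes GPU cost scales only with pixels produced per second (it ignores CPU limits and fixed per-frame work, which get relatively worse at 120 fps): 720p at 120 fps is actually slightly fewer pixels per second than 1080p at 60 fps, while 1080p120 would double the load.

```python
def pixels_per_second(width, height, fps):
    """Pixels the GPU must produce each second in a given mode."""
    return width * height * fps


MODES = {
    "1080p60": (1920, 1080, 60),
    "720p120": (1280, 720, 120),
    "1080p120": (1920, 1080, 120),
}

if __name__ == "__main__":
    baseline = pixels_per_second(*MODES["1080p60"])
    for name, mode in MODES.items():
        rate = pixels_per_second(*mode)
        print(f"{name}: {rate:,} px/s ({rate / baseline:.2f}x 1080p60)")
```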

It's interesting to see the results, though. I wonder what this means for the Series S. On one hand, if we can't tell that the internal resolution in a 4K CB game is just 1080p, Series S games rendered at 1080p could easily do the same. However, if a PS5, which is 2.5x more powerful than the Series S, is rendering something at 1080p 60 fps, then we are looking at a 640p internal resolution for the same game on the Series S. Would TAA upscaling even work well at a resolution that low?
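A quick Python check of that scaling, assuming GPU cost is proportional to pixel count and using the post's 2.5x figure (real workloads don't scale this cleanly): at a fixed aspect ratio, pixel count goes with height squared, so straight pixel scaling lands nearer ~683p than 640p. That makes 640p a slightly pessimistic estimate; either way it's a very low input for TAA upscaling.

```python
import math


def equivalent_height(base_height, power_ratio):
    """Render height with the same per-pixel cost on a GPU `power_ratio` times
    weaker, at a fixed aspect ratio. Pixel count scales with height squared,
    so height scales with 1 / sqrt(power_ratio)."""
    return base_height / math.sqrt(power_ratio)


if __name__ == "__main__":
    # 2.5x is the post's PS5 vs Series S figure.
    h = equivalent_height(1080, 2.5)
    print(f"1080p on PS5 -> about {h:.0f}p on Series S")
    # Power gap implied by going all the way down to 640p.
    print(f"640p implies a {(1080 / 640) ** 2:.2f}x gap")
```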


Also, I didn't know that the full RT thing is coming to consoles. Looking forward to seeing how it runs.

Alex has also been wrong a LOT of times.

Objectivity based on personal opinion. People, you should stop listening to people like Alex. Having more knowledge doesn't imply being more objective. Indeed, people like him tend to become quite arrogant and egocentric just because they know tech stuff, completely forgetting what objectivity means.

Let's wait for

The internal resolution is 1080p?

I've been pretty busy for a while. And now I am not going to bring you specific news. But...

Everyone knows that all kinds of things are shared between Sony's internal studios (tools, assets, techniques, etc.). It's nothing new.

Let's see how I say this... I'm going to leave it somewhat open to interpretation (almost as always). I will not answer questions publicly or privately.

Do you remember these CGI trailers?

Surely we would all like the new SP and ND games to achieve a similar visual finish. Right?

In my personal opinion, it is no longer strictly necessary to have a physically correct render calculation to obtain a physically (or visually) incredible result in real time.

If I were not older, I would like to go directly to 2022+.

I leave it there.

@Mod of War Please forgive (or delete) this message if this is no longer allowed in this thread.

Wow, this is surprising. I know you weren't sold on this game ahead of time... I certainly wasn't either. Now I'm thinking I have to get it and check it out!

Returnal is really something special. Yeah, it might have some progression issues, but this is what Cerny must have had in mind when he designed the 3D audio and the DualSense features. It feels immersive in ways other shooters simply aren't. The game might not be a looker, but it's atmospheric as fuck. I was watching Ratchet's State of Play today to try and imagine the DualSense and 3D audio in that game, and it felt so empty in comparison. Atmosphere is so important. This is far more impressive overall than Demon's Souls, Miles, and Astro Bot.

I'd highly recommend it as a technical showcase even if you aren't into roguelikes or brutal difficulty spikes. This is Cerny's vision brought to life.