Die Namek Ability

Member

I don't see the point of upgrading each gen, unless you're going from low end to high. Skipping a gen is usually the best in terms of cost and performance gains you'll actually notice.

The point is your current GPU would still have value, so you can sell it to subsidize the cost of a new GPU. If you wait two gens, this strategy is much less beneficial. Even if waiting a gen yielded a similar cost analysis, you're still in a net negative situation, because you're doing so at the cost of not having the newest GPU at each release, as opposed to the other strategy. My .02

Moses85

Member

Nvidia lowering prices. LOL

The next day that happens, pigs will fly.

asdasdasdbb

Member

Still rocking two 3080s myself.

Can I get ~4090 perf in a 3080FE sized and power hungry card at <$1,000? Hmmm; maybe it's time…

5080 is expected to be 400-420 W TDP, so no.

Hohenheim

Member

Hoping for 16GB VRAM in a 5070ti.

5070TI will definitely have 16gb, at least according to most leaks. Seems to be very similar to 5080.

asdasdasdbb

Member

5070TI will definitely have 16gb, at least according to most leaks. Seems to be very similar to 5080.

Probably like 15% slower. The price will be though... uh.. interesting...

SolidQ

Member

Hoping for 16GB VRAM in a 5070ti.

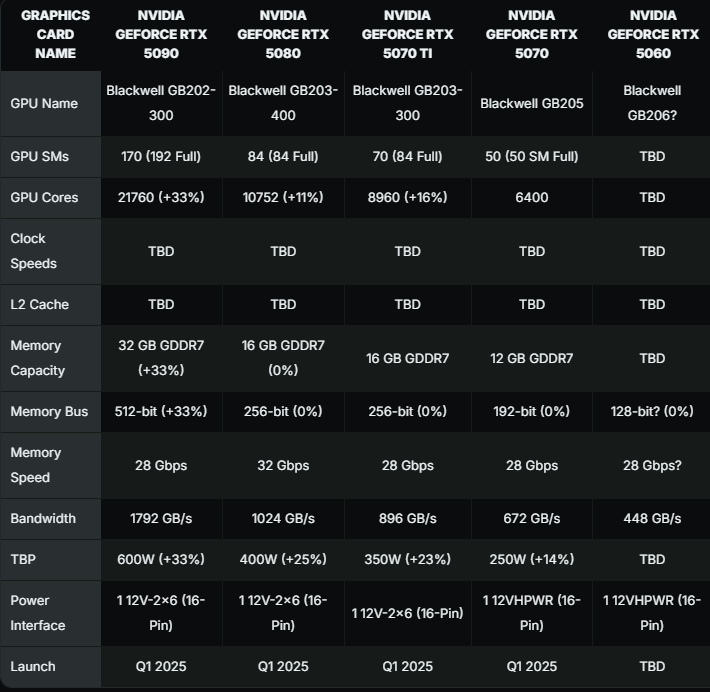

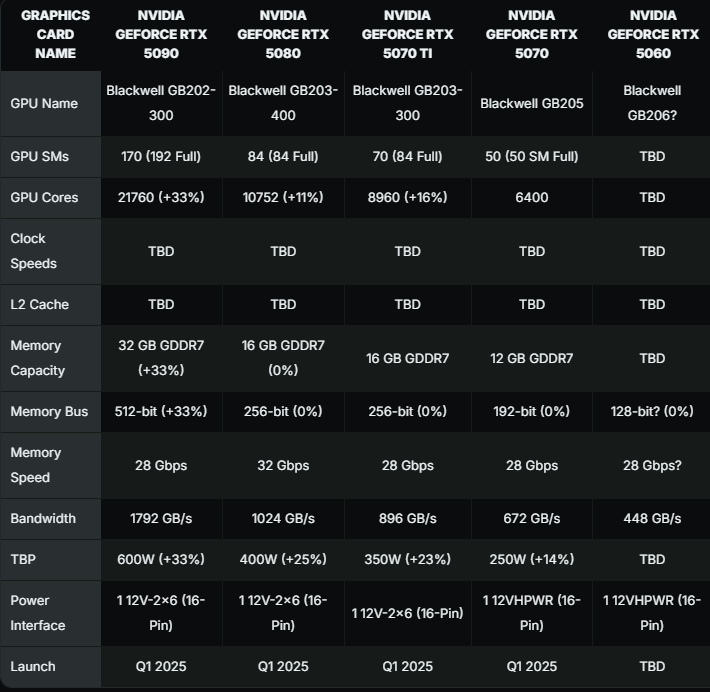

NVIDIA GeForce RTX 5070 Ti Gets 16 GB GDDR7 Memory, GB203-300 GPU, 350W TBP

asdasdasdbb

Member

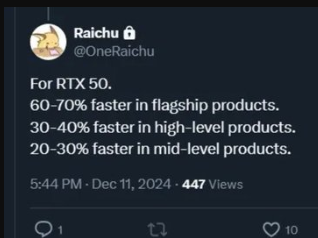

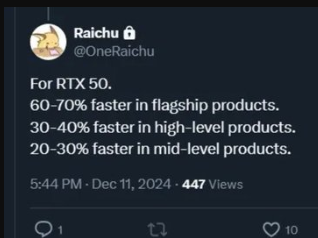

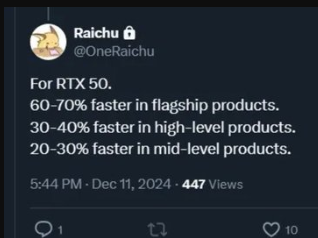

If Raichu means the 5070 and Ti as the mid level products... that's realistic but probably at the lower end. And figure the MSRP is going to be decently higher.

Bojji

Gold Member

NVIDIA GeForce RTX 5070 Ti Gets 16 GB GDDR7 Memory, GB203-300 GPU, 350W TBP

5090 - 33% more cores, 33% more power yet it will be 60-70% more performant?

He is smoking crack unless we have the biggest IPC increase in decades. Same is true about 5080 being faster than 4090, it doesn't make any sense.

There was a rumor about the 5070 being more or less on par with the 4070ti. It has fewer cores (than the 4070ti) but probably a higher clock, so it's logical.
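A rough sanity check on the 60-70% claim, as a sketch only. It assumes performance scales linearly with core count (an idealization; real scaling is usually worse), and the function name is made up for illustration. It works out how much faster each core would have to be, through clocks, IPC, or memory, to close the gap left by 33% more cores.

```python
def required_per_core_uplift(total_gain: float, core_gain: float) -> float:
    """Per-core uplift needed to reach total_gain, given core_gain more cores.

    Assumes perfect linear scaling with core count (an assumption, not a measurement).
    Gains are fractions: 0.33 means 33% more.
    """
    return (1 + total_gain) / (1 + core_gain) - 1


# Rumored figures from the thread: 33% more cores, 60-70% more performance.
for total in (0.60, 0.70):
    uplift = required_per_core_uplift(total, 0.33)
    print(f"{total:.0%} total gain with 33% more cores needs {uplift:.1%} more per core")
```

Under that assumption each core would need to deliver roughly 20% to 28% more, which is why the claim hinges on clocks, IPC, and memory bandwidth rather than core count alone.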

asdasdasdbb

Member

5090 - 33% more cores, 33% more power yet it will be 60-70% more performant?

He is smoking crack unless we have the biggest IPC increase in decades. Same is true about 5080 being faster than 4090, it doesn't make any sense.

The 4090 is extremely bandwidth starved. Like seriously. You could get 30% faster without changing anything else if it had GDDR7.

SolidQ

Member

Same is true about 5080 being faster than 4090

It's likely 4080 vs 5080, a comparison like NV does per tier: 4060 vs 5060, 4070 vs 5070, etc.

The 4090 is extremely bandwidth starved

Yeah, 1 TB/s vs 1.8 TB/s, plus clocks from GPU and memory.

Bojji

Gold Member

The 4090 is extremely bandwidth starved. Like seriously. You could get 30% faster without changing anything else if it had GDDR7.

Depends on the game? In some games you will need more compute than memory bandwidth. Look at 4070 vs. 3080 - about the same compute power (4070 has fewer cores but a far higher clock) but the 3080 has ~50% more memory BW. Results? At 1080p and 1440p both cards are ~ the same, 3080 starts to win at 4k but it's far from a 50% difference.

This memory BW difference will make the most difference for people aiming to play at 8k.
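For reference, the bandwidth numbers being thrown around can be reproduced from bus width and per-pin data rate. This is a minimal sketch: the 3080, 4070, and 4090 figures are the published specs, while the 5090 entry (512-bit at 28 Gbps) is the rumored configuration and should be treated as an assumption.

```python
def bandwidth_gb_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s: bus width (bits) x per-pin rate (Gbit/s) / 8."""
    return bus_width_bits * data_rate_gbps / 8


# (bus width in bits, per-pin data rate in Gbit/s)
cards = {
    "RTX 3080 10GB": (320, 19.0),
    "RTX 4070": (192, 21.0),
    "RTX 4090": (384, 21.0),
    "RTX 5090 (rumored, assumption)": (512, 28.0),
}

for name, (bus, rate) in cards.items():
    print(f"{name}: {bandwidth_gb_s(bus, rate):.0f} GB/s")

# How much more bandwidth the 3080 has than the 4070, as a ratio.
ratio = bandwidth_gb_s(320, 19.0) / bandwidth_gb_s(192, 21.0)
print(f"3080 vs 4070 bandwidth ratio: {ratio:.2f}x")
```

That gives about 1 TB/s for the 4090 against roughly 1.8 TB/s for the rumored 5090, and about 1.5x for the 3080 over the 4070, which matches the "~50% more" comparison above.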

asdasdasdbb

Member

Depends on the game? In some games you will need more compute than memory bandwidth. Look at 4070 vs. 3080 - about the same compute power (4070 has fewer cores but a far higher clock) but the 3080 has ~50% more memory BW. Results? At 1080p and 1440p both cards are ~ the same, 3080 starts to win at 4k but it's far from a 50% difference.

This memory BW difference will make the most difference for people aiming to play at 8k.

I am only talking about the 4090 specifically. It is more complicated at the lower tier models.

Spukc

always chasing the next thrill

I spent some of my RTX 5090 savings fund on Christmas. I don't want to have to use a credit card to buy a graphics card. Come on Nvidia surprise us with some nice low prices this round.

Just get a new CC to pay off the other

Lifehacks

Gaiff

SBI’s Resident Gaslighter

NVIDIA GeForce RTX 5070 Ti Gets 16 GB GDDR7 Memory, GB203-300 GPU, 350W TBP

60-70% more performant is bollocks. It's going to be more like 30%.

LavitzSlambert

Member

Wait until we see nearly all the performance gains being attributed to the higher power budget. I remember when new generations of hardware were magnitudes more powerful and more efficient too.

Black_Stride

do not tempt fate do not contrain Wonder Woman's thighs do not do not

Excited to see these cards.

But def skipping the generation.

RTX60s should bring real advancement for xx70 class cards.

Im too poor to afford an xx90.

Wait until we see nearly all the performance gains being attributed to the higher power budget. I remember when new generations of hardware were magnitudes more powerful and more efficient too.

Magnitudes?

When was that?

Also consider 3090 vs 4090 is one of the biggest upgrades weve seen in like what 20 years?

Hawk The Slayer

Member

Right now I'm aiming for an RTX 4060 Ti 16GB VRAM version, but I'm waiting to see how much better the RTX 5060 Ti 12GB or 16GB versions are, if they do have one. If it's around 20% better for the same price point then I'm definitely getting the 5060 Ti. But if it's going to be 20% better by being 20% more expensive then no cigar. The RTX 4060 Ti 16GB would be more than enough for my 1080p self, especially since my current card is a GTX 1070. GTA VI, FFVII Remake Part 3, Stellar Blade 2, the next few Resident Evil, Space Marine 3 etc should all get handled well by the 4060 Ti 16GB at 1080p. But I'm truly aiming for a decently modded Elder Scrolls VI, which might require a tiny bit more than a 4060 Ti, so a 5060 Ti would make me feel safer.
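The "20% better for the same price" versus "20% better for 20% more money" distinction comes down to performance per dollar. A quick sketch, where the $450 baseline price is purely hypothetical and only the ratios matter:

```python
def perf_per_dollar(relative_perf: float, price_usd: float) -> float:
    """Relative performance divided by price; higher is better value."""
    return relative_perf / price_usd


BASE_PRICE = 450.0  # hypothetical price for illustration, not a real MSRP

baseline = perf_per_dollar(1.00, BASE_PRICE)            # current card
same_price = perf_per_dollar(1.20, BASE_PRICE)          # 20% faster, same price
pricier = perf_per_dollar(1.20, BASE_PRICE * 1.20)      # 20% faster, 20% more expensive

print(f"Same price:  {same_price / baseline:.2f}x the value")
print(f"20% pricier: {pricier / baseline:.2f}x the value")
```

In the first case value per dollar goes up by 20%; in the second it does not move at all, which is the "no cigar" scenario.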

Rickyiez

Member

Excited to see these cards.

But def skipping the generation.

RTX60s should bring real advancement for xx70 class cards.

Im too poor to afford an xx90.

Magnitudes?

When was that?

Also consider 3090 vs 4090 is one of the biggest upgrades weve seen in like what 20 years?

3080 was magnitudes faster than 2080 (40% at 4k)

1080 Ti was magnitudes faster than 980 Ti (46% at 4k)

4090 is 40% faster than 3090 in 4k

It's always the case except 2080 Ti

FalconPunch

Member

600 Watts is truly crazy. I cannot imagine how anyone can justify 600 watts for the GPU just to play games. If you have a 14900k plus Oled monitor, you could be at almost 1kw to play games. That's just wild man.
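A back-of-the-envelope version of that power budget, as a sketch under stated assumptions. The 600 W GPU figure is the rumor from this thread and 253 W is Intel's listed maximum turbo power for the 14900K; the numbers for the rest of the system, the monitor, and PSU efficiency are guesses for illustration. It also assumes every part peaks at once, which games rarely do.

```python
GPU_W = 600          # rumored GPU power limit (from the thread)
CPU_W = 253          # Intel's listed maximum turbo power for the 14900K
REST_OF_PC_W = 75    # assumption: motherboard, RAM, SSDs, fans
MONITOR_W = 100      # assumption: OLED monitor, powered separately from the PSU
PSU_EFFICIENCY = 0.90  # assumption: typical efficiency at high load


def psu_load_w() -> float:
    """DC load the power supply has to deliver (monitor excluded)."""
    return GPU_W + CPU_W + REST_OF_PC_W


def wall_draw_w() -> float:
    """Approximate draw at the wall: PSU load adjusted for efficiency, plus monitor."""
    return psu_load_w() / PSU_EFFICIENCY + MONITOR_W


print(f"PSU load:  {psu_load_w():.0f} W")
print(f"Wall draw: {wall_draw_w():.0f} W")
```

With these guesses the worst case lands around 1 kW at the wall, and the PSU itself would be asked for a bit over 900 W, so the "almost 1kw" estimate is in the right ballpark.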

3080 was magnitudes faster than 2080 (40% at 4k)

1080 Ti was magnitudes faster than 980 Ti (46% at 4k)

4090 is 40% faster than 3090 in 4k

Its always that case except 2080 Ti

Man, you are hugely downplaying the performance differences here.

3080 was magnitudes faster than 2080 (40% at 4k) -- 70% over 2080, source HUB

1080 Ti was magnitudes faster than 980 Ti (46% at 4k) -- 85% over 980 Ti, source TPU

4090 is 40% faster than 3090 in 4k -- 75% over 3090, source HUB

All average raster performance at 4k.
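On the "magnitudes" wording: an order of magnitude is a 10x jump, so it helps to convert these percentages into multipliers. A small sketch using the percentages quoted above (the helper names are just for illustration):

```python
import math


def multiplier(percent_gain: float) -> float:
    """Convert 'X% faster' into a speed multiplier, e.g. 75% -> 1.75x."""
    return 1 + percent_gain / 100


def orders_of_magnitude(percent_gain: float) -> float:
    """How many powers of ten the gain represents (1.0 would be a full 10x)."""
    return math.log10(multiplier(percent_gain))


# Percentages as cited in the thread (HUB / TPU averages at 4k).
gains = {"3080 vs 2080": 70, "1080 Ti vs 980 Ti": 85, "4090 vs 3090": 75}

for name, pct in gains.items():
    print(f"{name}: {multiplier(pct):.2f}x, {orders_of_magnitude(pct):.2f} orders of magnitude")
```

Even the largest of these jumps is well under 2x, or roughly a quarter of one order of magnitude.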

Hohenheim

Member

Hoping for 16GB VRAM in a 5070ti.

NVIDIA GeForce RTX 5070 Ti Leak Tips More VRAM, Cores, and Power Draw

It's an open secret by now that NVIDIA's GeForce RTX 5000 series GPUs are on the way, with an early 2025 launch on the cards. Now, preliminary details about the RTX 5070 Ti have leaked, revealing an increase in both VRAM and TDP and suggesting that the new upper mid-range GPU will finally...

BlownUpRich

Member

put these foolish ambitions to rest. thou'rt but a fool!

Off-topic, but them Morgott lines hit strong lol.

it's a CGI trailer. even when we get gameplay i still won't expect the game to look like that. remember Witcher 3 went through a "downgrade" phase.

but then Cyberpunk actually ended up looking a lot better than it did in the gameplay trailers.

i know but i don't have any brains or sense.

i only play at 1440p but i did upgrade to a 360hz monitor. i only run it at 240 but still i wish i had a bit more power.

i had a 1070 then got a 2080 and now a 4080. so i guess i'll wait for a 6080 or something. the xx90 cards are just way too expensive

BlownUpRich

Member

I'm really curious about what DLSS 4 will bring, I'm hoping it's more than "Ray Reconstruction 2.0 so now you have more accurate rays", I mean I'd be content with that & it's always welcome, I just want a new shiny feature like how DLSS3 brought Frame Generation. Bring it on Jensen!

asdasdasdbb

Member

Wait until we see nearly all the performance gains being attributed to the higher power budget. I remember when new generations of hardware were magnitudes more powerful and more efficient too.

The higher clocks don't come for free. And it's basically on a refresh of the same node, not a new one.

Celcius

°Temp. member

I'm so curious if I'll be able to get an RTX 5090 at launch. I assume they'll launch late January right around the time ff7 rebirth launches. I'm also curious if they're reaaaaaally going to be 600w and if so, would my 1000w psu be enough. I would think I'd be fine but it will be interesting to see how this all plays out.

Not real time. Already confirmed by cdpr. They used ue5 as a render engine and let the frames take as long as they needed. A 4090 would have produced identical image quality, just taken longer.

It was still made on the 5 series.

It was a dumb thing to put on the trailer.

daninthemix

Member

If the next DLSS is exclusive to the 50 series I'm never buying Nvidia again

I can't remember if the 3000 series had any features? 2000 introduced DLSS and RT. 4000 brought framegen. 3000...nothing? So it's not guaranteed we'll get a new feature.

rofif

Banned

We got the reveal last night though.

lol cope

BlownUpRich

Member

If the next DLSS is exclusive to the 50 series I'm never buying Nvidia again

Not to be dramatic or confrontational, but why won't you buy Nvidia cards if that's the case? You're not going to see these newer next-gen features in any other cards unless you're fine with waiting 3-4 years for AMD & Intel to catch up, by which point Nvidia would've already done more DLSS stuff.

Hohenheim

Member

If the next DLSS is exclusive to the 50 series I'm never buying Nvidia again

Then you're probably never buying Nvidia again is my guess.

Dr.D00p

Member

If the next DLSS is exclusive to the 50 series I'm never buying Nvidia again

Lol.

Jensen knows the almighty, all consuming power of FOMO amongst PC Gamers is enough to overcome such moral indignation.

DirtyBast8rd

Member

Do you guys think I should get a PCIe 5.0 mobo for the 5090 or save some bucks and get a cheaper mobo if the GPU would perform the same on both?

Don't cheap out on the motherboard. Get one with good VRMs, so you can read about other people having stability issues while you think, huh, runs fine in my setup.

YeulEmeralda

Linux User

Lol.

Jensen knows the almighty, all consuming power of FOMO amongst PC Gamers is enough to overcome such moral indignation.

It's not FOMO, it's real. Neither AMD nor Intel is going to come up with technology that rivals DLSS.

On that note I cringe whenever someone says Nvidia is a "scam". Look up the definition in a dictionary.

winjer

Member

NVIDIA GeForce RTX 5060 Ti to feature 16GB VRAM, RTX 5060 reportedly sticks to 8GB - VideoCardz.com

NVIDIA RTX 5060 series rumors Finally, something about next-gen in the budget area. Wccftech claims to have obtained new information on the GeForce RTX 5060 series. NVIDIA is now said to be working on not one but two models in the series, which would follow the path of the current-generation...

Ellery

Member

Looking at all the specs it seems like it is the same again as it was with the 40 series.

The 4090 was imho the only real juicy card and when you look at the RTX 5090 it is seemingly the same story again.

Sure it is going to be the most expensive one, but looking at price/perf or fps per Dollar it might closely slot next to the other cards. And since it has more VRAM and people wanting to go 4K (or even higher with multi monitor setups) and juice up the RTX it is quite likely that even at $2000 it will look like the best buy. Unless the 5080 and 5070 Ti are much cheaper than I think, but probably not.

Still not sure whether I am going to join in on this generation or wait. My 3080 is still fine. So it depends on the benchmarks and price, but in terms of PC games the upgrade is not that important. Not that much on the horizon that requires it. By the time Witcher 4 etc. come out there will be new hardware.

Unknown Soldier

Member

600 Watts is truly crazy. I cannot imagine how anyone can justify 600 watts for the GPU just to play games. If you have a 14900k plus Oled monitor, you could be at almost 1kw to play games. That's just wild man.