Cyberpunkd

Member

I wonder if Nvidia will lower the prices of their cards now they've seen how cheap Intel are willing to go.

NVIDIA GeForce RTX 5060 Ti to feature 16GB VRAM, RTX 5060 reportedly sticks to 8GB - VideoCardz.com

NVIDIA RTX 5060 series rumors: Finally, something about next-gen in the budget area. Wccftech claims to have obtained new information on the GeForce RTX 5060 series. NVIDIA is now said to be working on not one but two models in the series, which would follow the path of the current-generation... (videocardz.com)

> 600 Watts is truly crazy. I cannot imagine how anyone can justify 600 watts for the GPU just to play games. If you have a 14900k plus Oled monitor, you could be at almost 1kw to play games. That's just wild man.

Fuck the planet (and your electricity costs), it's the pixels that count.
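For anyone who wants to sanity-check the "almost 1kw" figure, here is a rough sketch of the arithmetic. Only the 600 W GPU number comes from the post; the CPU, monitor and rest-of-system figures, and the function names, are assumptions picked for illustration, and real gaming draw is usually below these peak values.

```python
# Rough worst-case power budget for a gaming session.
# Only GPU_WATTS comes from the thread; everything else is an assumption.
GPU_WATTS = 600      # figure quoted in the thread
CPU_WATTS = 253      # assumed: the 14900K's rated max turbo power
MONITOR_WATTS = 100  # assumed: ballpark for an OLED monitor
REST_WATTS = 50      # assumed: motherboard, RAM, drives, fans


def total_watts(*parts):
    """Sum the peak draw of every component, in watts."""
    return sum(parts)


def energy_kwh(watts, hours):
    """Energy used when drawing `watts` for `hours`, in kWh."""
    return watts / 1000 * hours


def session_cost(watts, hours, price_per_kwh):
    """Cost of a session; price_per_kwh is whatever your tariff is."""
    return energy_kwh(watts, hours) * price_per_kwh


if __name__ == "__main__":
    system = total_watts(GPU_WATTS, CPU_WATTS, MONITOR_WATTS, REST_WATTS)
    print(f"Peak system draw: {system} W")
    # 3 hours a day for 30 days at a hypothetical 0.30 per kWh
    print(f"Monthly energy: {energy_kwh(system, 3 * 30):.1f} kWh")
    print(f"Monthly cost: {session_cost(system, 3 * 30, 0.30):.2f}")
```

Swap in your own tariff and hours; the point is only that the total lands around 1 kW under these assumptions.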

> Fuck the planet (and your electricity costs), it's the pixels that count.

I've got solar panels in my house. They're extremely effective in the summer - I generate more electricity via solar than actual consumption. Unfortunately we only have 4 months of sunlight in the UK and have zero energy production for most of the year. When playing games, my electric bills are sky high.

Don't cheap out on the motherboard. Get one with good VRMs, so that when you read about people having stability issues you can think: huh? Runs fine in my setup.

> I know. I still plan to get a pretty decent mobo after going through many reviews etc., but I am not sure if paying even more for PCIe 5.0 is a good idea or not.

Do it if you can. You'll probably wish you had later.

Man you are hugely downplaying the performance differences here

3080 was magnitudes faster than 2080 (40% at 4k) -- 70% over 2080, source HUB

1080 Ti was magnitudes faster than 980 Ti (46% at 4k) -- 85% over 980 Ti, source TPU

4090 is 40% faster than 3090 in 4k -- 75% over 3090, source HUB

all AVG raster performance at 4k

> This is how I read the performance difference https://www.techpowerup.com/review/nvidia-geforce-rtx-3080-founders-edition/34.html

Oh, I see you meant it that way!!

It's actually the same as how you read it: the 2080 gets 40% fewer frames, which makes the 3080 about 67% faster, or roughly 1.7x (i.e. 100 FPS on the 3080 is 60 FPS on the 2080).

But my counterargument to the person I quoted stands: the performance increases were always large, not just from the 3090 to the 4090.
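Since "X% slower" and "Y% faster" keep getting mixed up in this thread, here is a small sketch of the conversion. The function names are made up for illustration, and the 100 FPS / 60 FPS pair is just the example from the post, not a benchmark result.

```python
def percent_faster(fast_fps, slow_fps):
    """How much faster the quicker card is, relative to the slower one."""
    return (fast_fps / slow_fps - 1) * 100


def percent_slower(fast_fps, slow_fps):
    """How much slower the weaker card is, relative to the quicker one."""
    return (1 - slow_fps / fast_fps) * 100


def faster_from_slower(pct_slower):
    """Convert 'B is pct_slower% slower than A' into 'A is N% faster than B'."""
    return (1 / (1 - pct_slower / 100) - 1) * 100


if __name__ == "__main__":
    # Example from the post: 100 FPS on one card, 60 FPS on the other.
    print(f"{percent_slower(100, 60):.1f}% slower")
    print(f"{percent_faster(100, 60):.1f}% faster")
    print(f"{faster_from_slower(40):.1f}% faster (converted from 40% slower)")
```

The two percentages describe the same gap but use a different baseline, which is why a chart normalised to the faster card gives a smaller number than a "faster than" claim does.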

> Very interesting details!

Seems like another DLSS exclusive for the 5xxx series.

> I'm not as clued up as most of you with PC building. But what's the usual best option with NVIDIA cards? The Founders Edition or another manufacturer? If it's the latter, who is usually best?

Now that EVGA isn't around anymore, just whatever you can get your hands on.

I wonder if it's going to be a gen-locked feature à la frame gen for the 40 series. Better than 50% chance probably. They gotta have some shiny reason to sell yet another 60 series card with 8GB VRAM on a 128-bit bus for probably a higher MSRP than the previous one.

Over and under on "Neural Rendering" being marketed with another DLSS prefix (DLSS4?) or some new designation entirely?

I'm thinking "DLNR" if it really has nothing to do with upscaling or frame gen.

> Depends on how the 5060 performs; if it beats the Intel cards comfortably there's no pressure on the price.

There is no pressure if it doesn't either, as proven by the 3050 or 4060.

> How much of this is just PR bullshit?

That's the future and it's still far off, but the problem is that like 95% of users won't use AI, except DLSS.

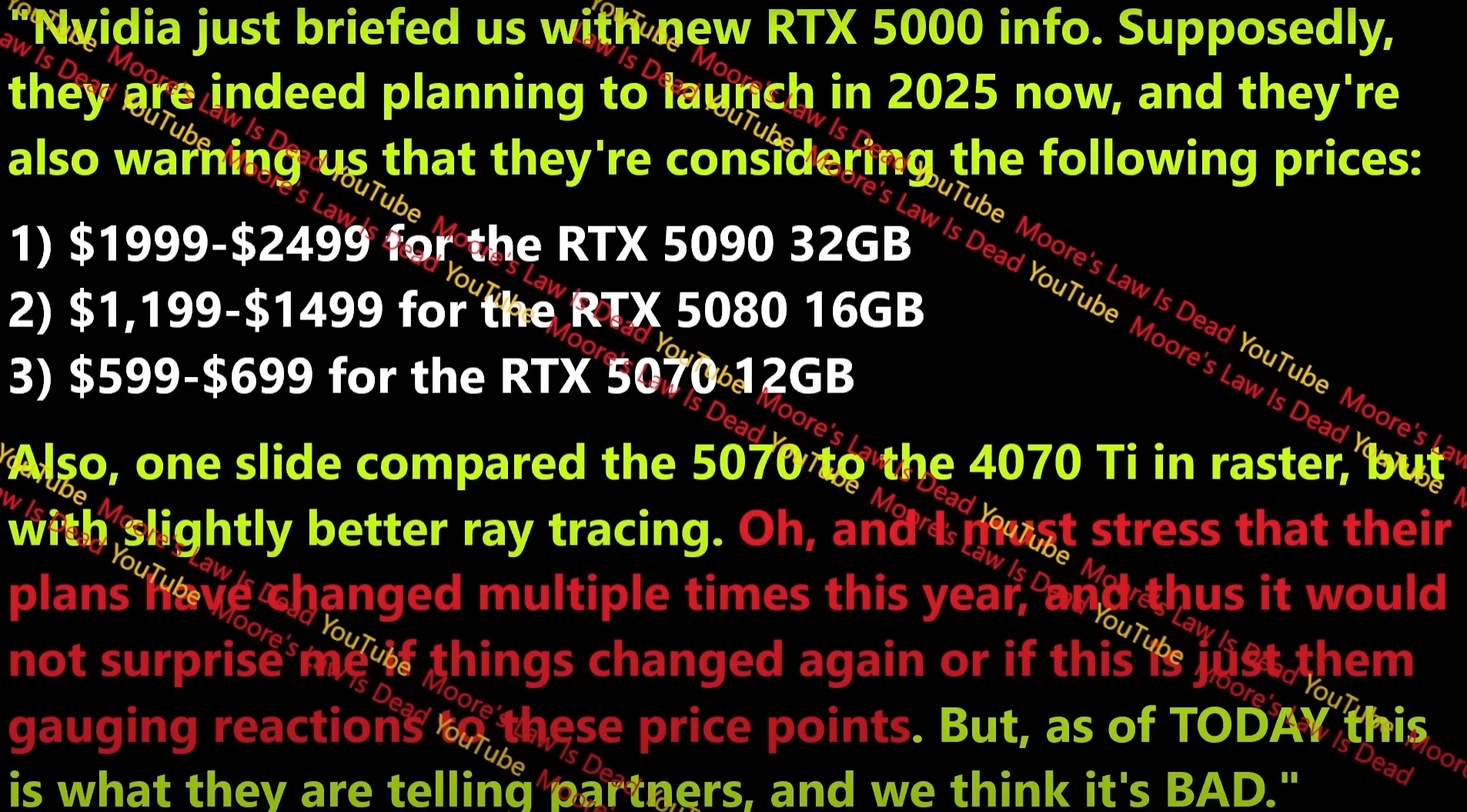

Some "leaks". It's up to you whether to trust them or not.

> 600 Watts is truly crazy. I cannot imagine how anyone can justify 600 watts for the GPU just to play games.

That's the one thing that may make me not get a 5090 -- power consumption, and therefore temps/fan noise.

> technology that rivals DLSS.

It's impossible to "create" a better glorified upscaler without bribing PF.

> Gonna wait for the 6090

It will come out just in time for GTA VI on PC.

> How much of this is just PR bullshit?

Let's go point by point:

- "There will be a new DLSS feature that PF will hype that won't run on series below 5000" - no doubt

- RT cores that deliver "more realistic" lighting. BS. One can twist it like "but it's faster, so kinda allows for more realistic"

- "AI accelerated graphics" - modern "Y2K compatible speakers"

- "Improved AI performance" - no sh*t, watson, faster cards do scheisse faster

- "Better integration of AI in games" - I'm afraid another try to pull proprietary API trick

- "Neural Rendering Capabilities" - perhaps a runtime filter. I'm skeptical.

- "AI enhanced power efficiency" - guaranteed lie

- "Improved AI upscaling" and DLSS used outside games to upscale thingies. Yeah, why not.

- "Generative AI acceleration" - most GPUs can do that, of course new, faster gen will be even faster.

> Isn't this quite old?

It's from a fresh video.

> Gonna wait for the 6090

Me too, gonna sell my 5090 to get it.

> I think I saw the same slide from his video like ~2 months ago? Maybe some things changed.

That was about the 5090 only.

> Anyway, people won't be happy if this is true.

People will be happy with any "RTX" label, even if Huang sells shampoo for 1k with the "RTX" name.

I mean there was an interview with Jensen Huang 5 months ago where he was teasing what the future of DLSS will be, he said "generating much better models & textures" than what the game has using AI of some sorts, I think this is its manifestation? Or rather I should say, it's 1st attempt at doing just that with the 50-series graphics cards? No wonder why the 5090 has 32GB as this stuff is pretty expensive on video memory… Maybe it's just a hunk of bologna, we'll see…

AI rendering could be a huge game changer depending on what the 1st iteration will be like.

Imagine a game with basic assets, like you define how the game will play, the building blocks, the physics/animation, etc. The basic geometry of the level and objects. But AI puts the coat of paint on it, whatever style you want. It knows what lighting is supposed to look like, a fraction the cost of path tracing.

> I wonder if Nvidia will lower the prices of their cards now they've seen how cheap Intel are willing to go.
>
> Maybe we'll get a 5060 at £300.

That would be nice, but it'll still be useless if it only has 8GB of VRAM, even for 1080p. So I hope it'll have 12GB at least.

5090 is 100% going to be 3k€

> If the RX8800XT comes with 16GB VRAM and a (estimated) $500 price point -- that may be enough for me to ditch Nvidia. Can't fathom a 5080 at twice (or more) the price is going to give me twice the performance.
>
> But I'll ultimately wait for reviews. For me, I'd personally take 25% less performance from a card at half the price as a no brainer.

If PC were all about price point, Nvidia would not have a 90% share of the PC market. PC is the only market where price point has very little effect.
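The "25% less performance at half the price" trade-off can be put as a performance-per-price ratio. A minimal sketch, where the function name and the normalised inputs are illustrative assumptions rather than real benchmark or pricing data:

```python
def perf_per_price(relative_perf, relative_price):
    """Value ratio of a card, with the reference card at 1.0 perf and 1.0 price."""
    return relative_perf / relative_price


if __name__ == "__main__":
    # Hypothetical card: 75% of the reference performance at 50% of the price.
    cheaper = perf_per_price(0.75, 0.5)
    reference = perf_per_price(1.0, 1.0)
    print(f"Value vs reference: {cheaper / reference:.2f}x")
```

By this measure the cheaper card gives more performance per unit of money, though the ratio says nothing about whether 75% of the performance is enough for the games you play.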

> 600 Watts is truly crazy. I cannot imagine how anyone can justify 600 watts for the GPU just to play games. If you have a 14900k plus Oled monitor, you could be at almost 1kw to play games. That's just wild man.

I have a hamster sweatshop. They run around wheels 24/7 and generate free electricity for my house.

> If PC is all about price point than Nvidia would have not got 90% share in PC market. PC is the only market, where price point has a very least effect.

I'm not sure what your point is? I know that a lot of folks are happy to spend that money on an Nvidia card -- I've got a 3070 now.

> You see, arrogant Sony priced the PS5 Pro so high that Nvidia is going to swoop in and lower the prices for everyone! Also we get unicorns and free blowjobs.

Paying thousands of dollars ain't making them blowjobs free, man.

> Most people won't find it worth the price. Monitors and TVs can't even reach the maximum of 4090 right now.
>
> I'm sure some tech enthusiasts will love the latest and greatest, but it's not going to be a popular choice for the general public. In fact, I think this new card might actually help the 40 series sell better.

100% the 90s are for the junkies

This is the first gen I'm sitting on the sideline at launch.

> Nah I'm guessing 2-2,5k€.
>
> Everything above would mean that the bottom tier also has to creep up by a lot.
>
> With Intel and AMD most likely fighting with Nvidia in low and mid-tier, they can't just make a 1k€ gap between 70 and 80/90 series.

4090 prices still range from 2300 to 2500€ in France atm. I probably even underestimated it; make it 3.5k€ for the premium versions.