CliffyB's Cock Holster

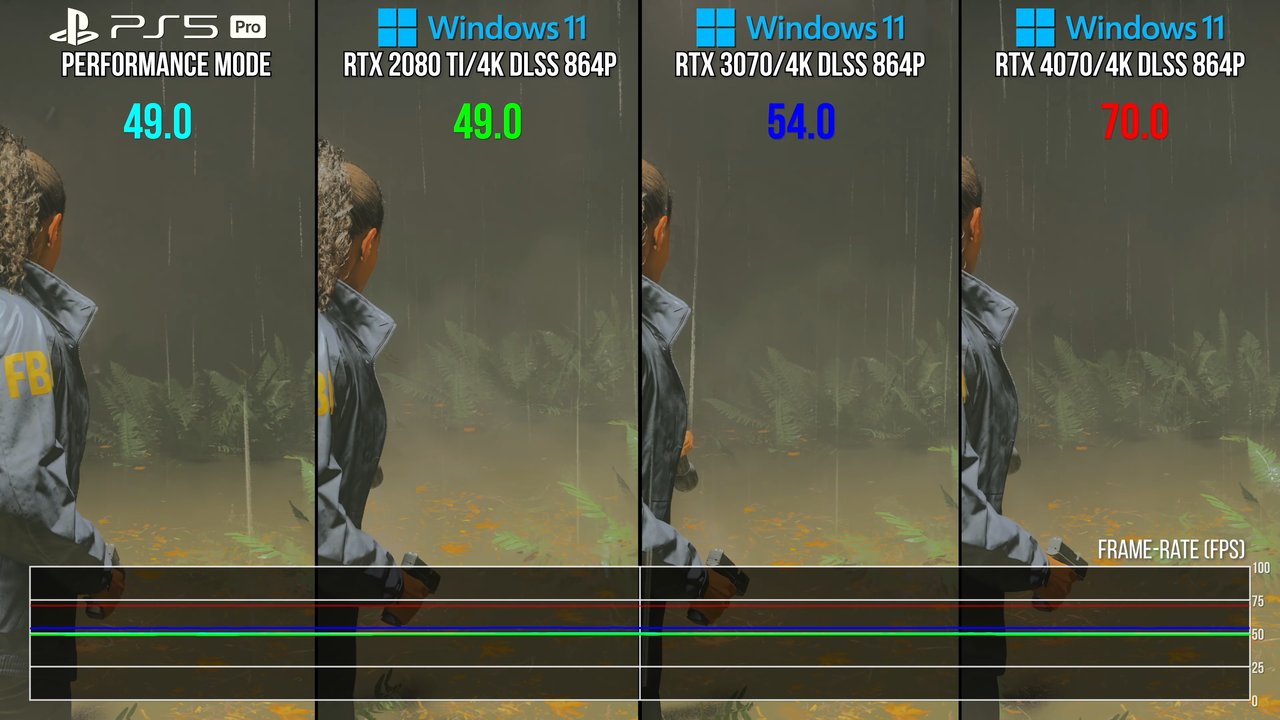

My PC did not cost me much more than the Pro and wipes its ass with the Pro.

-Case ($60)

-750W modular PSU, Gold rated ($75)

-CPU, i5-13600KF ($175)

-CPU cooler ($25)

-2TB NVMe ($100)

-GPU, 4070 ($450)

-RAM, 32GB DDR5 ($99)

-Micro ATX mobo ($150)

(about $1150)
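For anyone who wants to check the math, here is a quick sketch that adds up the parts using only the prices quoted in the list. The dictionary labels are just shorthand for this example, and no console or subscription prices are assumed.

```python
# Prices in USD, copied from the parts list in the post.
parts = {
    "Case": 60,
    "PSU": 75,
    "CPU": 175,
    "CPU cooler": 25,
    "NVMe SSD": 100,
    "GPU": 450,
    "RAM": 99,
    "Motherboard": 150,
}

total = sum(parts.values())
print(f"Total: ${total}")  # Total: $1134
```

That lands a little under the rounded "about $1150" figure.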

Subtract 6 years of PS+ and the PC becomes cheaper and cheaper.

So what you're saying is, I'm right to point out that you are paying more to get more. You're just choosing to ignore that very significant price bump over the Pro, and of course the fairly massive one compared to a regular PS5 which offers the exact same gaming experiences at a modestly lower level of fidelity.

Also, the whole "savings over time" argument only works if you spend less on software over time, which is in no way a certainty. In fact it strikes me as rather counter-intuitive: if you are willing to stump up more up-front, why would you scrimp and save on smaller purchases over time? It's not like there aren't subscription services on PC too!