cormack12

Gold Member

You're wrong.

I just bought my RTX 4080.

I tried plugging it into my television.

Nothing is happening.

That's because you haven't updated the firmware on your Xbox.

If that was true, everybody would have a 4090.

Price differences vs console(s).

If he was CPU limited - both 6800 and 6700 would have the same performance.

OC is OC alone, OC + SAM is the same OC with Smart Access Memory enabled. It makes big differences in some games.

No shit, but your edit from "these two are only the overclock" happened before I replied, without a page refresh, making me think you thought the third with SAM didn't have an overclock despite stating "@ OC".

You asked: "How can anyone explain this shit? Normally 6800 is ~44% faster than 6700."

Then claimed: "It's unoptimized for all GPUs except 6700 and 6700XT. It's one of the dumbest things I have ever seen on PC."

You got an explanation that in that specific benchmark it's using SAM at a low resolution and is CPU IO bound, so a bigger GPU wouldn't really help.

Most reviewers don't enable ReBar (or at least they didn't when they tested this game). That massive difference is only seen with it. Have you watched the video?

Meaning you knew your initial "why isn't it 44%" claim was completely disingenuous then?

You really think that this is comparable in any shape or form to Xbox vs. PS5?

In terms of a larger (52 CUs) GPU not resulting in better performance since the GPU is higher clocked on the smaller 36CU GPU because it helps performance in other specific scenarios, yes I do. But I know I'm wasting my time here. You're likely to ignore everything said, post a laugh emoji, then say something completely irrelevant or even contradictory.

Everyone who buys a GPU knows you need a PC to use it with. Just like everyone who buys a console knows you need a display of some sort.

Right but Nvidia has DLSS which boosts the performance of 4070 60% or something in game that support DLSS (most modern games)A few PS5 exclusives. The 4070S is only 36% faster than the regular PS5. The Pro is 29% faster. They're almost equal there.

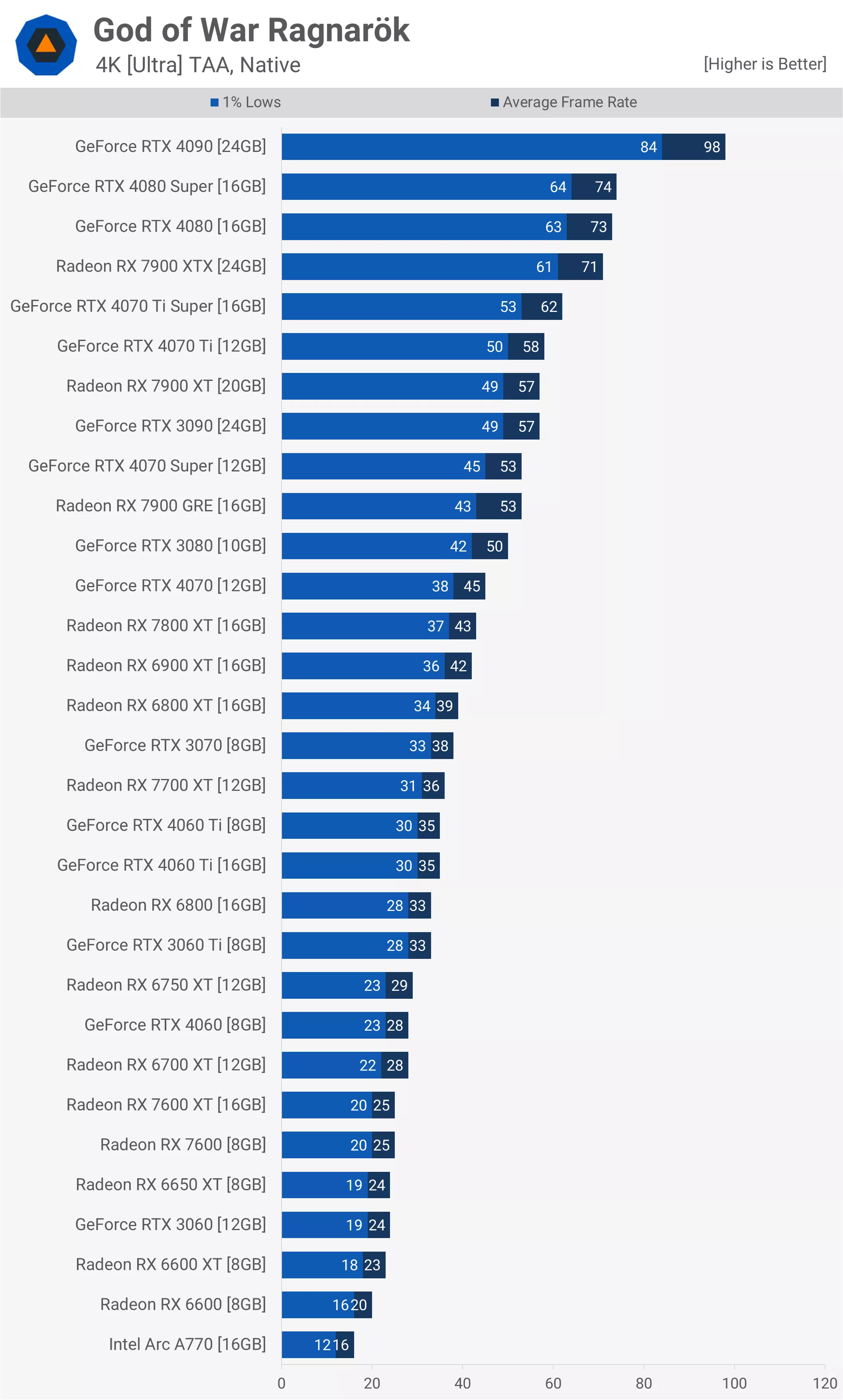

GOWR is another candidate. The 4070 is only around 20% faster than the regular PS5. The Pro should come close to a 4070S there as well.
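For anyone who wants to see how "36% faster" and "29% faster" translate into a direct 4070S vs Pro gap, here is a rough sketch. It only assumes both percentages are measured against the same base PS5 result; the function name `relative_gap` is made up for illustration.

```python
def relative_gap(a_uplift_pct: float, b_uplift_pct: float) -> float:
    """How much faster A is than B, in percent, when both uplifts
    are measured against the same baseline."""
    a = 1.0 + a_uplift_pct / 100.0
    b = 1.0 + b_uplift_pct / 100.0
    return (a / b - 1.0) * 100.0


# Figures quoted in the thread: 4070S +36% and Pro +29% over the base PS5.
gap = relative_gap(36.0, 29.0)
print(f"4070S over Pro: {gap:.1f}%")
```

Under that assumption the two end up within a few percent of each other, which is what "almost equal" is getting at.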

6800 at 89 fps average

6700 at 88.2 fps average

The 6800 actually edges the 6700 by a whole 0.8 fps.

YUGE!

These are averages and these are fairly balanced configs for this game.
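To put a number on that 0.8 fps, a quick sketch of the measured uplift next to the 44% figure cited in this thread. The helper name `uplift_pct` is just for illustration; the fps values are the averages quoted above.

```python
def uplift_pct(fast_fps: float, slow_fps: float) -> float:
    """Percentage by which fast_fps exceeds slow_fps."""
    return (fast_fps / slow_fps - 1.0) * 100.0


# Averages quoted in the post above.
avg_6800 = 89.0
avg_6700 = 88.2

measured = uplift_pct(avg_6800, avg_6700)
expected = 44.0  # the "normal" relative gap cited in the thread

print(f"measured uplift: {measured:.2f}%")
print(f"shortfall vs the cited 44%: {expected - measured:.2f} points")
```

That works out to under 1%, which is why the result reads as bound by something other than GPU size in this particular setup.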

And it's not purely CPU bound; it's bound by CPU access to GPU memory, which is why, with no hardware change, it massively benefits from SAM and higher clocks. "Magic" performance for you, it seems.

No shit, but your edit from "these two are only the overclock" happened before I replied, without a page refresh, making me think you thought the third with SAM didn't have an overclock despite stating "@ OC".

But again you're just muddling everything up in your discussions until you confuse people enough to not care about any of the details you're arguing over and contradicting yourself with.

You asked: "How can anyone explain this shit? Normally 6800 is ~44% faster than 6700."

Then claimed: "It's unoptimized for all GPUs except 6700 and 6700XT. It's one of the dumbest things I have ever seen on PC."

You got an explanation that in that specific benchmark it's using SAM at a low resolution and is CPU IO bound, so a bigger GPU wouldn't really help.

That isn't what the 44% you got from TechPowerUp has enabled, so you knew this wasn't the "normal" situation and that the clocks are all different from TechPowerUp's 44% relative performance tests. The overclock is not equal either: it's a 46% overclock on the 6700 vs a 32% overclock on the 6800 (with the 6800 at stock already clocked lower than the 6700), and you're comparing that to the 44% stock 6700 vs 6800 figure from TechPowerUp, without SAM and with completely different clocks. Then asking why it's not 44%. Then when Gaiff asked why other benchmarks are showing the 6800XT as much faster on TLOU, you pretend that you knew why with this?:

"Most reviewers don't enable ReBar (or at least they didn't when tested this game). You see that massive difference is only seen with it. Have you watched the video?"

Meaning you knew your initial "why isn't it 44%" claim was completely disingenuous then?

Why ask why it doesn't match the 44% relative increase of the GPU seen on TechPowerUp, then, when asked why the sites actually have TLOU showing big gains, say it's because the benchmark that you initially presented is different and you knew the answer?

TLOU is 48% faster on a 6800XT vs a 6700XT in TechPowerUp's tests.

You didn't even answer the question Gaiff asked:

Is there a reason you're using a single benchmark to prove that the 6700 outperforms the 6800 XT in TLOU Part I when every other reputable source on the internet clearly has the 6800 XT being much faster?

You only contradicted yourself by pretending you actually knew the answer to your own question, which you were saying (in common DF fashion) was inexplicable earlier. It was inexplicable why a benchmark didn't match a completely different set of relative performance benchmarks from TechPowerUp (44%), and when asked why this benchmark doesn't match TechPowerUp's actual TLOU benchmarks and other sites' better performance of TLOU on a 6800XT, you say "this is why, the setup isn't the same". Wtf, does your own logic even make sense to you?

If you wanted to test the relative performance of the 6700 vs the 6800 to compare with TechPowerUp's 44%, you would match the system and settings of that 44% relative difference, which you now claim is the reason why there are much better gains in TLOU on 6800XT benchmarks to begin with.

Or alternatively you could measure the relative SAM performance of the 6800 vs the 6700 at these mismatched overclocks and then compare how TLOU fares before claiming it is unoptimised or not.

In terms of a larger (52 CUs) GPU not resulting in better performance since the GPU is higher clocked on the smaller 36CU GPU because it helps performance in other specific scenarios, yes I do. But I know I'm wasting my time here. You're likely to ignore everything said, post a laugh emoji, then say something completely irrelevant or even contradictory.

- Upscaling from 720p to 1440p with PSSR seems to cost almost as much as rendering native 1440p

Can you tell me the names of the games that PSSR outputs to 1440p?

Mileage will vary for sure, and as I've said before this is extremely EARLY days here. Most of the games have had minimal effort put into PRO patches. It's also very difficult to get absolutely matched settings across PRO and PC because many titles have custom settings on PRO (e.g. Alan Wake 2). That said, there are more than a few games where you can clearly see that the general level of performance on the new PRO modes is in the ballpark of an RTX 4070 or higher. And yes, in some cases you may say the settings aren't an exact match or the test scenes aren't exact matches, etc. But having tried many of these titles on both the PRO and PC myself, I would argue that any differences are so slight that they'd hardly be noticeable by eye to the vast majority of gamers, and the story across the broad game experience is close enough.

So without further ado (tried to minimize comparison to no DRS and upscaling where possible to reduce variability):

GOD OF WAR RAGNAROK

[PS5 PRO] Quality Mode (Native 4K TAA) - 45-60fps (avg low 50s)

[PC] God of War Ragnarok PC (Native 4K TAA) - RTX 4070 avg ~45fps - 7900GRE/4070Super level to match general PRO performance

THE LAST OF US PT 1

[PS5 PRO] 4K Fidelity Mode runs at 50-60fps with avg in the mid 50s

[PC] Native 4K on RTX 4070 = avg FPS is mid 30s. Even a 4080 can't hit 60fps avg at 4K. PRO definitely superior here

RATCHET AND CLANK RIFT APART

[PS5 PRO] 4K Fidelity Mode with All Ray Tracing options enabled: Framerate is ~50-60fps with an avg in the mid 50s

[PC] Native 4K with Ray Tracing: RTX 4070 is under 60fps on average (low 50s). Pretty close to the PRO experience

CALL OF DUTY BLACK OPS 6

[PS5 PRO] 60hz Quality mode (w/PSSR) runs at ~60-100fps with average in the mid-upper 70s (Campaign and Zombies)

[PC] RTX 4070 Extreme + DLSS Quality drops below 60fps quite often in Campaign (one example below). Lowering settings a little bit will be a closer match in quality and performance to the PS5 PRO

Resident Evil Village

[PS5 PRO] 4K Mode with Ray Tracing Enabled runs at ~60-90fps with average in the 70s

[PC] Native 4K with RT: RTX 4070 averages ~75fps. Right in the ballpark of the PS5 PRO experience

Hint: got a feeling TLOU PT2 and SpiderMan 2 will be good candidates for this list in a few months

GOD OF WAR RAGNAROK

This one should be fairly accurate. Once again though, you need to compare scene-for-scene and with the same settings. Also, Ragnarok on PS5 actually scales higher than on PC: slightly better shadows and ray-traced cubemap reflections in Quality Mode.

THE LAST OF US PT 1

This one isn't. The Pro is around 29% faster than the PS5 in this game. The 4070S is 36% faster. All according to DF, so pretty much a wash. Your extrapolation suggests the Pro runs like a 4080 in this game. It doesn't. I'm uncertain if the Pro has increased settings over the PS5, but TLOU doesn't run at max settings on PS5. It should run faster than the 4070 though.

RATCHET AND CLANK RIFT APART

That's with the full RT suite on PC. As far as I'm aware, Rift Apart on the Pro didn't add RT shadows. Maybe AO, but I'm not 100% sure. Additionally, we don't know the exact settings of the Pro mode either, because max settings on PC scale above even Quality Mode on PS5.

CALL OF DUTY BLACK OPS 6

No matched settings or scenes. Pointless.

Resident Evil Village

Same as before. I'm not sure if you deliberately do this or you're just unaware of how it works, but settings and scenes can have a dramatic impact on performance. You can't just pick a benchmark and assume that it runs at settings comparable to the consoles and that the consoles would maintain the same performance in the same scenes. In GOWR, for instance, the 6800 XT runs at 80-100fps in Vanaheim, but in Svartalfheim this drops to below 60 using 4K FSRQ. Using your method, I could come to the conclusion that the 6800 XT easily beats a Pro or is slower. Those benchmarks are all max settings, and consoles seldom run at those, even in the graphics/quality modes.

There's something wrong with Hardware Unboxed's God of War benchmark. The RX6800XT gets only 39fps in their benchmark, yet as you can see this card can run this game at 55-60fps at 4K native max settings.

As for the TLOU remake benchmarks, keep in mind you are looking at maxed-out settings. I don't know what settings the PS5 Pro uses, but the base PS5 uses High settings that aren't nearly as demanding.

Resident Evil Village on the PS5 Pro isn't running at native 4K. The RTX4070 would get well over 100fps with PS5 Pro settings.

HeisenbergFX4 Come on man, give us some hope here for the Pro to perform close to the 4070 in real-world scenarios in the future. I'm hoping it's growing pains with the developers, because currently the Pro is plain underwhelming.

He clarified, and the game where it ran like a 4070 was COD (forgot which one). However, AMD performs much better in this game and the benchmark had the 4070 and 7700 XT neck-and-neck, so the Pro matching a 7700 XT is perfectly plausible... at least in this game.

Call of Duty runs much better on AMD cards vs Nvidia cards.

Last of Us and Ragnarok are bad ports on PC.

The ONLY game that was properly compared between PC and PS5 Pro is Alan Wake 2 so far.

I really don't have time to discuss this further with you, I posted this as example why TLoU is a shit port - there was no need to turn it into offtopic inside this thread.

In the end - TLoU is an outlier in performance when comparing PS5 with PC vs. other games - can you at least agree on that?

This discussion is about the Pro reaching 4070 levels, and the answer is: in most games it won't. In a few games not utilizing PC hardware properly (like TLoU), it might.

You explain it as "better optimised for a specific machine" and not "unoptimised for PC" considering you're using a PC to show this anyway.

It's not that the game is unoptimised or "made by idiots".

Is AW2 AMD optimized? No.

Is AW2 a good port for PS5 Pro? No.

Remedy's engine needs to work harder to optimize for PS5 Pro.

This is what I mean about you ignoring everything said, leaving a laugh emoji and arguing in circles with irrelevant stuff instead. You brought up TLOU PC here and I disagree with your take on it.

I already discussed this in the thread with you and said just because TLOU runs extremely well on a PS5 doesn't mean the PC version is "unoptimized" or "a shit port". So why are you using the size of that gap as an indication of this?

Of course TLOU would be an outlier when comparing the PS5 performance gap vs other games. It's PlayStation's premier studio; if they can't push the PS5 better than most studios, who and what game would? This doesn't mean it's unoptimized or a poor port on PC though, it means the PS5 is being well utilised by a PS studio.

We've discussed this already:

so I don't need to agree with something that I wasn't disagreeing with in the first place. It's the idea that this means it's poorly optimised or a shit port that I disagree with. I even asked what exactly is your problem with the game today, it's a great looking game running at high fps on PC, are you just upset that it runs well on a PS5?

I already explained your logic is completely flawed. Using your logic: there are a bunch of poor-looking games with poor performance on PC. Say TLOU ran at 125fps but looked like one of those; would that shit-looking game be considered optimised as long as the PS5 version was even shittier?

You're far too invested in "PS software/hardware bad" to take anything said on board and your aim is to just post that in so many words ignoring everything said to the contrary. You're after confirmation bias mostly.

You've posted the same image multiple times, but AW2(RT) is one of those games that is heavily nVidia optimized.

TLOU on PC is clearly unoptimized. The 10.6TF RX6600XT runs this game at around 30fps at 1440p, yet the PS5 with 10.4TF has a locked 60fps. This game is twice as demanding on PC.

You've posted the same image multiple times, but AW2 (RT) is one of those games that is heavily Nvidia optimized.

Don't jump to conclusions based on one or two games.

1080p Max+RT benchmark

You are looking at PT results. Without RT/PT, AMD cards perform well in this game.

Right, but Nvidia has DLSS, which boosts the performance of the 4070 by 60% or something in games that support DLSS (most modern games).

The problem with the PS5 Pro is that PSSR seems to cost a lot of frametime, so it barely boosts performance:

- Upscaling from 720p to 1440p with DLSS costs about as much as rendering native 720p

- Upscaling from 720p to 1440p with PSSR seems to cost almost as much as rendering native 1440p

That's not true at all. While PSSR seems a bit heavier to run than DLSS, it is hardly anywhere near native. And upscaling with DLSS is not free either.
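The argument here boils down to how many milliseconds the upscaler adds on top of the internal-resolution render. A minimal sketch of that arithmetic follows. Every frame time in it is a made-up placeholder, not a measurement of DLSS or PSSR, and the function names are hypothetical.

```python
def fps_with_upscaler(internal_render_ms: float, upscale_cost_ms: float) -> float:
    """Frame rate when a frame costs the internal render plus the upscale pass."""
    return 1000.0 / (internal_render_ms + upscale_cost_ms)


def speedup_over_native(native_ms: float, internal_render_ms: float,
                        upscale_cost_ms: float) -> float:
    """Ratio of upscaled frame rate to native-output frame rate."""
    return native_ms / (internal_render_ms + upscale_cost_ms)


# Hypothetical numbers only: 16 ms at native output resolution,
# 8 ms at the lower internal resolution.
native_ms = 16.0
internal_ms = 8.0

for label, cost_ms in [("cheap upscaler", 2.0), ("expensive upscaler", 6.0)]:
    fps = fps_with_upscaler(internal_ms, cost_ms)
    gain = speedup_over_native(native_ms, internal_ms, cost_ms)
    print(f"{label}: {fps:.0f} fps, {gain:.2f}x native")
```

With these placeholder values the cheaper pass gives a much larger gain than the expensive one, so the real question is what each upscaler actually costs per frame on the hardware in question.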

For Alan Wake 2, Remedy used primitive shaders on PS5 like they use mesh shaders on XSX or PC. As far as we know this was the first game that had that.

They also created a super specific version of the game for RT on Pro; you can't use the settings they used on PC.

Poor performing? Maybe. Shit port? No, it took them months according to them.

For comparisons with PC we only have AW2:

ER:

No one other than DF knows how to do a proper comparison between console and PC: they use the same settings, resolution, scene (obviously), etc. Sadly, they are not interested in doing comparisons with the Pro for some reason.

In YOUR opinion this game is a good port.

It isn't, look how it was on day one - complete garbage:

It took them months to fix basic issues. But performance wasn't that much fixed.

Now look at Horizon Zero Dawn Port on PC: https://www.tomshardware.com/featur...pc-benchmarks-performance-system-requirements

Shit performance on Pascal GPUs. You could say "fuck users of old hardware, the game is optimized for the newest one!" Look what happened a few months later:

Pascal GPUs didn't get any better in the meantime... They actually optimized their game. Sony games not ported by Nixxes usually have not-so-great performance on PC, other than Days Gone and Returnal, but those two were UE4.

We're not talking about a buggy launch though. You were talking about today. There are updates to games, drivers and the OS that improve performance. TLOU was particularly buggy at launch before ND took over and fixed it. That's not what we're on about though.

The RX6600XT has just 8GB of VRAM though vs the PS5s 16GB. It has 256GB/s memory bandwidth vs the 448GB/s memory bandwidth of the PS5. It has 32 CUs vs 36CUs on the PS5. It's not equivalent to a PS5 just because of its TF value.
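A quick sketch of the spec gaps using only the figures quoted above (448 vs 256 GB/s, 36 vs 32 CUs). The helper name `percent_more` is made up, and this ignores the fact that the console shares its bandwidth with the CPU.

```python
def percent_more(a: float, b: float) -> float:
    """Percentage by which a exceeds b."""
    return (a / b - 1.0) * 100.0


# Figures as quoted in the post above.
ps5_bandwidth_gbs, rx6600xt_bandwidth_gbs = 448.0, 256.0
ps5_cus, rx6600xt_cus = 36, 32

print(f"bandwidth: PS5 has {percent_more(ps5_bandwidth_gbs, rx6600xt_bandwidth_gbs):.1f}% more")
print(f"CUs: PS5 has {percent_more(ps5_cus, rx6600xt_cus):.1f}% more")
```

So on paper the gap in bandwidth is far larger than the gap in CU count, which is the point being made about TF values alone.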

It's easier to use fewer CUs running at higher frequencies, so just because the 6600XT has fewer CUs doesn't mean it's slower (it even has more flops than the PS5 GPU, not by much but still). As for memory bandwidth, keep in mind consoles share memory bandwidth between components. In many games the RX6600XT can match the PS5 framerate if you only adjust some settings to fit into 8GB of VRAM. The RX6600XT has comparable performance to the RTX2070 Super, and this Nvidia card can also match PS5 settings in a lot of games (NXGamer used an RTX2070 Super in his PS5 comparisons).

The PS5 equivalent is the 6700 though, not the 6600 XT. Sure, the CPU and GPU share bandwidth on consoles, but come on, the PS5 has 75% more bandwidth. Shared or not, the PS5's GPU has access to far, far more bandwidth.

The RX6700 has 36CUs like PS5, but keep in mind it also has 1TF more.
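For anyone wondering where these TF figures come from, here is a rough sketch of the usual RDNA2 arithmetic: compute units times 64 FP32 lanes times 2 FLOPs per fused multiply-add times clock. The clock speeds below are assumptions taken from commonly quoted peak/boost figures, not numbers stated in this thread, and real cards rarely hold those clocks all the time.

```python
# Rough FP32 throughput for an RDNA2 GPU.
# Assumption: 64 FP32 lanes per CU and 2 FLOPs per lane per clock (one FMA).

def rdna2_tflops(compute_units: int, clock_ghz: float) -> float:
    lanes_per_cu = 64      # FP32 ALUs per RDNA2 compute unit
    flops_per_lane = 2     # a fused multiply-add counts as 2 FLOPs
    return compute_units * lanes_per_cu * flops_per_lane * clock_ghz / 1000.0

# (compute units, assumed peak clock in GHz); clocks are illustrative assumptions
gpus = {
    "PS5": (36, 2.23),
    "RX6600XT": (32, 2.589),
    "RX6700": (36, 2.45),
    "RX6700XT": (40, 2.581),
    "XSX": (52, 1.825),
}

for name, (cus, clock) in gpus.items():
    print(f"{name}: {rdna2_tflops(cus, clock):.2f} TF")
```

With those assumed clocks the RX6700 lands roughly 1TF above the PS5 despite the same 36 CUs, purely because of the higher clock.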

Xbox Series X with its 12TF can match the RTX2080 in raster (Gears 5), but the PS5 is certainly slower, yet some people on this forum compare the PS5 GPU to the 6700XT, even though this AMD card has 13.2TF and is even faster than the 2080 Super.

Isn't there some kind of site policy against shamelessly spamming laughing emojis at purely technical posts presenting data? It's becoming quite an annoying, pitiful sight.

Believe it or not, I asked about it because some posters spent their time spamming laughing emojis at posts that didn't fellate their favored platforms, and the mods told me nothing could be done about it. I just ignore them.

In which realm of reality is the PS5 "certainly slower than XSX" in rasterisation? That is not true either by spec or by actual results.

No AMD card on PC is truly equivalent to the PS5's custom-made GPU. All RDNA2 cards on the PC platform have some differences: the 6600XT has less memory bandwidth, while the RX6700 has 1TF more than the PS5.

That 1 extra TFLOP translates to 10% more compute. The 6700 (non-XT) also has 352GB/s of bandwidth + Infinity Cache. That's much closer in terms of specs than the 6600 XT and, as per DF, the PS5 and 6700 perform within 5% of each other almost every time. The 6600 XT will keep up so long as its bandwidth or VRAM aren't stretched, but when they are, it falls apart. I wouldn't use a GPU with such an enormous bandwidth deficit to prove that the game isn't optimized on PC. It's a game targeting 448GB/s of shared bandwidth.
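The two percentages thrown around here (75% more bandwidth, 10% more compute) are simple ratios. A quick sketch, using the 448GB/s and 256GB/s figures quoted in the thread and an assumed 10.28TF for the PS5 (36 CUs at 2.23GHz):

```python
def percent_more(a: float, b: float) -> float:
    """How much larger a is than b, as a percentage of b."""
    return (a - b) / b * 100.0

# Memory bandwidth in GB/s, as quoted in the thread
ps5_bandwidth = 448.0
rx6600xt_bandwidth = 256.0
print(f"PS5 vs 6600XT bandwidth: +{percent_more(ps5_bandwidth, rx6600xt_bandwidth):.0f}%")

# Compute: PS5 figure is an assumption (36 CUs at 2.23GHz), the +1TF is from the thread
ps5_tf = 10.28
rx6700_tf = ps5_tf + 1.0
print(f"6700 vs PS5 compute: +{percent_more(rx6700_tf, ps5_tf):.1f}%")
```

The bandwidth gap works out to exactly 75%, and 1TF on top of roughly 10.3TF is a bit under 10%, so both round numbers in the post hold up.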

Sure, but when you're talking about the "max settings" test of TLOU that you're referring to on TechPowerUp, you end up in a situation where the 8GB of memory at 256GB/s is getting thrashed.

You can lower settings in the TLOU remake too. The "max" settings require more than 8GB of VRAM and higher bandwidth, so like for like you end up at 34fps due to the 6600XT's weak memory. When you lower the settings to suit the card you get respectable visuals and framerates above 60fps:

Because of real-world performance and not TF, I assume.

Of course, TLOU isn't optimized for PC at all. The GPU performance hasn't dramatically changed since launch. They made significant improvements to the CPU, but GPU-wise, it's similar. Most of the updates were to fix the bugs such as memory leaks or the terrible textures.

Improvements on the CPU side that helped benchmarks running at 1080p, which were not really held back by the GPU anyway.

Huh? So all PC games are unoptimized now? People are not talking about TLOU specifically when discussing GPU equivalents; they're talking about real-world performance in games in general.

Real world performance? You mean when the PC game is not optimised. The RTX2080 Super, not to mention the RTX3060 Ti, offers better performance than the PS5 in well-optimised raster games, let alone in RT games. The RX6700XT (13.2TF) is even faster than both the 2080S and the 3060 Ti, at least in raster. If people really believe that the PS5 GPU offers 6700XT performance, then what about the XSX, which is even faster than the PS5 GPU? Would the XSX GPU be a 6750XT?

TFLOPS is just one of the specifications, and many other factors are involved in the benchmark.

Of course, not all PC games are unoptimised, but TLOU is the most unoptimised example of a PS5 port you could ask for. Even Digital Foundry cannot explain such a massive performance difference between the PC port and the PS5 version.

Did you even look at the screenshots you posted? In Alan Wake 2 for example:

DF has evaluated both the PS5 and Xbox Series X as being in the RTX2080 class (Gotham Knights: PS5 & XSX, Death Stranding PS5 ver., etc.).

PS5 vs XSX
CPU: 3.5GHz vs 3.6GHz (+2.3% for XSX)
RAM: 448GB/s vs 336GB/s + 560GB/s (+22% PS5 on 3.5GB and +22% XSX on 10GB)
SHADER COMPUTE: 10.2TF vs 12.2TF (+16.7% XSX)
GPU CLOCK (tied to the speed of all GPU caches and other components in the GPU): 2.23GHz vs 1.8GHz (+21% PS5)
GPU TRIANGLE RASTER (billions/s): 8.92 vs 7.3 (+20% PS5)
GPU CULLING RATE (billions/s): 17.84 vs 14.6 (+20% PS5)
GPU PIXEL FILL RATE (Gpixels/s): 142 vs 116 (+20% PS5)
GPU TEXTURE FILL RATE (Gtexels/s): 321 vs 379 (+16% XSX)
SSD RAW: 5.5GB/s vs 2.4GB/s (+78% PS5)
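Most of the GPU rows in that comparison are just clock speed multiplied by a fixed per-clock unit count, which is why the higher-clocked PS5 leads everywhere except texture fill. Below is a rough sketch of that arithmetic. The per-clock counts (4 triangles rasterised, 8 culled, 64 ROPs, 4 texture units per CU) and the 1.825GHz XSX clock are my assumptions, chosen because they reproduce the listed numbers; the post itself doesn't state them. The bracketed percentages for the triangle, fill rate and SSD rows also look like differences relative to the average of the two values rather than relative to the smaller one, which is my reading and not something the post says.

```python
# Peak-rate arithmetic for the two console GPUs.
# Assumed per-clock figures (not stated in the thread): 4 triangles rasterised,
# 8 triangles culled, 64 ROPs, and 4 texture units per CU.

def peak_rates(compute_units: int, clock_ghz: float) -> dict:
    return {
        "triangles_bn_s": 4 * clock_ghz,
        "culling_bn_s": 8 * clock_ghz,
        "pixel_fill_gpix_s": 64 * clock_ghz,
        "texture_fill_gtex_s": compute_units * 4 * clock_ghz,
    }

def percent_diff_vs_mean(a: float, b: float) -> float:
    """Difference between a and b as a percentage of their average."""
    return abs(a - b) / ((a + b) / 2) * 100.0

ps5 = peak_rates(36, 2.23)
xsx = peak_rates(52, 1.825)   # 1.825GHz assumed; the list above rounds it to 1.8GHz

for key in ps5:
    print(f"{key}: PS5 {ps5[key]:.2f} vs XSX {xsx[key]:.2f} "
          f"({percent_diff_vs_mean(ps5[key], xsx[key]):.1f}% apart)")

# SSD raw throughput in GB/s, as listed above
print(f"SSD: {percent_diff_vs_mean(5.5, 2.4):.1f}% apart")
```

Measured against the smaller value instead, the SSD gap would be about 129% rather than 78%, so it matters which convention a given post is using.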

DF pretend things are inexplicable all the time, and it's easy to see who buys into their talking points. When there are other forms of "poor optimisation" (i.e. Starfield launching at 30fps), they "explain" that it's because of how great the game is. Then performance improvements like the 60fps patch launch months and months later. You don't see Bojji, Senua and co using a performance "then and now" to prove how "idiotic" their games are.

You are funny sometimes.

Starfield was CPU limited as fuck and still is, some areas drop to ~40-something FPS:

You see why developers locked it to 30fps at launch? I always said this game should have an "unlocked VRR" option, but this arrived many months later. DF always said this game is CPU limited, and all PC players could tell this as well.

How did I know you'd come to its and their defence without knowing what you're arguing against? You're just proving the point here.

You're explaining the reason why it was limited. I gave you explanations of why, on a 6600XT specifically at max settings, TLOU is limited mostly by its memory and IO. The difference, and the point that you completely missed, is that DF often "explain" away poor optimisation as how great or revolutionary the game is. Yet they usually treat poor or lower performance on other hardware (e.g. Xbox, or the 6600XT having poor memory performance and TLOU being memory limited) as "inexplicable". One makes you think it's your hardware's fault, the other makes you think it's the game's, and you follow those talking points blindly.

DF overhypes many Sony and MS games, this is nothing new.

The Last of Us is mostly limited by the game's inability to fully use GPU power, not exactly by other technical metrics; it's also very VRAM hungry (much more so at launch). You can even see this in the lower than usual GPU power draw. A very similar game in that respect is AC Valhalla on PC, especially on Nvidia hardware.

When I looked at VRAM usage in TLOUR it was around 9-10GB at 4K. This game has very sharp textures and high quality assets, so I think the VRAM requirements are justified. I've seen games that can use 14-15GB of VRAM at 4K, the Crysis 2 remaster for example.

So you think there isn't an actual technical reason and it's just not able for no reason? Why do you ignore results in this particular case?

When SAM is enabled, you get much better performance, and when you go from the 6700XT to the 6800XT you get 48% better performance. "It's just not able" is not really true.