- Status

- Not open for further replies.

TLZ

Banned

I don't think 499 would be a problem if it reflected a premium product. The Xbone at 499 came with a forced accessory nobody asked for and a weaker console. It wasn't worth that price for many when you could get the more powerful PS4 for $100 less. 499 means it has to be worthy of its price to the customer.

xPikYx

Member

> I don't think 499 would be a problem if it reflected a premium product. The Xbone at 499 came with a forced accessory nobody asked for and a weaker console. It wasn't worth that price for many when you could get the more powerful PS4 for $100 less. 499 means it has to be worthy of its price to the customer.

Honestly, whatever the price, none of the leaks so far are very encouraging... and for sure a 499 console will never be that amazing, especially in a market where GPU costs have increased drastically compared to 8 years ago.

Munki

Member

WAIT DOES THIS MEAN ONE PLATFORM HAS HBM???

That has Scarlett written all over it.

(I'm joking)

ArabianPrynce

Member

> That has Scarlett written all over it.
>
> (I'm joking)

Goddamn if Sony has this then the 10+ TFLOP dream may be true lmao

CyberPanda

Banned

> I wonder if we'll get $499 not because powerful, but because SSD.
>
> Prices on SSD are plummeting, but they still are way more expensive than spinning HDD

Oh SSD is probably one of the most expensive things in each system for sure.

Kazekage1981

Member

ps5 and scarlett need wifi 6 minimum

Hope they get Bluetooth 5.0, 5g, and USB 3.1 gen 2

Munki

Member

> Goddamn if sony has this then the 10+tflop dream maybe true lmao

At this point, I think that dream is pretty much shut down.

LordOfChaos

Member

> ps5 and scarlett need wifi 6 minimum
>
> Hope they get Bluetooth 5.0, 5g, and USB 3.1 gen 2

The PS4's wifi was such shit until the Slim/Pro, they cheaped out on that implementation hardcore. Hope they don't do that again, but the minimum hardware standard now probably keeps us safe from that (we'd at least have 5GHz AC)

I also had some problems with the 2.4GHz Bluetooth the DS4 used, but the chipset got better by the lightstrip models, even though it's on the same old Bluetooth version (2.1, even though the PS4 side supports more). If wifi direct or something else would yield some benefit like higher resolution inputs or lower latency that would be bonus points.

MS gave an outline of their plans on the software side at E3. Obviously leading with Halo.

Sony still has big hitters like TLOU2, Ghost and more? that need full reveals and release dates. I can't see all of that stuff being bundled into a single event. The PS4 titles will get their time in the sun first and then the PS5 will be detailed in a later event once the decks are clear. Got to milk those free column inches for all they're worth...

It won't be a single event, to keep the console in people's minds till fall 2020. February hardware reveal without the final box. Then a direct during E3 week with final design, price, date and games. A repeat of last generation, except with a direct instead of an E3 stage show. Then between E3 and launch comes the build-up of ads and other game shows with hands-on for press and gamers.

Bryank75

Banned

> At this point, I think that dream is pretty much shut down.

Not really, from what I understand the new architecture is more efficient and each TF is not equivalent to the old one. So 9.5 TF on the new hardware would be equal to 11.5 or nearly 12 TF of the old chips.

Anyway, I'd wait for PlayStation to say... this is all speculation.

StreetsofBeige

Member

> Apart from all these speculations, the only thing we can state is that the Halo Infinite trailer was a far cry from what I expect from next gen; those monsters that Xbox Scarlett eats for breakfast must be little puppies

Yup.

Next gen systems will have improved graphics. But at this rate, I don't think I'll ever see photorealistic visuals in my lifetime.

Some things that looked kind of close is that Unreal Engine 3 Samaritan demo from 2011. Ya, 8 years ago on UE3.

It's now 2019, people have moved to UE4, have consoles and PCs that are probably 5-10x the power, and whatever is churned out still doesn't look as good as that 8 year old demo.

Who knows. Maybe Scarlett 6 and PS10 in the year 2050 using UE12 will bring lifelike graphics.

CyberPanda

Banned

> Yup.
>
> Next gen systems will have improved graphics. But at this rate, I don't think I'll ever see photorealistic visuals in my lifetime.
>
> Some things that looked kind of close is that Unreal Engine 3 Samaritan demo from 2011. Ya, 8 years ago on UE3.
>
> It's now 2019, people have moved to UE4, have consoles and PCs that are probably 5-10x the power, and whatever is churned out still doesn't look as good as that 8 year old demo.
>
> Who knows. Maybe Scarlett 6 and PS10 in the year 2050 using UE12 will bring lifelike graphics.

I found the solution!

xPikYx

Member

> Yup.
>
> Next gen systems will have improved graphics. But at this rate, I don't think I'll ever see photorealistic visuals in my lifetime.
>
> Some things that looked kind of close is that Unreal Engine 3 Samaritan demo from 2011. Ya, 8 years ago on UE3.
>
> It's now 2019, people have moved to UE4, have consoles and PCs that are probably 5-10x the power, and whatever is churned out still doesn't look as good as that 8 year old demo.
>
> Who knows. Maybe Scarlett 6 and PS10 in the year 2050 using UE12 will bring lifelike graphics.

I think you're overestimating that Samaritan demo. I remember it too and I was impressed back in 2011, but nowadays many games look better (especially Sony's first-party titles)

Here are a few examples

But yeah, the real thing is companies are pushing too much towards resolution, wasting important hw resources which could be used to improve other aspects of a 3D scene, and if you think about it you can't even notice the difference lol. Try to play whatever game on a 27 inch screen and tell me you can clearly see the difference between a 1080p rendered scene and a 4K rendered scene, apart from the fps drop hahaha... Try using those resources to render a proper scene with ray tracing instead of rasterized lighting and I bet you could tell me the difference at whatever resolution

Negotiator

Banned

Detroit Become Human was also pretty impressive and Uncharted 4 in the cutscenes.

Samaritan is no longer the benchmark.

TeamGhobad

Banned

i think 449 is a good sweet spot for price. something inbetween lockhart and anaconda. i of course prefer 499 but this thing needs to be mass market.

> Not really, from what I understand the new architecture is more efficient and each TF is not equivalent to the old one. So 9.5 TF on the new hardware would be equal to 11.5 or nearly 12 TF of the old chips.
>
> Anyway, I'd wait for PlayStation to say... this is all speculation.

Considerably more. 9.5TF ~ 13+TF. 8.5TF would be ~12TF (I think even a bit more).
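For reference, the per-TF conversion factors implied by the figures in the last few posts can be worked out directly. This is only a sketch of the posters' arithmetic: the TF pairs are the speculative numbers from the thread, not confirmed specs, and the name `implied_uplift` is made up for illustration.

```python
# Speculative pairs from the thread:
# (new-architecture TF, claimed old-architecture equivalent TF).
# None of these are confirmed hardware specs.
claims = {
    "9.5 TF ~ 11.5 TF": (9.5, 11.5),
    "9.5 TF ~ 12 TF": (9.5, 12.0),
    "9.5 TF ~ 13 TF": (9.5, 13.0),
    "8.5 TF ~ 12 TF": (8.5, 12.0),
}


def implied_uplift(new_tf: float, old_tf: float) -> float:
    """Per-TF efficiency factor that a claimed equivalence implies."""
    return old_tf / new_tf


for label, (new_tf, old_tf) in claims.items():
    print(f"{label}: x{implied_uplift(new_tf, old_tf):.2f} per TF")
```

Read this way, the first estimate assumes each new TF is worth roughly 1.2 to 1.3 old ones, while the "considerably more" estimate assumes roughly 1.4.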

Insane Metal

Member

DeeDogg_

Banned

> Nah id be really surprised if ps5 was more powerful. As i was told by someone with much knowledge on subject, Xbox will be at very least same or comparable power. So maybe Xbox does the feb reveal ?

"i wAs ToLd bY sOmeOne!!"

LordOfChaos

Member

I'm rooting for the underdog here but I press doubt on this headline, Nvidia hasn't felt the need to drop 7nm Ampere to compete with this yet, just spec bumps and price drops on 12nm FFN. If I were to guess how this goes it's that AMD continues to drive value through competition through 2019 but Ampere bounds away on total performance again, unless RDNA 2.0 has another massive IPC pull in the cards.

SonGoku

Member

> I think both will be 7nm. Problem with consoles is design has to be "locked" years in advance, therefore ever increasing amount of "+" nodes cannot be correctly pin pointed

That's not how it works... the number of EUV layers depends on the process: 7nm EUV, 6nm, 5nm etc

> Therefore, I think they made their bed years ago when they started to design for 7nm node where both, Zen2 and Navi+, are designed upon.

7nm EUV spec was done in 2018 (3 years before launch), nothing prevents them from having 7nm+ given the timelines

RDNA2 being designed on 7nm+ supports the idea of going with a 7nm+ design

Zen2 is a 7nm design, true, but Zen3 on 7nm+ already laid the groundwork for porting Zen2

> well known source "Digitime" has said consoles are going to be on TSMC 7nm.

link?

ArabianPrynce

Member

> That's not how it works... the number of EUV layers depends on the process: 7nm EUV, 6nm, 5nm etc
>
> 7nm EUV spec was done in 2018 (3 years before launch), nothing prevents them from having 7nm+ given the timelines
>
> RDNA2 being designed on 7nm+ supports the idea of going with a 7nm+ design
>
> Zen2 is a 7nm design, true, but Zen3 on 7nm+ already laid the groundwork for porting Zen2
>
> link?

i guess its this

AMD 7nm chips for next-generation PlayStation to be ready in 3Q20

AMD's 7nm CPU and GPU are expected to be adopted by Sony in its next-generation PlayStation and the processors are estimated to be ready in the third quarter of 2020 for the games console's expected release in the second half of 2020, according to industry sources.

www.digitimes.com

vpance

Member

> link?

Digitimes is not a reliable source for leaks. At least not for consoles.

SonGoku

Member

> i guess its this
>
> AMD 7nm chips for next-generation PlayStation to be ready in 3Q20
>
> AMD's 7nm CPU and GPU are expected to be adopted by Sony in its next-generation PlayStation and the processors are estimated to be ready in the third quarter of 2020 for the games console's expected release in the second half of 2020, according to industry sources.
>
> www.digitimes.com

7nm EUV is still 7nm with EUV layers...

Chips being on 7nm is not conclusive of anything other than the 7nm process node, it could be 7nm DUV or 7nm EUV

> 7nm chips for next-generation PlayStation to be ready in 3Q20

If anything this fits perfectly with 7nm EUV, i would think 7nm DUV chips would be much earlier than that.

3Q20 is cutting it pretty close for a Q4 launch, it might slip into 2021 Q1

Assuming the info is right that is.

Negotiator

Banned

PS4 Super Slim (7nm APU) will most likely release at the end of this year (OG PS4 28nm/2013 -> PS4 Slim 16nm/2016 -> PS4 Super Slim 7nm 2019).

That will give us an indication of what kind of process they have used. It might use Samsung's 7nm EUV, especially if it allows Sony to add a cheaper cooling system.

But then again, it's a small die (110mm2), so it might not need it.

Tarin02543

Member

Since Sony is aiming for the hardcore gamers now, instead of a d pad I want a mini arcade thumbstick, like the neogeo cd pad.

Chronos24

Member

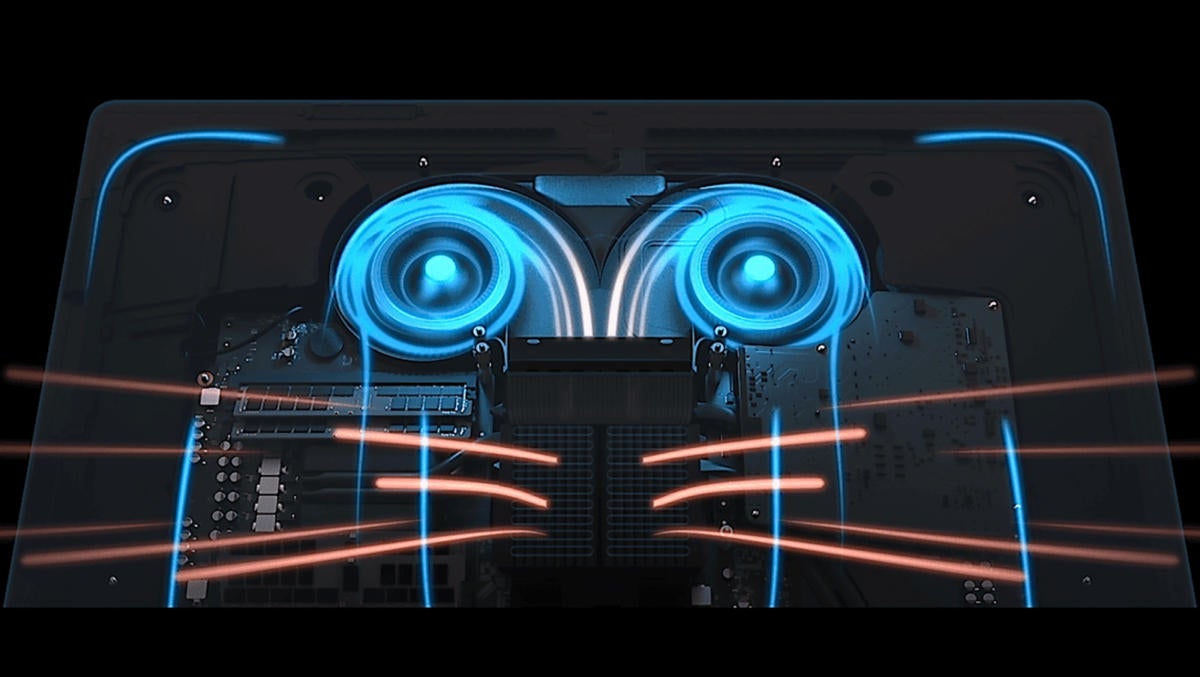

On a much smaller node than what PS4 is, what do you guys think the thermals are going to be like? I'm sure everyone remembers all the PS4s taking off like jets, especially the earlier models of the OG and the Pro. I would say that, on par with the power we think/hope/expect these new consoles to have, they have to be kept cool, and I feel that should be close to the top of the concerns for the next consoles. Nothing ruins an experience more than the noise coming from your console being louder than the game itself. I know remedies like headphones and just turning up the volume can drown out the noise but it's still something that needs to be heavily addressed going into the next gen. What do you guys think? What would be a viable cooling solution that wouldn't break the bank but not cause the system to fly off the shelf?

SonGoku

Member

> But then again, it's a small die (110mm2), so it might not need it.

But then again considering this is the last PS4 shrink might as well go with 7nm EUV to maximize cost reductions: smaller die and power reductions (less cooling required).

If they ditched HDD and used flash memory (like the Super Slim PS3) they could even make a small case design reminiscent of PS2 slim priced at $199 and...

Super slim just funded launch PS5 losses, not to mention the benefit of bringing new users to the PlayStation ecosystem that might buy a PS5 later on.

> What do you guys think? What would be a viable cooling solution that wouldn't break the bank but not cause the system to fly off the shelf?

Vapor chamber or a 3 fan cooler design similar to AIB GPU coolers

LordOfChaos

Member

> On a much smaller node than what PS4 is, what do you guys think the thermals are going to be like? I'm sure everyone remembers all the PS4s taking off like jets, especially the earlier models of the OG and the Pro. I would say that, on par with the power we think/hope/expect these new consoles to have, they have to be kept cool, and I feel that should be close to the top of the concerns for the next consoles. Nothing ruins an experience more than the noise coming from your console being louder than the game itself. I know remedies like headphones and just turning up the volume can drown out the noise but it's still something that needs to be heavily addressed going into the next gen. What do you guys think? What would be a viable cooling solution that wouldn't break the bank but not cause the system to fly off the shelf?

I think a lot of the jet engine models actually had bad thermal paste applications, right? By the CUH-1200 it stayed fairly silent apart from standout titles (doom, GoW4) that really pushed it, and that was without a die shrink, just halving the number of GDDR5 chips that decreased total system power, and addressing the factory thermal paste applications.

While creative cooling would be interesting I actually don't think it needs to be all that exotic to manage more watts than the first PS4, more heat fins and possibly a second fan don't cost all that much, but do impact internal volume and weight for Sony shipping logistics.

The CUH-1000 used about 150W in game? I wonder if there's anything that could be learned from gaming laptop cooling design, rather than one fan blowing through one heatsink, use heat pipes to break that into two cooling endpoints, two fans at lower RPM vs one at higher.
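The "two fans at lower RPM vs one at higher" idea can be sanity-checked with the usual fan-law rules of thumb. This is a rough sketch only. It assumes identical fans, airflow proportional to RPM, noise changing by about 50·log10 of the speed ratio, and N equal sources adding 10·log10(N) dB. Real heatsinks and enclosures will deviate from this, and the function names are illustrative.

```python
import math


def relative_airflow(rpm_ratio: float, fan_count: int = 1) -> float:
    """Idealized total airflow relative to one fan at full speed."""
    return rpm_ratio * fan_count


def noise_delta_db(rpm_ratio: float, fan_count: int = 1) -> float:
    """Approximate noise change (dB) relative to one fan at full speed."""
    speed_term = 50 * math.log10(rpm_ratio)   # fan-law rule of thumb
    count_term = 10 * math.log10(fan_count)   # adding equal noise sources
    return speed_term + count_term


# Two fans at half speed versus one fan at full speed.
print(relative_airflow(0.5, 2))
print(round(noise_delta_db(0.5, 2), 1))
```

Under these idealized assumptions, two fans at half speed move the same air as one at full speed while being about 12 dB quieter, which is the intuition behind splitting the cooling across two endpoints.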

Negotiator

Banned

> But then again considering this is the last PS4 shrink might as well go with 7nm EUV to maximize cost reductions: smaller die and power reductions (less cooling required).
>
> If they ditched HDD and used flash memory (like the PS3) they could even make a small case design reminiscent of PS2 slim priced at $199 and...
>
> Super slim just funded launch PS5 losses, not to mention the benefit of bringing new users to the PlayStation ecosystem that might buy a PS5 later on.

Hmmm, economies of scale are indeed a thing.

Maybe it would also be possible to use 4 DRAM chips (GDDR6 2GB is in mass production by Samsung) instead of 8 chips (GDDR5 1GB) for further cost reductions?

AnandTech Forums: Technology, Hardware, Software, and Deals

I'm assuming this could help Sony by allowing them to order higher amounts of GDDR6 chips for both PS4 SS and OG PS5 and thus reducing costs.

Of course this is all speculation on my part, but there's a historical precedent with RSX 28nm (they switched from GDDR3 to GDDR5):

Meanwhile, Microsoft is still stuck at using 16 DRAM chips (DDR3 512MB), even on XB1 Slim.

SonGoku

Member

> The CUH-1000 used about 150W in game? I wonder if there's anything that could be learned from gaming laptop cooling design, rather than one fan blowing through one heatsink, use heat pipes to break that into two cooling endpoints, two fans at lower RPM vs one at higher.

Would 3 fans add that much cost compared to a Vapor Chamber solution?

300W GPUs stay pretty chill and silent using those 2-3 fans + big heatsink designs

> I'm assuming this could help Sony by allowing them to order higher amounts of GDDR6 chips for both PS4 SS and OG PS5 and thus reducing costs.

That's a really good observation: reduce heat/power all the while bringing costs down for PS4/5 using economies of scale.

> Meanwhile, Microsoft is still stuck at using 16 DRAM chips (DDR3 512MB), even on XB1 Slim.

Can't they use higher density DDR4 chips?

Negotiator

Banned

> Cant they use higher density DDR4 chips?

Probably, but you would need to keep the memory bandwidth the same (68.3 GB/s).

Is that possible by using 8 DDR4 chips?
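One way to check the question above: peak bandwidth is bus width times per-pin data rate, divided by 8. The sketch below assumes x16 chips (16 data pins each, so 8 chips give a 128-bit bus) and DDR3-2133 on a 256-bit bus for the existing Xbox One. The helper names are illustrative.

```python
def peak_bandwidth_gb_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak bandwidth in GB/s for a given bus width and per-pin data rate."""
    return bus_width_bits * data_rate_gbps / 8


def required_data_rate_gbps(target_gb_s: float, bus_width_bits: int) -> float:
    """Per-pin data rate needed to reach a target bandwidth on a given bus."""
    return target_gb_s * 8 / bus_width_bits


# Assumed current layout: 16 x16 DDR3 chips at 2.133 Gbps per pin.
xb1_bandwidth = peak_bandwidth_gb_s(16 * 16, 2.133)

# Hypothetical layout: 8 x16 DDR4 chips, i.e. a 128-bit bus.
needed_rate = required_data_rate_gbps(xb1_bandwidth, 8 * 16)

print(f"{xb1_bandwidth:.1f} GB/s")
print(f"{needed_rate:.2f} Gbps per pin")
```

Under these assumptions, 8 chips would need about 4.27 Gbps per pin, which is above DDR4-3200 (3.2 Gbps), the fastest standard JEDEC DDR4 speed grade, so halving the chip count would also need overclocked parts or a different bus arrangement.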

LordOfChaos

Member

> Would 3 fans add that much cost compared to a Vapor Chamber solution?
>
> 300W GPUs stay pretty chill and silent using those 2-3 fans + big heatsink designs

Those three fan GPU coolers are open air ones, they push heat into the case and rely on you also having case exhaust. A console would need a blower style fan that actually directs air out in one direction so it's a bit different than GPU boards, but it could have two of them breaking things into two thermal zones like I suggested learning from gaming laptops

Top is open air which spits hot air everywhere, bottom is a blower that pushes air out, top gaming laptop uses two blowers

SonGoku

Member

> Probably, but you would need to keep the memory bandwidth the same (68.3 GB/s).
>
> Is that possible by using 8 DDR4 chips?

Good point!

PS4 bus would also drop to 128bit bringing further reductions

Four 2GB GDDR6 at 14Gbps nets 224GB/s, so they would down-clock these

Negotiator

Banned

> Good point!
>
> PS4 bus would also drop to 128bit bringing further reductions
>
> Four 2GB GDDR6 at 14Gbps nets 224GB/s they would down-clock these

Yeap, 11 Gbps seems to yield exactly 176 GB/s.
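The GDDR figures in this exchange follow from bus width times per-pin data rate, divided by 8. The sketch below assumes 32 data pins per GDDR5/GDDR6 chip and 5.5 Gbps GDDR5 on the existing PS4. The function name is illustrative.

```python
def peak_bandwidth_gb_s(chips: int, pins_per_chip: int, data_rate_gbps: float) -> float:
    """Peak bandwidth in GB/s from chip count, pins per chip and per-pin rate."""
    bus_width_bits = chips * pins_per_chip
    return bus_width_bits * data_rate_gbps / 8


# Assumed existing PS4 layout: 8 GDDR5 chips on a 256-bit bus at 5.5 Gbps.
ps4_gddr5 = peak_bandwidth_gb_s(8, 32, 5.5)

# Hypothetical shrink: 4 GDDR6 chips on a 128-bit bus.
gddr6_full_speed = peak_bandwidth_gb_s(4, 32, 14)
gddr6_downclocked = peak_bandwidth_gb_s(4, 32, 11)

print(ps4_gddr5, gddr6_full_speed, gddr6_downclocked)
```

With those assumptions the numbers line up with the posts: 224 GB/s at 14 Gbps, and 176 GB/s at 11 Gbps, matching the existing 256-bit GDDR5 setup.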

Burrito Bandito

Banned

Alternatively, enjoy the warm up act for an upcoming mic drop.

How do you guarantee momentum and fast uptake?

Not with a high price...

Sony is in no position to take a huge loss on console sales. I dont mean financially, i mean strategically. They have no reason to. They have a massive part of the market secured already, theres no point for them. Taking a $100 loss on each console sold is something a company does out of desperation to steal market share from the dominating force. If anyone is gonna take a loss its MS and i doubt they will either

Negotiator

Banned

> Sony is in no position to take a huge loss on console sales. I dont mean financially, i mean strategically. They have no reason to. They have a massive part of the market secured already, theres no point for them. Taking a $100 loss on each console sold is something a company does out of desperation to steal market share from the dominating force. If anyone is gonna take a loss its MS and i doubt they will either

It's not "desperation". It's actually the traditional console business model:

Razor and blades model - Wikipedia

Sell the hardware for cheap and make a profit by selling games/subscriptions/accessories.

SpinningBirdKick

Banned

> Sony is in no position to take a huge loss on console sales. I dont mean financially, i mean strategically. They have no reason to. They have a massive part of the market secured already, theres no point for them. Taking a $100 loss on each console sold is something a company does out of desperation to steal market share from the dominating force. If anyone is gonna take a loss its MS and i doubt they will either

They have no reason to?

They're the current market leader with a huge customer base that is generating substantial profits for the whole corporation.

All the more reason why they will do everything in their power to maintain that position.

Low priced, high spec hardware and timed exclusives are exactly the weapons of choice I would use in their position.

Play this coming generation right and they snuff out a competitor. Why get sloppy now?

xool

Member

Hat trick post (sorry)

Let's take a moment to appreciate sane design that works - the OG XBOXONE cooling solution.

Reliable, whisper quiet, cheap, replaceable (they obv. didn't want a repeat of the RROD fiasco)

via https://www.ifixit.com/Guide/Xbox+One+Fan+Replacement/36730

That's how you do cooling. What worked last gen works today too.

SonGoku

Member

> Those three fan GPU coolers are open air ones, they push heat into the case and rely on you also having case exhaust

Could they design the console case like one big GPU with vents on the back, each side and top? Something similar to the OG XBONE design but smaller and with bigger vents

> A console would need a blower style fan that actually directs air out in one direction

I read up on the 2080 Ti's full-length vapor chamber, this would be perfect for consoles, any idea on costs?

> but it could have two of them breaking things into two thermal zones like I suggested learning from gaming laptops

Isn't that due to separate CPU-GPU dies though?

CrustyBritches

Gold Member

I agree the cooling on all 3 Xbox One models(XO, X1S, X1X) was great. I'd imagine the Xbox One X type setup will be used on both consoles. AMD put a blower setup with vapor chamber cooler on their new 180W and 225W cards(don't like that on PC since I'm not cramming it into a htpc/sff case). Will work just fine for sub-200W consoles, especially if they're ~175W like X1X.

SonGoku

Member

> AMD put a blower setup with vapor chamber cooler on their new 225W cards(don't like on PC, not cramming it into a htpc/sff case).

That's a good point, a full length vapor chamber would cool 200W+ comfortably

> Let's take a moment to appreciate sane design that works - the OG XBOXONE cooling solution.

a 200W+ system would require a bigger heatsink and at least two fans or full length vapor chamber to remain quiet and chill while minimizing hw failure

SonGoku

Member

> As much as I'm willing to spend 499 dollars I don't think the general consumer is. Anywhere between 399 and 449 is key next gen

First year $500 will do fine regardless, early adopters are the enthusiasts

> unless RDNA 2.0 has another massive IPC pull in the cards.

Even if IPC remains the same (on par with Pascal+), just adding more dCUs would be of huge benefit towards performance

LordOfChaos

Member

> Could they design the console case like one big GPU with vents on the back, each side and top? Something similar to the OG XBONE design but smaller and with bigger vents
>
> I read up on the 2080 Ti's full-length vapor chamber, this would be perfect for consoles, any idea on costs?
>
> Isn't that due to separate CPU-GPU dies though?

It doesn't have to be two chips for heat pipes to go multiple ways, one chip or two doesn't change anything there, it's just typical that dual chip laptops would have higher wattage to dissipate and do this. But one higher wattage APU could have heatpipes leading to two (or more) heatsinks just the same.

They could go the XBO route with an open air fan, I was assuming they would stay with blowing it out the back like the PS4. But if we're talking about multiple fans, I'm thinking it would be preferable to have them coordinating on airflow rather than creating more turbulence by each fan blowing everywhere? Apart from high wattage GPU coolers I think computer design always would rather go this way, both blowing the same way, it was even mentioned on the iMac Pro

SonGoku

Member

I've found these bits very interesting:

AMD Files Patent for Hybrid Ray Tracing Solution

AMD's stayed quiet about how it plans to support ray tracing, but a patent application published on June 27 offered a glimpse at what it's been working on.

www.tomshardware.com

AMD said that software-based solutions "are very power intensive and difficult to scale to higher performance levels without expending significant die area." It also said that enabling ray tracing via software "can reduce performance substantially over what is theoretically possible" because they "suffer drastically from the execution divergence of bounded volume hierarchy traversal."

AMD didn't think hardware-based ray tracing was the answer either. The company said those solutions "suffer from a lack of programmer flexibility as the ray tracing pipeline is fixed to a given hardware configuration," are "generally fairly area inefficient since they must keep large buffers of ray data to reorder memory transactions to achieve peak performance," and are more complex than other GPUs.

So the company developed its hybrid solution. The setup described in this patent application uses a mix of dedicated hardware and existing shader units working in conjunction with software to enable real-time ray tracing without the drawbacks of the methods described above.

This pretty much confirms the RT solution consoles are going with, and by extension RDNA2.

> But right now it seems like the company doesn't want to go the exact same route as Nvidia, which included dedicated ray tracing cores in Turing-based GPUs, and would rather use a mix of dedicated and non-dedicated hardware to give devs more flexibility.

Solution is very similar to what Panajev2001a theorized

I think this further supports the idea of consoles going with RDNA2: it's in AMD's best interest that both consoles support this, so that game developers optimize for AMD's RT solution, giving their cards an edge over Nvidia's

PS: Was a new thread made about this?
