I mean, you probably won't believe me and I really don't want a full back and forth, so I just ran your comment through ChatGPT:

What they get right (credit where due)

- Z-buffering moved to hardware very early

  - Yes: since the mid-to-late 90s (N64, PC 3D accelerators), depth and stencil testing have been fixed-function GPU hardware.

  - CPUs have not been doing per-pixel depth tests for real-time 3D games for decades.

- Hidden surface removal used to exist on the CPU

  - Early engines did CPU-side visibility (BSPs, portals, PVS, painter's algorithm).

  - Static BVHs / BSP trees are still used, but for culling, not depth resolution.

So far, all fine.

Where the argument goes off the rails

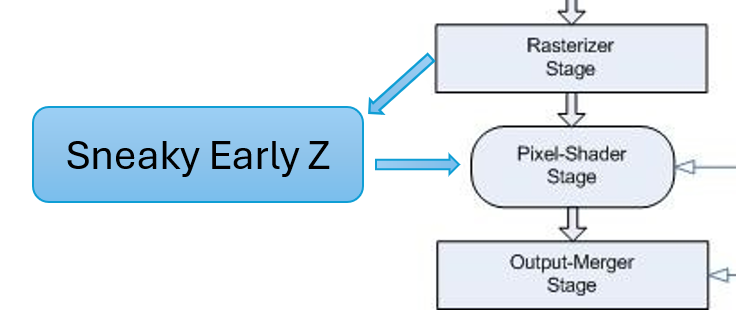

Mistake #1: Conflating z-buffering with CPU wait states

"So no, the zbuffer is still the wait state limiting factor for a CPU at lower resolution"

This is incorrect.

A CPU does not stall because the GPU is doing depth testing.

Modern (and even older) pipelines are:

- Asynchronous

- Deeply buffered

- Decoupled by command queues, fences, and frame latency

The CPU:

- Submits command buffers

- Advances simulation

- Prepares the next frame(s)

The GPU:

- Executes those commands later

- Performs depth tests internally

There is no CPU wait state tied to the z-buffer unless:

- You explicitly insert a GPU → CPU sync (readback, fence wait)

- Or the driver queue is completely full

Depth testing alone does not block the CPU.
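To make the "queue is full" case concrete, here is a minimal toy timeline in Python. It is only a sketch: `simulate`, `cpu_ms`, `gpu_ms`, and `max_queued` are made-up names, and a single fixed cost per frame is an assumption, not how any real driver schedules work. The GPU cost lumps everything together, depth testing included, and the CPU waits only when it is already `max_queued` frames ahead.

```python
def simulate(frames, cpu_ms, gpu_ms, max_queued):
    """Toy CPU/GPU timeline (hypothetical model, not a real driver).

    The CPU may run ahead by at most `max_queued` frames that the GPU
    has not finished yet. Returns (total CPU stall in ms, time in ms at
    which the GPU finishes the last frame).
    """
    if frames < 1 or max_queued < 1:
        raise ValueError("frames and max_queued must be at least 1")

    gpu_done = []    # GPU completion time of each submitted frame
    cpu_time = 0.0   # current time on the CPU timeline
    cpu_stall = 0.0  # total time the CPU spent waiting on a full queue

    for i in range(frames):
        # CPU work for this frame: simulation plus command recording.
        cpu_time += cpu_ms

        # Queue full: wait until frame (i - max_queued) is done on the GPU.
        if i >= max_queued and gpu_done[i - max_queued] > cpu_time:
            cpu_stall += gpu_done[i - max_queued] - cpu_time
            cpu_time = gpu_done[i - max_queued]

        # The GPU starts once the frame is submitted and the previous
        # frame is finished. Depth testing is part of gpu_ms; it has no
        # separate effect on the CPU timeline.
        previous_done = gpu_done[-1] if gpu_done else 0.0
        gpu_done.append(max(cpu_time, previous_done) + gpu_ms)

    return cpu_stall, gpu_done[-1]


cpu_bound = simulate(frames=100, cpu_ms=10.0, gpu_ms=4.0, max_queued=2)
gpu_bound = simulate(frames=100, cpu_ms=4.0, gpu_ms=10.0, max_queued=2)
print("CPU-bound stall (ms):", cpu_bound[0])
print("GPU-bound stall (ms):", gpu_bound[0])
```

With a 10 ms CPU frame and a 4 ms GPU frame the CPU never stalls. Swap the costs and it stalls on the full queue, which is a throughput limit of the GPU as a whole rather than anything specific to the z-buffer.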

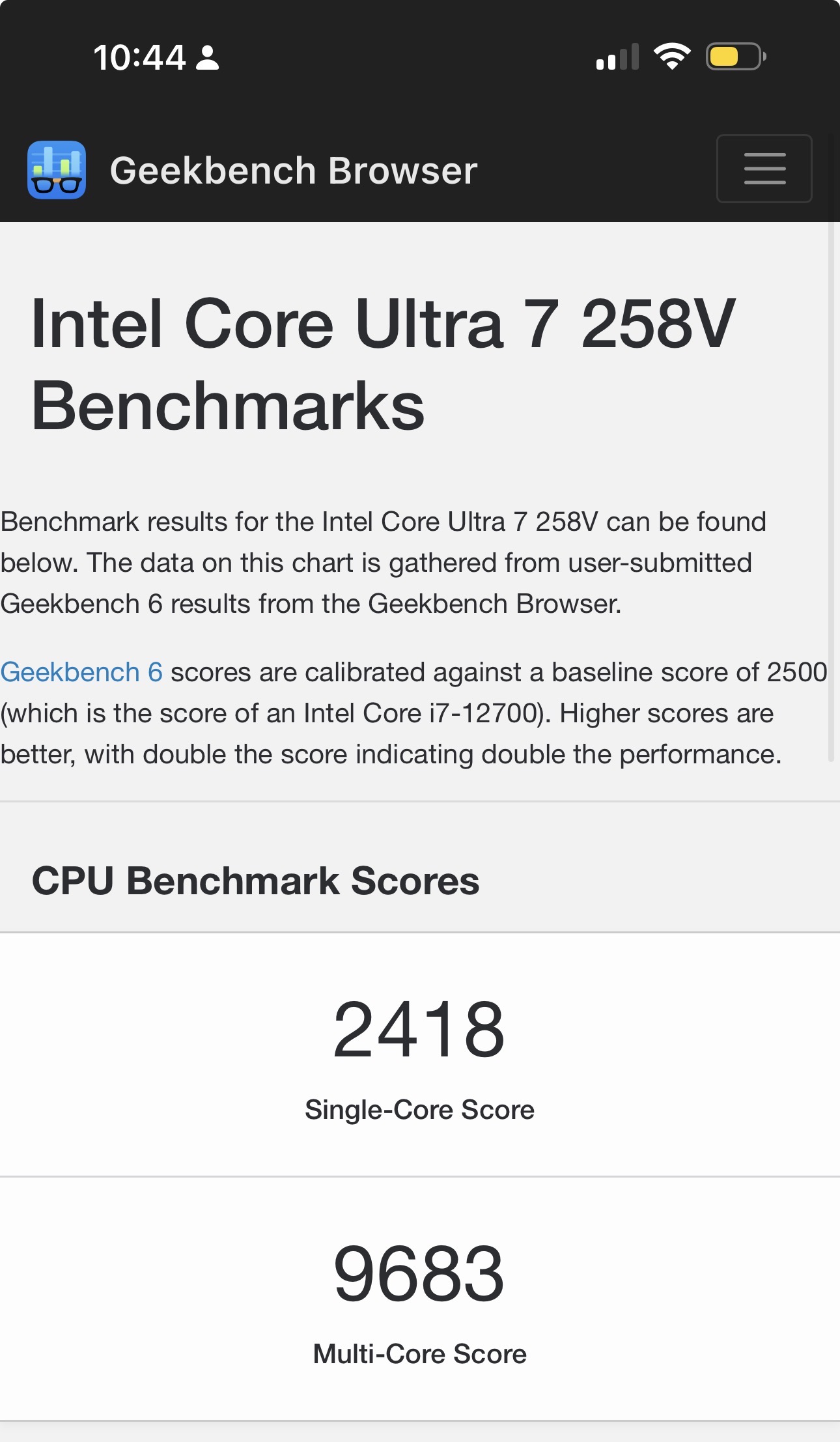

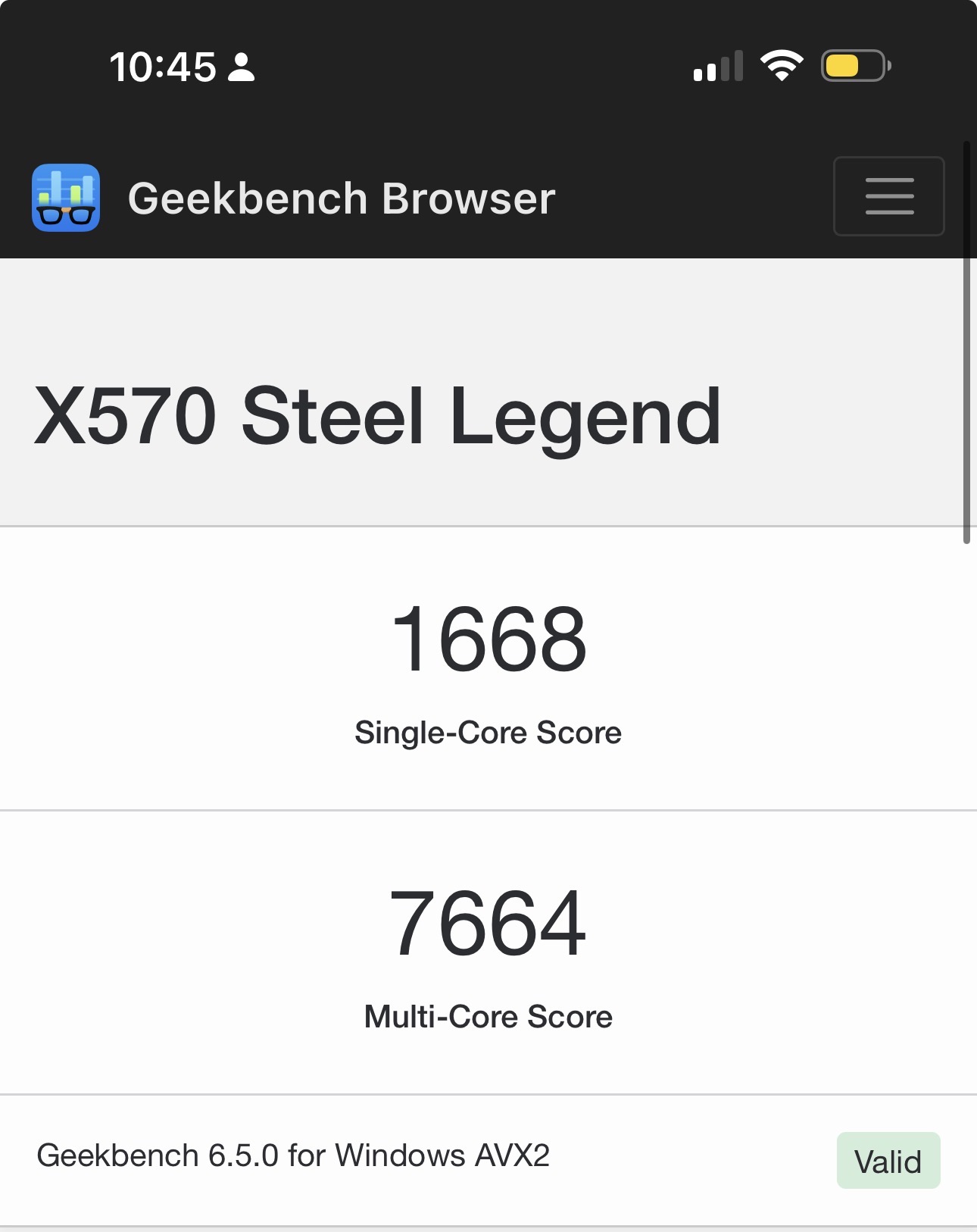

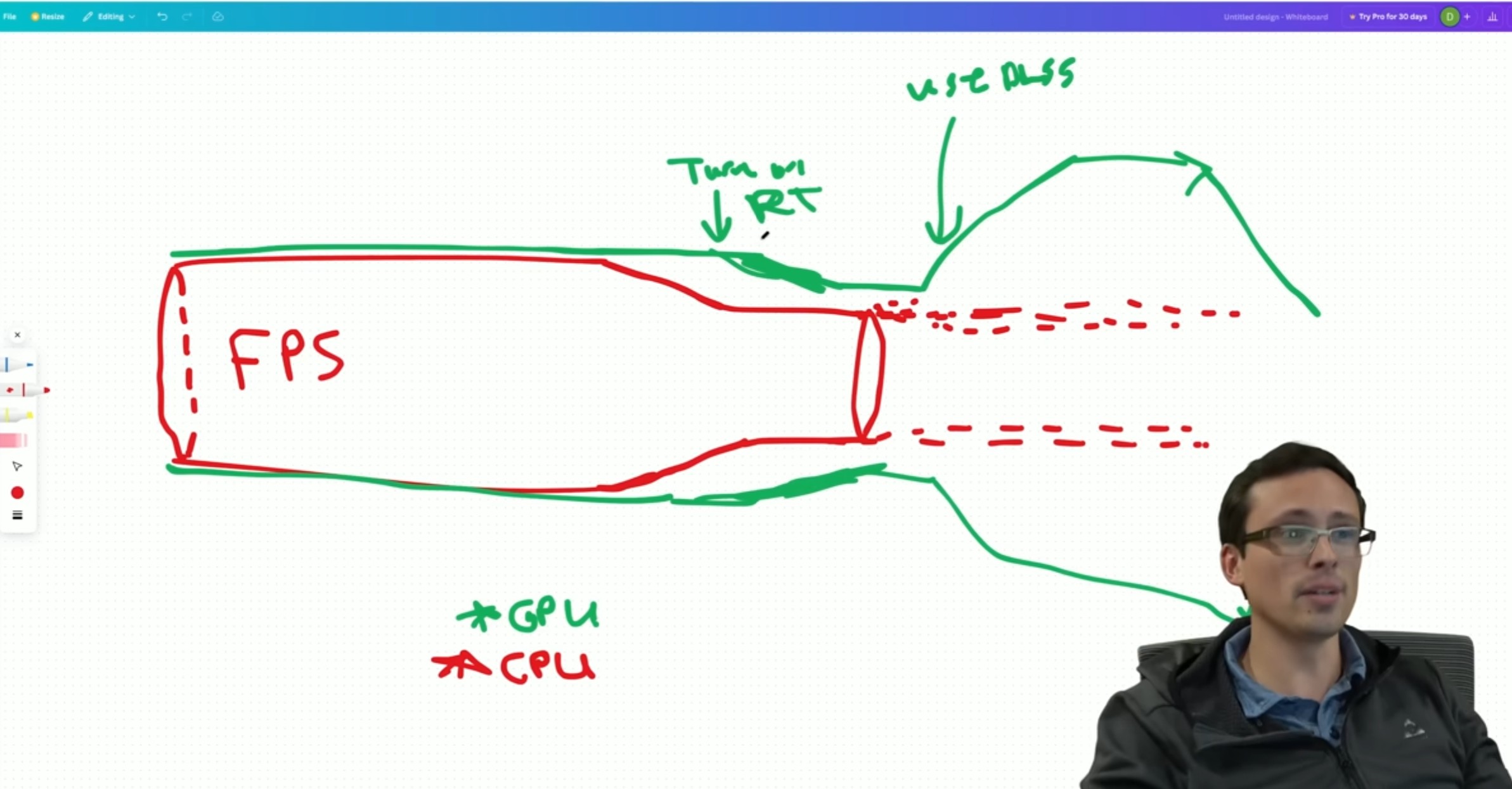

Mistake #2: Misunderstanding why low resolution exposes CPU limits

"At lower resolution the zbuffer ties the CPU and GPU simulation together"

No — resolution has almost nothing to do with CPU↔GPU coupling.

Lower resolution reduces:

- Pixel shading cost

- ROP bandwidth

- Z fill cost

What it does not reduce:

- Draw call count

- State changes

- Simulation complexity

- Animation, AI, physics

- Visibility determination

- Command submission overhead

So when you drop resolution and FPS doesn't go up, that means:

The CPU is the bottleneck — not because of z-buffering, but because the GPU finished early.

The GPU is waiting on the CPU, not the other way around.
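A rough way to picture this is a frame time that is the larger of the CPU cost and the GPU cost, where only the GPU cost scales with pixel count. The sketch below assumes exactly that; `estimate_fps` and every number in it are invented for illustration, not measurements from any game.

```python
def estimate_fps(cpu_ms, gpu_fixed_ms, gpu_ms_per_megapixel, width, height):
    """Toy model: in a pipelined renderer the slower side sets the frame time.

    cpu_ms is independent of resolution (simulation, draw calls, culling);
    the GPU cost has a fixed part plus a part proportional to pixel count.
    All parameters are hypothetical.
    """
    megapixels = width * height / 1e6
    gpu_ms = gpu_fixed_ms + gpu_ms_per_megapixel * megapixels
    frame_ms = max(cpu_ms, gpu_ms)
    return 1000.0 / frame_ms


# Made-up costs: 10 ms of CPU work per frame, 1 ms + 2 ms per megapixel on the GPU.
for width, height in [(3840, 2160), (2560, 1440), (1920, 1080), (1280, 720)]:
    fps = estimate_fps(10.0, 1.0, 2.0, width, height)
    print(f"{width}x{height}: {fps:.1f} FPS")
```

In this made-up case the frame rate rises from 4K to 1440p and then stays flat, because below that point the 10 ms of CPU work is the longer side of the `max`.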

Mistake #3: Incorrect console vs PC distinction

"Just because games on PC aren't CPU limited at low resolution, doesn't mean they would be on a console"

This is backwards.

Consoles are more likely to be CPU-limited at low resolution because:

- Fixed hardware → engines are tuned to push GPU harder

- CPU cores are weaker per-core than high-end PCs

- Draw call scaling is more sensitive

In fact:

- Most console engines deliberately target GPU saturation

- CPU headroom is precious and tightly budgeted

- Simulation does not magically escape CPU limits

If consoles were immune to CPU bottlenecks:

- CPU-driven 30 FPS caps wouldn't exist

- CPU-heavy scenes wouldn't tank performance

But they do.

Mistake #4: Overstating "primary core runs everything"

"Game logic runs by the primary CPU core"

This hasn't been broadly true for over a decade.

Modern engines:

- Run simulation, animation, culling, physics, audio, streaming across many threads

- Still have a main thread, yes — but it is often command orchestration, not "all logic"

And critically:

- The main thread is not blocked by z-buffer operations

- It is limited by its own workload and synchronization, not GPU depth tests

The correct model (short & precise)

- Z-buffering is GPU-internal and asynchronous

- Lowering resolution removes GPU pressure

- If FPS doesn't increase → CPU or submission bottleneck

- CPU bottlenecks come from:

  - Simulation

  - Draw call submission

  - State changes

  - Synchronization

  - Engine architecture

Not from depth testing.
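The same model can be turned into a quick check on benchmark numbers: run the same scene at two resolutions and see whether the frame rate moves. This is only a sketch. `classify_bottleneck` is a hypothetical helper, and the 5% tolerance is an arbitrary assumption to absorb run-to-run noise.

```python
def classify_bottleneck(fps_high_res, fps_low_res, tolerance=0.05):
    """Classify a scene from FPS measured at a higher and a lower resolution.

    If dropping resolution gains less than `tolerance` (as a fraction),
    the GPU was not the limit, so the CPU or submission side is.
    """
    if fps_high_res <= 0:
        raise ValueError("fps_high_res must be positive")
    gain = (fps_low_res - fps_high_res) / fps_high_res
    if gain <= tolerance:
        return "CPU or submission bound"
    return "GPU bound at the higher resolution"


print(classify_bottleneck(60.0, 62.0))
print(classify_bottleneck(60.0, 110.0))
```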

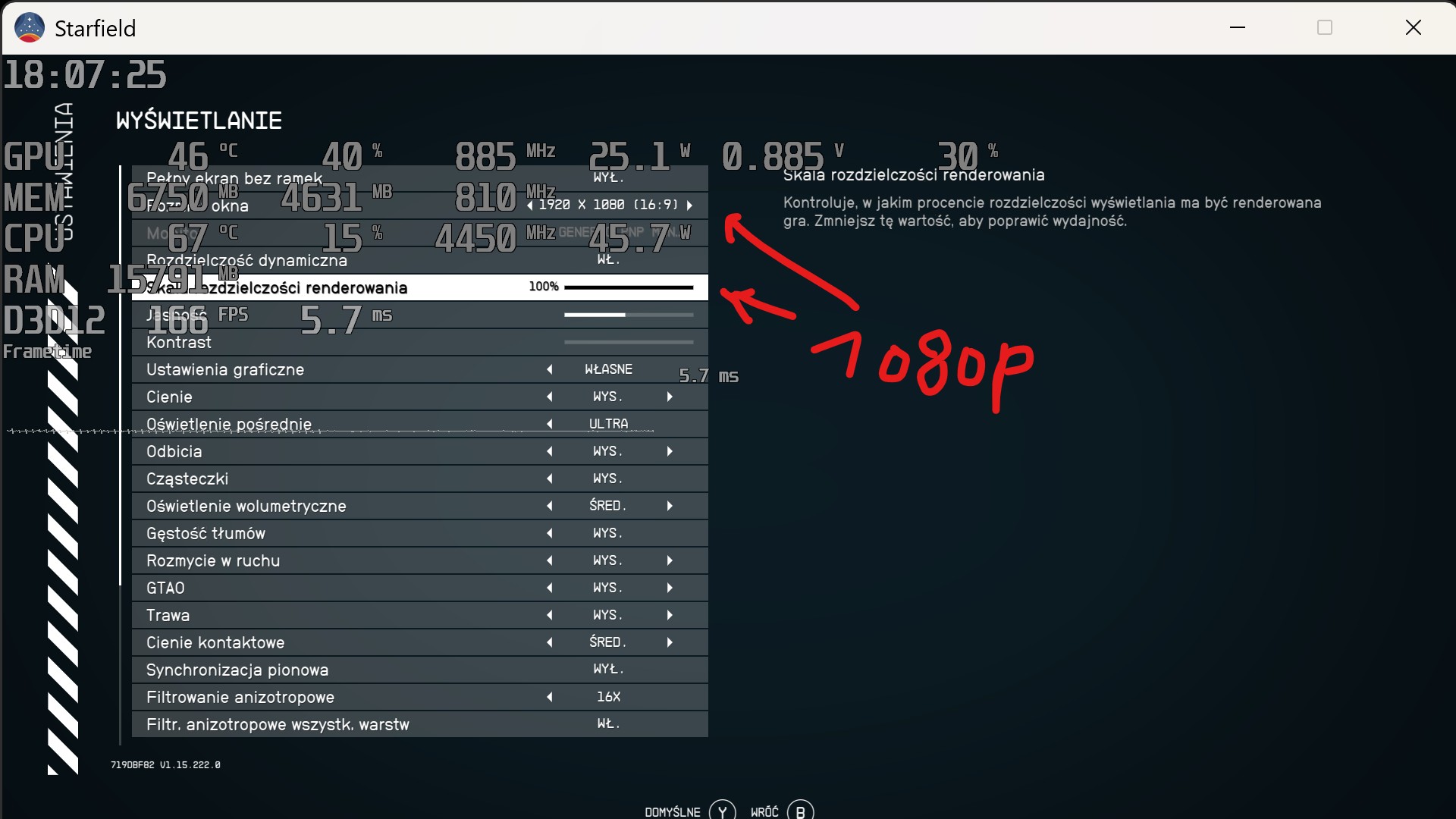

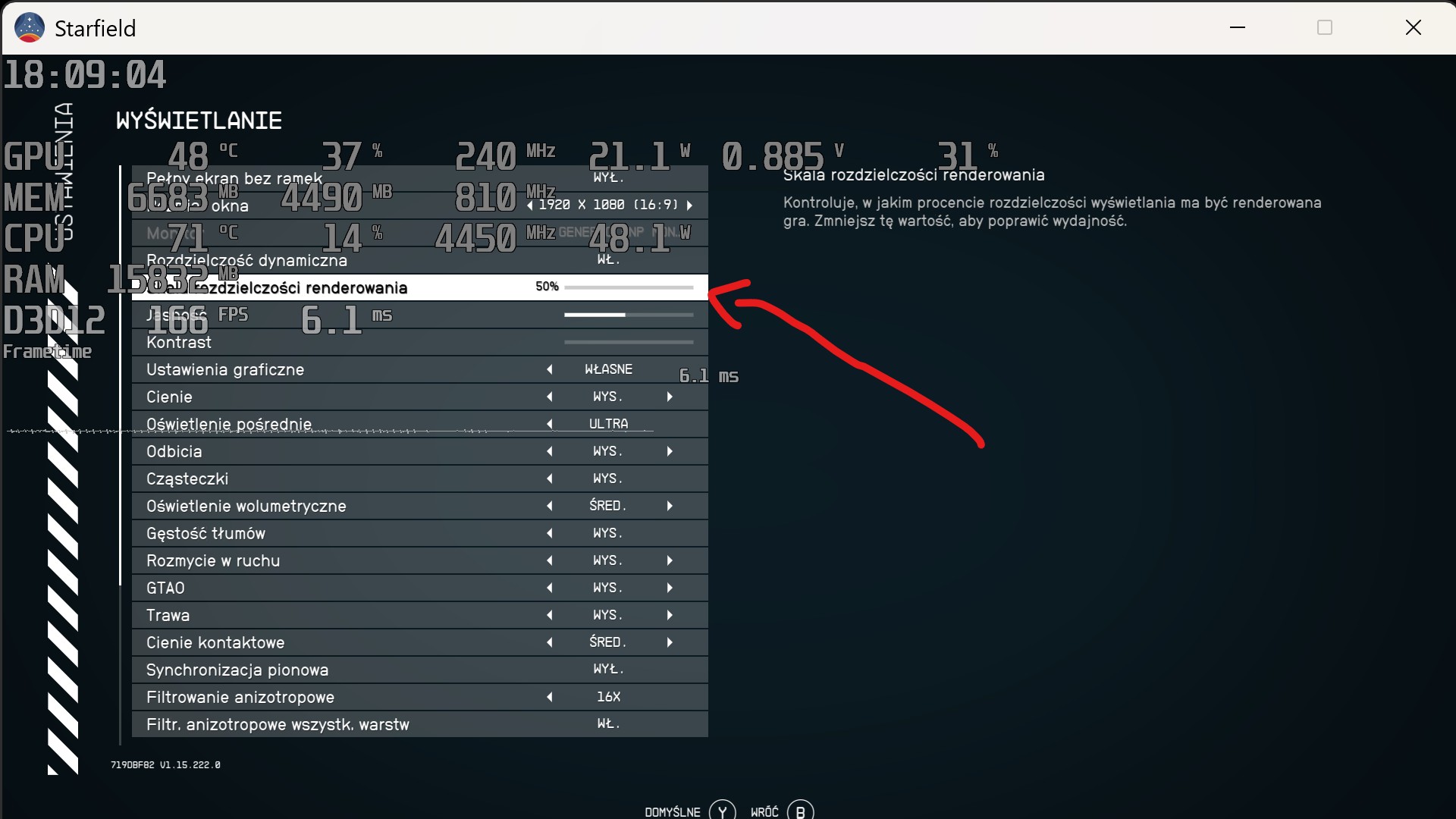

We could probably go back and forth via ChatGPT all day, but we can make this easy. I can provide proof that lowering resolution in modern game engines gives minimal to no performance gain when CPU limited. Can you provide counter-proof?

Starfield CPU Benchmarks & Bottlenecks: Intel vs. AMD Comparison (September 4, 2023; last updated 2024-02-08). We benchmark over a dozen CPUs in Starfield while exploring potential GPU and RAM bottlenecks. The Highlights: There is some core scaling between six...

gamersnexus.net

What advantages do low resolution tests have when it comes to game benchmarks or are there even alternatives?

www.capframex.com

Clear info about how the z-buffer works:

This section covers the steps for setting up the depth-stencil buffer, and depth-stencil state for the output-merger stage.

learn.microsoft.com

docs.vulkan.org

Depth In The Logical Rendering Pipeline; Where Does Early-Z Fit In?; When Does Early-Z Have To Be Disabled?; Discard/Alpha Test; Pixel Shader Depth Export; UAVs/Storage Textures/Storage Buffers; Forcing Early-Z; Forced Early-Z With UAVs And Depth Writes; Rasterizer Order Views/Fragment Shader Interlock...

therealmjp.github.io