winjer

Member

I'm talking about XeSS upscaling on iGPUs. I need to know how big the performance drop is.

If it's a lot, that's why AMD isn't doing ML upscaling: APUs are still weak.

But PC APUs are much weaker than a PS5.

And have much lower memory bandwidth.

Yeah, that's a good point and they won't pay AMD to do that.

Yes, Starfield uses FSR2.

But MS is not going to create an ML pass for FSR2.

That's why we need a comparison of XeSS vs FSR performance on iGPUs. That would give us the answer to why AMD is ignoring ML upscaling for now.

Isn't there a rumor of MS working on their own upscaler for DirectX?

Hmm. Guess XS doesn't have the hardware, or MS just plain doesn't care anymore.

Yes. It's called Automatic Super Resolution, but it seems it's only for Copilot+ PCs.

Automatic Super Resolution: The First OS-Integrated AI-Based Super Resolution for Gaming - DirectX Developer Blog

Auto SR automatically enhances select existing games allowing players to effortlessly enjoy stunning visuals and fast framerates on CoPilot+ PCs equipped with a Snapdragon® X processor. DirectSR focuses on next generation games and developers. Together, they create a comprehensive SR solution... (devblogs.microsoft.com)

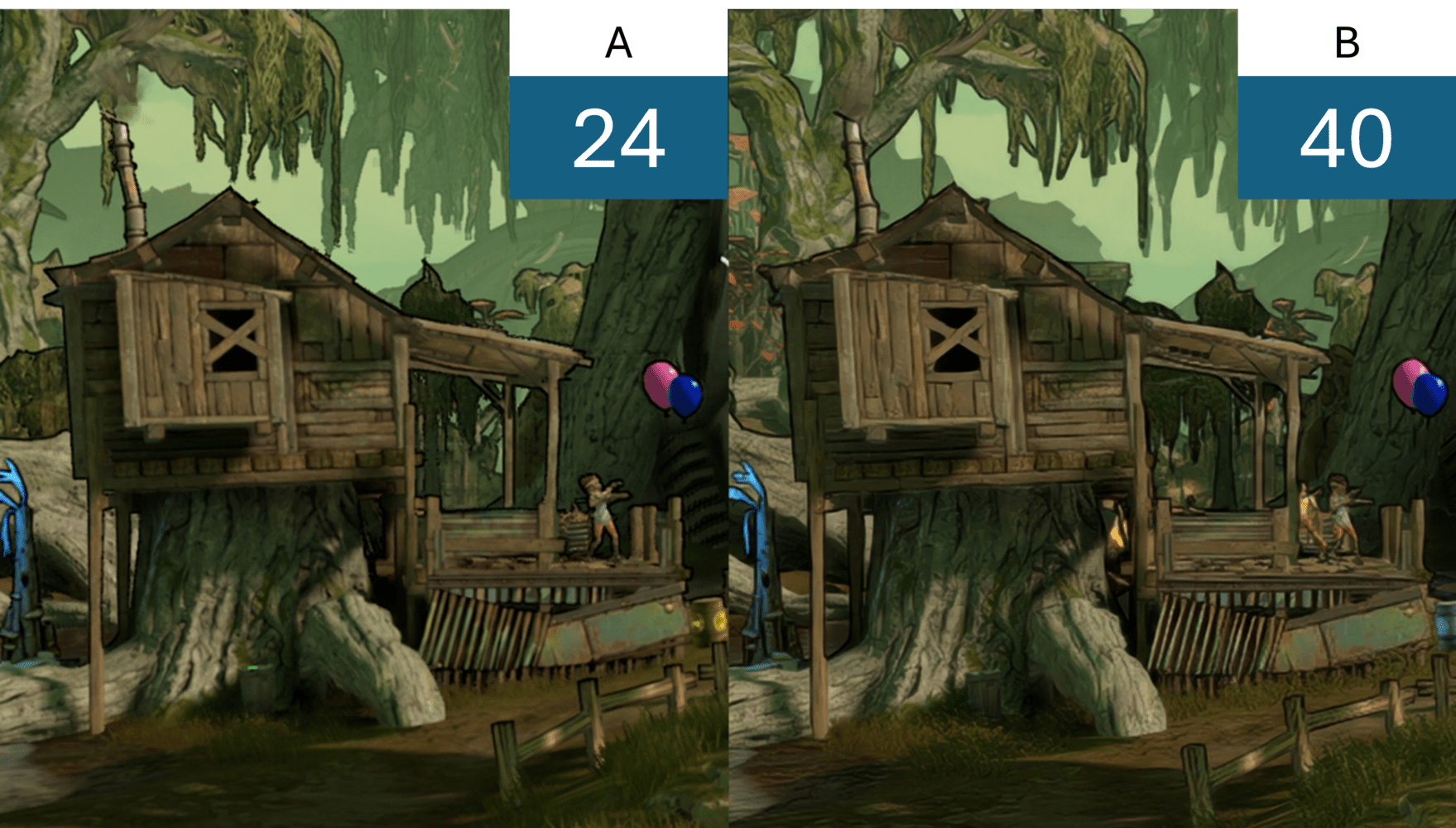

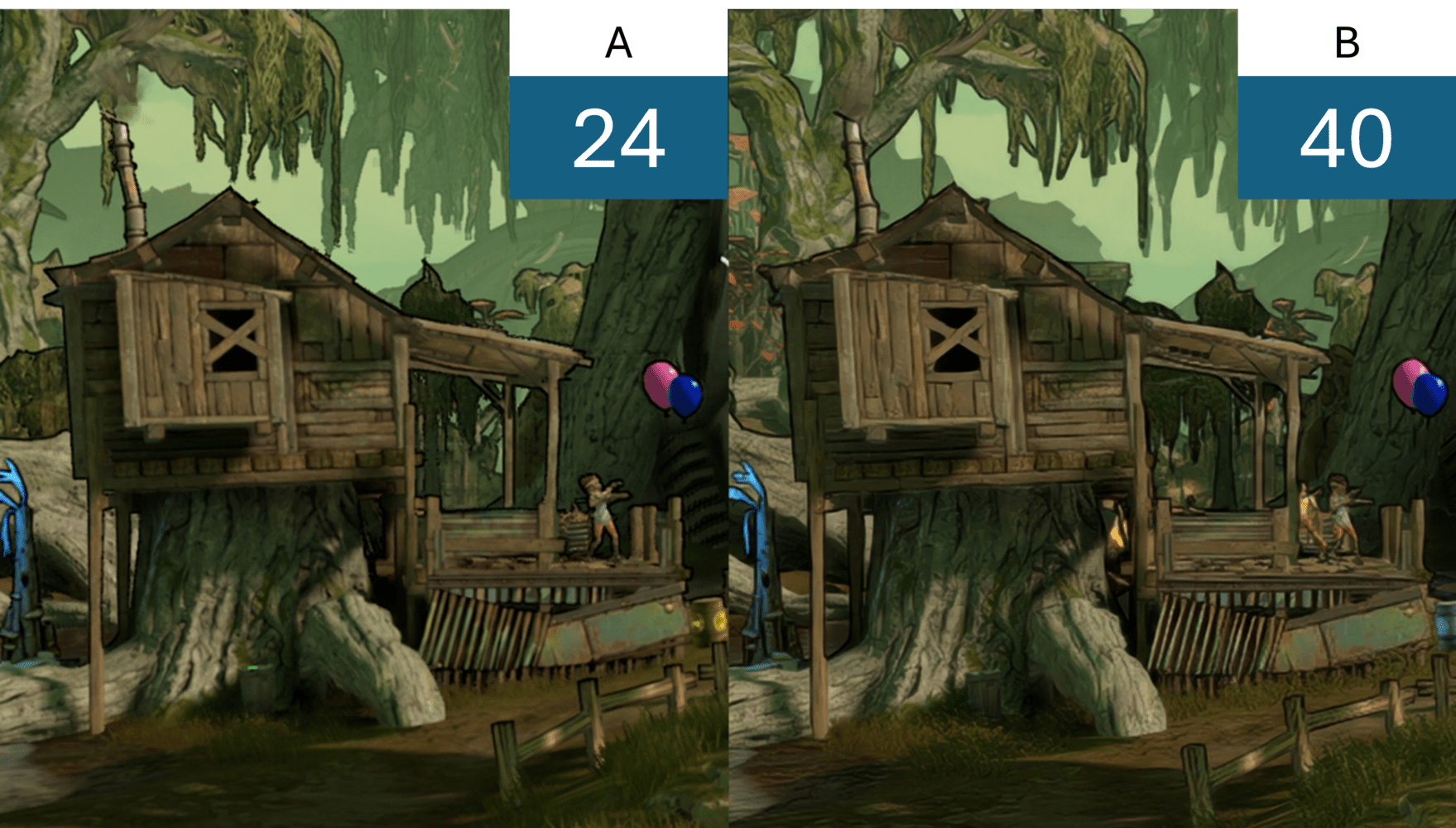

Found this; it seems XeSS kills performance on iGPUs.

Only the in-game benchmark, and there FPS is higher without any upscaling.

It would run even worse without XeSS.

I get what you are getting at, but the inference happens on the GPU prior to rendering, just not on immediate demand. So it's more like a decompression algorithm, much like Ragnarök's, in which the data is unpacked and prepared (inferenced) before the renderer actually references it in a render call. The 10.8 ms the neural network takes to upscale a 2048x2048 texture to 4096x4096 gives plenty of margin to get that into real time, whether by lowering the texture sizes or by fitting it into a 33 ms (30 fps) frame-time target.

I suspect they used ML to train more realistic animations. But at runtime those animations run like any other in the game.

So there isn't ML running in that game. Only during the process of making the animations.
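To put the 10.8 ms texture-upscale figure from this exchange against a frame budget, here is a minimal sketch of the arithmetic. The 10.8 ms comes from the post; the 25 ms of "other work" and the 4 ms of spare GPU time per frame are hypothetical values chosen only to illustrate the two cases (running the pass inside one frame vs. spreading it over spare time ahead of use).

```python
import math


def frame_budget_ms(target_fps: float) -> float:
    """Milliseconds available per frame at a given frame rate."""
    return 1000.0 / target_fps


def fits_in_one_frame(pass_ms: float, target_fps: float, rest_of_frame_ms: float = 0.0) -> bool:
    """True if an extra GPU pass plus the rest of the frame's work fits in one frame."""
    return pass_ms + rest_of_frame_ms <= frame_budget_ms(target_fps)


def frames_to_amortize(pass_ms: float, spare_ms_per_frame: float) -> int:
    """Frames needed if the pass only runs in spare GPU time left over each frame,
    i.e. it is prepared ahead of use rather than on immediate demand."""
    return math.ceil(pass_ms / spare_ms_per_frame)


if __name__ == "__main__":
    texture_upscale_ms = 10.8  # figure quoted in the thread (2048x2048 -> 4096x4096)
    print(f"30 fps budget: {frame_budget_ms(30):.1f} ms")
    print("fits alone at 30 fps:", fits_in_one_frame(texture_upscale_ms, 30))
    # Hypothetical: 25 ms of other rendering work in the same frame.
    print("fits with 25 ms of other work:", fits_in_one_frame(texture_upscale_ms, 30, 25.0))
    # Hypothetical: only 4 ms of idle GPU time per frame to spare.
    print("frames if 4 ms spare per frame:", frames_to_amortize(texture_upscale_ms, 4.0))
```

The point of the last function is the "not on immediate demand" argument: if the result is only needed later, the cost can be spread over several frames instead of competing with the current one.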

That's why we need someone who has an iGPU and can test FSR vs XeSS. It's really strange that nobody has tested them.

But he didn't test CP2077 without XeSS.
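For anyone who does run that iGPU test, one way to turn raw FPS numbers into "how much does the upscaler cost" is to compare frame times, since FPS differences are misleading on their own. This is a sketch under stated assumptions: it treats the upscaling pass as the only difference between the "internal resolution, no upscaler" run and the upscaled run, and the numbers in the demo are placeholders, not measurements.

```python
def fps_to_ms(fps: float) -> float:
    """Convert an average frame rate to an average frame time in milliseconds."""
    return 1000.0 / fps


def upscaler_cost_ms(fps_at_internal_res: float, fps_upscaled: float) -> float:
    """Approximate cost of the upscaling pass itself: extra frame time compared with
    rendering at the same internal resolution and not upscaling at all."""
    return fps_to_ms(fps_upscaled) - fps_to_ms(fps_at_internal_res)


def net_gain_ms(fps_native: float, fps_upscaled: float) -> float:
    """Frame time saved versus native resolution. Negative means upscaling is slower."""
    return fps_to_ms(fps_native) - fps_to_ms(fps_upscaled)


if __name__ == "__main__":
    # Hypothetical placeholder numbers, NOT measurements: replace with real benchmark runs.
    runs = {"native": 25.0, "internal res, no upscaler": 40.0,
            "upscaler A": 20.0, "upscaler B": 33.0}
    for name in ("upscaler A", "upscaler B"):
        cost = upscaler_cost_ms(runs["internal res, no upscaler"], runs[name])
        gain = net_gain_ms(runs["native"], runs[name])
        print(f"{name}: pass cost {cost:.1f} ms, net gain vs native {gain:+.1f} ms")
```

A negative net gain is exactly the "FPS is higher without any upscaling" case: the pass costs more than rendering fewer pixels saves. For a fair FSR vs XeSS comparison, both should be run at the same internal resolution.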

You know, it's kind of comical that today we'd consider 10 ms of latency before accessing a texture in the pipeline 'acceptable', when just two decades ago people were still losing their shit over the idea that the PS2 had to move a texture into eDRAM to use it, and those latencies were measured in microseconds. Usually not even tens of them.

I don't think so.

This rumor sounds like cope and bullshit. AMD never shied away from giving exclusive features to their PC cards. They dropped ML upscaling entirely, leaving it wide open for their competitors, because little Sony wouldn't have benefited?

Not buying it.

In practice, no. Not one game showed that RDNA2 features on XSX improved anything.

The PS5 is a great console. But using RDNA1 features instead of RDNA2 ones did limit the PS5.

One good example is the lack of DP4A support on the PS5. This means it's impossible to run an ML upscaler on the console.

This limits the quality of upscaling the console can do.

Fortunately, the Pro does have hardware that can accelerate ML tasks, and we can get upscalers that do use ML, such as PSSR.
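Since DP4A keeps coming up, here is a minimal reference sketch of what a signed DP4A operation computes: a four-way dot product of 8-bit integers packed into 32-bit words, added to a 32-bit accumulator, in one instruction (CUDA exposes this as the `__dp4a` intrinsic, for example). The helper names here are made up for illustration, and 32-bit overflow is not modelled.

```python
def pack_s8(values: list[int]) -> int:
    """Pack four signed 8-bit values into one 32-bit word, lane 0 in the lowest byte."""
    if len(values) != 4 or any(not -128 <= v <= 127 for v in values):
        raise ValueError("need exactly four values in [-128, 127]")
    word = 0
    for lane, v in enumerate(values):
        word |= (v & 0xFF) << (8 * lane)
    return word


def unpack_s8(word: int) -> list[int]:
    """Split a 32-bit word back into four signed 8-bit lanes (sign-extended)."""
    lanes = []
    for lane in range(4):
        byte = (word >> (8 * lane)) & 0xFF
        lanes.append(byte - 256 if byte >= 128 else byte)
    return lanes


def dp4a(a: int, b: int, acc: int) -> int:
    """What one signed DP4A instruction computes: acc + a0*b0 + a1*b1 + a2*b2 + a3*b3.
    Hardware keeps acc in a 32-bit register; overflow wrapping is not modelled here."""
    return acc + sum(x * y for x, y in zip(unpack_s8(a), unpack_s8(b)))


if __name__ == "__main__":
    a = pack_s8([1, 2, 3, 4])
    b = pack_s8([5, 6, 7, 8])
    print(dp4a(a, b, 10))
```

Without the instruction, a shader has to do the unpacking (shifts, masks, sign extension) and four separate multiply-adds itself, which is roughly the performance gap being argued about in this thread. Whether that gap makes an ML upscaler "impossible" or just slower is the open question.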

There is a thread here talking about how Sony saved AMD. Sony's consoles sell more than AMD's own GPUs.

Better to hold off on ML upscaling until your biggest customer has a console that can do ML upscaling.

Yeah, PS5 doesn't support DP4A, sadly.

Well, in RDNA comparisons the scheduling might be the bigger bottleneck, which was the big x4 optimisation, and from reading the Ragnarök paper, it reads like the PS5 hardware solution used all the same features as DP4A.

That's how he does his tweets.

He asks a question and this thread has 5 replies. AMD sabotaging themselves because of Sony makes no sense. They wouldn't have made more money either way.

AMD simply didn't have a solution in place or even in the works. Furthermore, the utility of a DP4A pass for resolution upscaling is questionable at best.

No. Doom (and the other games) actually looked higher resolution on PS5 thanks to sharper-looking textures! Those games actually showed RDNA2 VRS was a half-cooked feature and it was better not to use it. Do you know of many recent multiplatform games using RDNA2 VRS on XSX? There are none, as developers know it will dramatically worsen IQ.

There were a few games that made better use of those features, such as VRS in Doom and a few others.

But the Series S/X is not the leading platform, so no one cares to do anything special with the extra features it has.

But if these features were on the PS5 as well, then all devs would use them.

I dunno. You'd think Microsoft would have made something with it if it were so easy. It would have helped their most powerful console on Earth narrative tremendously. We saw how important it was for them in those emails to have an Xbox that could outmuscle the PS5.

And then we have the lack of support for DP4A. If the PS5 had this feature we might already have PSSR.

If it were PSSR, it would have been a bastardized version of it that's hardly better than FSR2.

I'm not even sure how good XeSS is on a DP4A pass, because that's only used on Intel iGPUs. On non-Intel GPUs, it doesn't use it and is thus much slower, to the point of being unviable a lot of the time.

Have you seen Microsoft this generation?

They couldn't make anything good if their lives depended on it.

And PSSR could be as good as XeSS on DP4A. Not as good as DLSS, but significantly better than FSR2.

Yes, I know, which is what I just said. The thing is, non-Intel GPUs run XeSS on the shaders, not DP4A, so we don't actually know how well XeSS does on the DP4A pass outside of integrated Intel GPUs.

XeSS has 3 data paths. One is for XMX, which only runs on Intel GPUs. It uses the XMX units and has the best image quality.

The second runs on DP4A. This means it can run on most modern GPUs, such as Nvidia Pascal or newer, or AMD's RDNA2 or newer. Its image quality is good, but not as good as the XMX path.

The third one runs on regular shaders and can run even on old GPUs. It has the worst image quality and the biggest performance loss.
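The three-path description above amounts to a simple fallback order. This is a minimal sketch of that order only; `GpuCaps`, `pick_xess_path` and the capability flags are hypothetical names for illustration, not the XeSS SDK's API, and the thread itself disputes which path non-Intel GPUs actually take (the Arc / Intel iGPU / Shader Model 6.4 breakdown quoted further down would correspond to treating non-Intel GPUs as lacking the DP4A path).

```python
from dataclasses import dataclass
from enum import Enum


class XessPath(Enum):
    XMX = "XMX matrix units (best image quality)"
    DP4A = "DP4A int8 dot products (good, below XMX)"
    SHADER = "generic shader fallback (worst quality and performance)"


@dataclass
class GpuCaps:
    """Hypothetical capability flags for illustration; not an XeSS SDK or driver API."""
    name: str
    is_intel: bool
    has_xmx: bool
    has_dp4a: bool


def pick_xess_path(gpu: GpuCaps) -> XessPath:
    """Pick the best available path, in the fallback order described in the thread."""
    if gpu.is_intel and gpu.has_xmx:
        return XessPath.XMX
    if gpu.has_dp4a:
        return XessPath.DP4A
    return XessPath.SHADER


if __name__ == "__main__":
    for gpu in (
        GpuCaps("Intel Arc dGPU", is_intel=True, has_xmx=True, has_dp4a=True),
        GpuCaps("Nvidia Pascal", is_intel=False, has_xmx=False, has_dp4a=True),
        GpuCaps("older GPU without DP4A", is_intel=False, has_xmx=False, has_dp4a=False),
    ):
        print(f"{gpu.name}: {pick_xess_path(gpu).value}")
```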

Has it changed? Last I checked it ran on the shaders and only Intel integrated GPUs used the DP4A path. At least, that's what Intel told Alex, unless he misunderstood.

Non-Intel modern GPUs run XeSS on DP4A.

I just hope it's black.

Love it. Screw the haters.

Why would they make it black when they know most people want black and they can sell plates?

IMO you should go read the Ragnarök presentation. DP4A would be less flexible than the solution they optimised, and the same is true of VRS. VRS is used; it's just done via async compute, where they can extract better quality and better scheduling/performance/efficiency.

VRS in Doom on the Series X looked better than the VRS on the PS5.

Meanwhile on PC, we had a few games that had a very good implementation of VRS.

If the PS5 had hardware VRS, chances are most games would use it.

And then we have the lack of support for DP4A. If the PS5 had this feature we might already have PSSR.

HZD remake, hell yes, be real.

If I were the head of marketing over at PlayStation:

- I would reveal the PS5 Pro via an in-depth tech interview with Mark Cerny on Wired in the first week of September. That same day, a PlayStation Showcase for the following week would be announced.

- At the PlayStation Showcase, I would make it clear that all in-game and in-engine footage shown has been captured from PS5 Pro systems.

- Then in October, I would hold a special State of Play episode focusing on PS5 Pro enhancements for the existing first-party catalog and select major third-party games, such as the Final Fantasy titles.

- Release on November 15th, alongside the much-rumored Horizon: Zero Dawn remake - with Assassin's Creed, Call of Duty, Silent Hill and Star Wars all having Pro enhancements built into them already.

I want silver, a really good silver that has a sheen, maybe even silver AND black, something high-tech looking.

Can you point to the source?

RedRiders linked it back on page 67, third message down.

The only thing I could find is this PDF presentation, which was used at GDC. I'm guessing there's a full video presentation out there locked behind the GDC paywall.

But that's exactly what he says?

I realize GAF hates the guy (and especially the typical Sonyposters), but at least try to accurately represent what he has said on any given subject. Literally disproven in 2 minutes by watching the first video he ever made on the topic.

It was clarified later. I think he misunderstood it in the video.

Alex is a dumb ass.

DP4A is for all GPUs that support it.

The path that is exclusive to Intel is the XMX.

I have a feeling it will be white and share the same external disc drive as the PS5.

But that's exactly what he says?

Arc GPUs: Run on XMX units on an Int-8 pass.

Intel iGPUs: Run on DP4A pass

Non intel GPUs: Shader Model 6.4 pass

Did I miss something?

The ultimate test for RDNA2 VRS was done by Activision on one of their CoD games. They compared their own custom software VRS implementation against RDNA2 VRS and found their solution had better IQ while being more flexible, with the same or better performance. With RDNA2 VRS you get blocky textures. Textures are basically destroyed and there is no way to avoid it (while you can avoid it with smart software VRS). The lead developer wrote a paper with benchmarks and visual comparisons. Chances are most multiplatform developers have read that paper, and this is why no multiplatform game has used it recently. Case closed.
Do you mean this?

That's not true about texturing, because the shader fragment count is proportional to the number of sampler calls (texture samples) that blend to produce the final pixel.

Yes, I know about the CoD implementation of VRS. But most studios can't develop such a feature.

Hardware VRS, as Nvidia and AMD implemented it, can provide very good results, such as in Gears 5 and Wolfenstein.

VRS does not affect textures. It just renders selected portions of an image at a lower shading rate.

The question is whether the implementation is good. VRS will always result in a degradation in image quality. Even in the case of what CoD and Ragnarök implemented.

This is because it will always shade portions of an area at lower rates. The point of contention is choosing the proper rate, and choosing the areas where the player won't notice the lower rate.
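A minimal sketch of why the shading rate has to be chosen per region: with a 2x2 coarse rate, one shading result is broadcast to four pixels, so a flat region loses nothing while a high-detail region does. This is a toy CPU model, not a hardware VRS API; in particular, using the block's top-left sample as the single shading invocation is a simplifying assumption.

```python
Image = list[list[float]]


def shade_coarse(img: Image, rate: int = 2) -> Image:
    """Simulate rate x rate coarse shading: one shading result per block, broadcast
    to every pixel in it. Assumption: the block's top-left sample stands in for
    the single shading invocation."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for by in range(0, h, rate):
        for bx in range(0, w, rate):
            value = img[by][bx]
            for y in range(by, min(by + rate, h)):
                for x in range(bx, min(bx + rate, w)):
                    out[y][x] = value
    return out


def mean_abs_error(a: Image, b: Image) -> float:
    """Average per-pixel difference between two images of the same size."""
    total = sum(abs(p - q) for row_a, row_b in zip(a, b) for p, q in zip(row_a, row_b))
    return total / (len(a) * len(a[0]))


if __name__ == "__main__":
    flat = [[0.5] * 4 for _ in range(4)]
    detailed = [[float((x + y) % 2) for x in range(4)] for y in range(4)]  # checkerboard
    print("flat region error:", mean_abs_error(flat, shade_coarse(flat)))
    print("detailed region error:", mean_abs_error(detailed, shade_coarse(detailed)))
```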

That's not true about texturing, because the shader fragment count is proportional to the number of sampler calls (texture samples) that blend to produce the final pixel.

In addition to that, if the VRS rate across the render resolves to at least 4 fragments per pixel, then, other than possible undersampling of the indirect lighting, image quality should pretty much match AFAIK.
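One reading of the "4 fragments per pixel" argument, sketched as arithmetic: at native resolution a 2x2 VRS rate gives only a quarter of a shading invocation per displayed pixel, so reaching 4 or more requires a supersampled internal resolution. The function names are made up, and "fragments" is interpreted here as shading invocations per output pixel, with a fixed number of texture lookups per invocation; both are assumptions about what the post means.

```python
def shading_invocations_per_pixel(render_scale: float, rate_x: int, rate_y: int) -> float:
    """Shading invocations per displayed pixel.

    render_scale: internal resolution per axis relative to the output (2.0 = 4x the pixels).
    rate_x, rate_y: VRS coarse-pixel size, e.g. 2 and 2 for a 2x2 rate.
    """
    fragments_per_pixel = render_scale * render_scale
    return fragments_per_pixel / (rate_x * rate_y)


def texture_lookups_per_pixel(render_scale: float, rate_x: int, rate_y: int,
                              lookups_per_invocation: int = 1) -> float:
    """Assumes each shading invocation does a fixed number of lookups per texture."""
    return shading_invocations_per_pixel(render_scale, rate_x, rate_y) * lookups_per_invocation


if __name__ == "__main__":
    for scale, rx, ry in [(1.0, 1, 1), (1.0, 2, 2), (2.0, 2, 2), (4.0, 2, 2)]:
        n = shading_invocations_per_pixel(scale, rx, ry)
        print(f"scale {scale}x per axis, VRS {rx}x{ry}: {n} invocations per pixel")
```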

If you are doing 4 or more fragments per pixel with VRS, you would also be doing 4 or more texture lookups for that pixel, so you will be capturing virtually all the same direct rendering detail that can be displayed at the limit of minification.

But the texture remains the same; it's the number of fragments per location that changes.

The texture won't show up at full resolution, simply because the shading doesn't have enough detail.

The quality will only remain similar if the pixels in the group are similar enough. Otherwise, it will be immediately noticeable.

That is why picking the regions to apply VRS is so important.
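As a rough illustration of "picking the regions", here is a minimal content-adaptive heuristic: shade a tile at full rate when its luminance varies, and at a coarse 2x2 rate when it is nearly flat. This is not what CoD or Ragnarök actually do, and it is not a hardware VRS API; the tile size and variance threshold are hypothetical values chosen for the example.

```python
from statistics import pvariance


def choose_tile_rates(luma: list[list[float]], tile: int = 2,
                      threshold: float = 0.01) -> list[list[int]]:
    """Per-tile shading rate: 1 (full rate) where luminance varies inside the tile,
    2 (meaning 2x2 coarse shading) where the tile is nearly flat.
    tile and threshold are illustrative values, not taken from any shipped game."""
    h, w = len(luma), len(luma[0])
    rates = []
    for ty in range(0, h, tile):
        row = []
        for tx in range(0, w, tile):
            block = [luma[y][x]
                     for y in range(ty, min(ty + tile, h))
                     for x in range(tx, min(tx + tile, w))]
            row.append(1 if pvariance(block) > threshold else 2)
        rates.append(row)
    return rates


if __name__ == "__main__":
    # Left half: a flat, sky-like area. Right half: high-frequency detail.
    luma = [[0.5 if x < 2 else float((x + y) % 2) for x in range(4)] for y in range(4)]
    for row in choose_tile_rates(luma):
        print(row)
```

The flat tiles get the coarse rate and the detailed tiles keep full rate, which is the trade-off described above: the savings only come for free where neighbouring pixels would have shaded to nearly the same value anyway.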
